
Axoflow Platform

Axoflow is a security data curation pipeline that helps you get high-quality, reduced security data automatically, without coding. Better-quality data allows your organization and SOC team to detect and respond to threats faster, use AI, and reduce compliance breaches and costs.

Collect security data from anywhere

Axoflow allows you to collect data from any source, including:

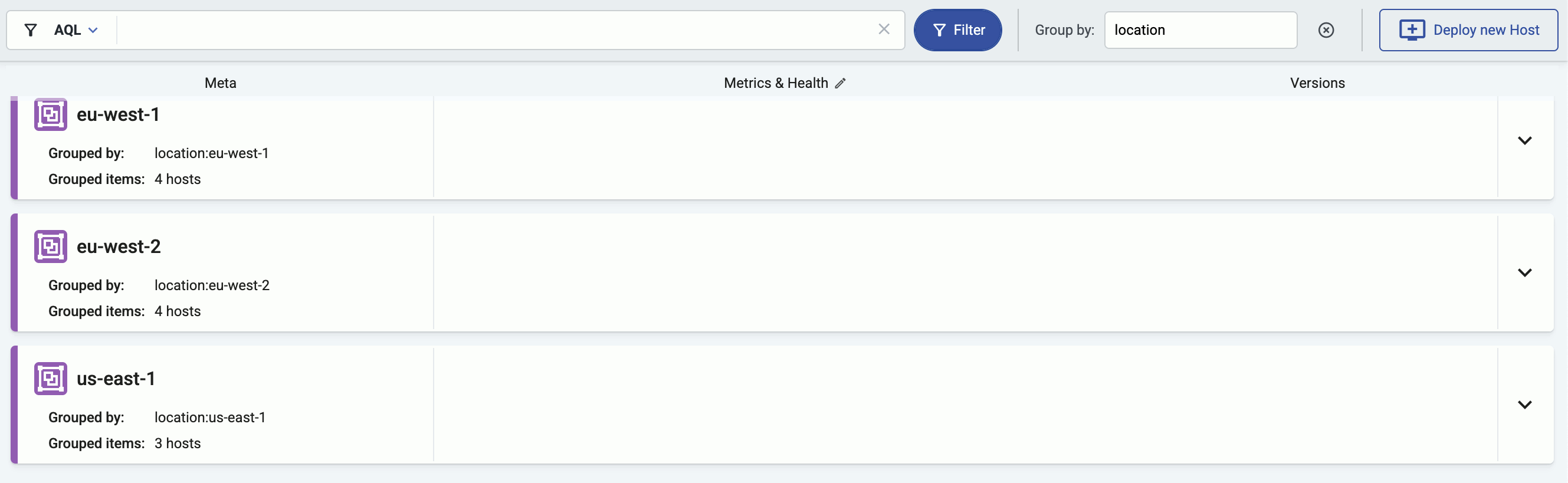

- cloud services

- cloud-native sources like OpenTelemetry or Kubernetes

- traditional sources like Microsoft Windows endpoints (WEC) and Linux servers (syslog)

- networking appliances like firewalls

- applications.

Shift left

Axoflow deals with tasks like data classification, parsing, curation, reduction, and routing. Except for routing, these tasks are commonly performed in the SIEM, often manually. Axoflow automates all of these processing steps and shifts them left into the data pipeline, so:

- it’s automatically applied to all your security data,

- all destinations benefit from it (multi-SIEM and storage+SIEM scenarios),

- data format is optimized for the specific destination (for example, Splunk, Azure Sentinel, Google Pub/Sub),

- unneeded and redundant data can be dropped before having to pay for it, reducing data volume and storage costs.

Curation

Axoflow can automatically classify, curate, enrich, and optimize data right at the edge – limiting excess network overhead and costs as well as downstream manual data-engineering in the analytics tools. Axoflow has over 100 zero-maintenance connectors that automatically identify and classify the incoming data, and apply automatic, device- and source-specific curation steps.

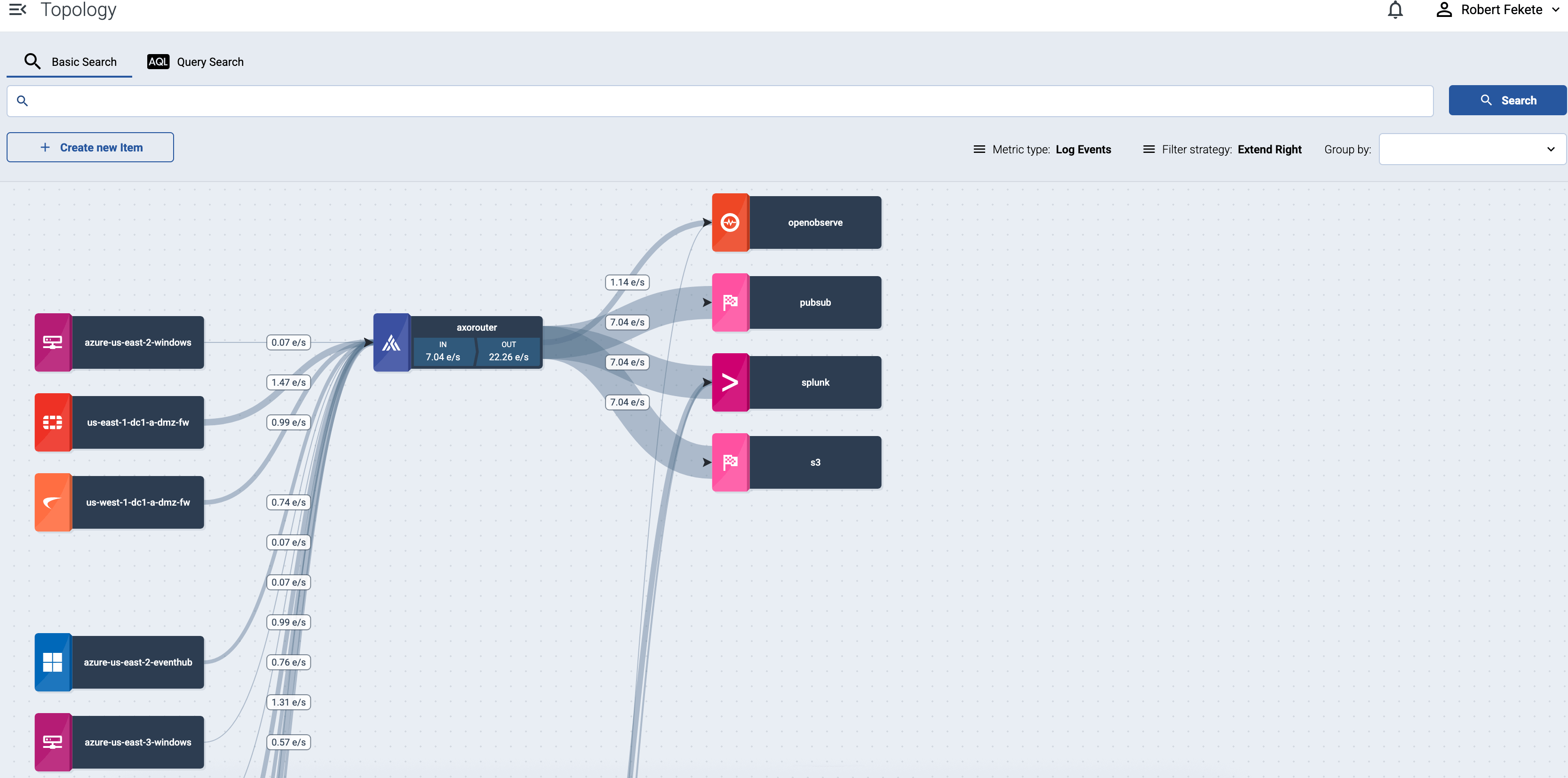

Policy-based routing

Axoflow can configure your aggregator and edge devices to intelligently route data based on the high-level flows you configure. Flows can use labels and other properties of the transferred data as well as your inventory to automatically map your business and compliance policies into configuration files, without coding.

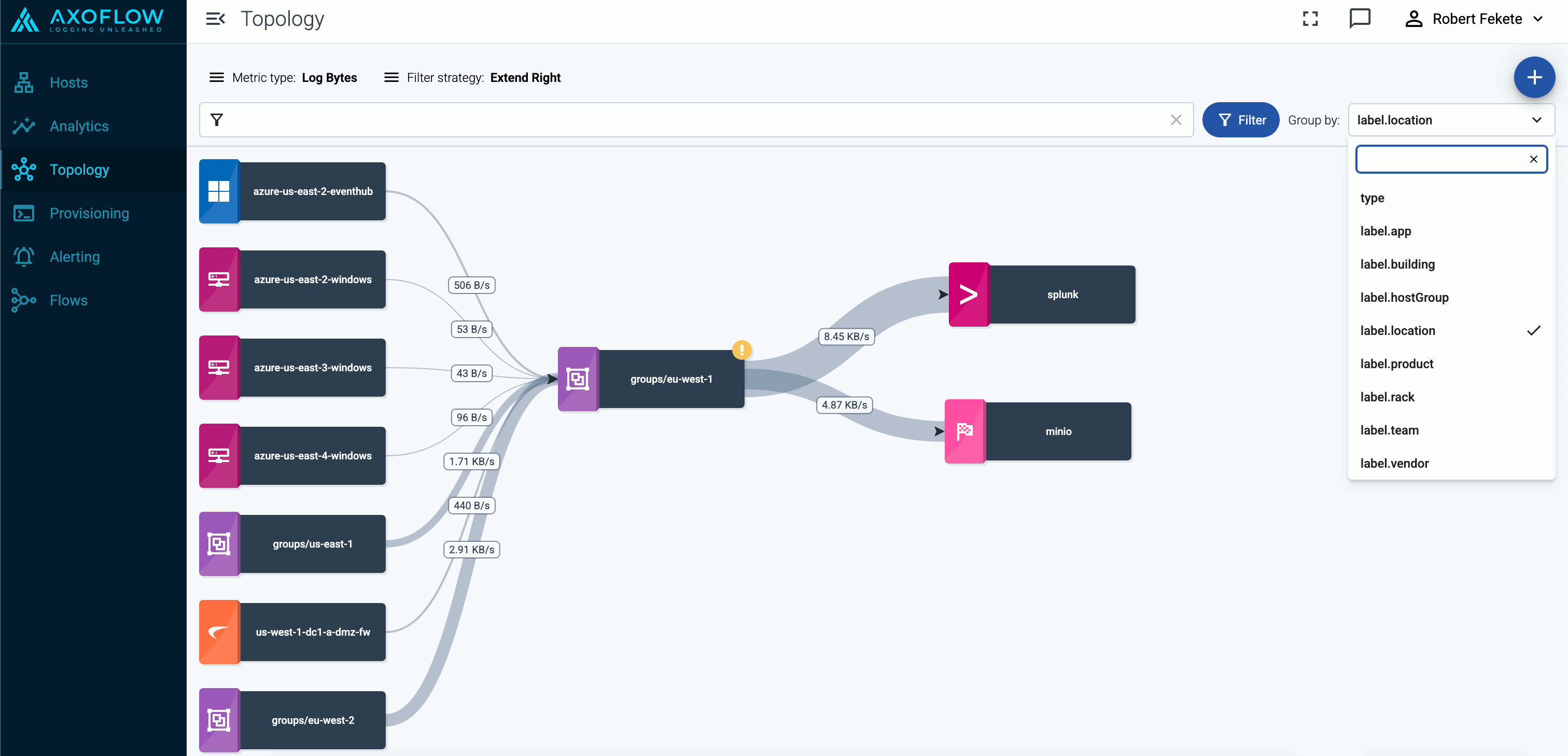

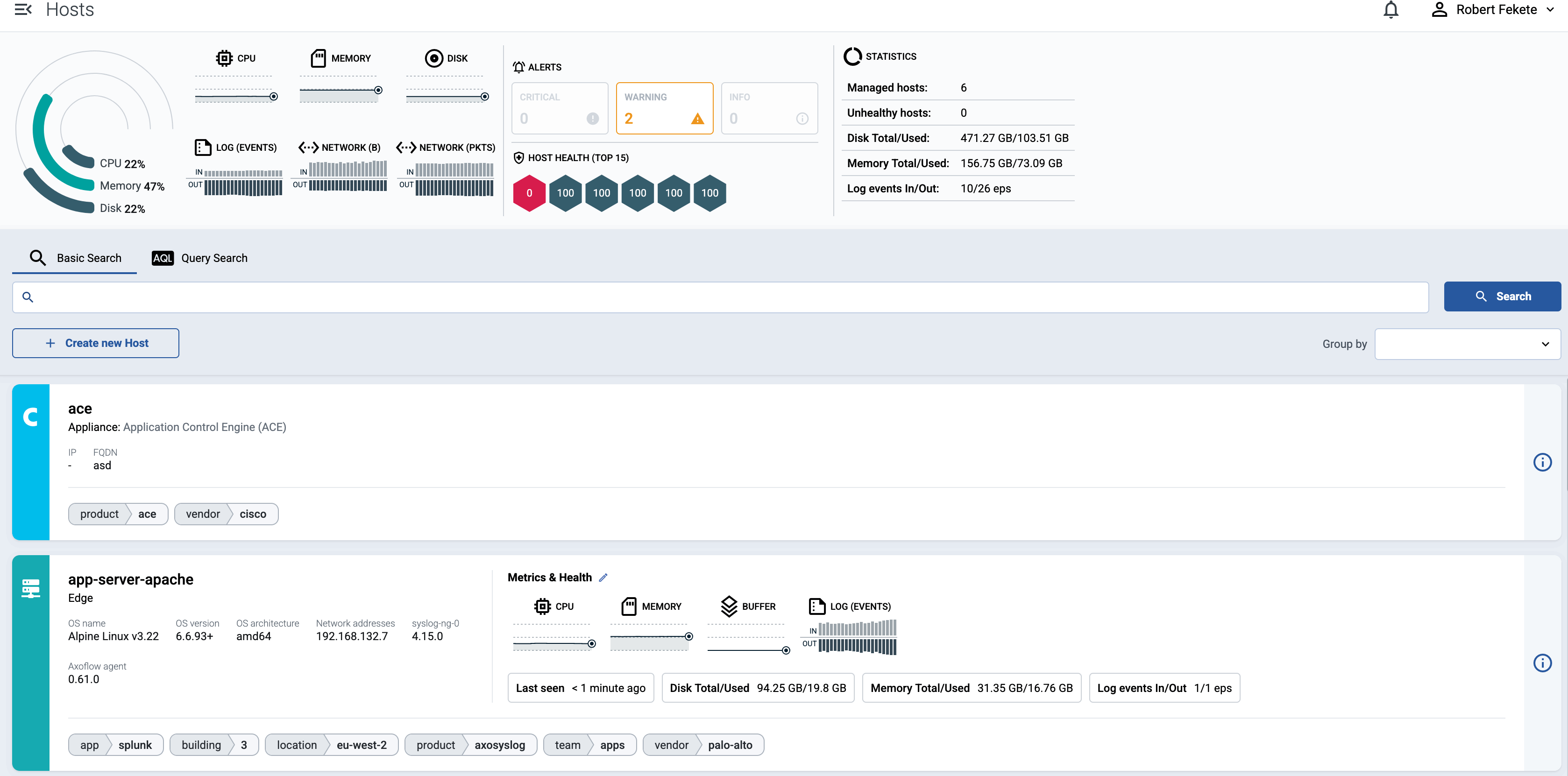

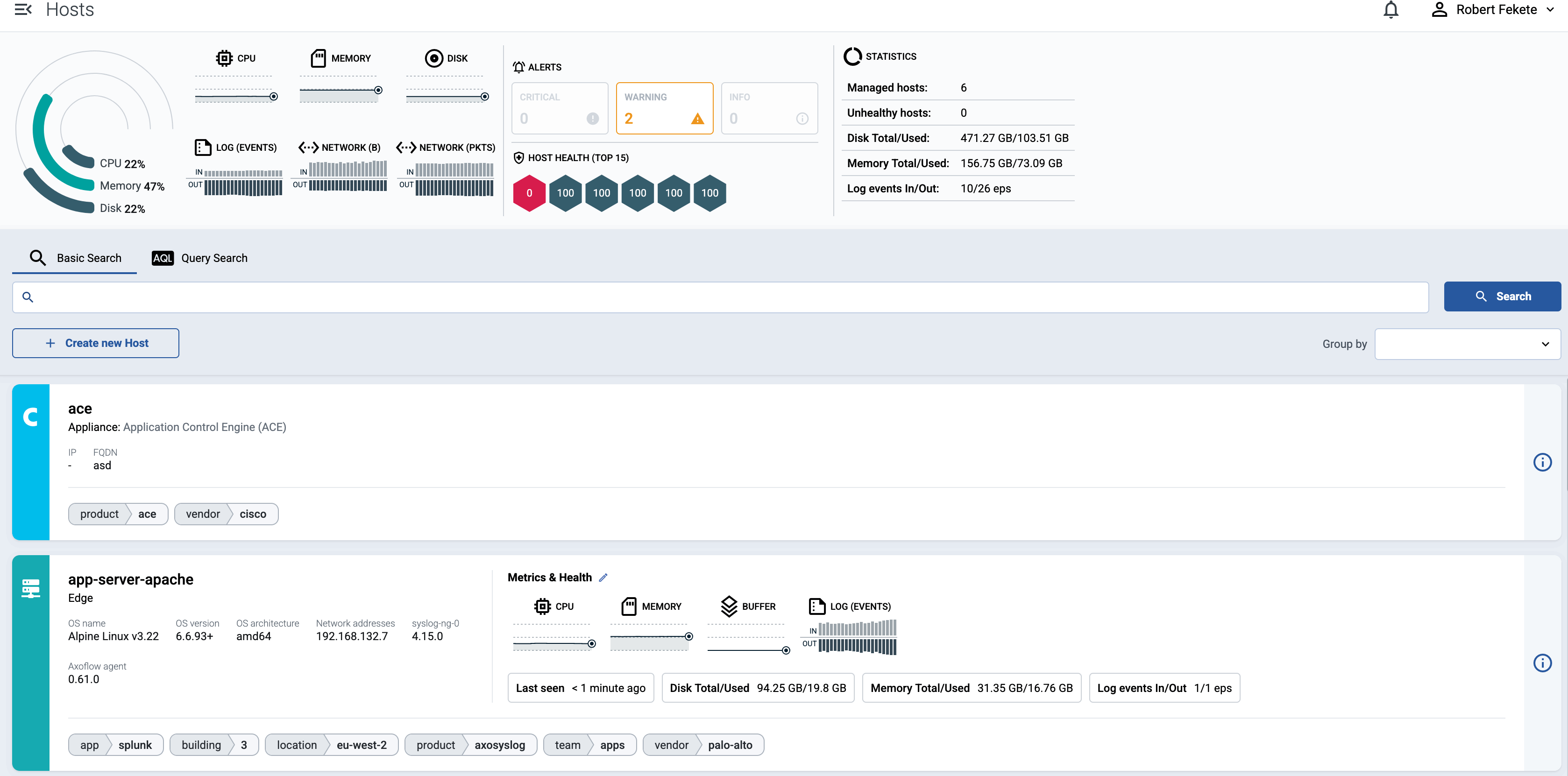

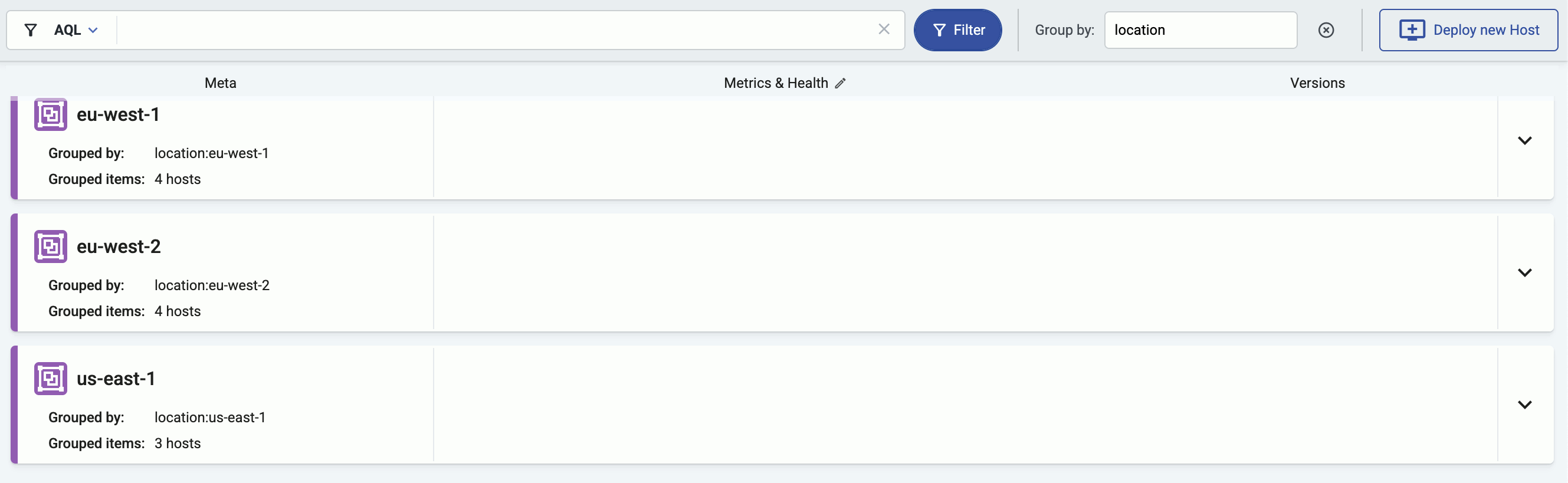

Management

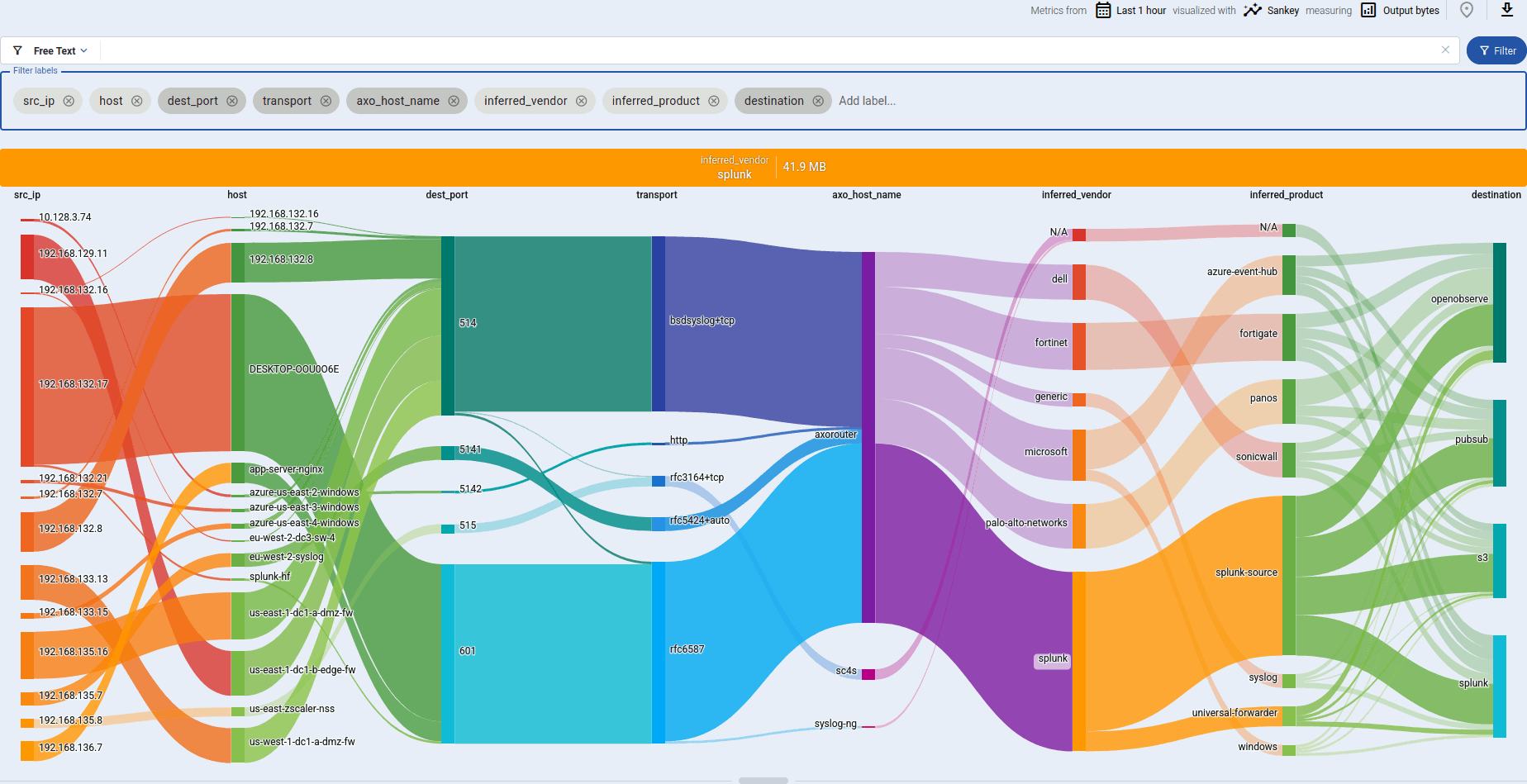

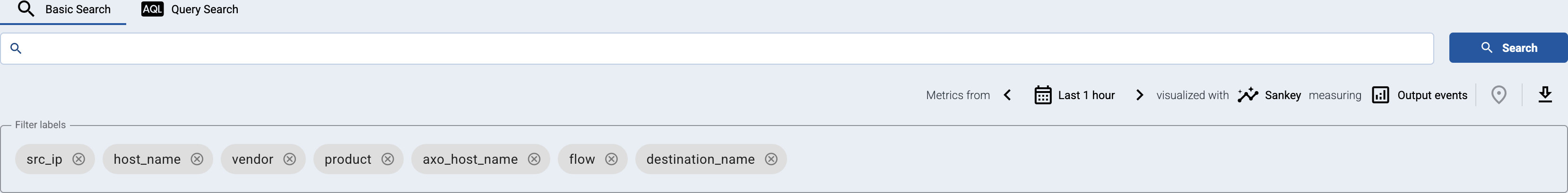

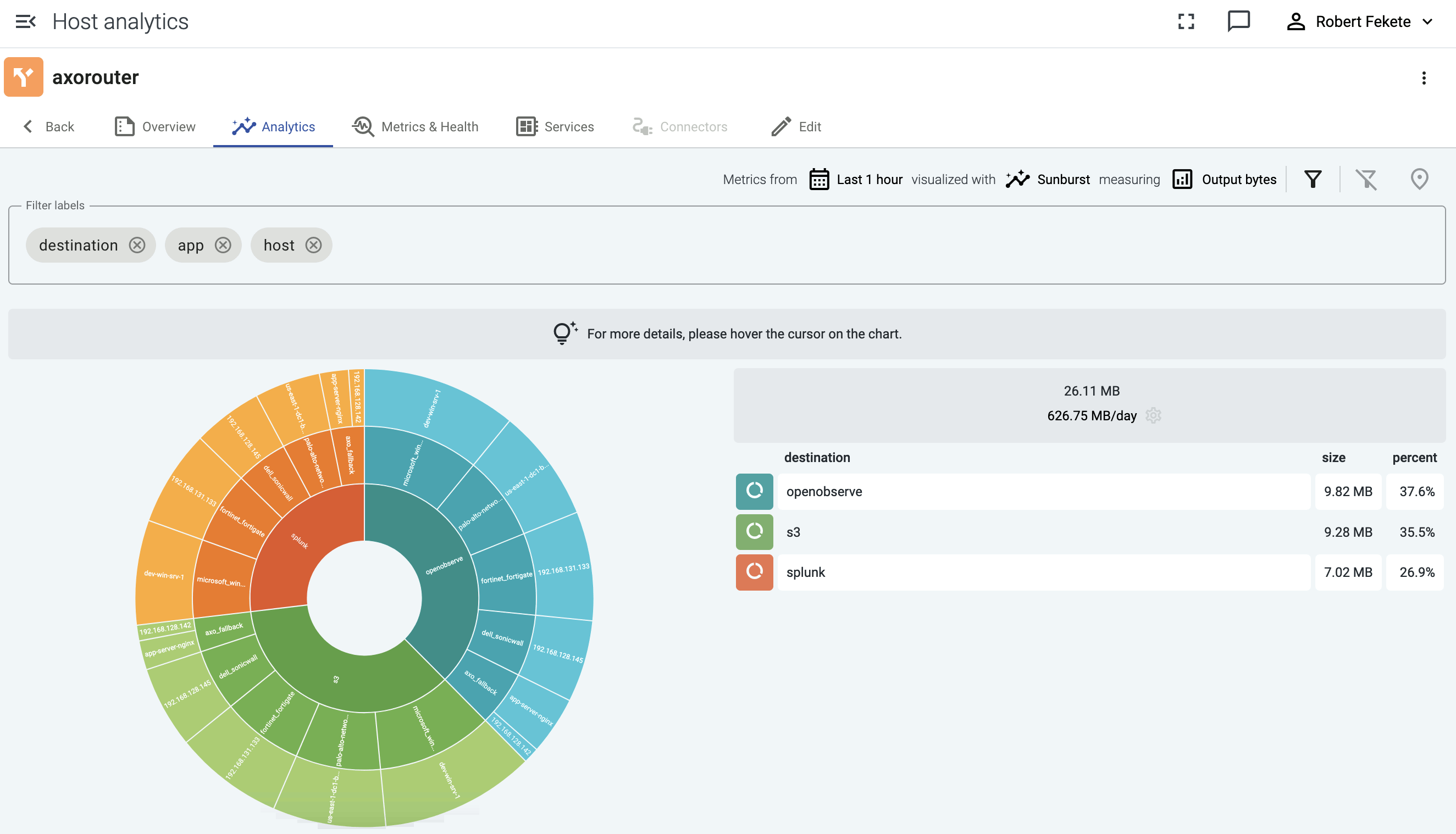

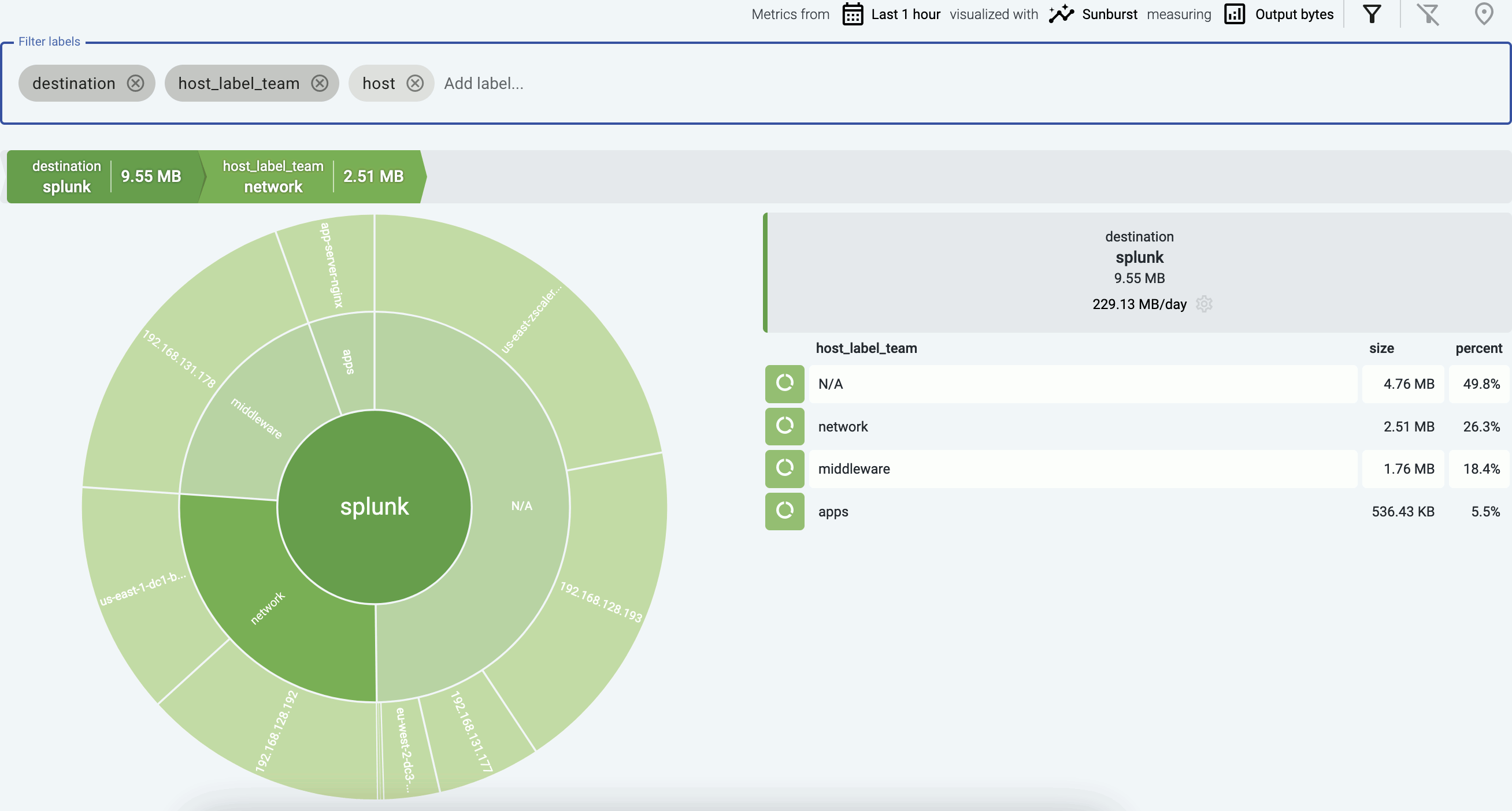

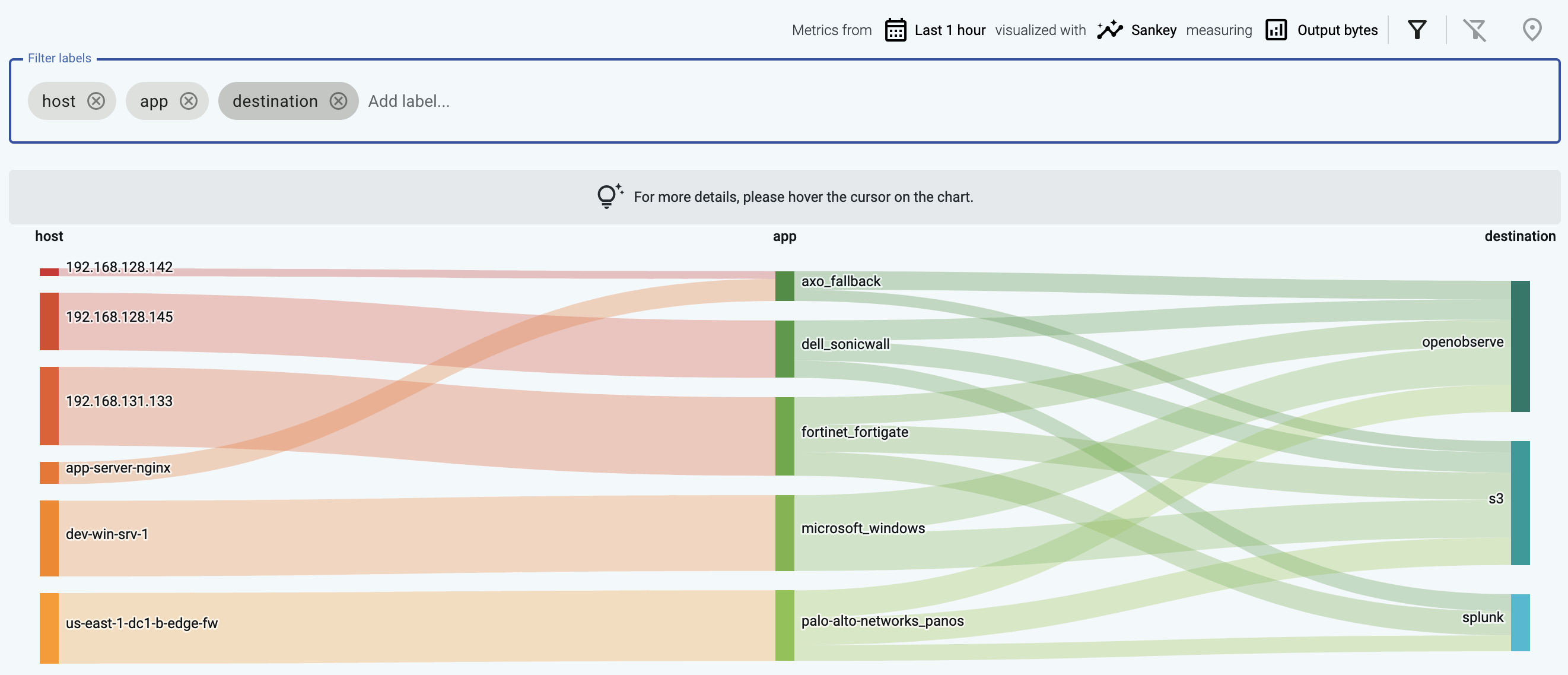

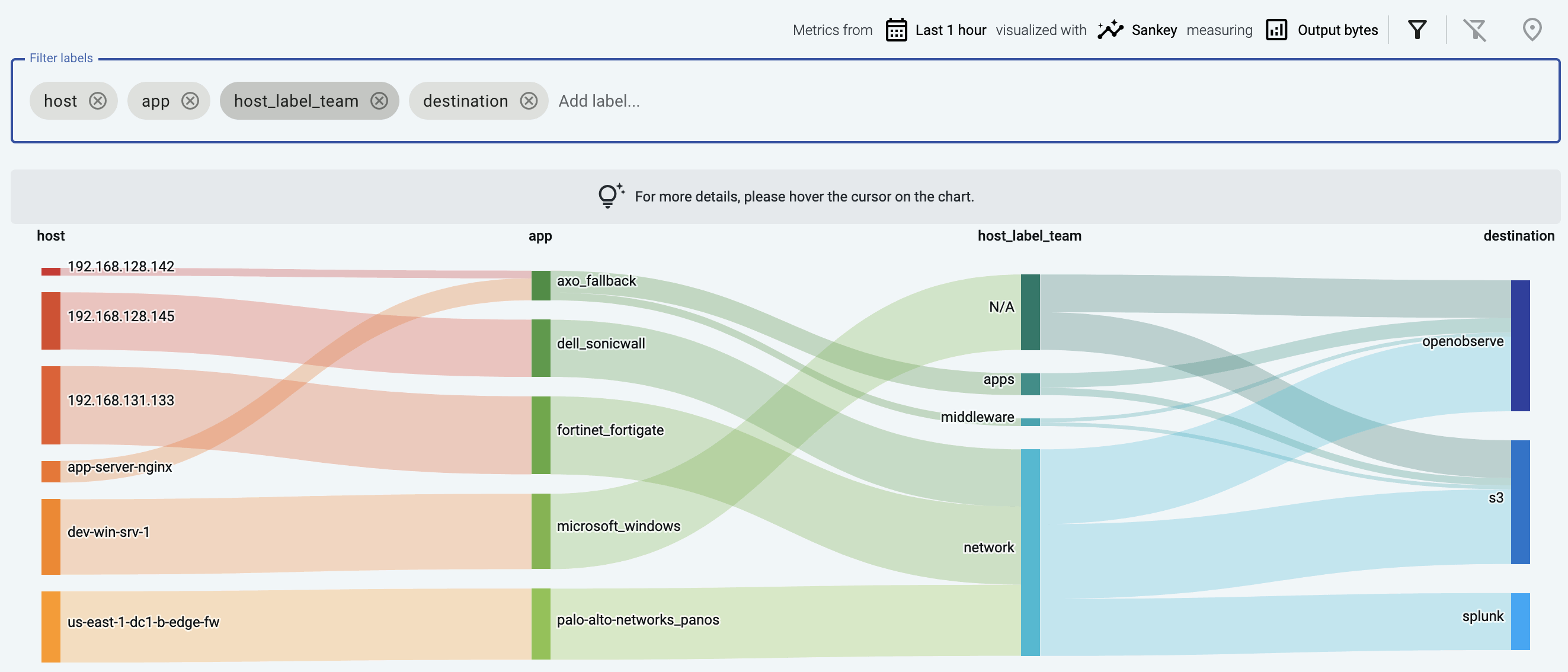

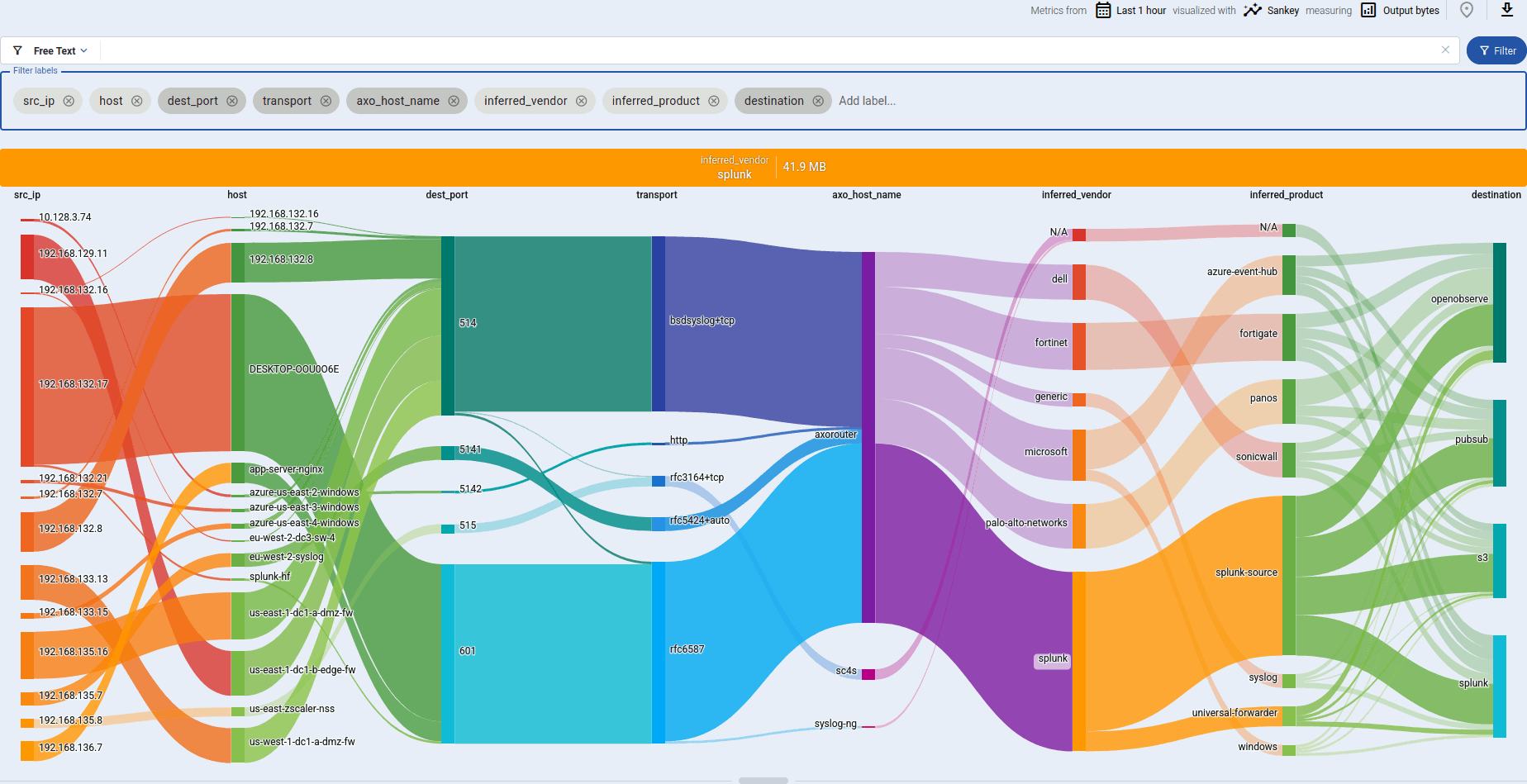

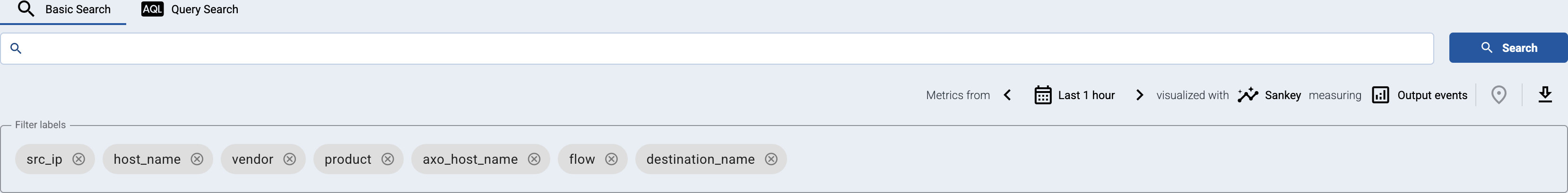

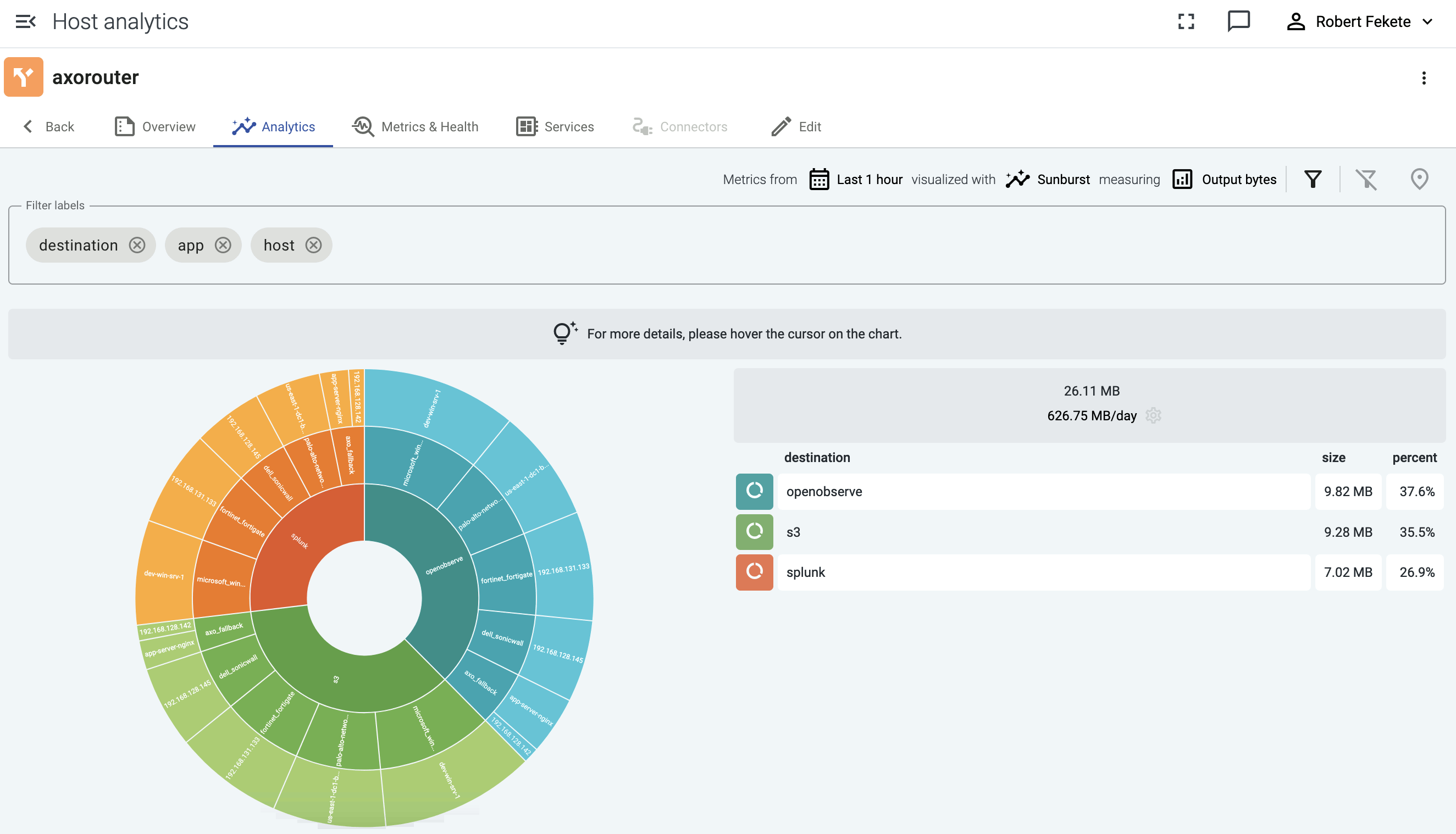

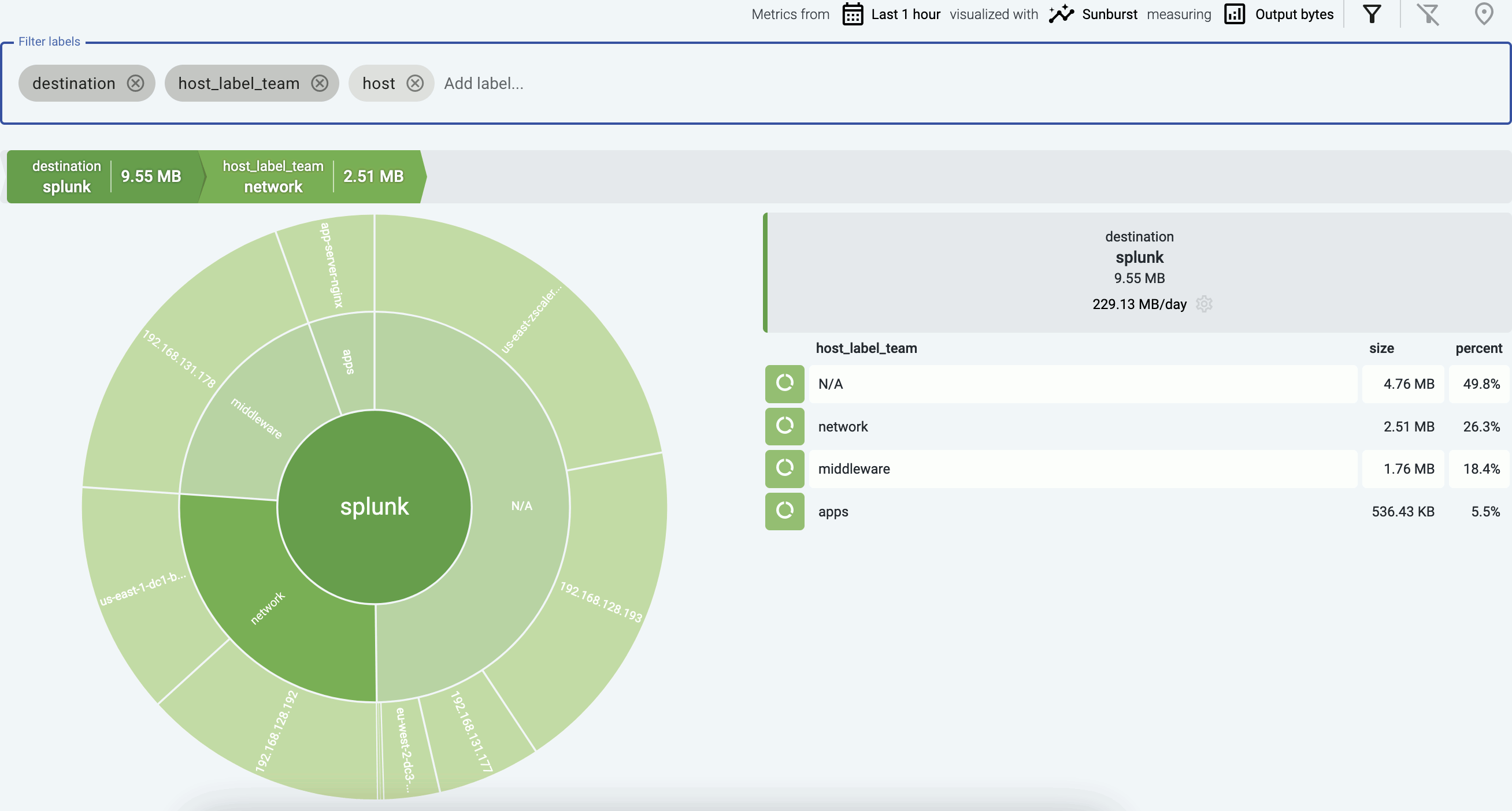

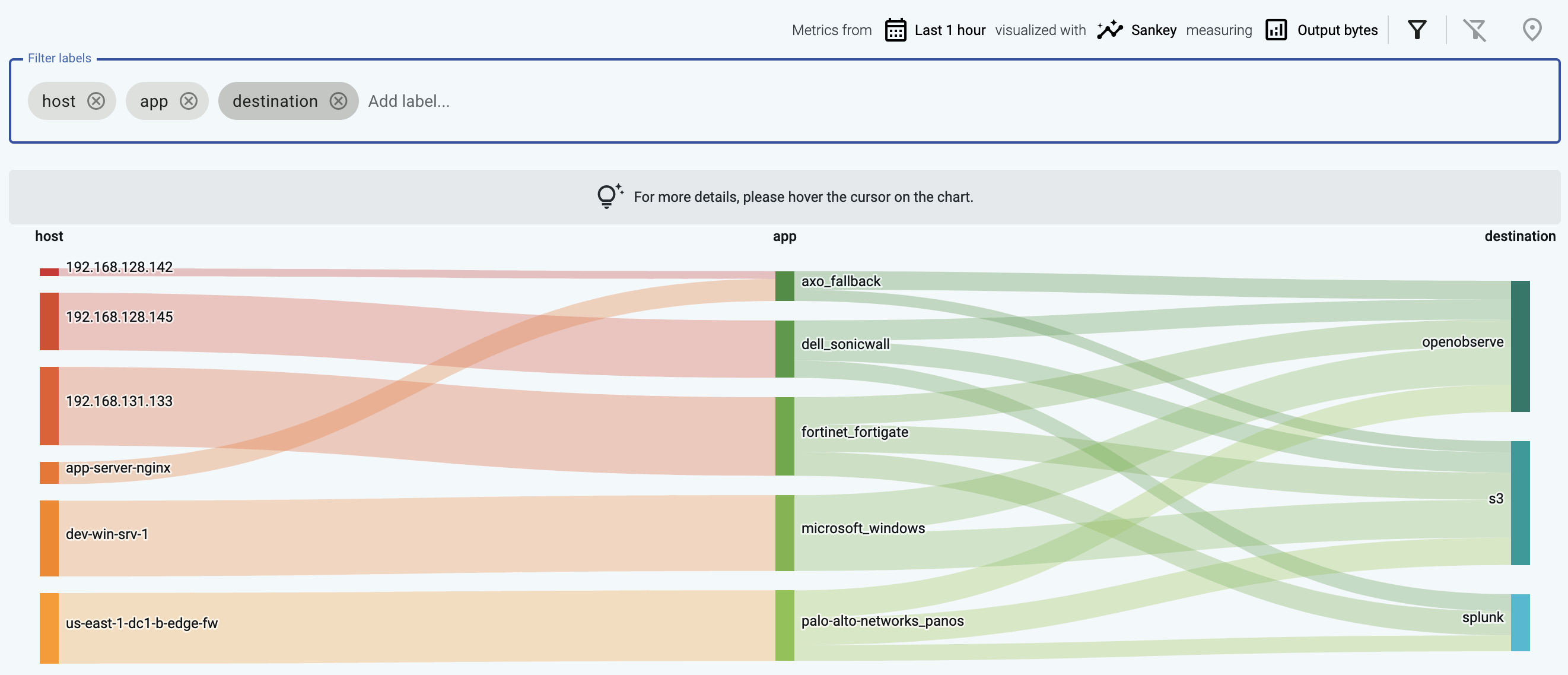

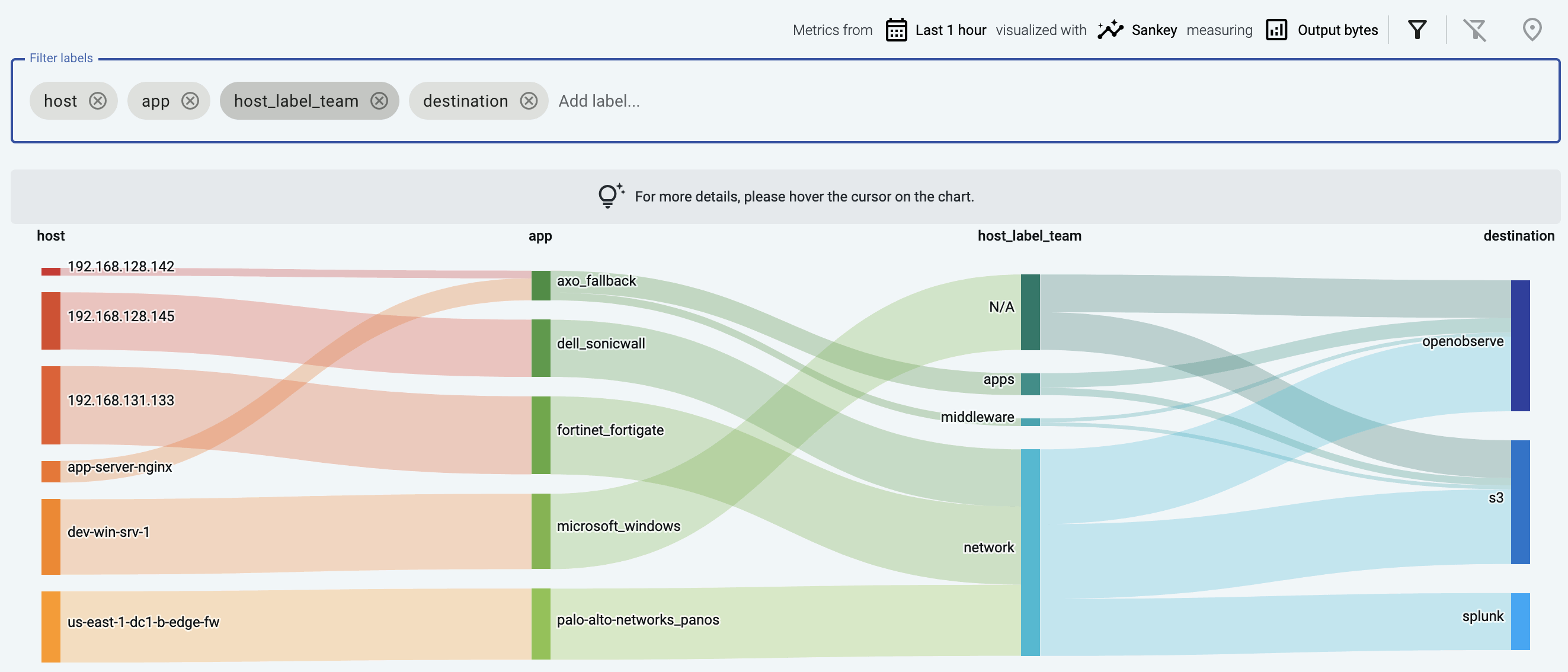

Axoflow gives you a vendor-agnostic management plane for best-in-class visibility into your data pipeline. You can:

- automatically discover and identify existing logging infrastructure,

- visualize the complete edge-to-edge flow of security data to understand the contribution of sources to the data pipeline, and

- monitor the elements of the pipeline and the data flow.

Try Axoflow

Would you like to try Axoflow? Request a zero-commitment demo or a sandbox environment to experience the power of our platform.

Ready to get started? Go to our Getting started section for the first steps.

1 - Introduction

2 - Axoflow architecture

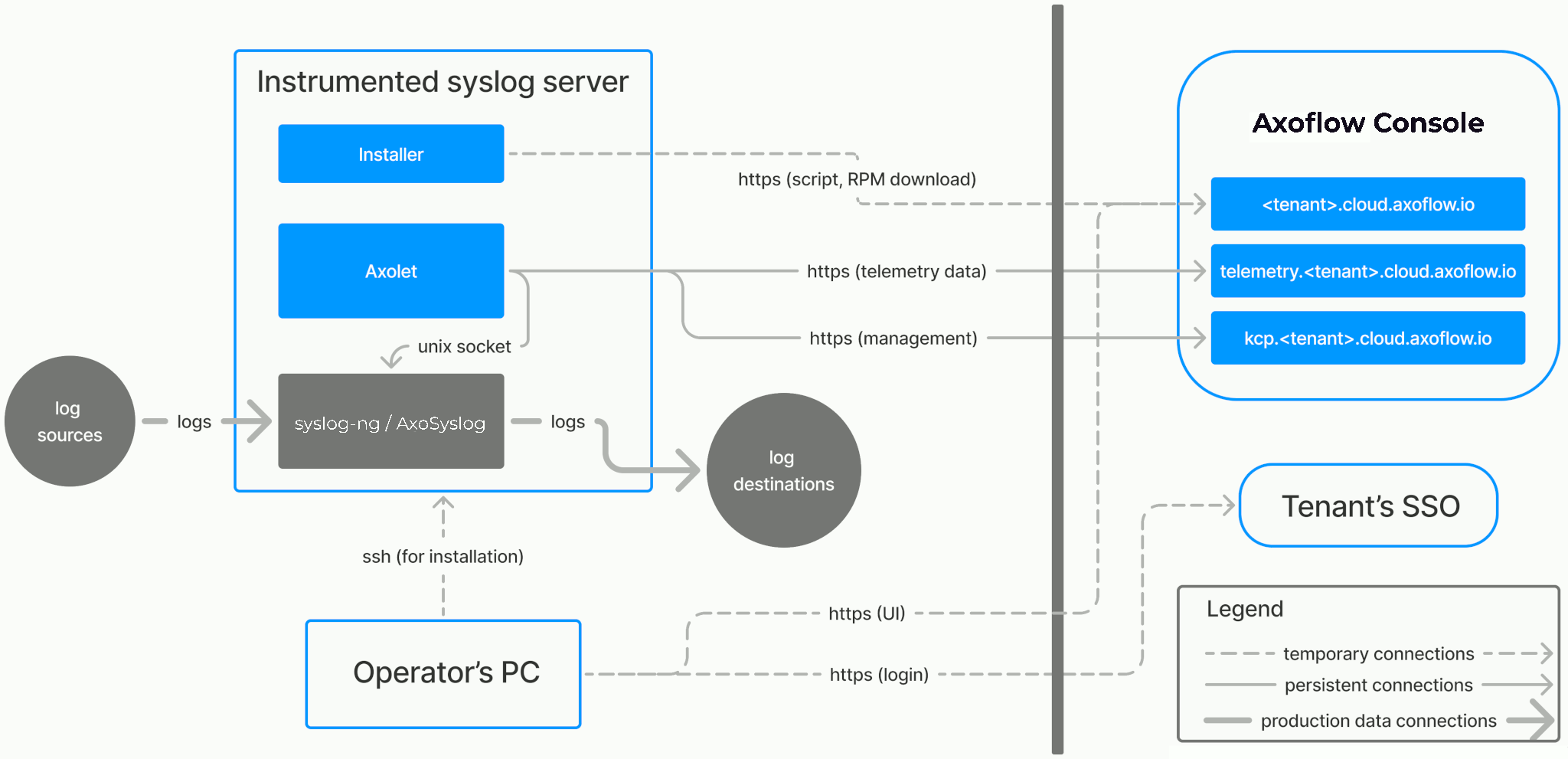

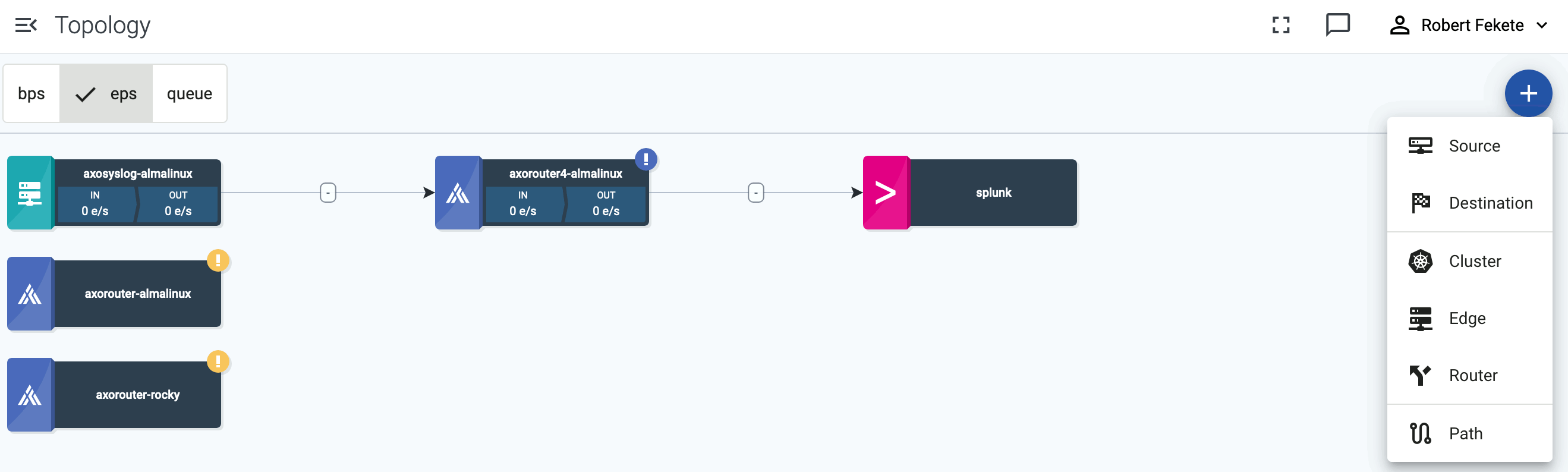

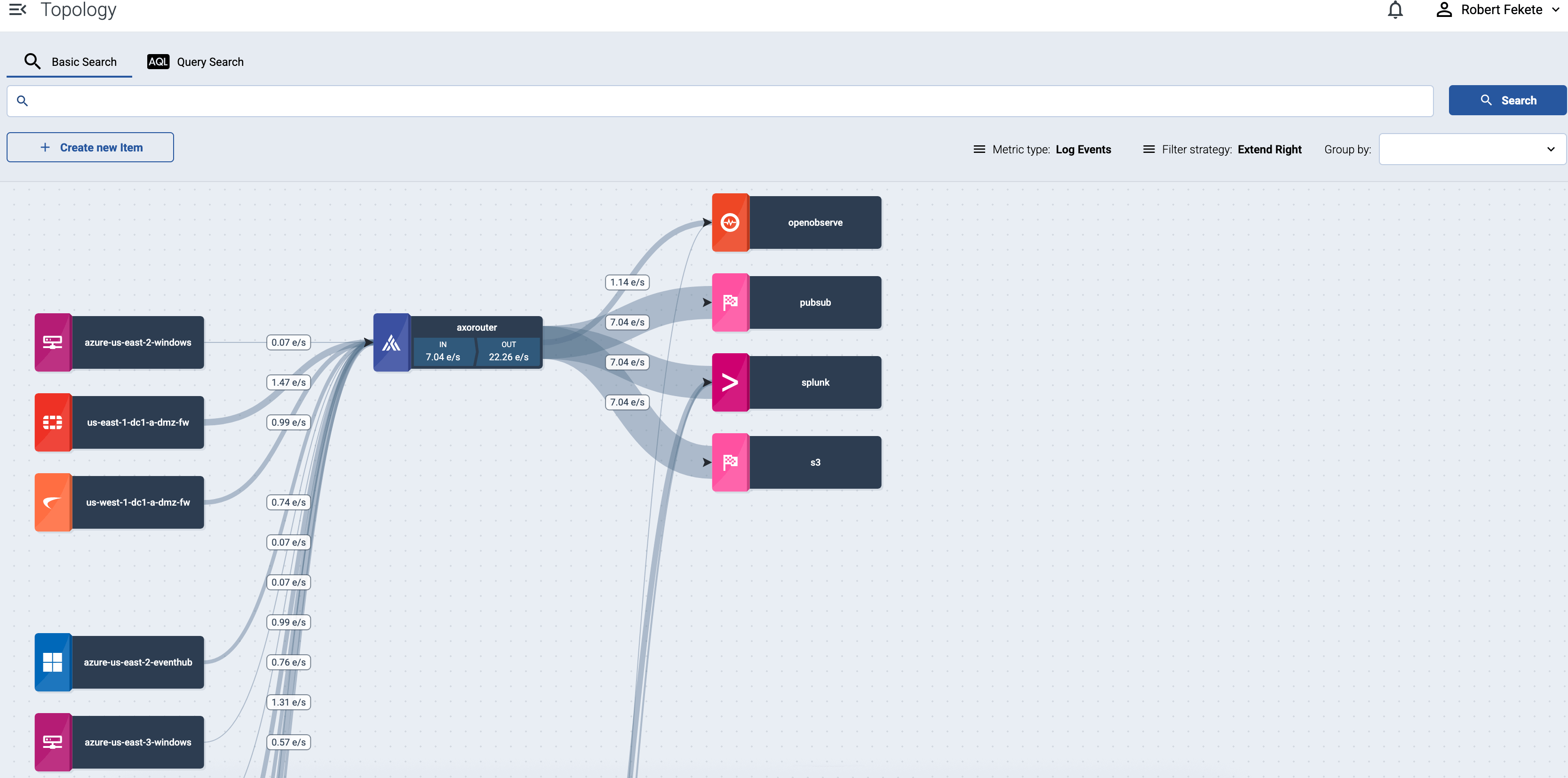

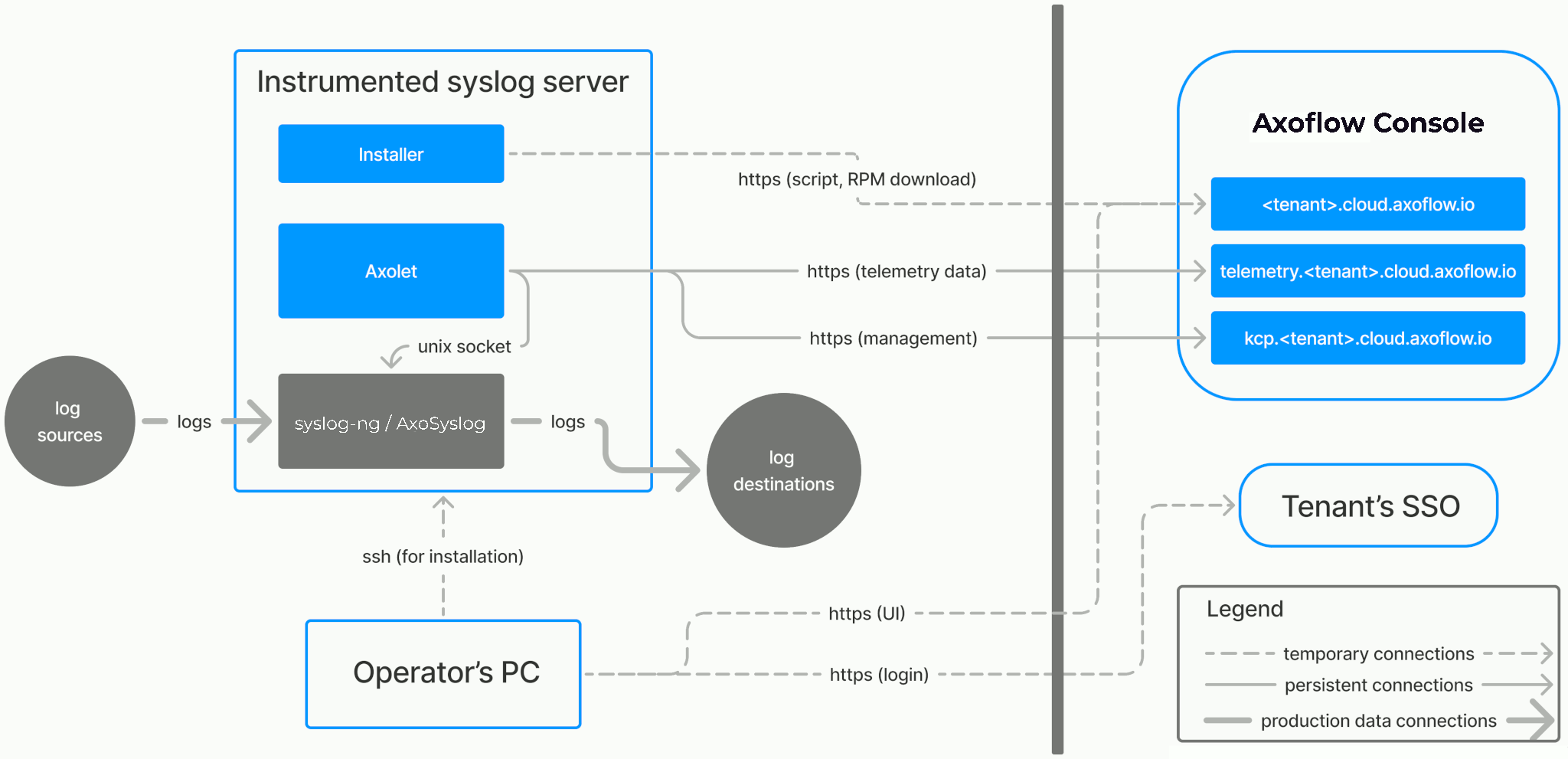

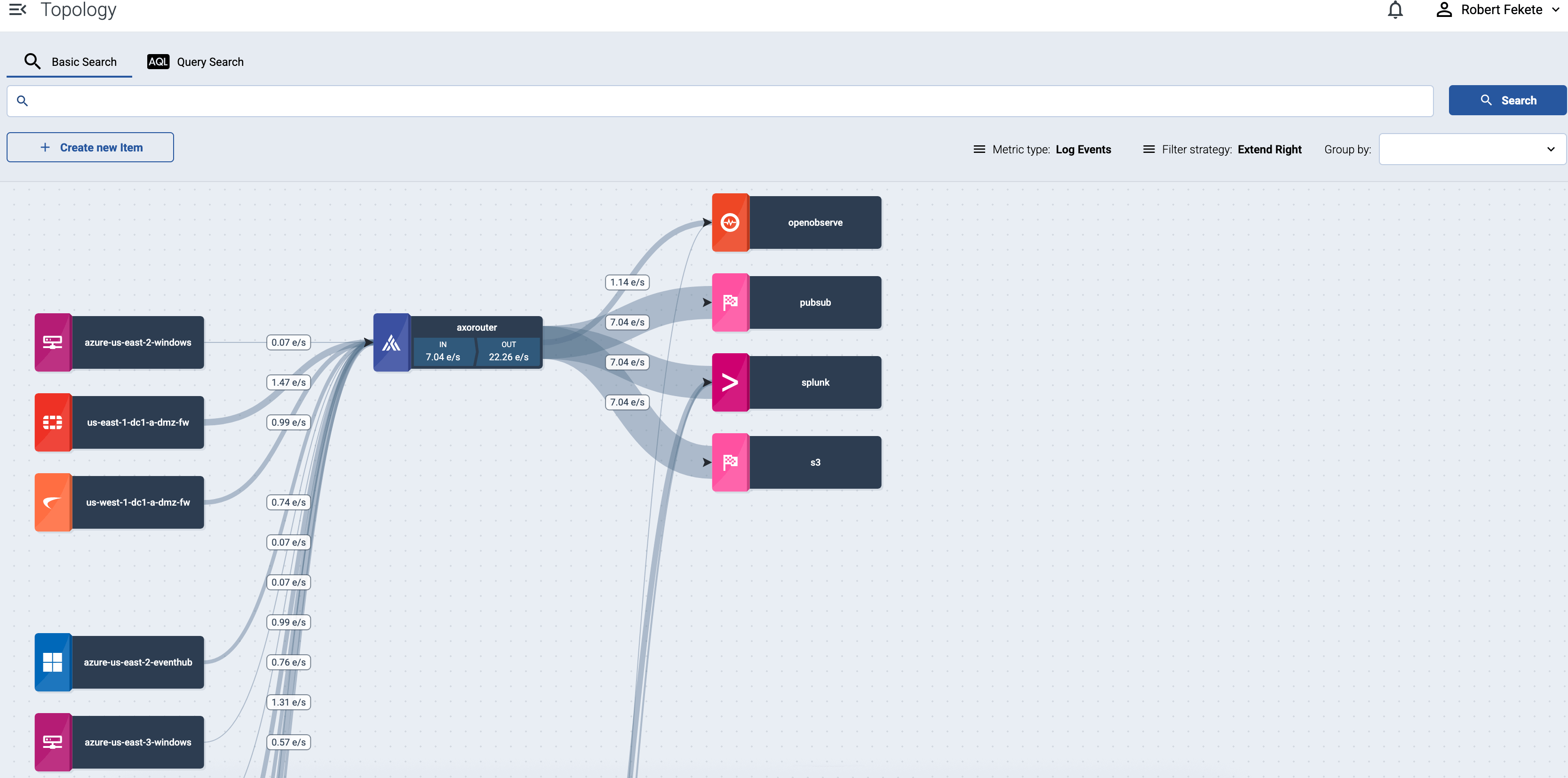

Axoflow provides an end-to-end pipeline that automates the collection, management, and loading of your security data in a vendor-agnostic way. The following figure highlights the Axoflow data flow:

Axoflow architecture

The architecture of Axoflow consists of two main elements: the Axoflow Console and the Data Plane.

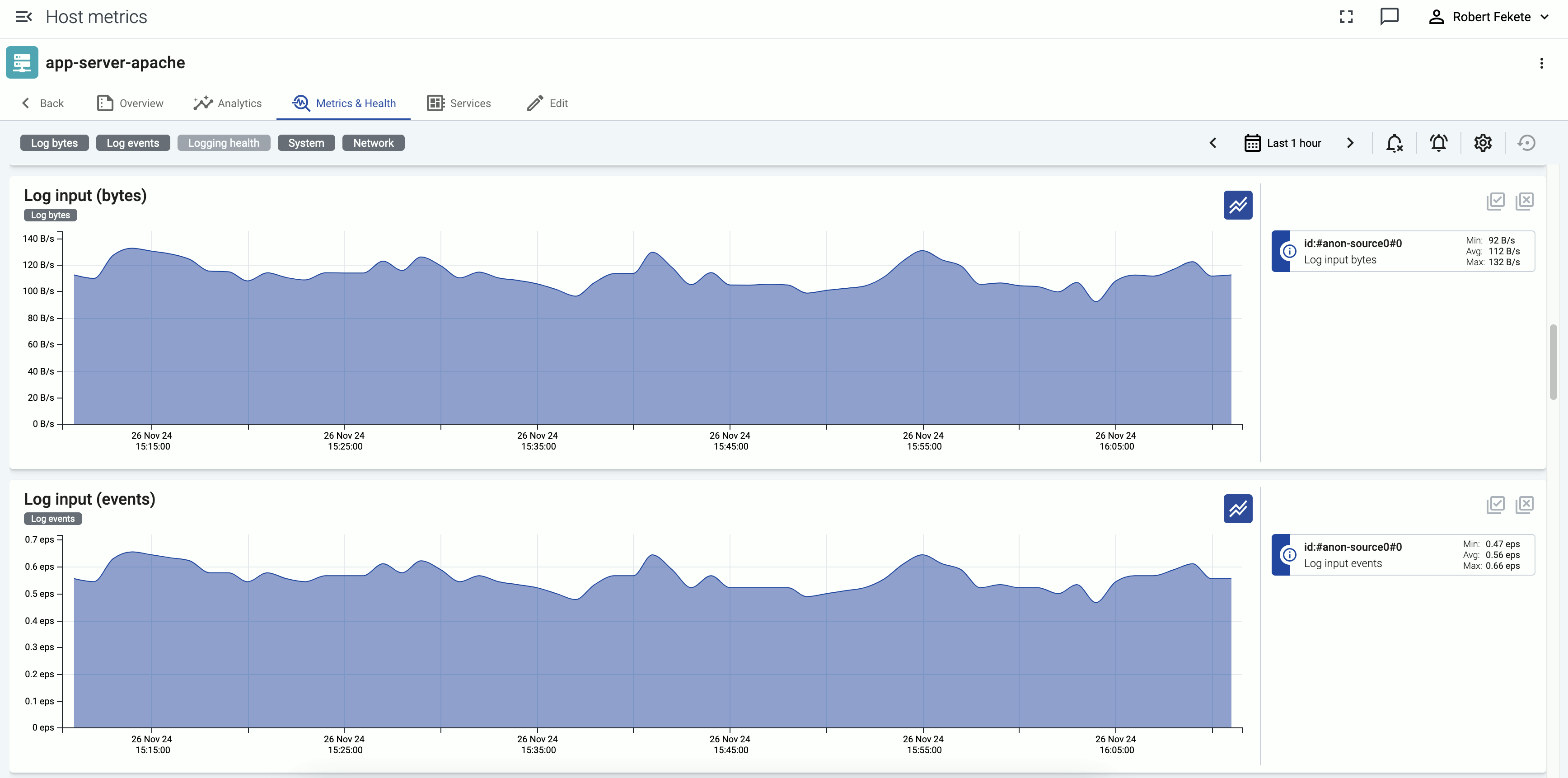

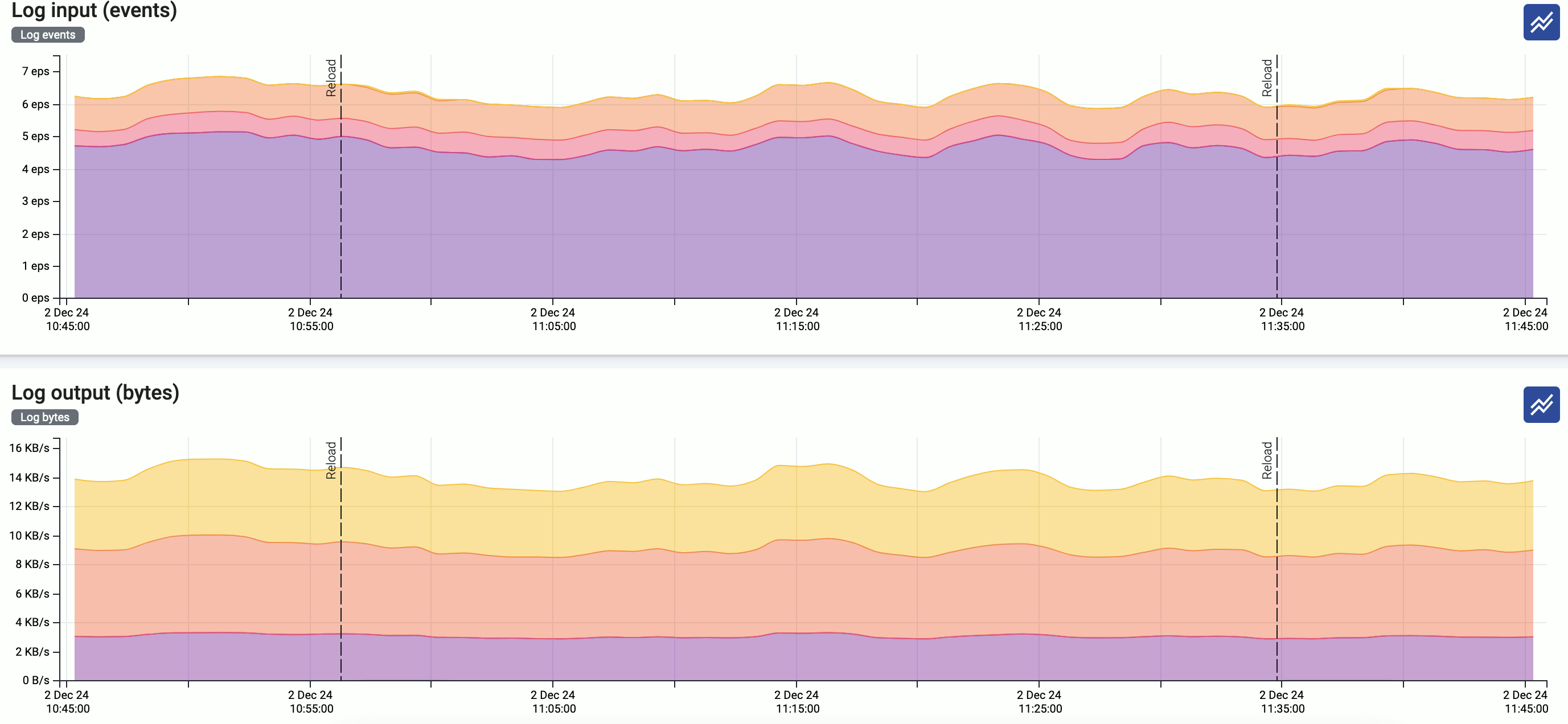

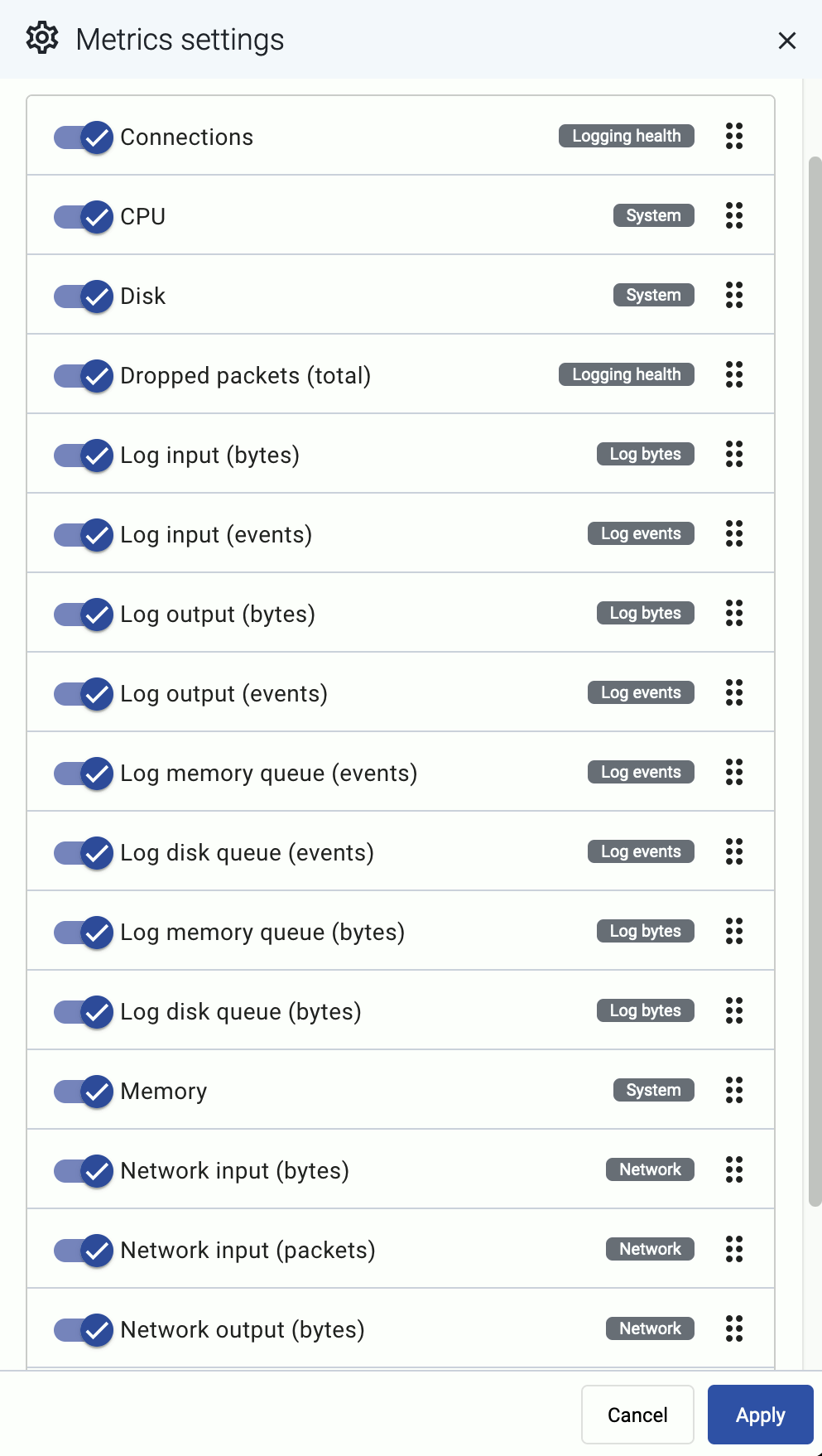

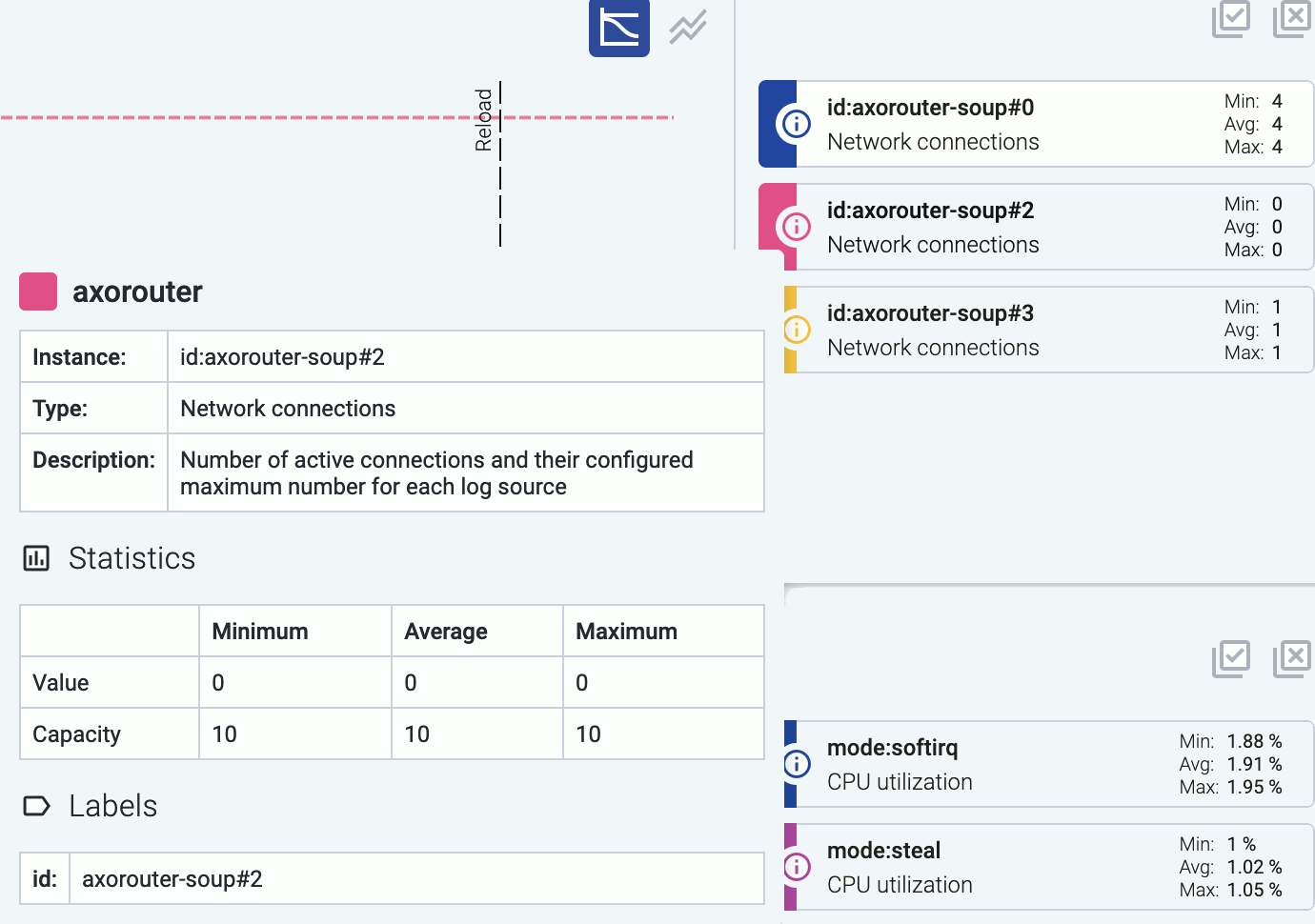

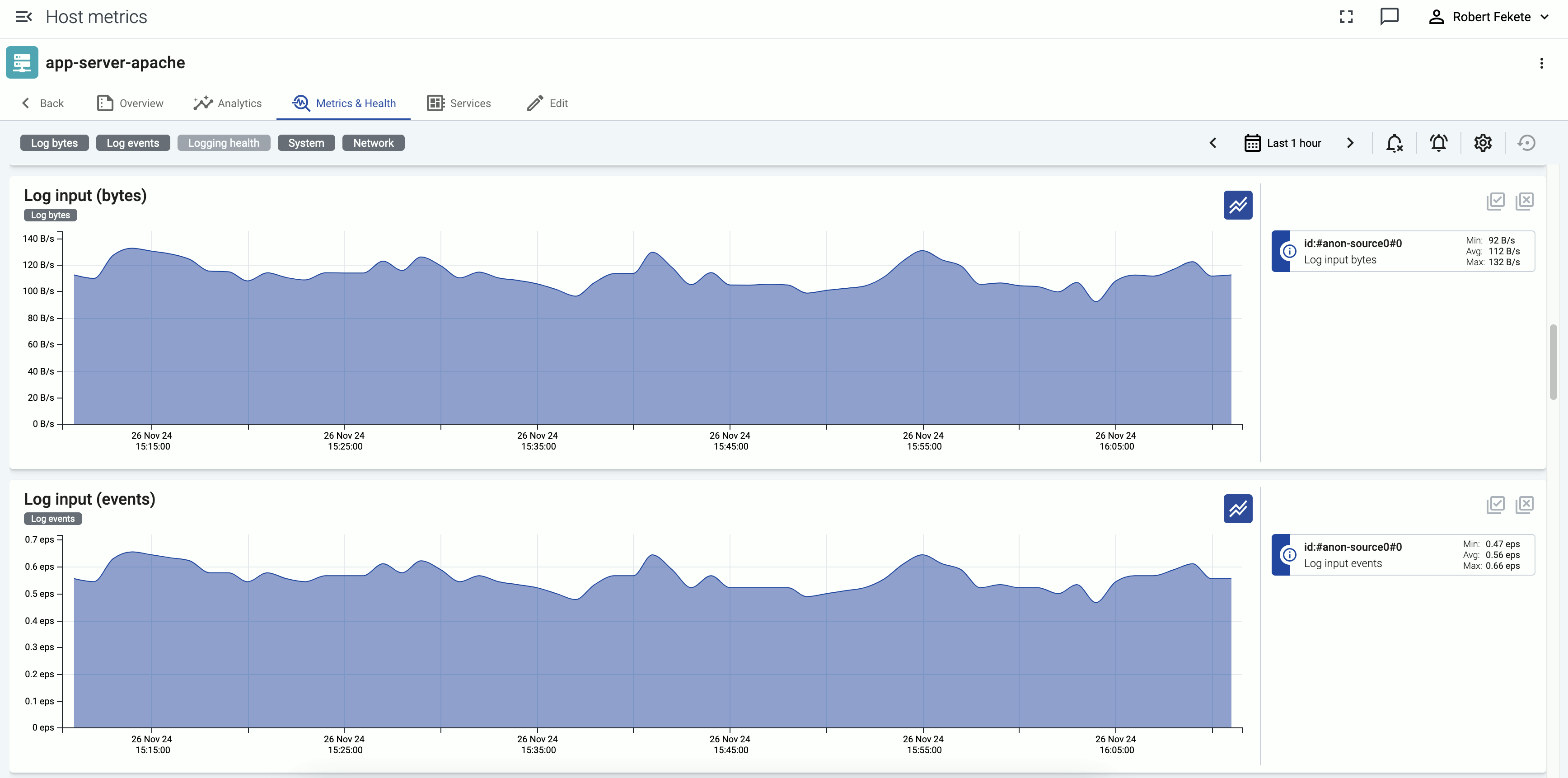

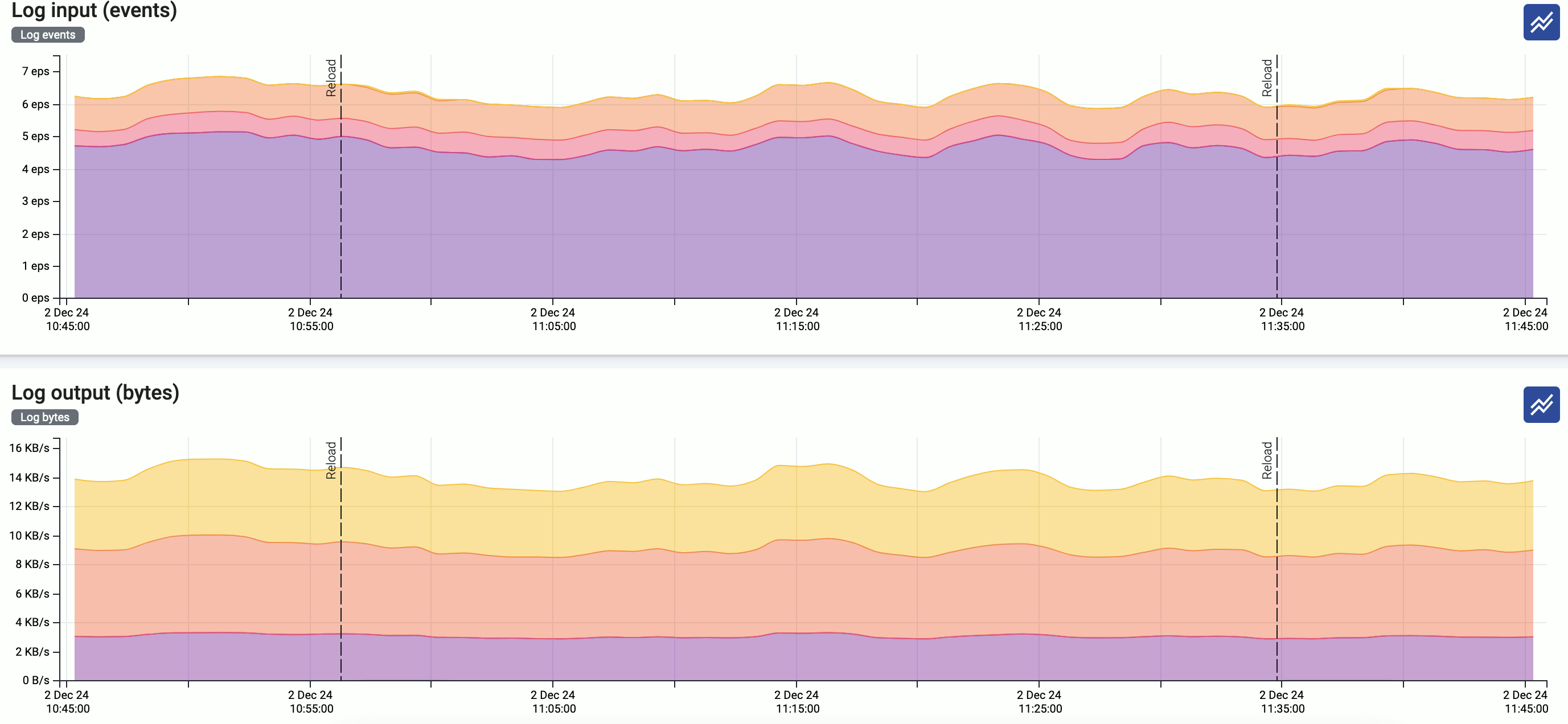

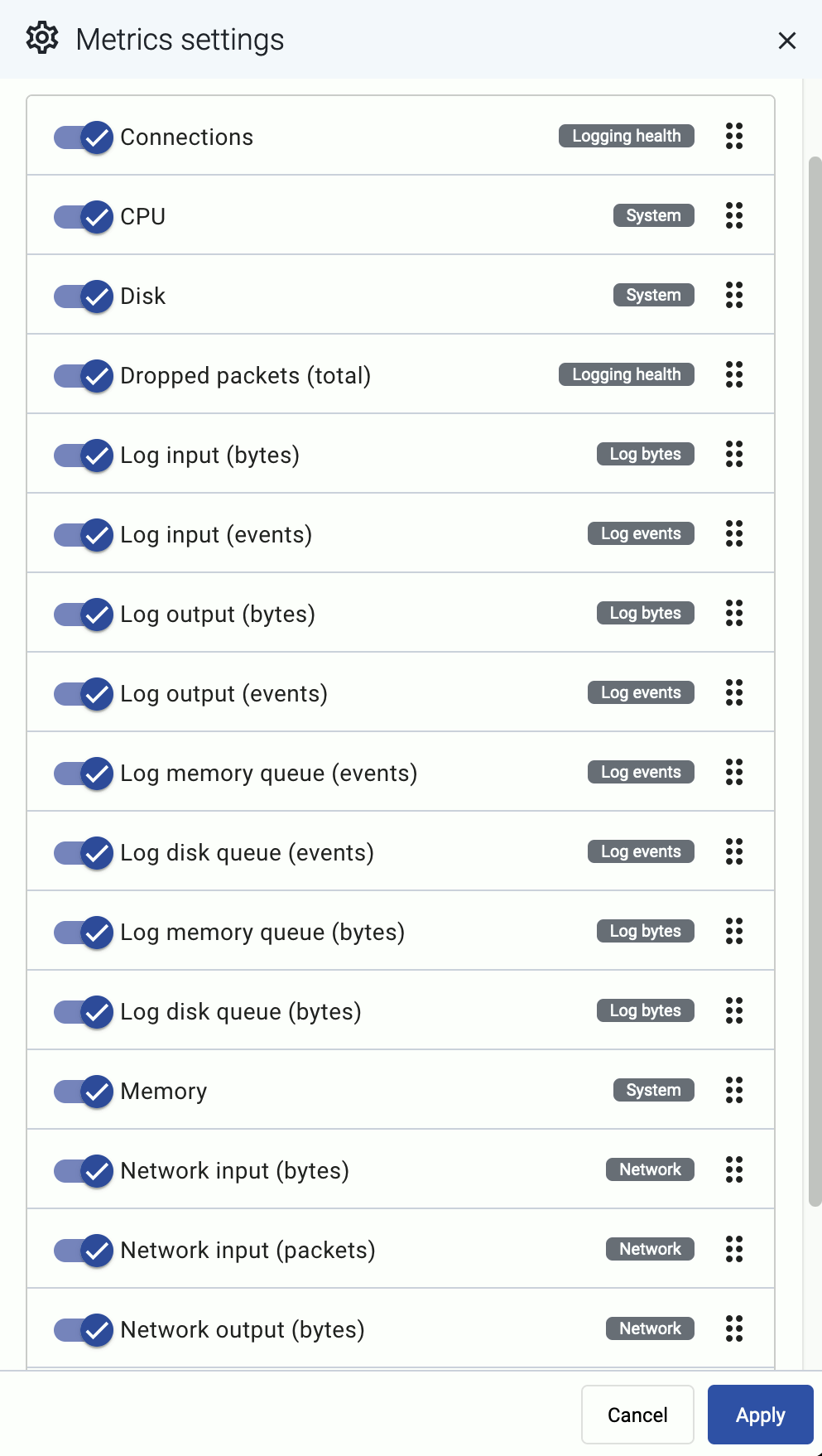

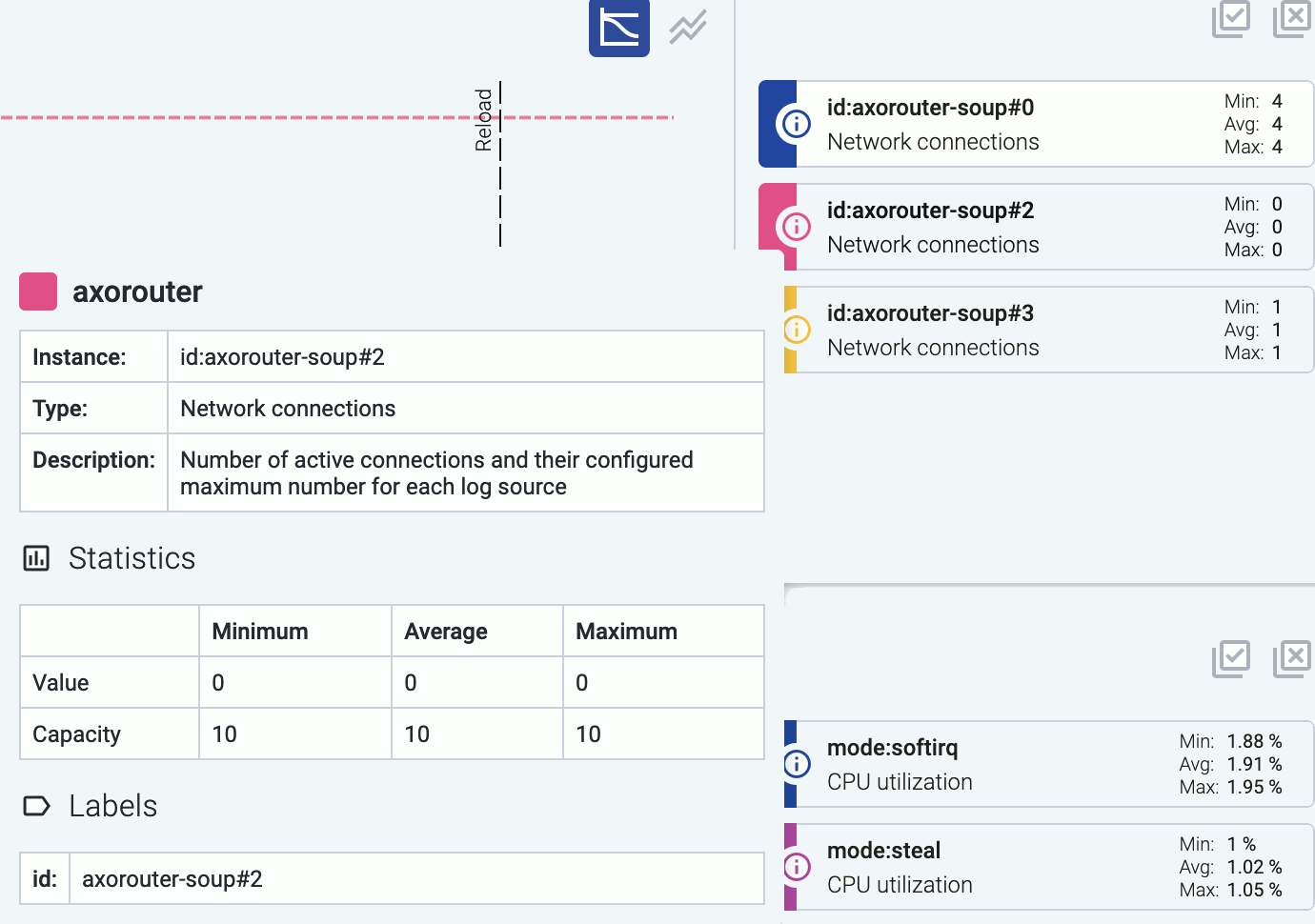

- The Axoflow Console is primarily concerned with highlighting the metadata of each event. This includes the source the event originated from, its size in bytes (and the event count over time), its destination, and any other element that describes the data.

- The Data Plane includes collector agents and processing engines (like AxoRouter) that collect, classify, filter, transform, and deliver telemetry data to its proper destinations (SIEMs, storage), and provide metrics to the Axoflow Console. The components of the Data Plane can be managed from the Axoflow Console, or can be independent.

Pipeline components

A telemetry pipeline consists of the following high-level components:

-

Data Sources: Data sources are the endpoints of the pipeline that generate the logs and other telemetry data you want to collect. For example, firewalls and other appliances, Kubernetes clusters, application servers, and so on can all be data sources. Data sources send their data either directly to a destination, or to a router.

Axoflow provides several log collecting agents and solutions to collect data in different environments, including connectors for cloud services, Kubernetes clusters, Linux servers, and Windows servers.

-

Routers: Routers (also called relays or aggregators) collect the data from a set of data sources and transport it to the destinations.

AxoRouter can collect, curate, and enrich the data: it automatically identifies your log sources and fixes common errors in the incoming data. It also converts the data into a format that best suits the destination to optimize ingestion speed and data quality.

-

Destinations: Destinations are your SIEM and storage solutions where the telemetry pipeline delivers your security data.

Your telemetry pipeline can consist of managed and unmanaged components. You can deploy and configure managed components from the Axoflow Console. Axoflow provides several managed components that help you collect or fetch data from your various data sources, or act as routers.

Axoflow Console

Axoflow Console is the data visualization and management UI of Axoflow. Available both as a SaaS and an on-premises solution, it collects and visualizes the metrics received from the pipeline components to provide insight into the details of your telemetry pipeline and the data it processes. It also allows you to:

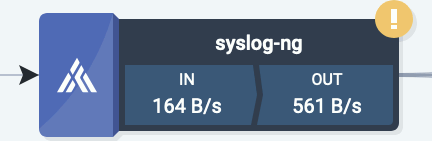

AxoRouter

AxoRouter is a router (aggregator) and data curation engine: it collects all kinds of telemetry and security data and has all the low-level functions you would expect of log-forwarding agents and routers. AxoRouter can also curate and enrich the collected data: it automatically identifies your log sources and fixes common errors in the incoming data, for example, missing hostnames, invalid timestamps, and formatting errors.

AxoRouter also has a range of zero-maintenance connectors for various networking and security products (for example, switches, firewalls, and web gateways), so it can classify the incoming data (by recognizing the product that is sending it), and apply various data curation and enrichment steps to reduce noise and improve data quality.

Before sending your data to its destination, AxoRouter automatically converts the data into a format that best suits the destination to optimize ingestion speed and data quality. For example, when sending data to Splunk, setting the proper sourcetype and index is essential.

Note

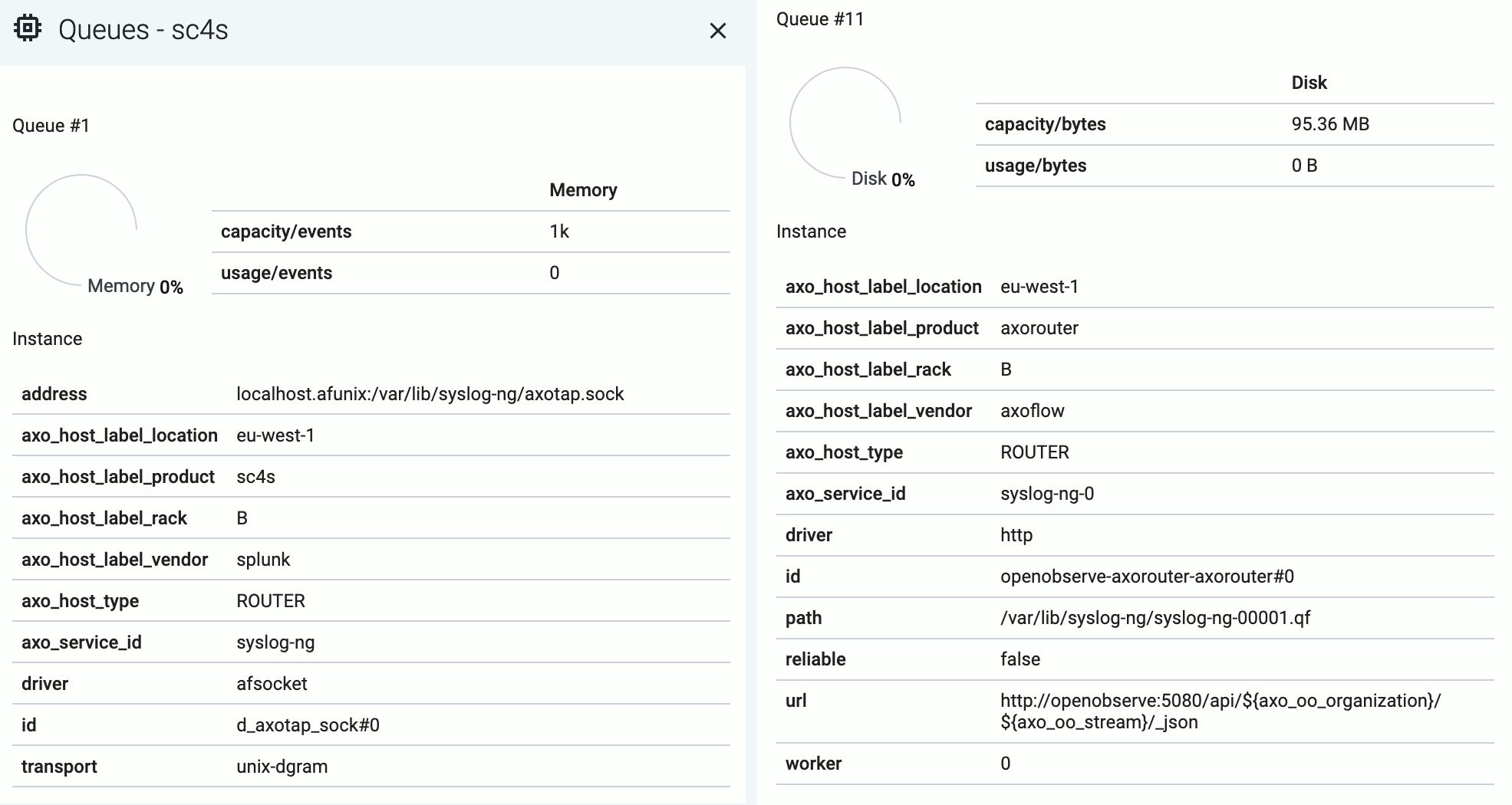

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

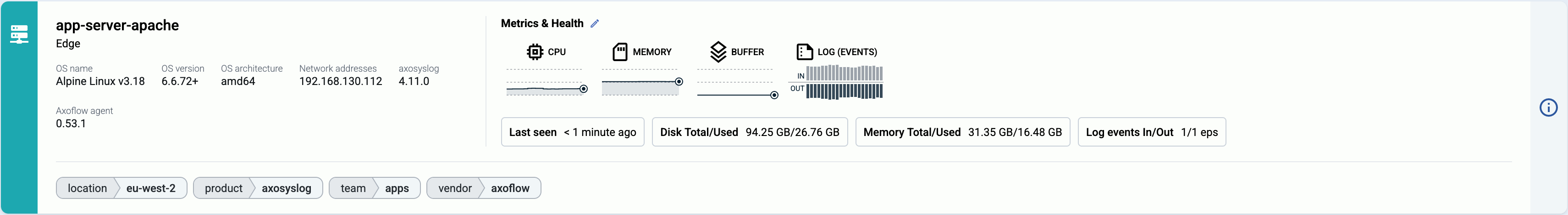

Axolet

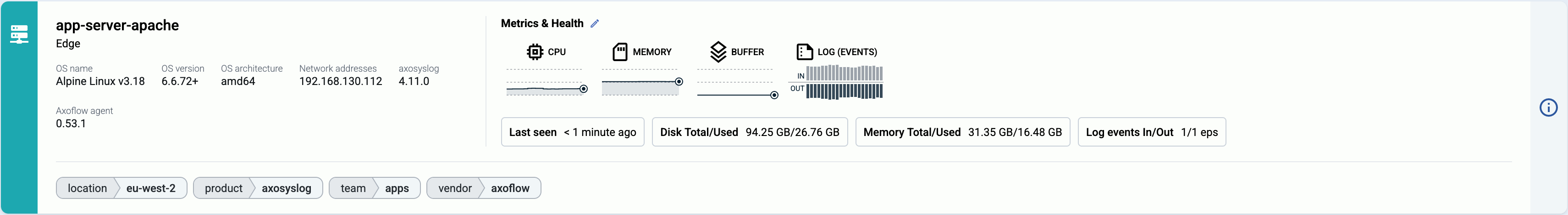

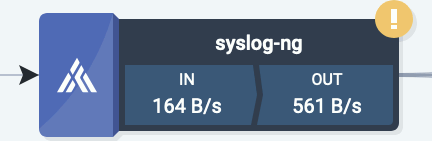

Axolet is a monitoring and management agent that runs on the data source. It integrates with the local log collector (like AxoSyslog, Splunk Connect for Syslog, or syslog-ng) and provides detailed metrics about the host and its data traffic to the Axoflow Console.

3 - Deployment scenarios

Thanks to its flexible deployment modes, you can quickly and transparently insert Axoflow and its processing node (called AxoRouter) into your data pipeline and gain instant benefits:

- Data reduction

- Improved SIEM accuracy

- Configuration UI for routing data

- Automatic data classification

- Metrics about log ingestion, processing, and data drops

- Analytics about the transported data

- Health check, highlighting anomalies

Note

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

After the first step, you can further integrate your pipeline by deploying Axoflow agents to collect and manage your security data, or by onboarding your existing log collector agents (for example, syslog-ng). Let’s see what these scenarios look like in detail.

3.1 - Axoflow Console deployment

You can use Axoflow Console as a SaaS or on premises.

We also support hybrid environments.

The Axoflow Console provides the UI for accessing metrics and analytics, deploying and configuring AxoRouter instances, configuring data flows, and so on.

3.2 - Transparent router mode

AxoRouter is a powerful aggregator and data processing engine that can receive data from a wide variety of sources, including:

- OpenTelemetry,

- syslog,

- Windows Event Forwarding,

- HTTP.

In transparent mode, you deploy AxoRouter in front of your SIEM and configure your sources or aggregators to send the logs to AxoRouter instead of the SIEM. The transparent deployment method is a quick, minimally invasive way to get instant benefits and value from Axoflow, and:

Note

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

Axoflow Console as SaaS

Axoflow Console on premises

3.3 - Router and edge deployment

Axoflow provides agents to collect data from all kinds of sources:

- Kubernetes clusters,

- cloud sources,

- security appliances,

- Linux servers,

- Microsoft Windows hosts.

(If you’d prefer to keep using your existing syslog infrastructure instead of the Axoflow agents, see Onboard existing syslog infrastructure.)

You can deploy the AxoRouter data aggregator on Linux and Kubernetes.

Using the Axoflow collector agents gives you:

- Reliable transport: Axoflow transports security data between its components using the OpenTelemetry protocol (OTLP), for high performance and to avoid losing messages.

- Managed components: You can configure, manage, monitor, and troubleshoot all these components from the Axoflow Console. For example, you can sample the data flowing through each component.

- Metrics: Detailed metrics from every collector provide unprecedented insight into the status of your data pipeline.

3.4 - Onboard existing syslog infrastructure

If your organization already has a syslog architecture in place, Axoflow provides ways to reuse it. This allows you to integrate your existing infrastructure with Axoflow, and optionally – in a later phase – replace your log collectors with the agents provided by Axoflow.

Managed AxoRouter deployments

In this deployment mode you use the centralized management UI of Axoflow Console to manage your AxoRouter instances. This provides the tightest integration and the most benefits, including:

Unmanaged AxoRouter deployments

In this mode, you install AxoRouter on the data source to replace its local collector agent, and manage it manually. That way you get the functional benefits of using AxoRouter as an aggregator and data curation engine to collect and classify your data, but can manage its configuration as you see fit. This gives you all the benefits of the read-only mode (since AxoRouter includes Axolet as well), and in addition, it provides:

Read-only mode

In this scenario, you install Axolet on the data source. Axolet is a monitoring (and management) agent that integrates with the local log collector, like AxoSyslog, Splunk Connect for Syslog, or syslog-ng, and sends detailed metrics about the host and its data traffic to the Axoflow Console. This allows you to use the Axoflow Console to:

4 - Try for free

To see Axoflow in action, you have a number of options:

- Watch some of the product videos on our blog.

- Request a demo sandbox environment. This pre-deployed environment contains a demo pipeline complete with destinations, routers, and sources that generate traffic, and you can check the metrics, use the analytics, modify the flows, and so on to get a feel for using a real Axoflow deployment.

- Request a free evaluation version. That’s an empty cloud deployment you can use for testing and PoC: you can onboard your own pipeline elements, add sources and destinations, and see how Axoflow performs with your data!

- Request a live demo where our engineers show you how Axoflow works, and can discuss your specific use cases in detail.

5 - Getting started

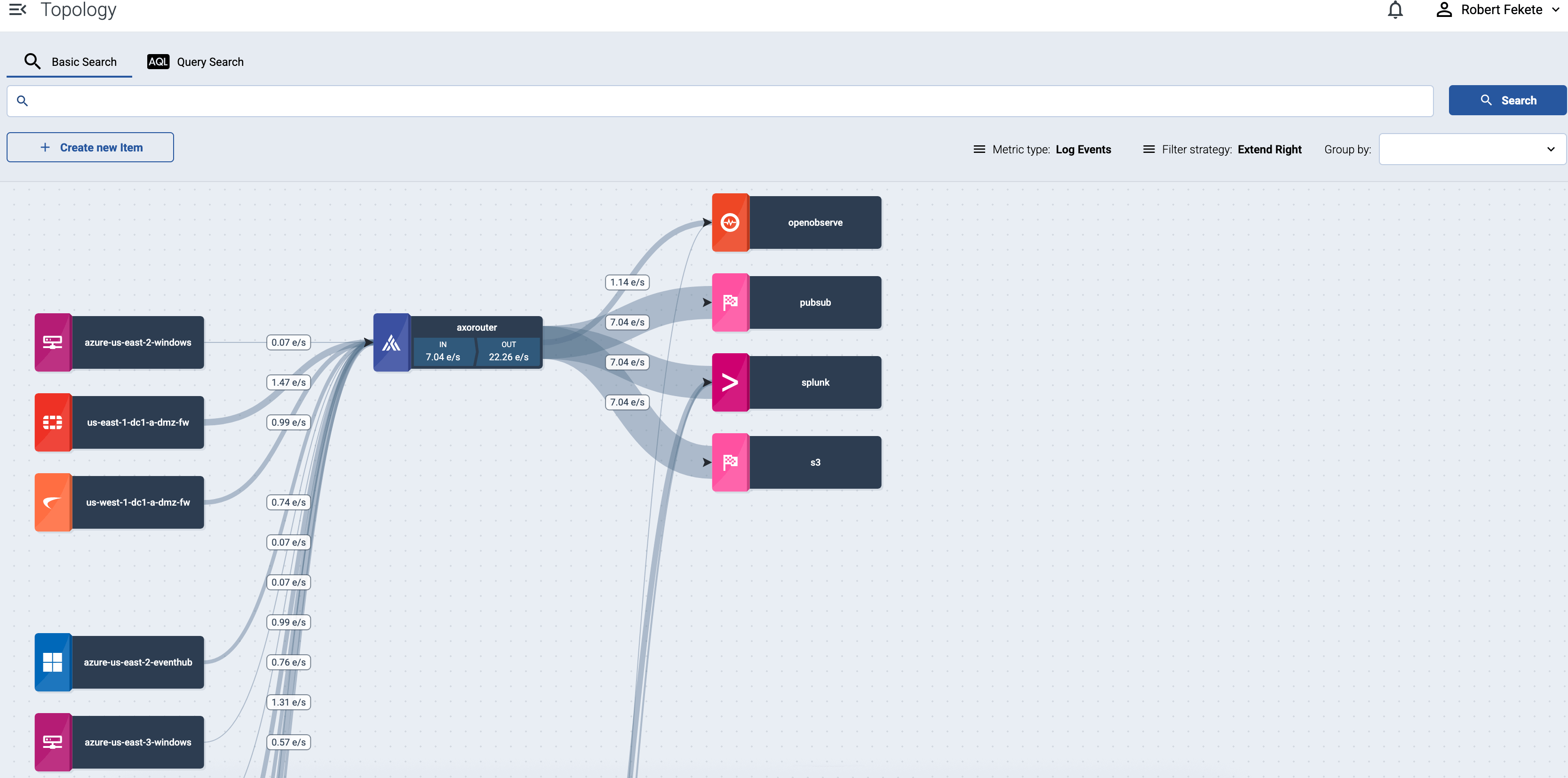

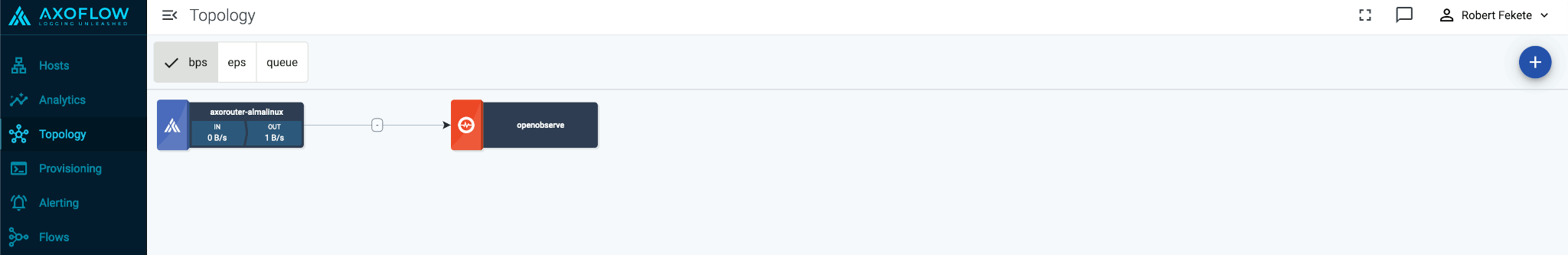

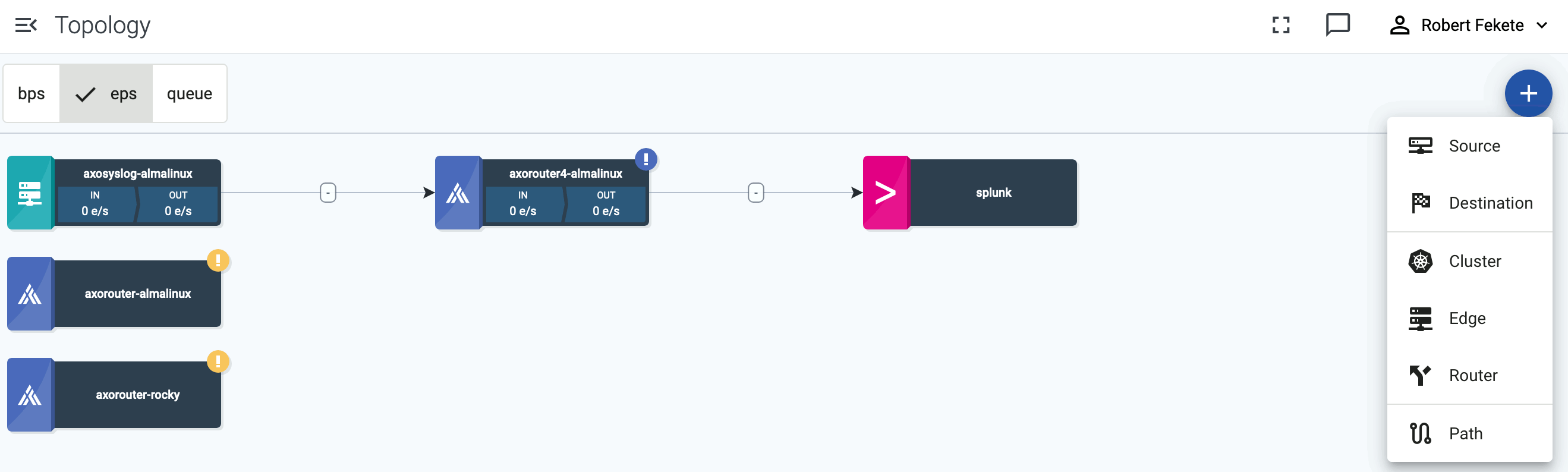

This guide shows you how to get started with Axoflow. You’re going to install AxoRouter, and configure or create a source to send data to AxoRouter. You’ll also configure AxoRouter to forward the received data to your destination SIEM or storage provider. The resulting topology will look something like this:

Why use Axoflow

Using the Axoflow security data pipeline automatically corrects and augments the security data you collect, resulting in high-quality, curated, SIEM-optimized data. It also removes redundant data to reduce storage and SIEM costs. In addition, it automates pipeline configuration and provides metrics and alerts for your telemetry data flows.

Prerequisites

You’ll need:

-

An Axoflow subscription, access to a free evaluation version, or an on-premises deployment.

-

A data source. This can be any host that you can configure to send syslog or OpenTelemetry data to the AxoRouter instance you’ll install. If you don’t want to change the configuration of an existing device, you can use a virtual machine or a Docker container on your local computer.

-

A host that you’ll install AxoRouter on. This can be a separate Linux host, or a virtual machine running on your local computer.

AxoRouter should work on most Red Hat and Debian compatible Linux distributions. For production environments, we recommend using Red Hat 9.

-

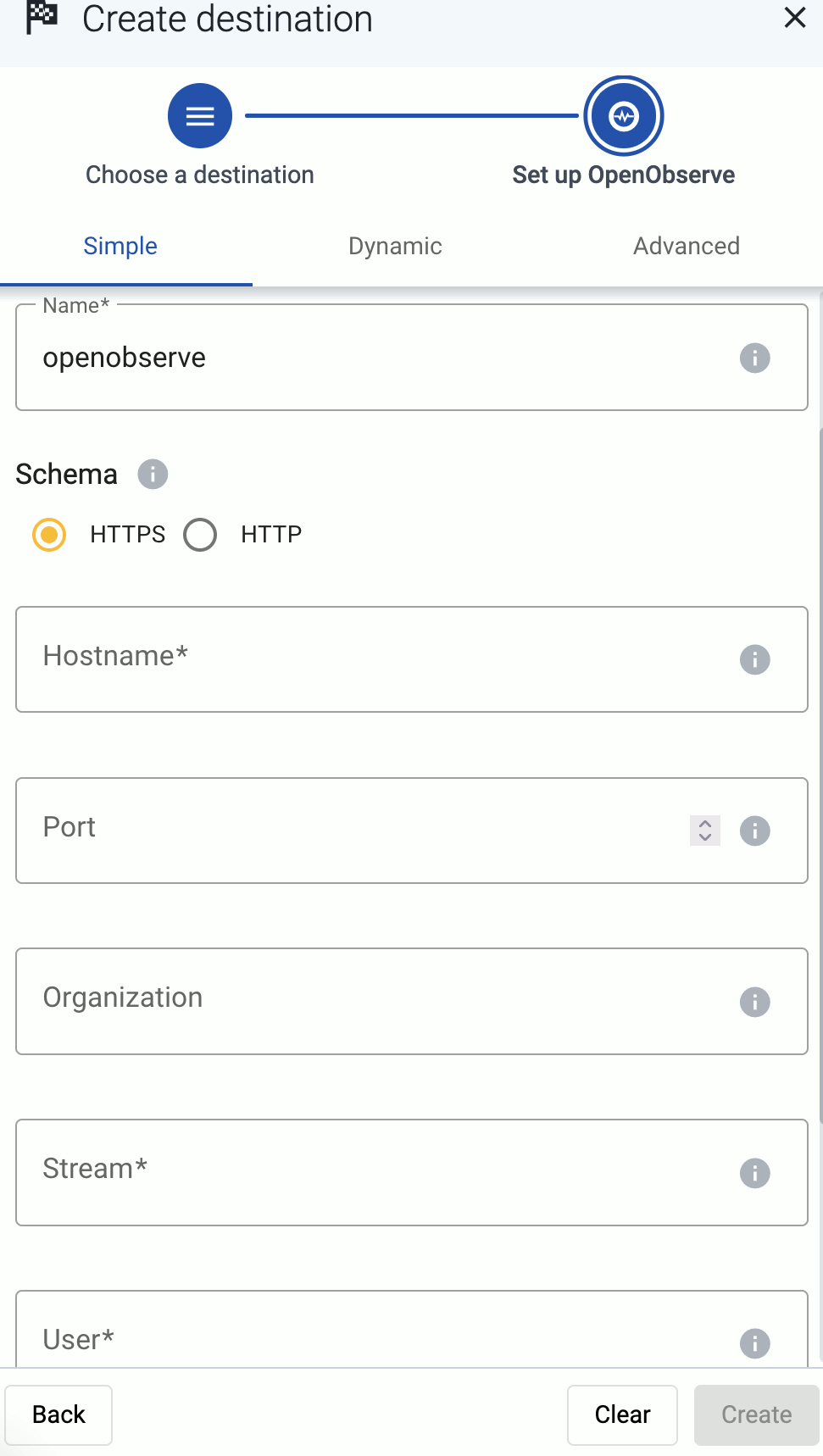

Access to a supported SIEM or storage provider, like Splunk or Amazon S3. For a quick test of Axoflow, you can use a free Splunk or OpenObserve account as well.

Network access

The hosts must be able to access the following domains related to the Axoflow Console:

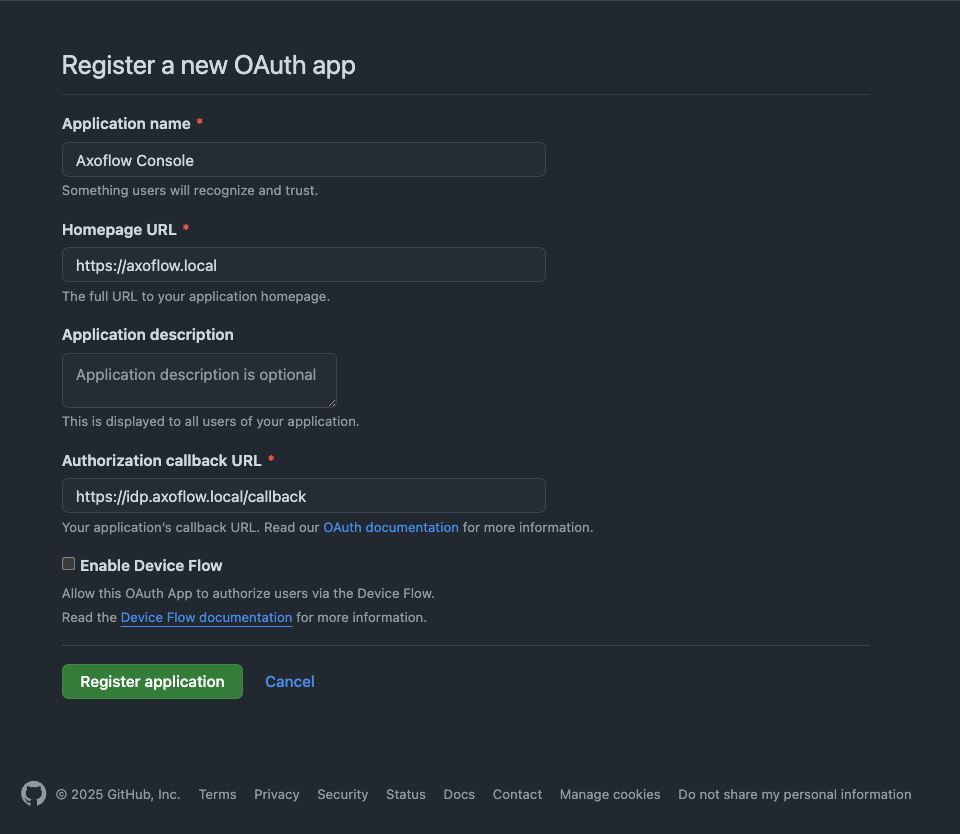

Log in to the Axoflow Console

Verify that you have access to the Axoflow Console.

- Open https://<your-tenant-id>.axoflow.io/ in your browser.

- Log in using Google Authentication.

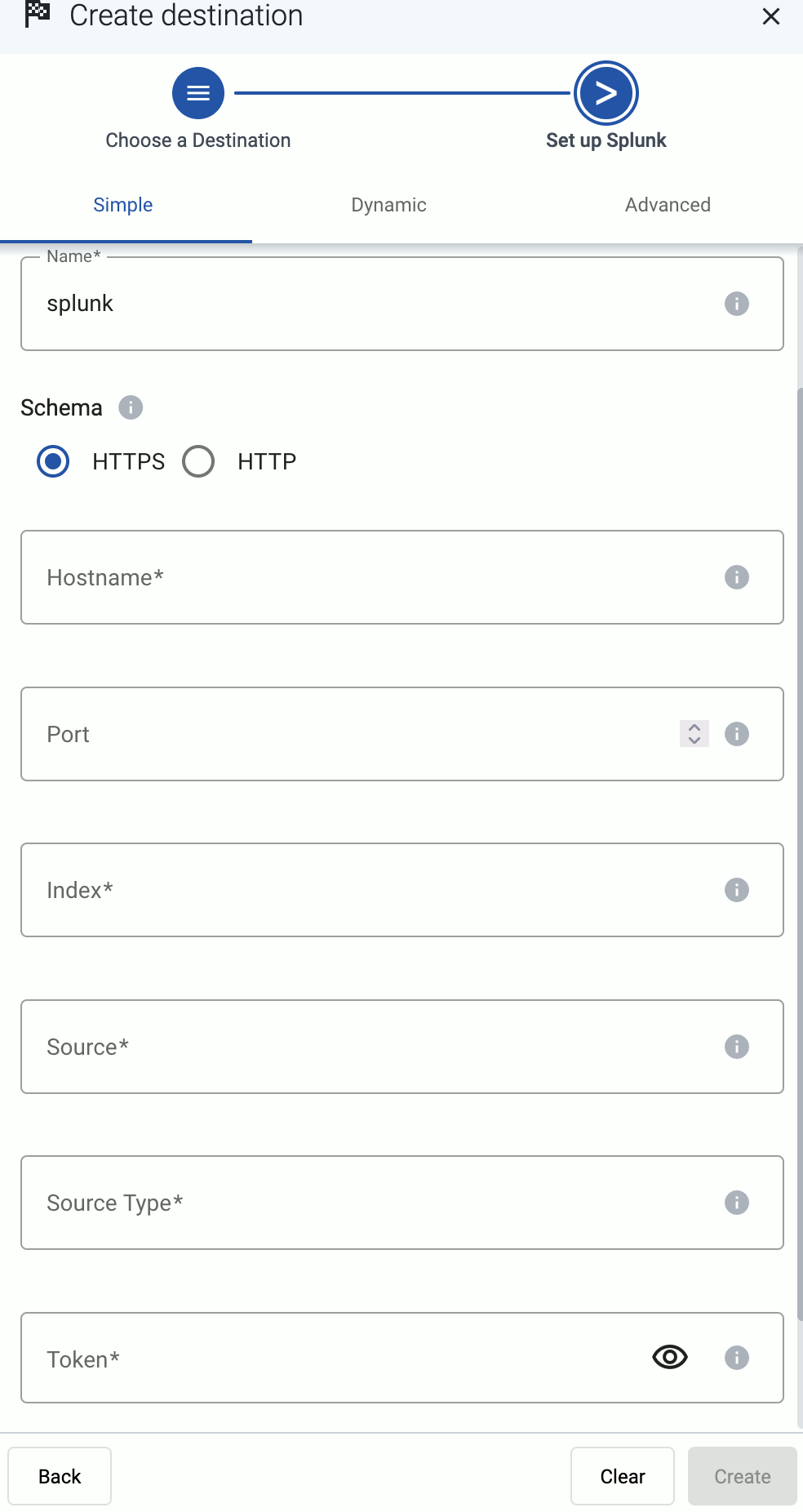

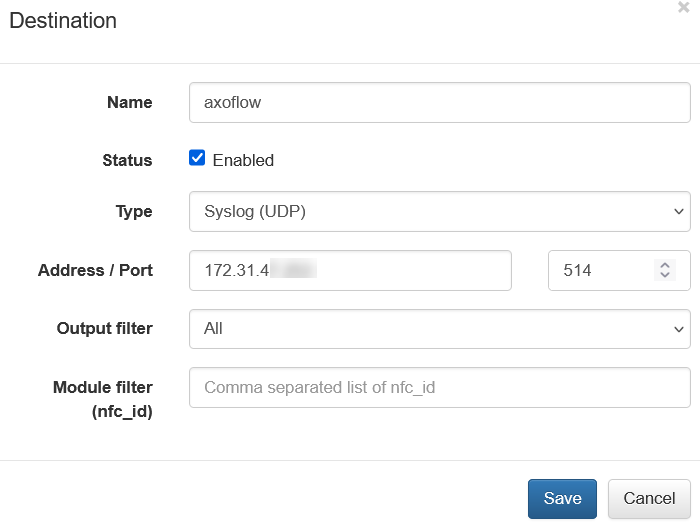

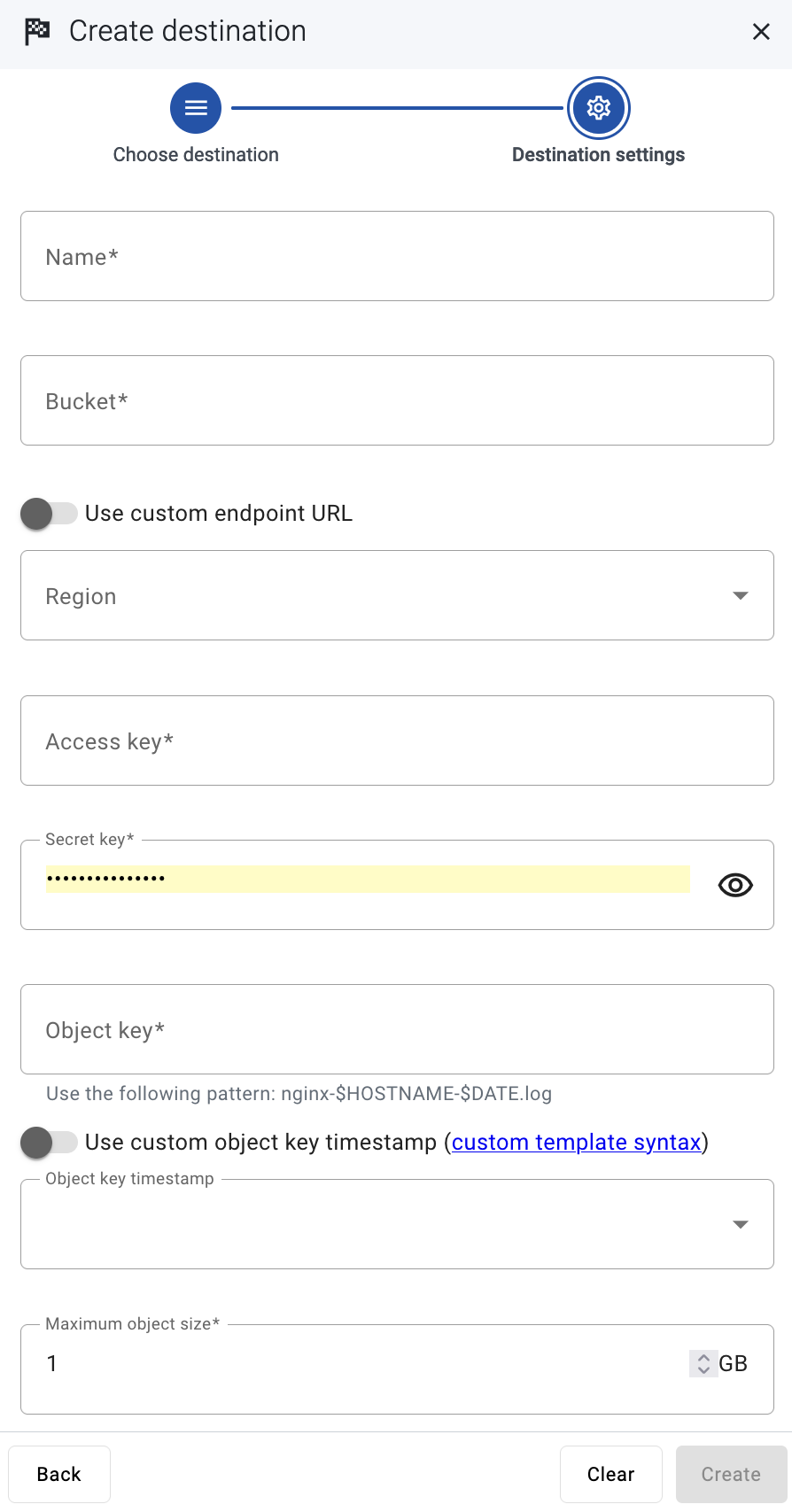

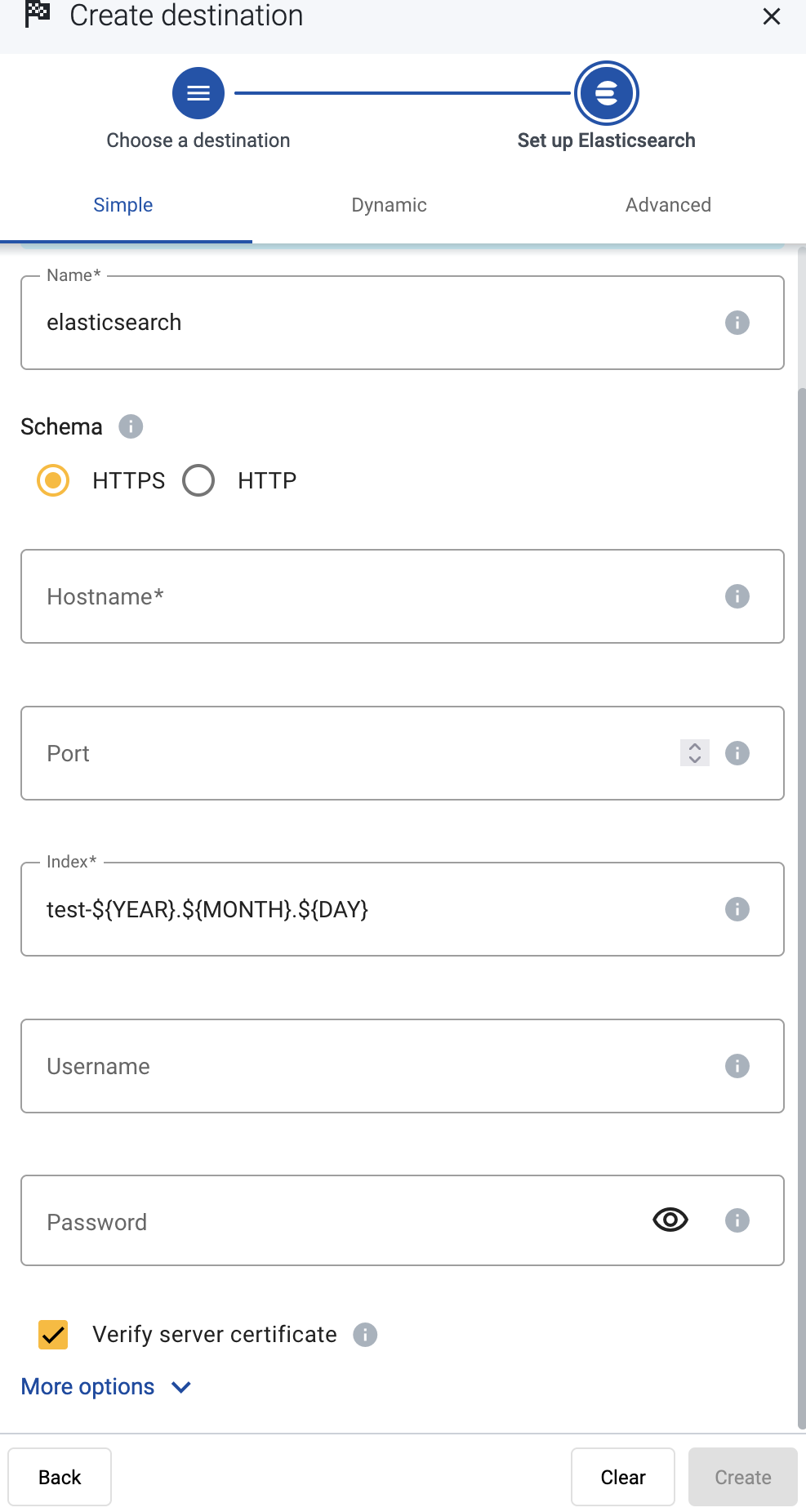

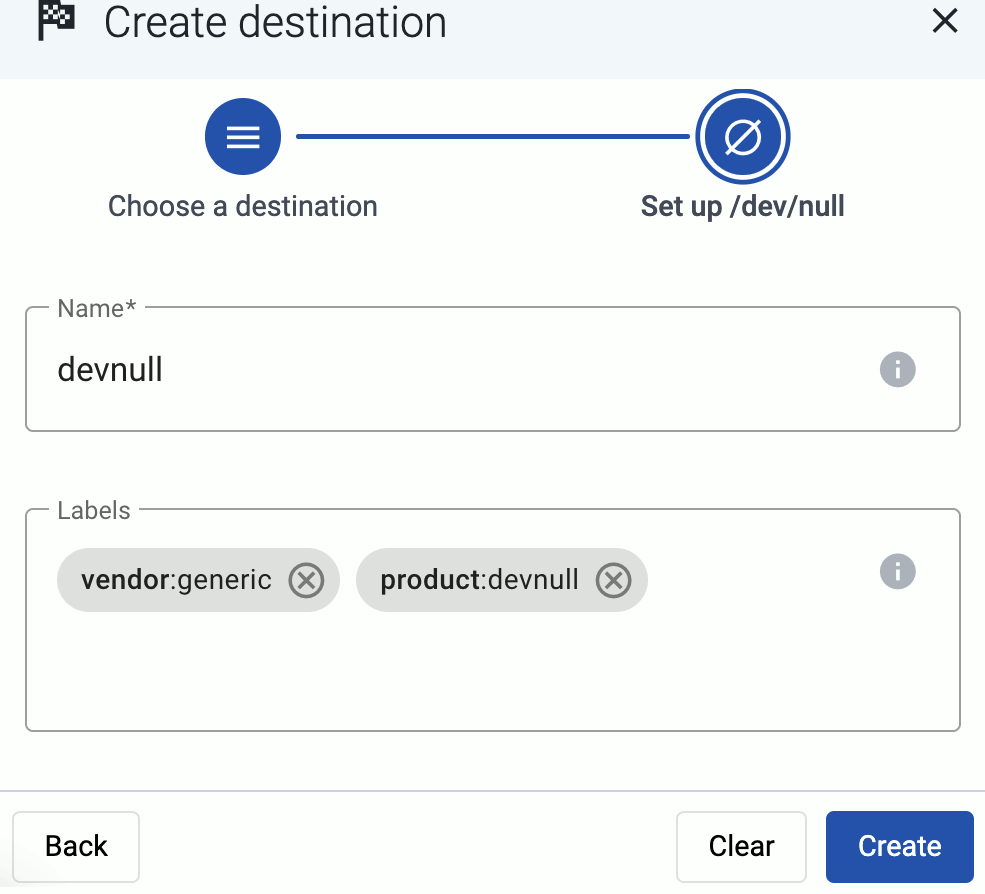

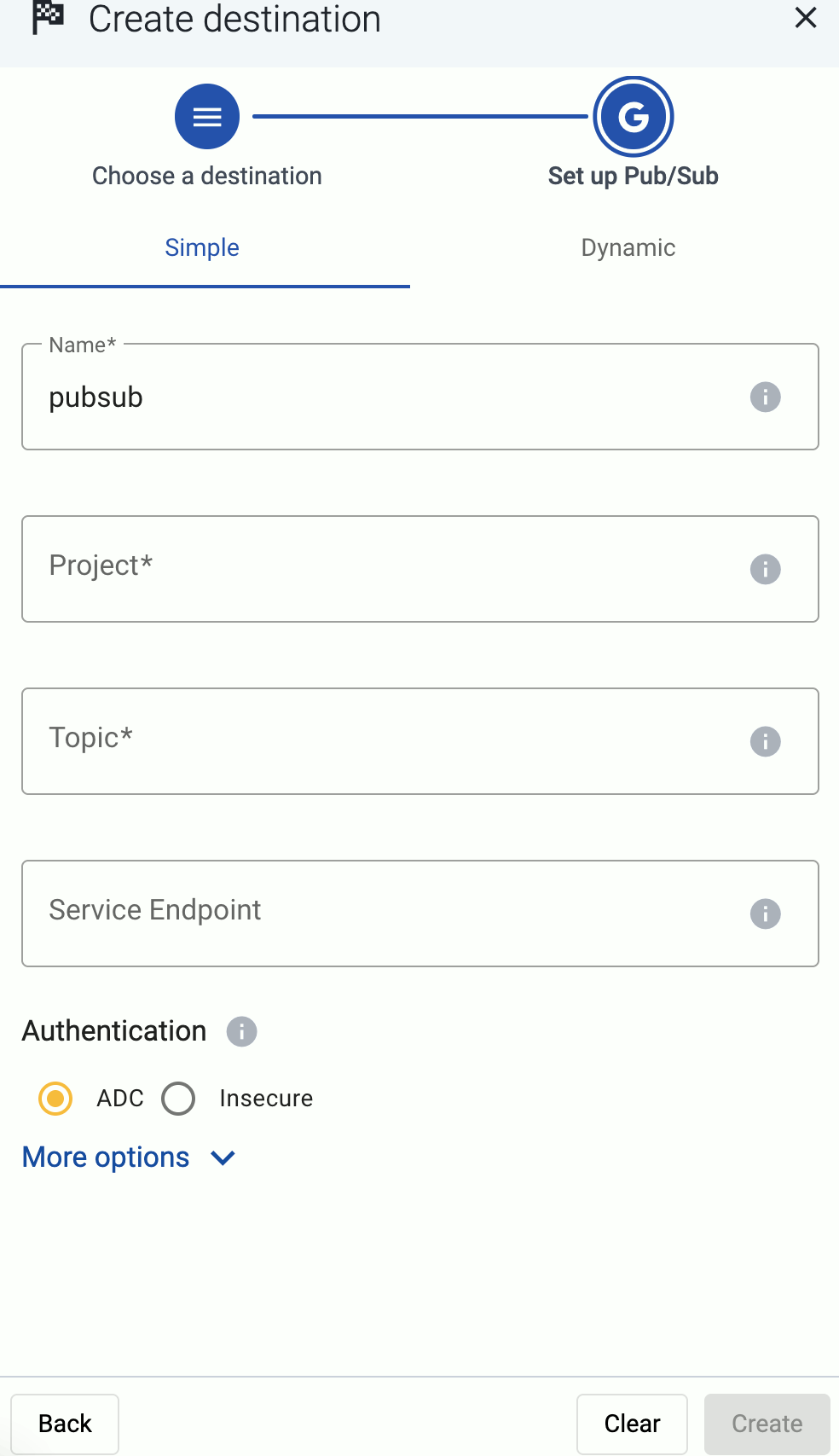

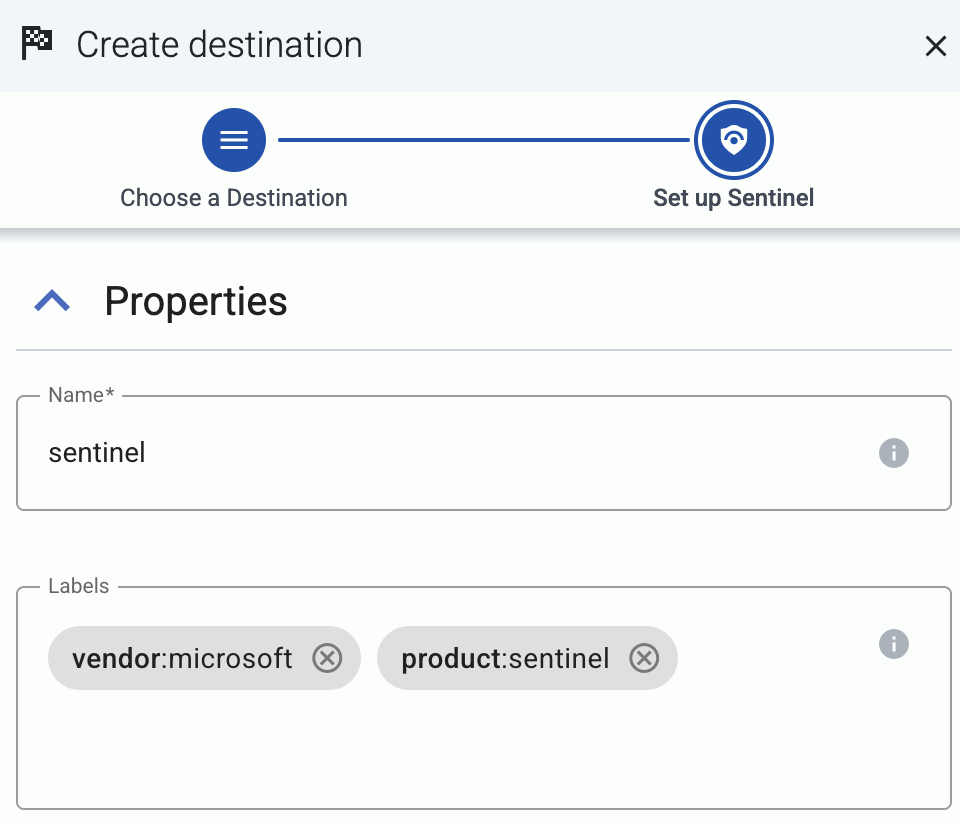

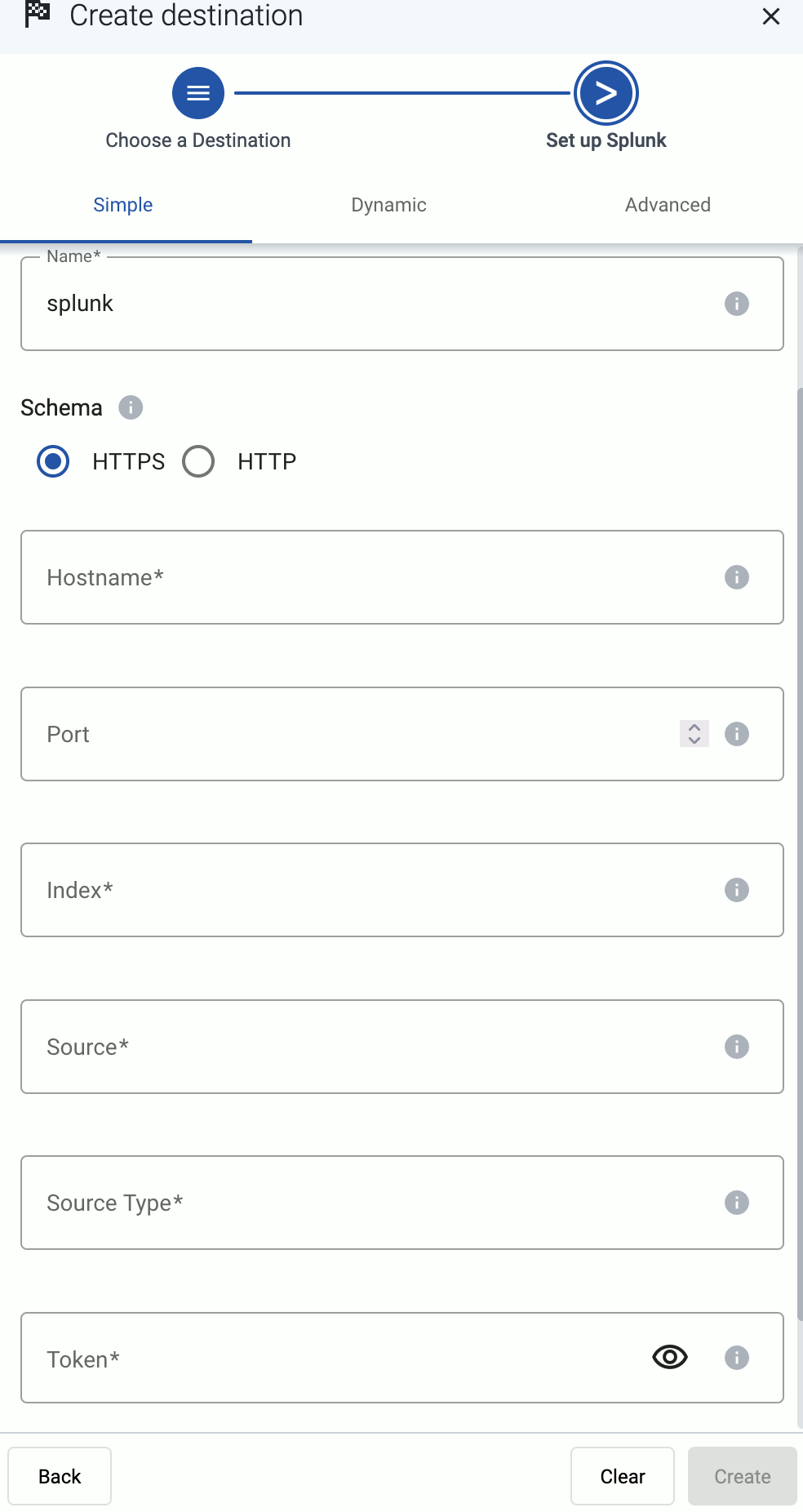

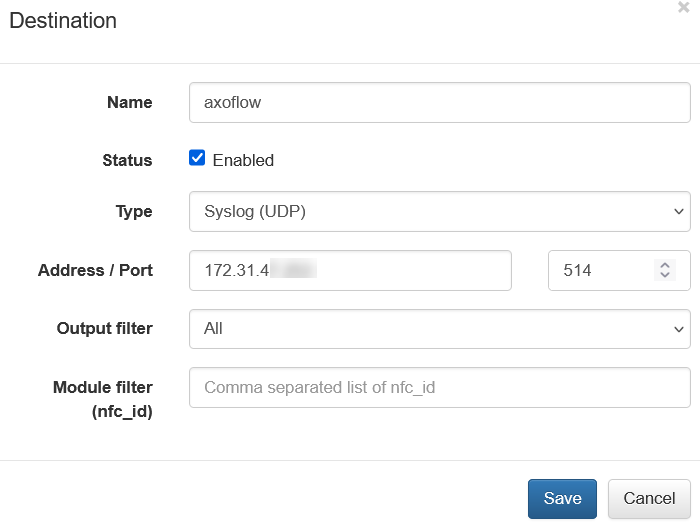

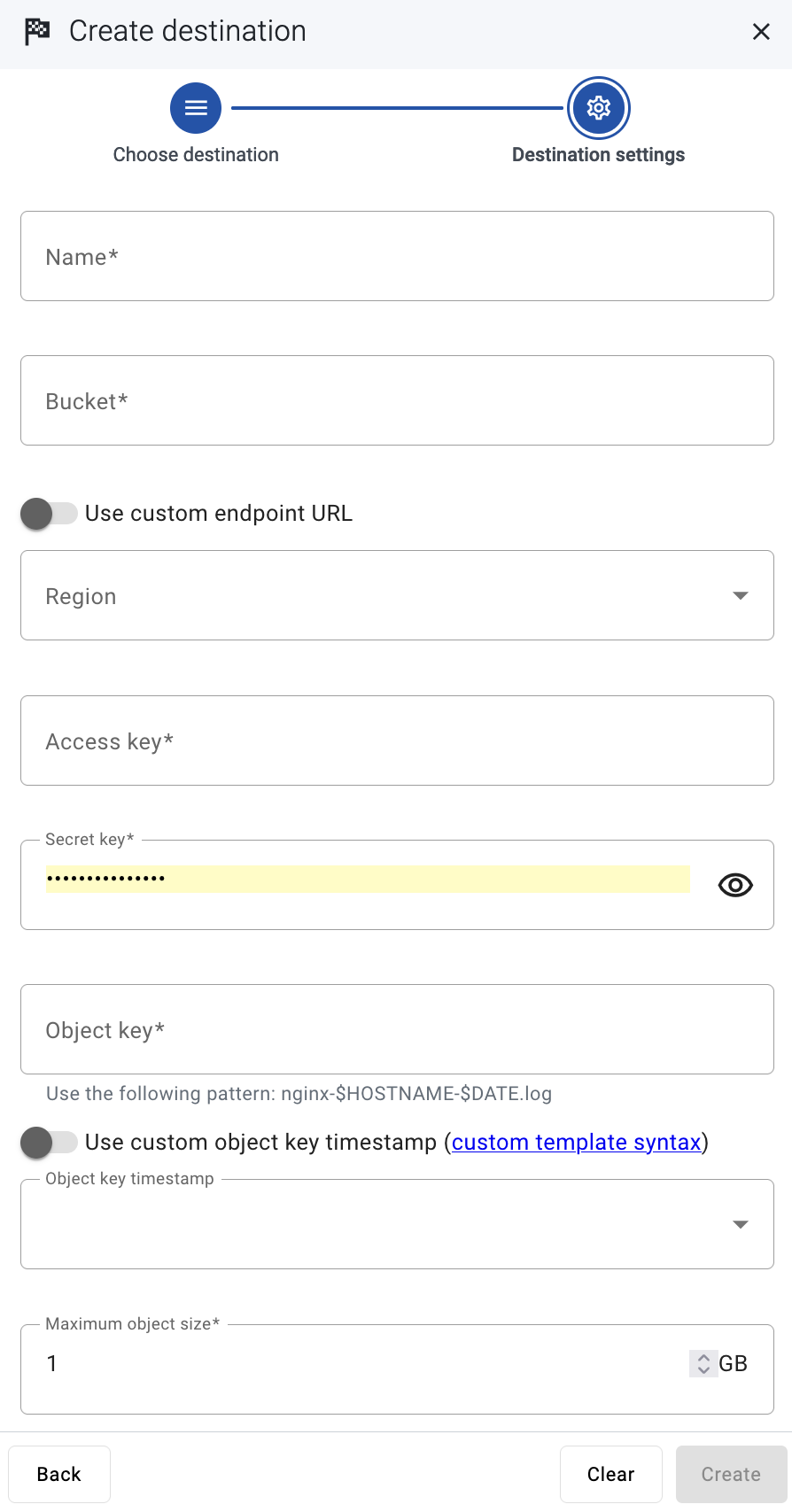

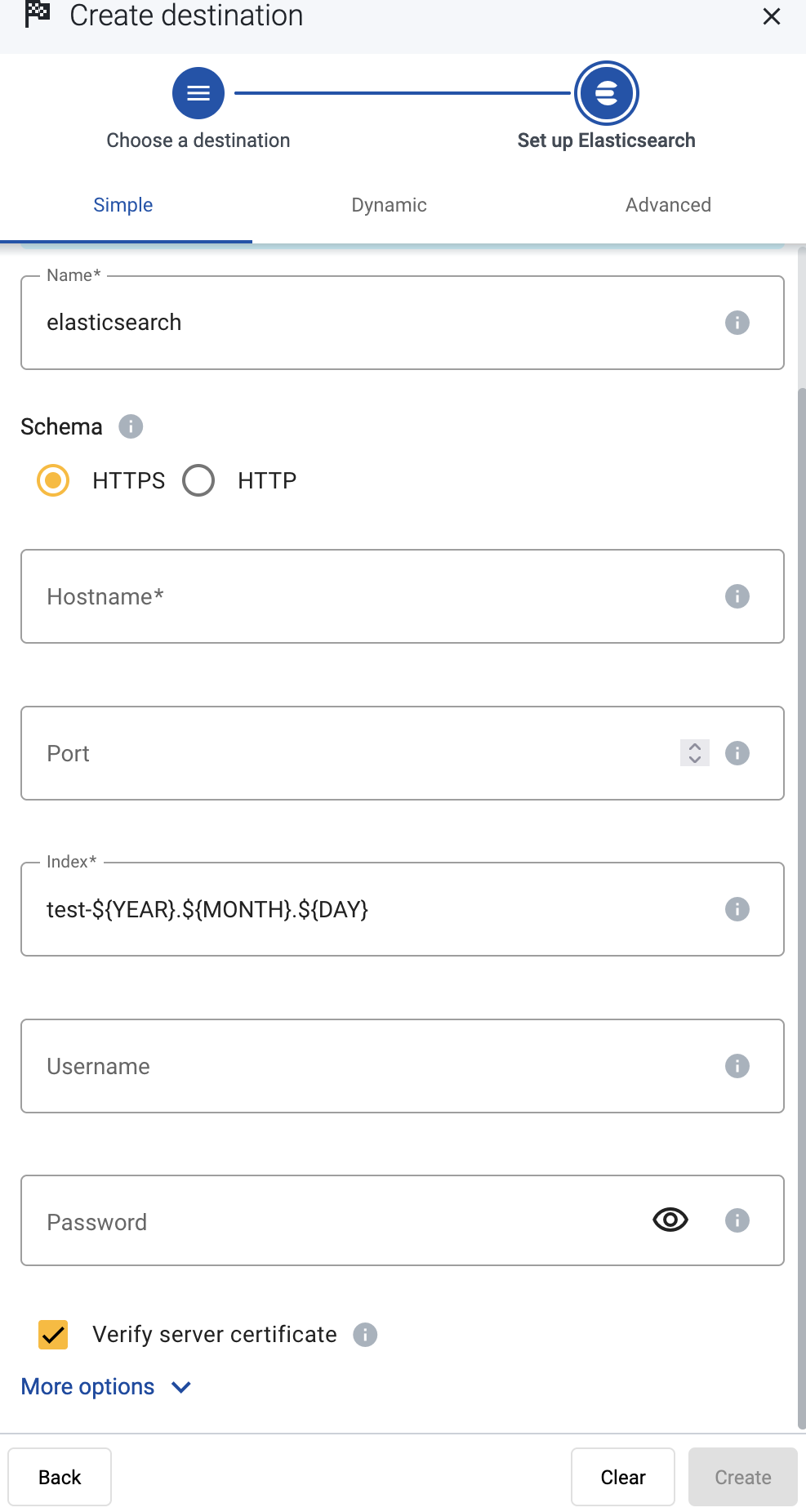

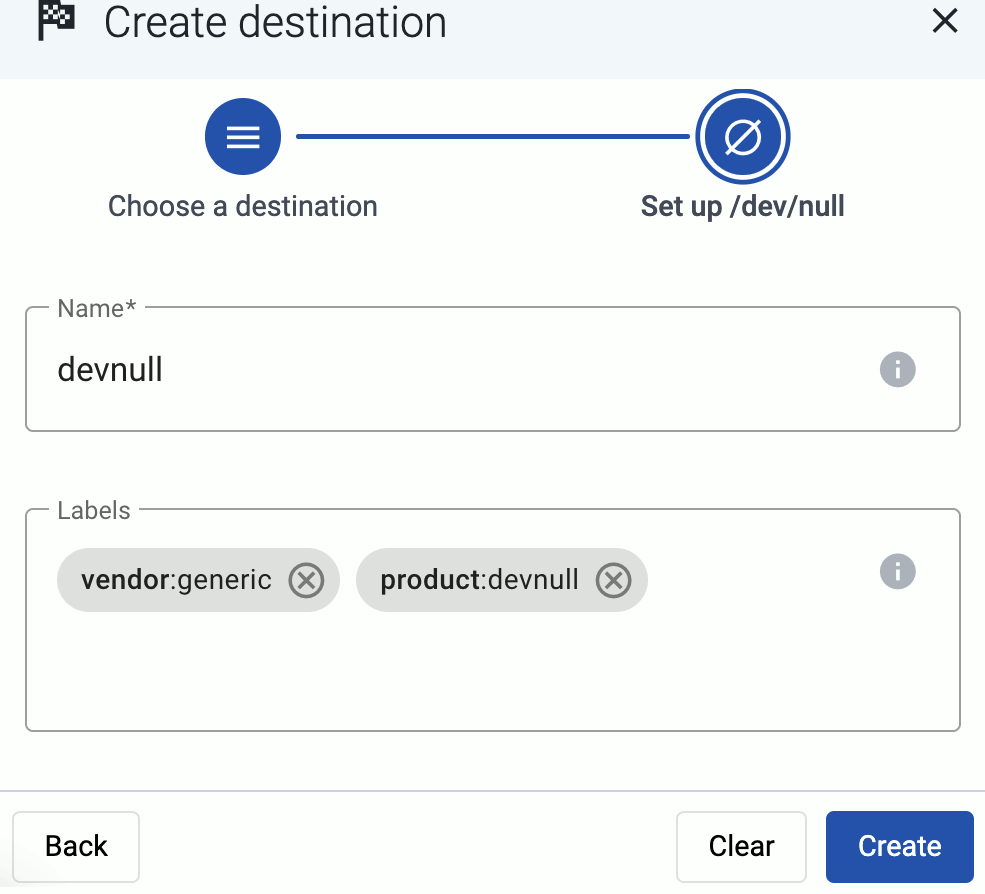

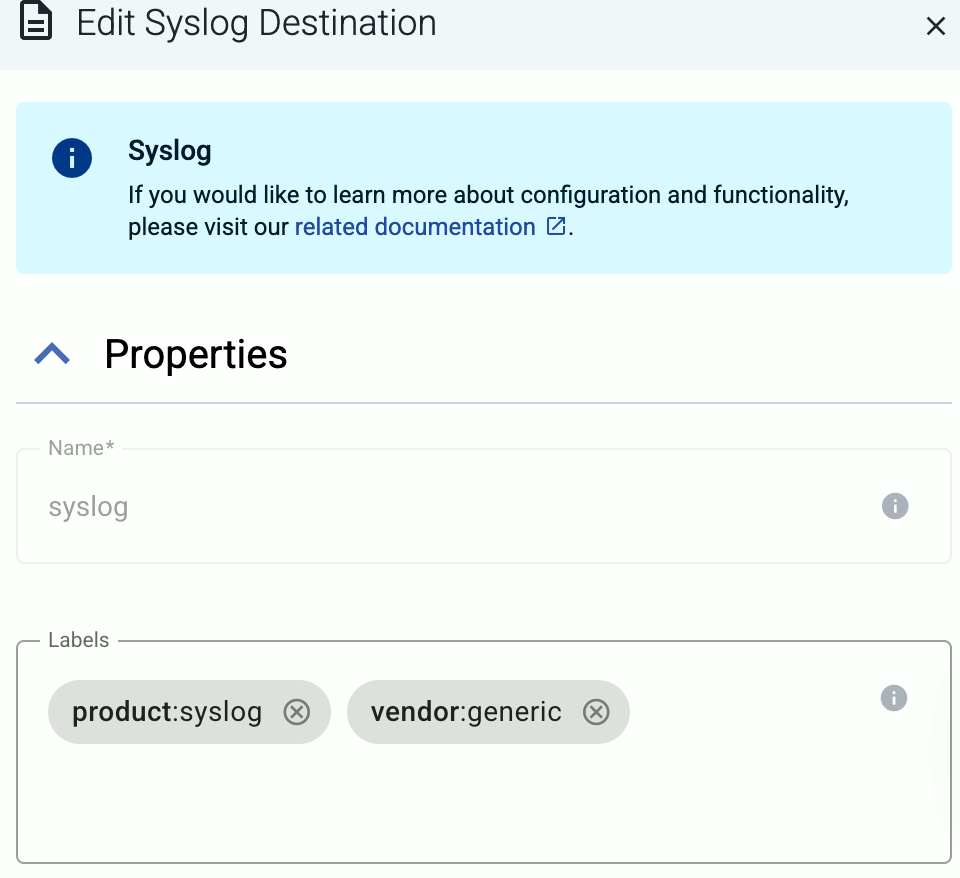

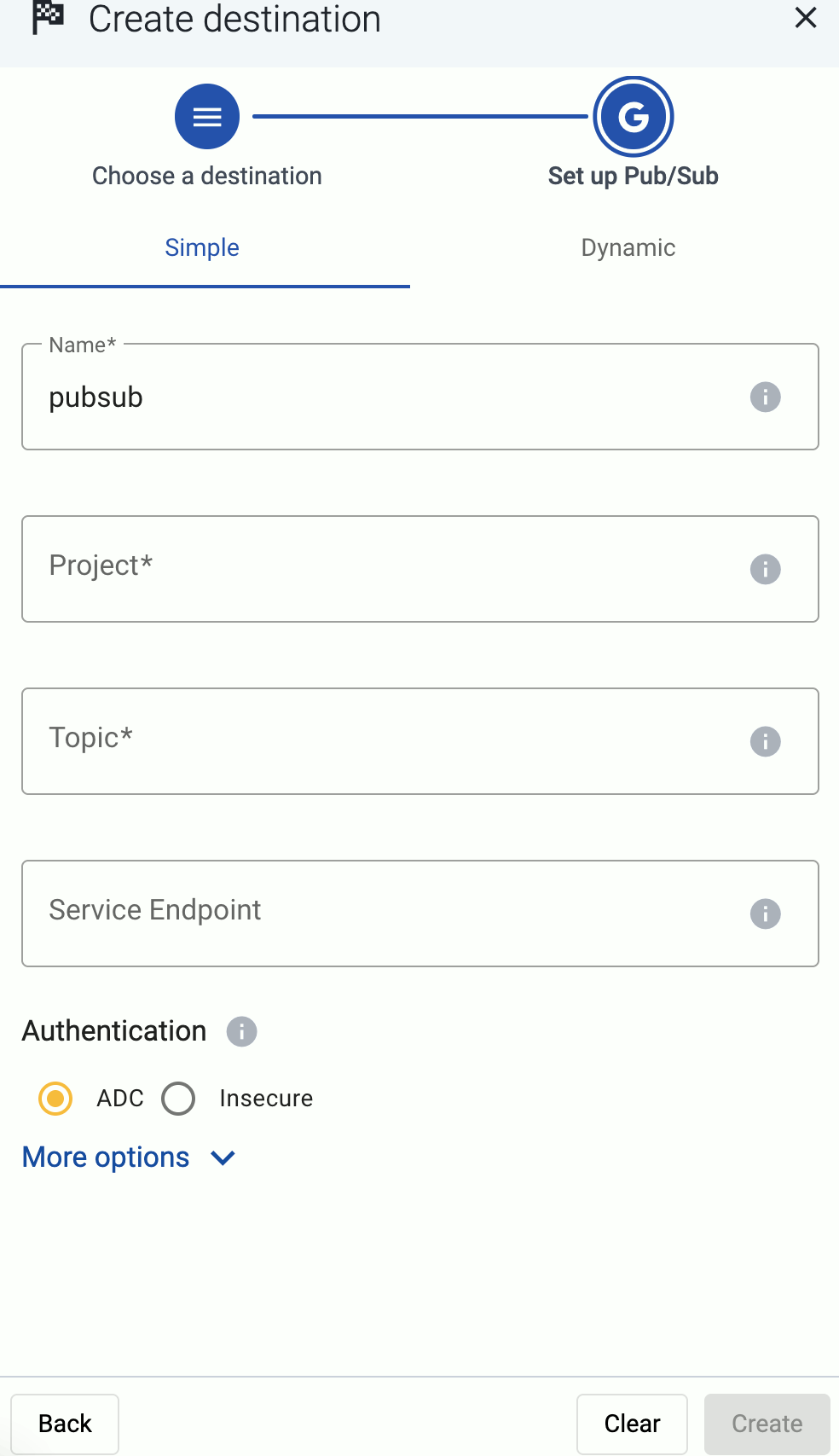

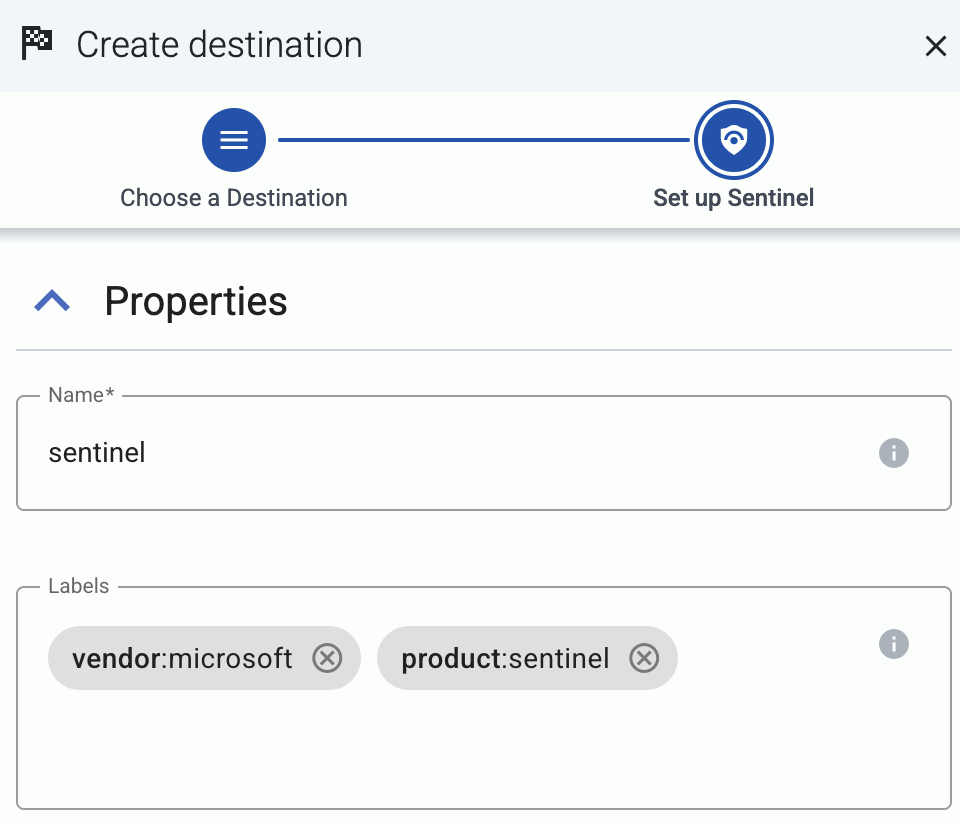

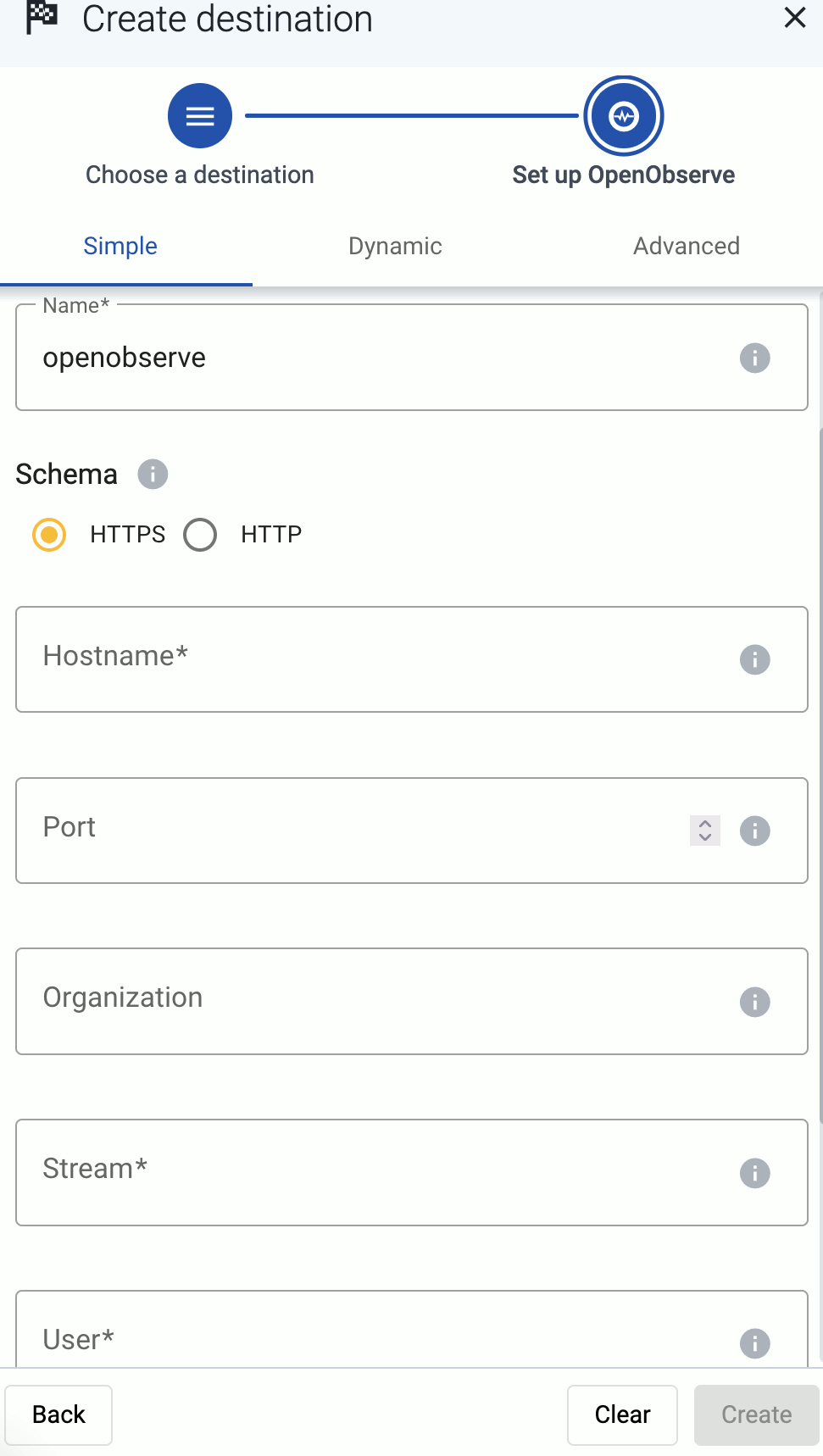

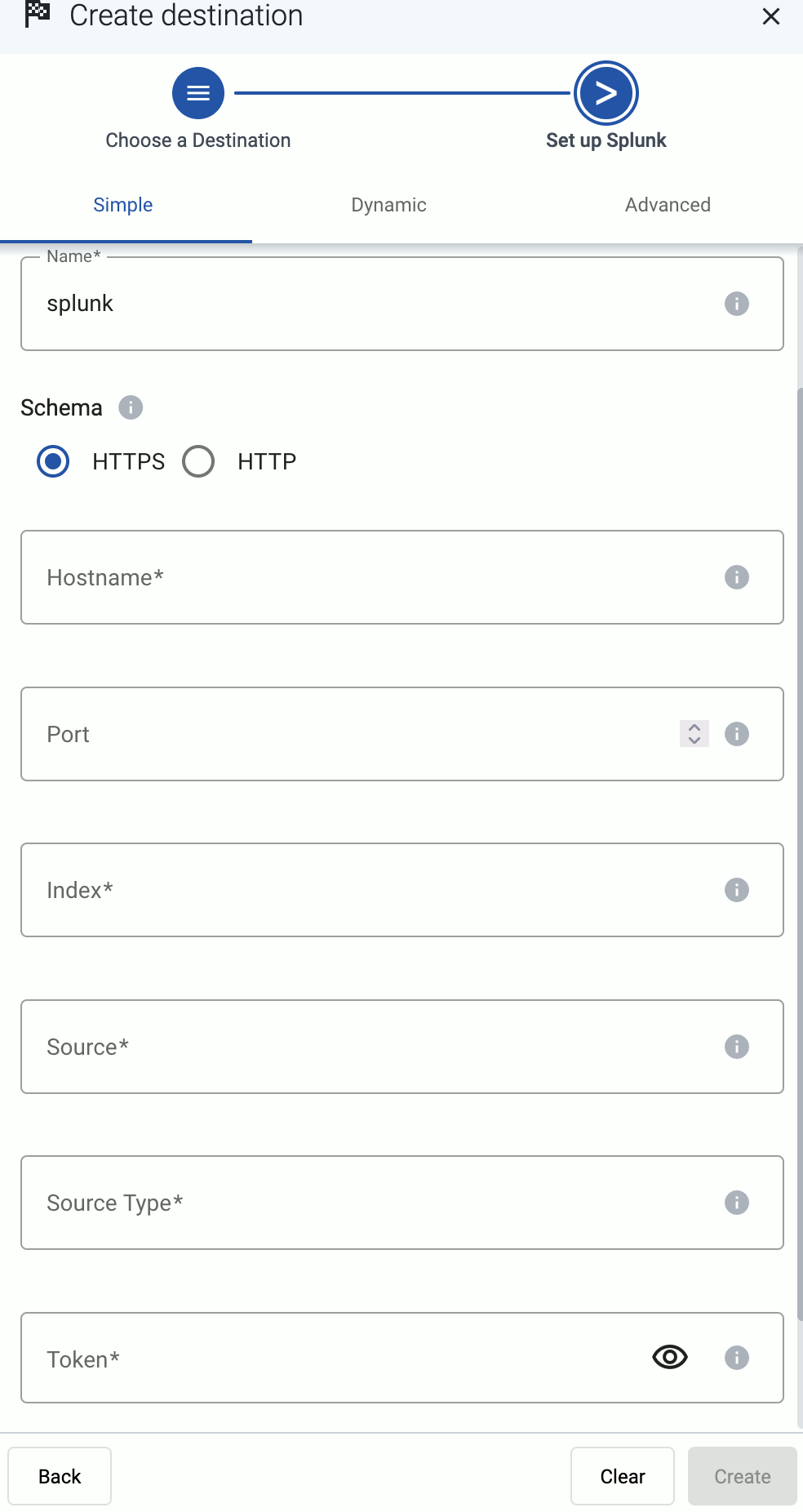

Add a destination

Add the destination where you’re sending your data. For a quick test, you can use a free Splunk or OpenObserve account.

Add a Splunk Cloud destination. For other destinations, see Destinations.

Prerequisites

-

Enable the HTTP Event Collector (HEC) on your Splunk deployment if needed. On Splunk Cloud Platform deployments, HEC is enabled by default.

-

Create a token for Axoflow to use in the destination. When creating the token, use the syslog source type.

For details, see Set up and use HTTP Event Collector in Splunk Web.

-

If you’re using AxoRouter, create the indexes where Axoflow sends the log data. Which indexes you need depends on your sources, but create at least the following event indexes: axoflow, infraops, netops, netfw, osnix (for unclassified messages). Check your sources in the Sources section for a detailed list of the indexes their data is sent to.
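Once the token and indexes exist, you can check them independently of Axoflow. The following Python sketch builds a request for Splunk's HEC event endpoint (`/services/collector/event`), authenticated with an `Authorization: Splunk <token>` header, with the target index and source type named in the JSON body. The host name, port 8088, and token are placeholders to adjust for your deployment; the sketch only builds the request, and sending it is left as commented-out lines.

```python
import json
import urllib.request


def build_hec_request(host: str, token: str, index: str,
                      sourcetype: str = "syslog",
                      port: int = 8088) -> urllib.request.Request:
    """Build (but do not send) a Splunk HEC request carrying one test event."""
    url = f"https://{host}:{port}/services/collector/event"
    payload = {
        "event": "Axoflow HEC connectivity test",
        "index": index,            # the index must already exist in Splunk
        "sourcetype": sourcetype,  # the source type chosen for the token
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder values: replace with your Splunk host, HEC token, and index.
    req = build_hec_request("your-splunk-tenant-id.splunkcloud.com",
                            "YOUR-HEC-TOKEN", "netops")
    print(req.get_method(), req.full_url)
    # To actually send it:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, resp.read().decode())
```

Free Splunk Cloud accounts use self-signed certificates, so actually sending this request may fail certificate verification unless you pass a suitable SSL context to `urlopen`.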

Steps

-

Create a new destination.

- Open the Axoflow Console.

- Select Topology.

- Select + > Destination.

-

Configure the destination.

-

Select Splunk.

-

Select Dynamic. This will allow you to set a default index, source, and source type for messages that aren’t automatically identified.

-

Enter your Splunk URL into the Hostname field, for example, <your-splunk-tenant-id>.splunkcloud.com for Splunk Cloud Platform free trials, or <your-splunk-tenant-id>.splunkcloud.com for Splunk Cloud Platform instances.

-

Enter the name of the Default Index. The data will be sent into this index if no other index is set during the processing of the message (based on automatic classification, or by the processing steps of the Flow). Make sure that the index exists in Splunk.

-

Enter the Default Source and Default Source Type. These will be assigned to the messages that have no source or source type set during the processing of the message (based on automatic classification, or by the processing steps of the Flow).

-

Enter the token you’ve created into the Token field.

-

Disable the Verify server certificate option unless your deployment has a valid, non-self-signed certificate. Free Splunk Cloud accounts have self-signed certificates.

-

(Optional) You can set other options as needed for your environment. For details, see Splunk.

-

Select Create.

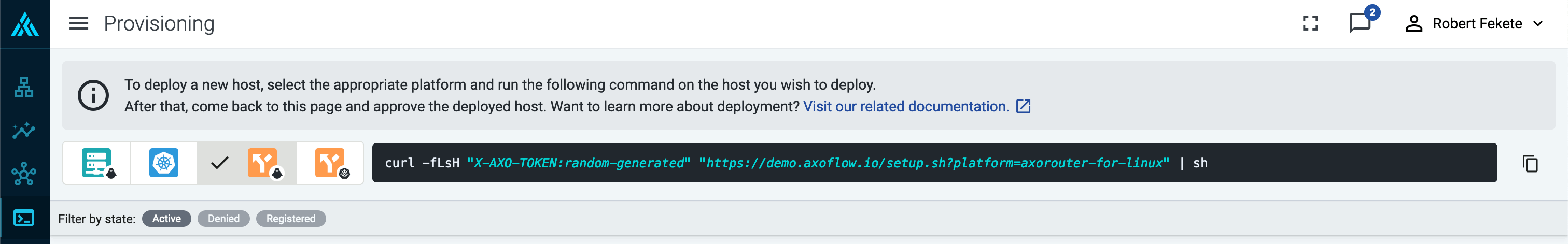

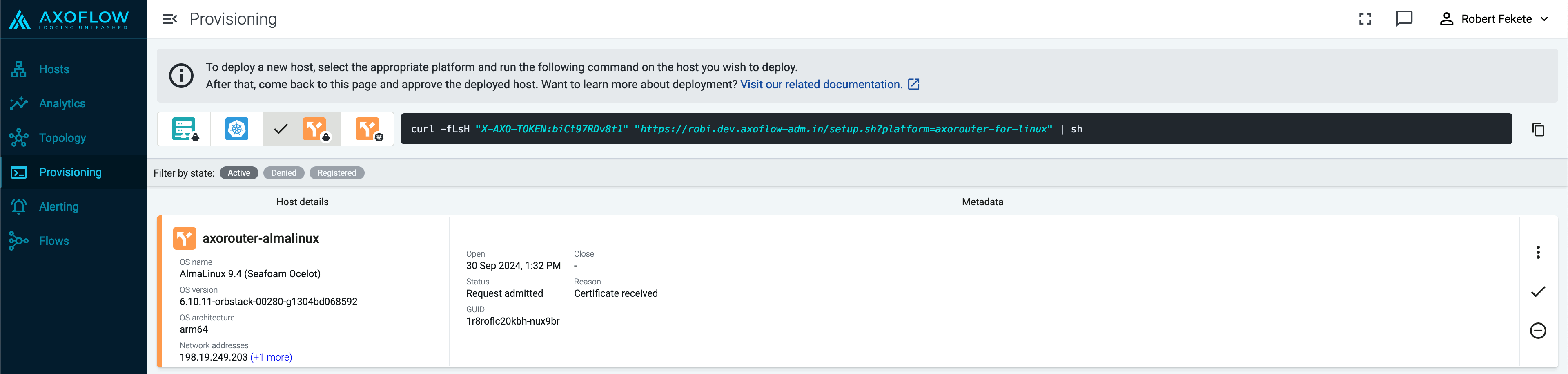

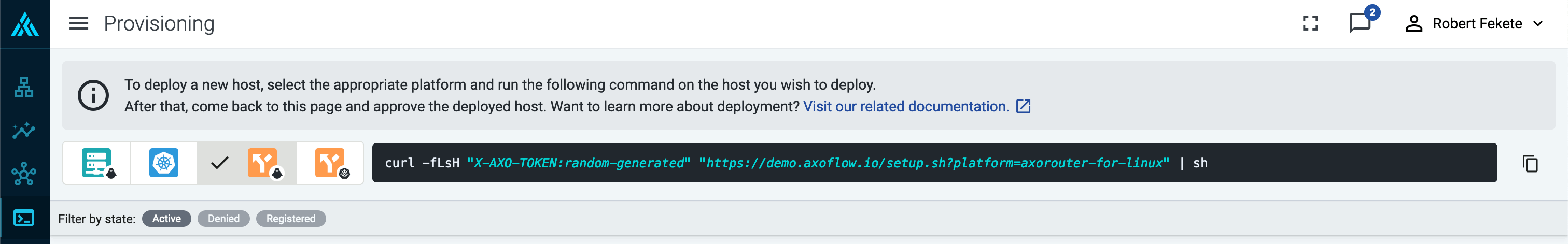

Deploy an AxoRouter instance

Deploy an AxoRouter instance that will route, curate, and enrich your log data.

Note

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

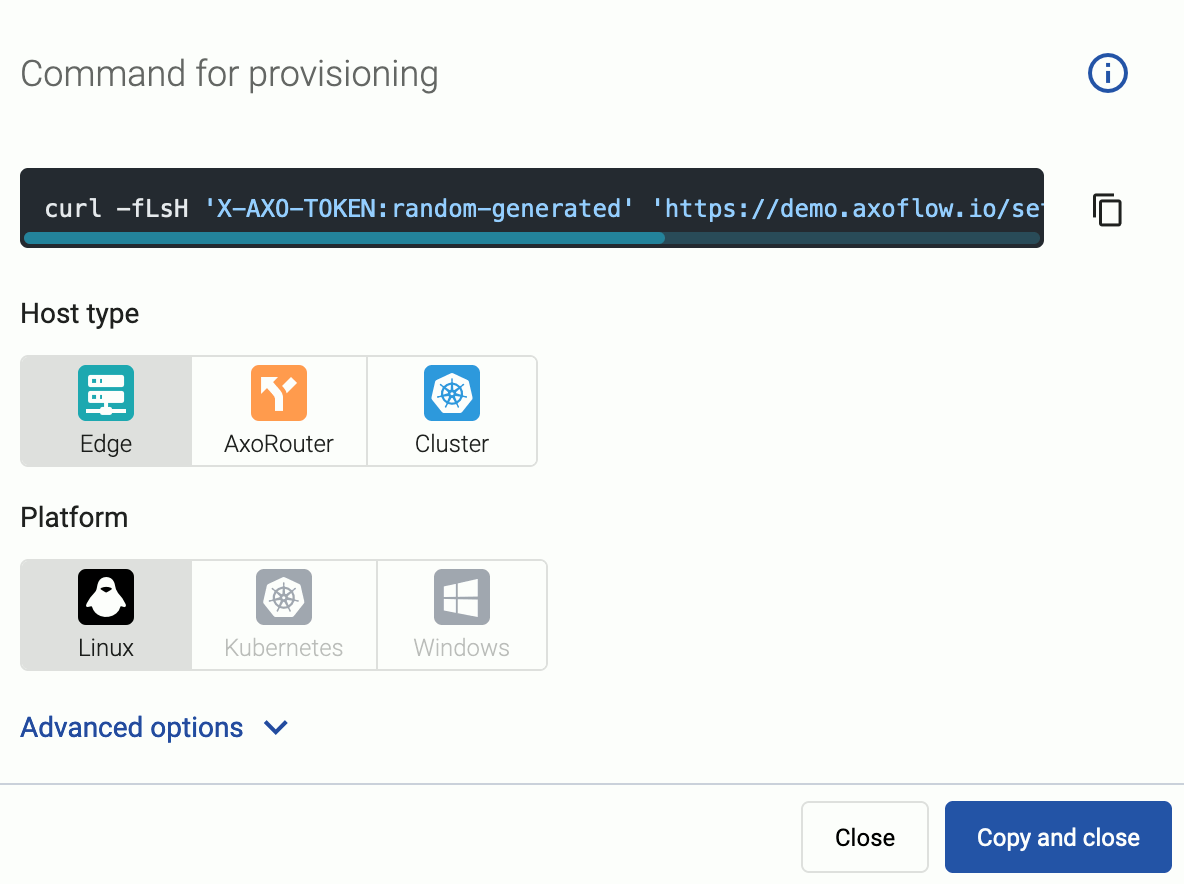

Deploy AxoRouter on Linux. For other platforms, see AxoRouter.

-

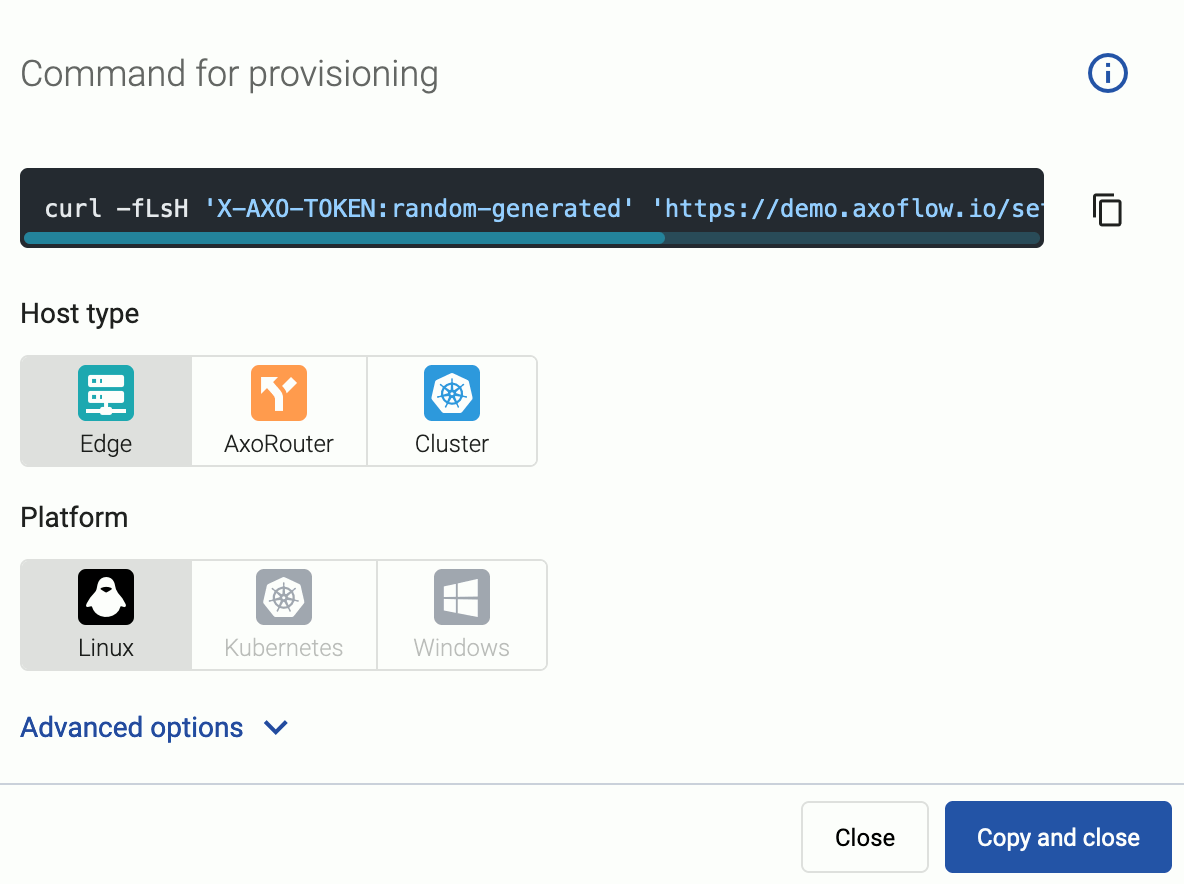

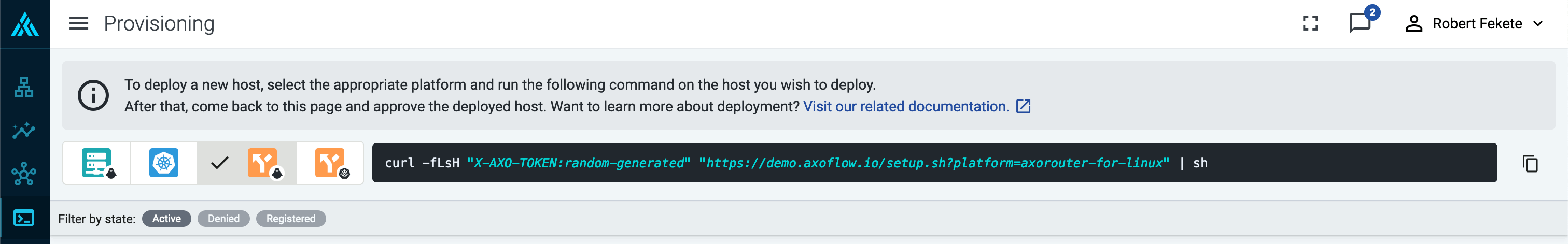

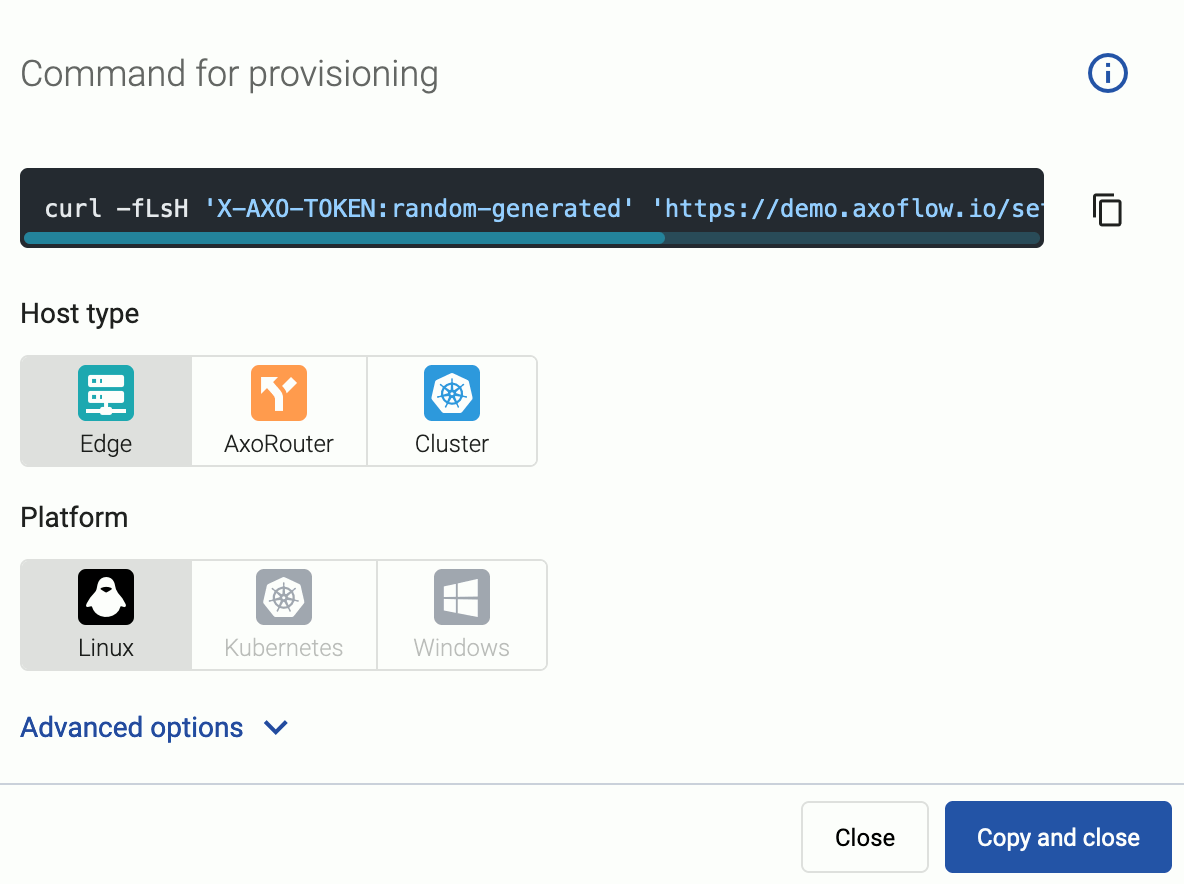

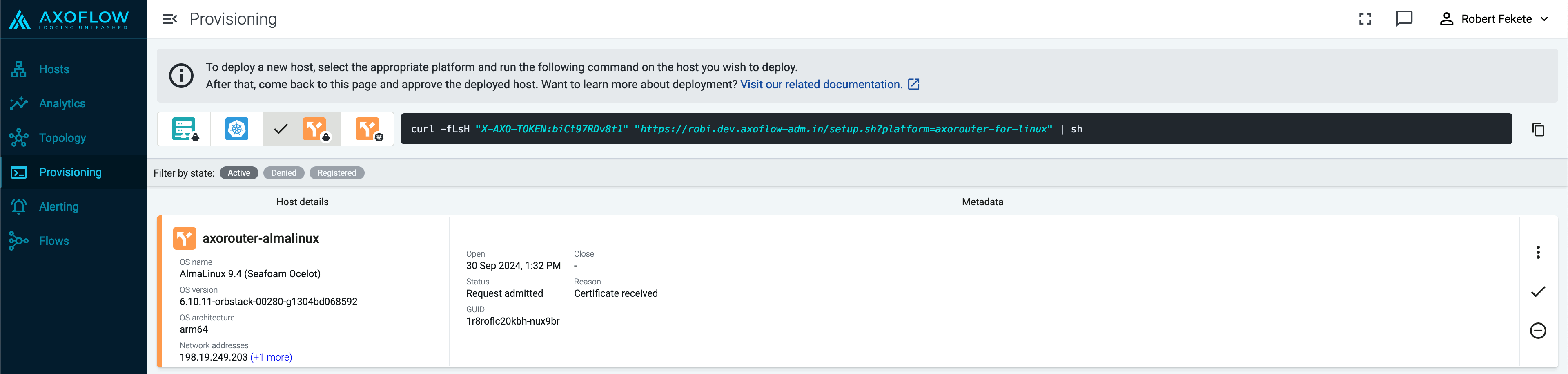

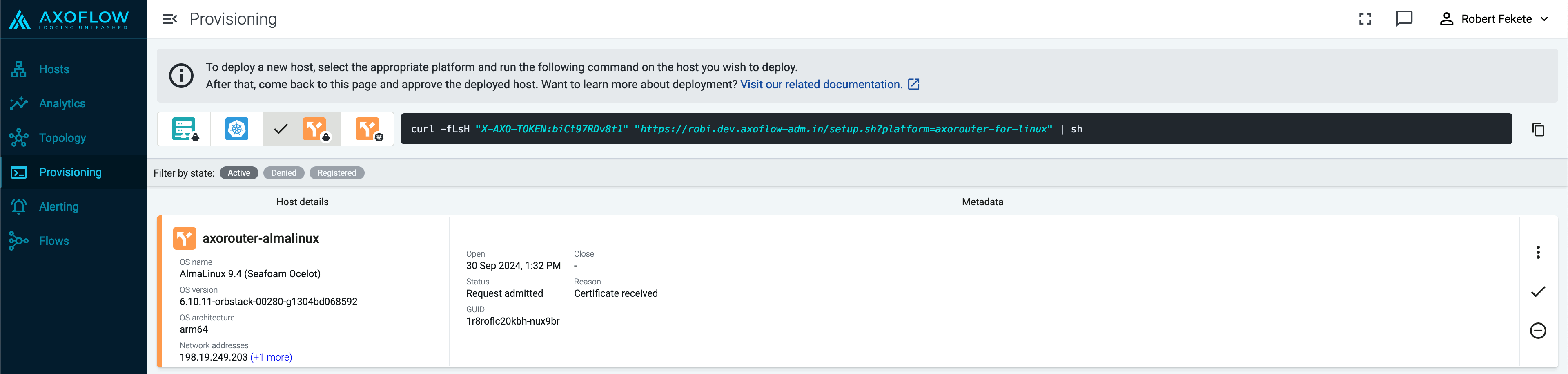

Select Provisioning > Select type and platform.

-

Select the type (AxoRouter) and platform (Linux). The one-liner installation command is displayed.

If needed, set the Advanced options (for example, proxy settings) to modify the installation parameters. Usually, you don’t have to use advanced options unless the Axoflow support team instructs you to do so.

-

Open a terminal on the host where you want to install AxoRouter.

-

Run the one-liner, then follow the on-screen instructions.

Note

Running the provisioning command with sudo would mask environment variables of the calling shell. Either start the whole procedure from a root shell, or let the install script call sudo when it needs to. In other words: don’t add the sudo command to the provisioning command.

Example output:

```
Do you want to install AxoRouter now? [Y]
y
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  4142  100  4142    0     0  19723      0 --:--:-- --:--:-- --:--:-- 19818
Verifying packages...
Preparing packages...
axorouter-0.40.0-1.aarch64
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 31.6M  100 31.6M    0     0  2092k      0  0:00:15  0:00:15 --:--:-- 2009k
Verifying packages...
Preparing packages...
axolet-0.40.0-1.aarch64
Created symlink /etc/systemd/system/multi-user.target.wants/axolet.service → /usr/lib/systemd/system/axolet.service.
Now continue with onboarding the host on the Axoflow web UI.
```

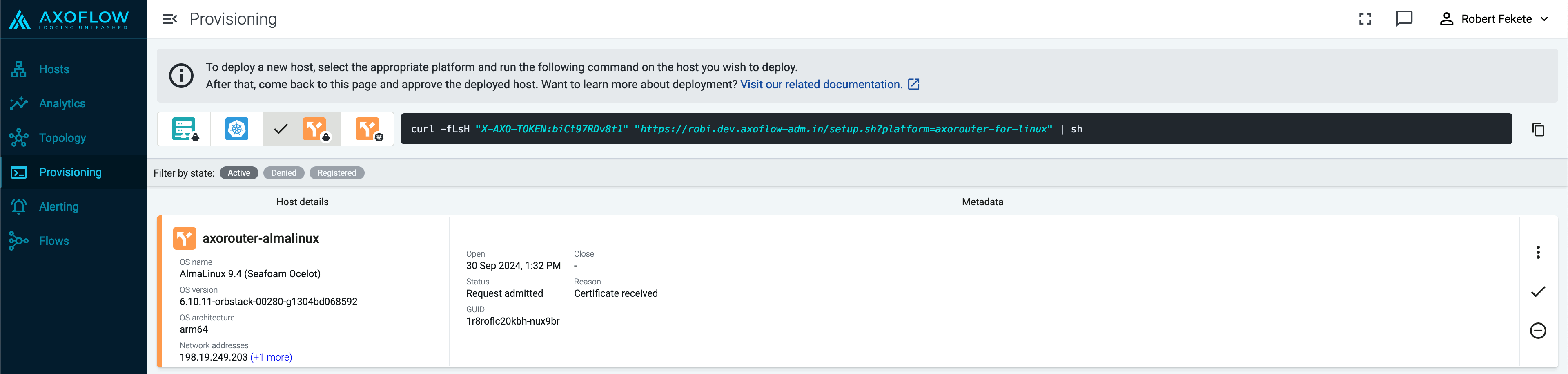

-

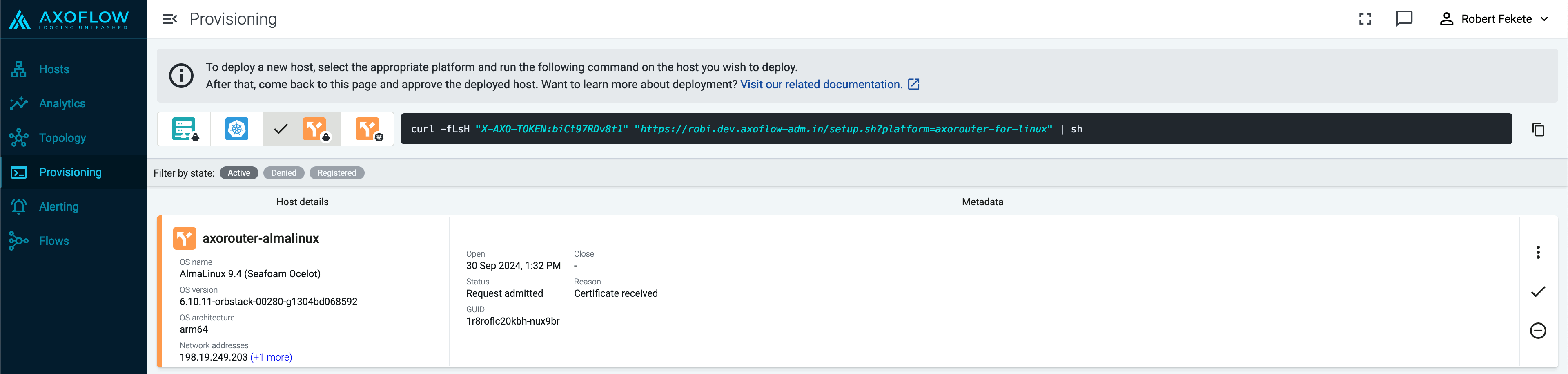

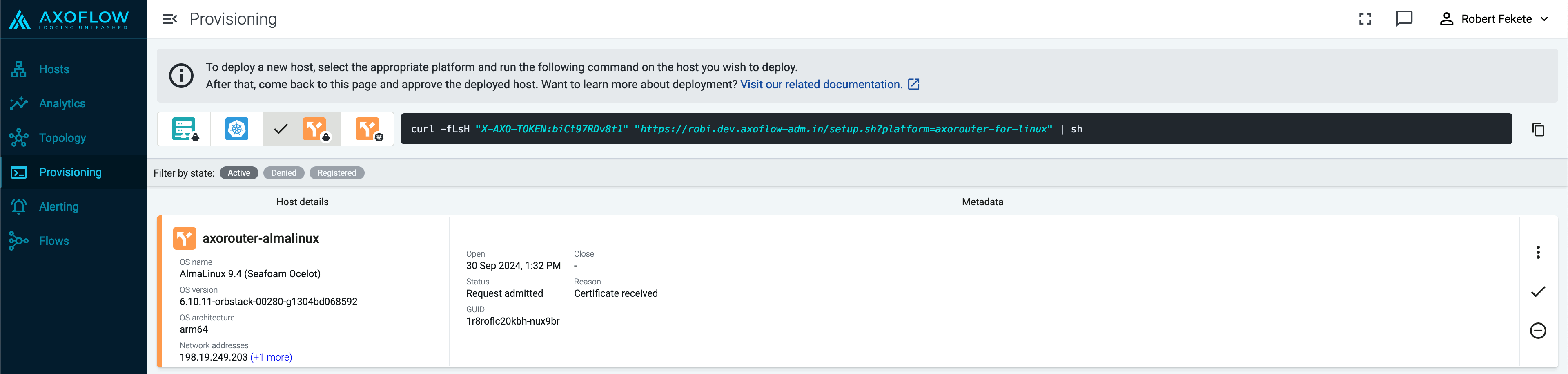

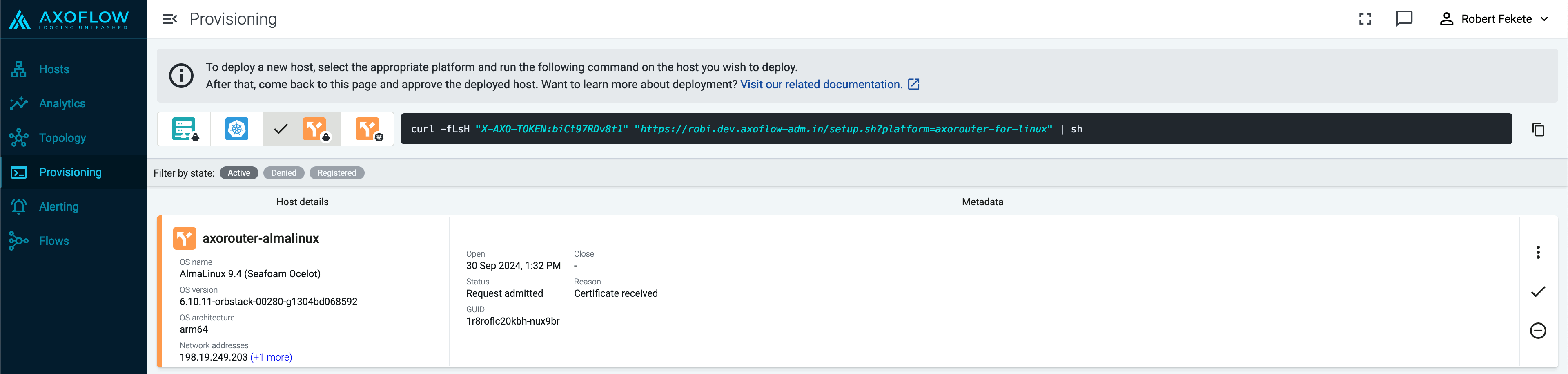

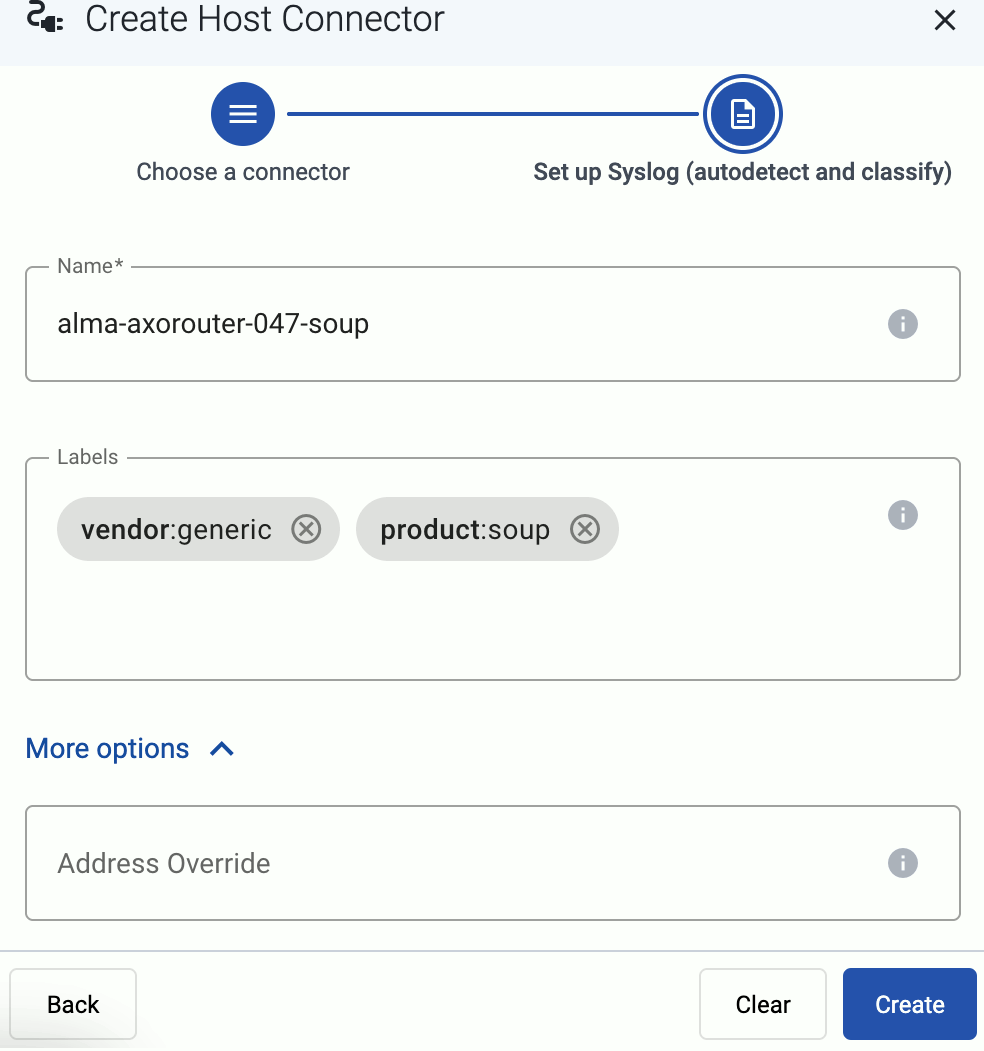

Register the host.

-

Reload the Provisioning page. There should be a registration request for the new AxoRouter deployment. Select ✓.

-

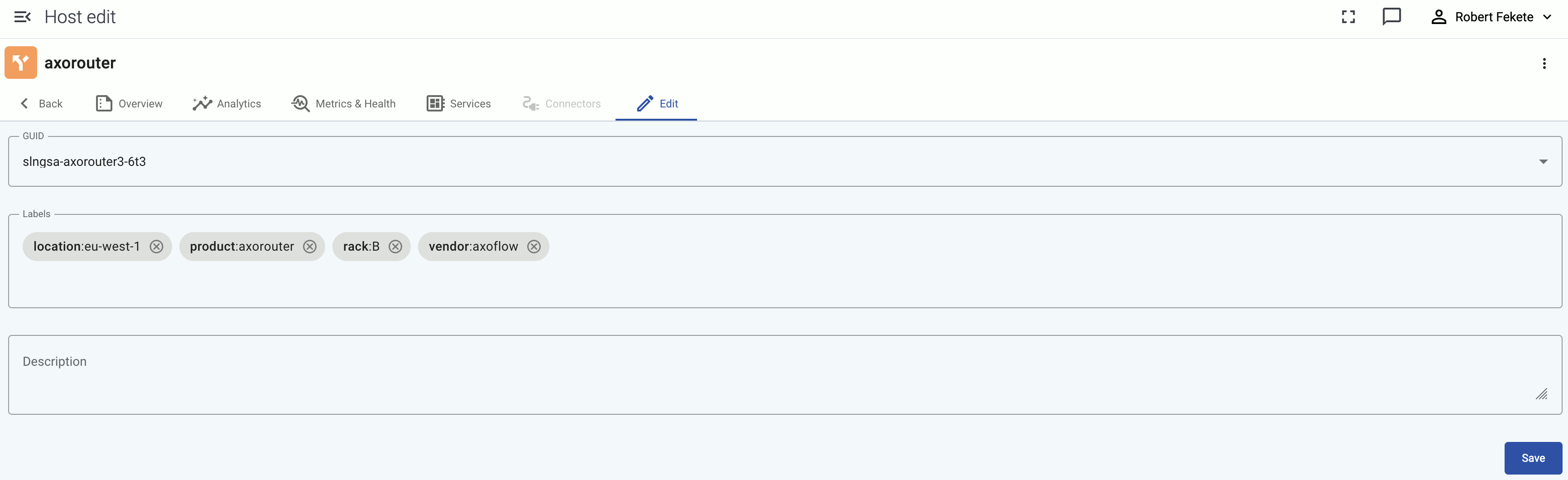

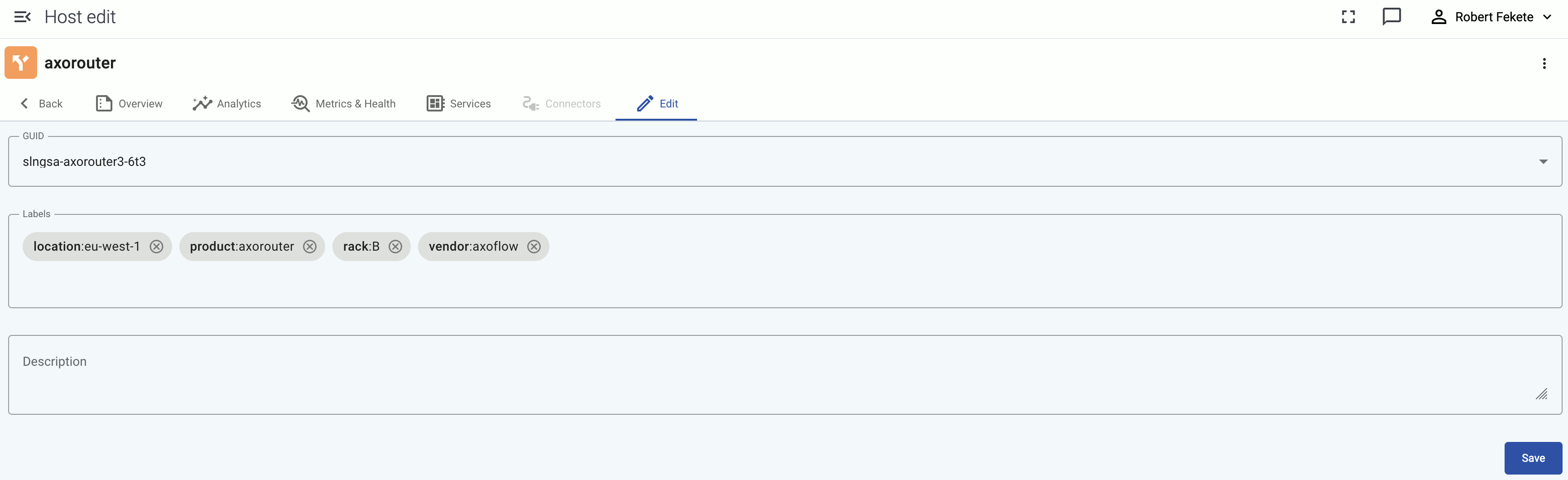

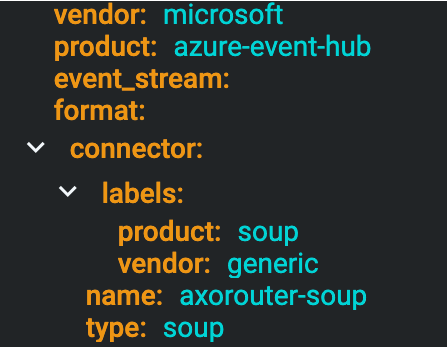

Select Register to register the host. You can add a description and labels (in label:value format) to the host.

-

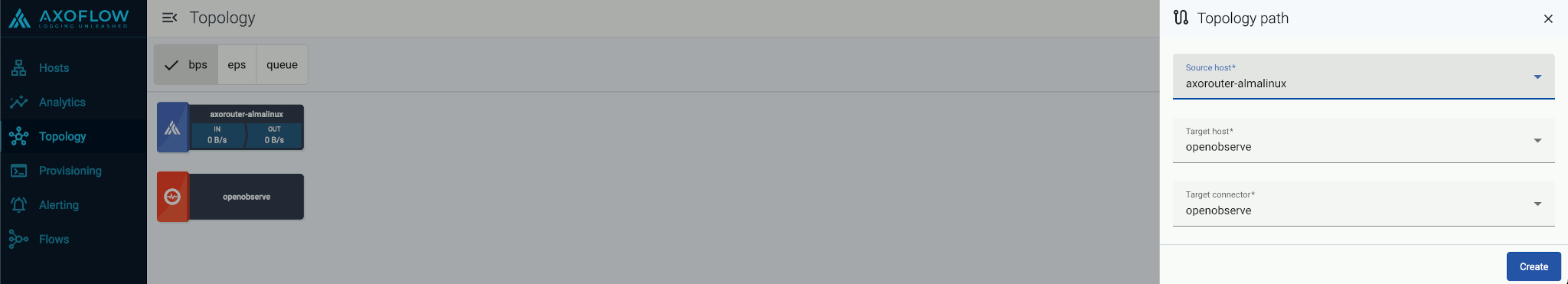

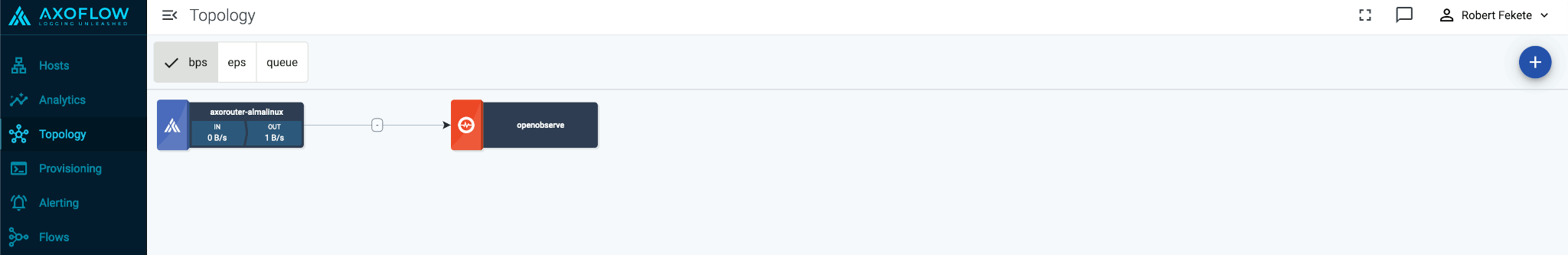

Select the Topology page. The new AxoRouter instance is displayed.

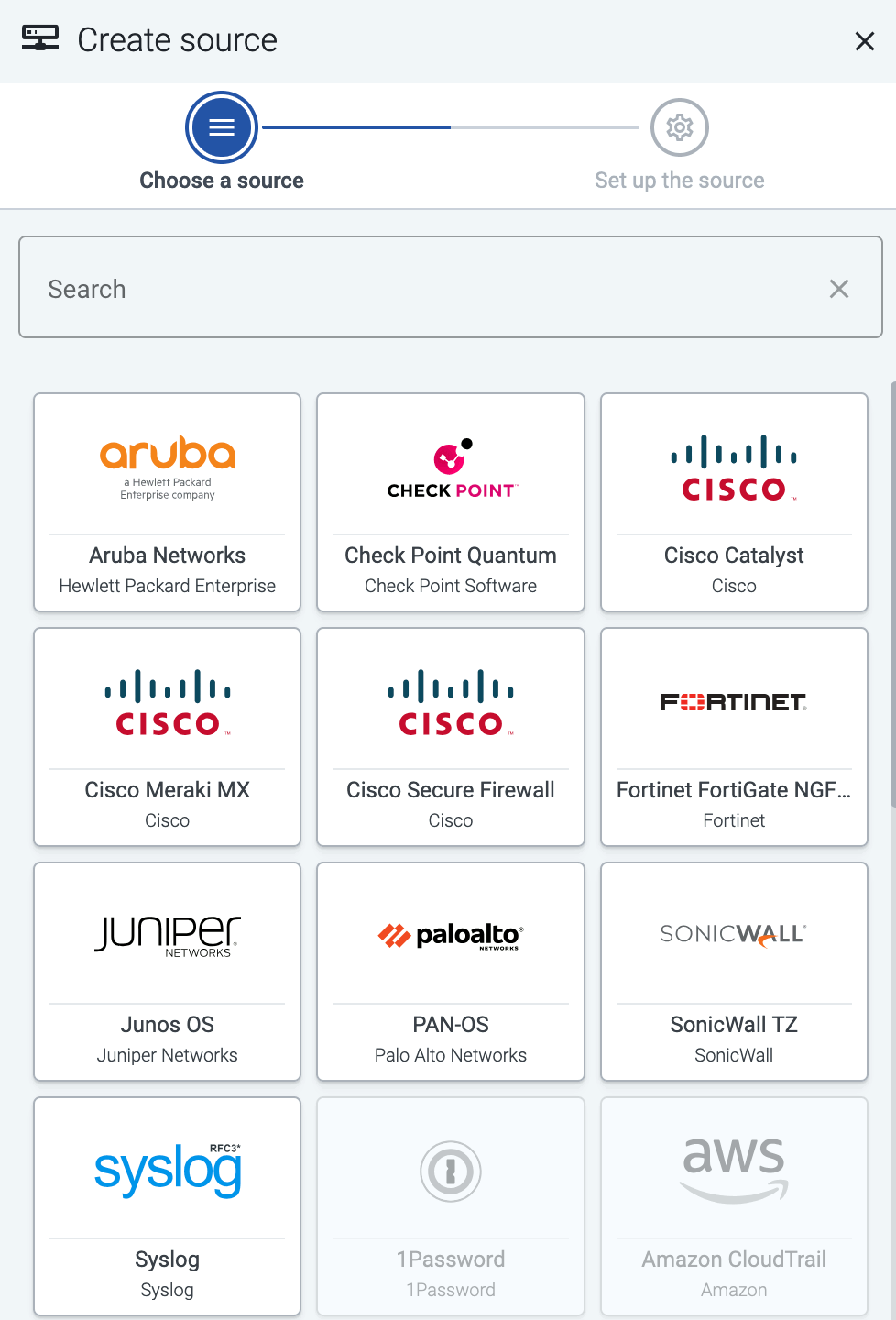

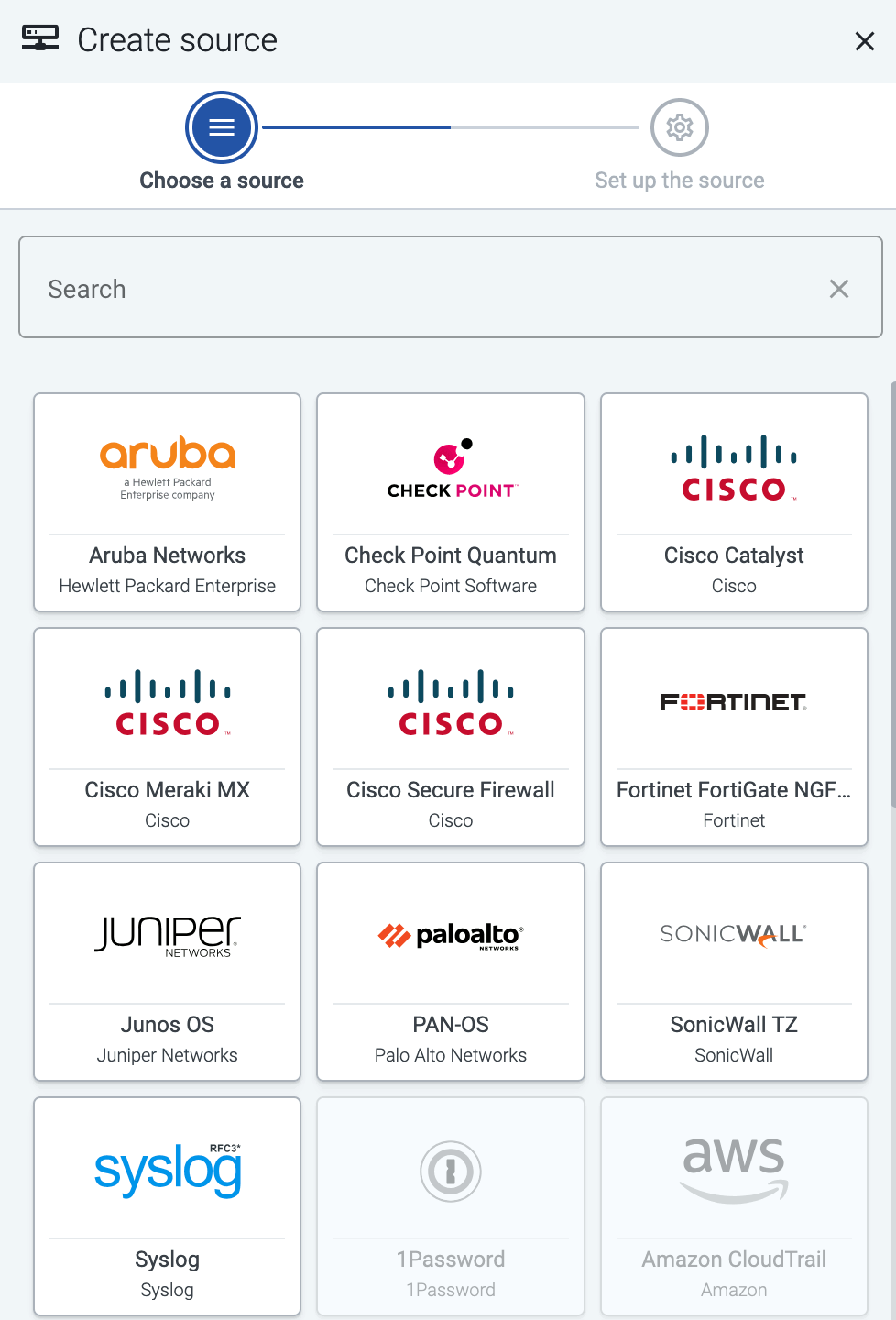

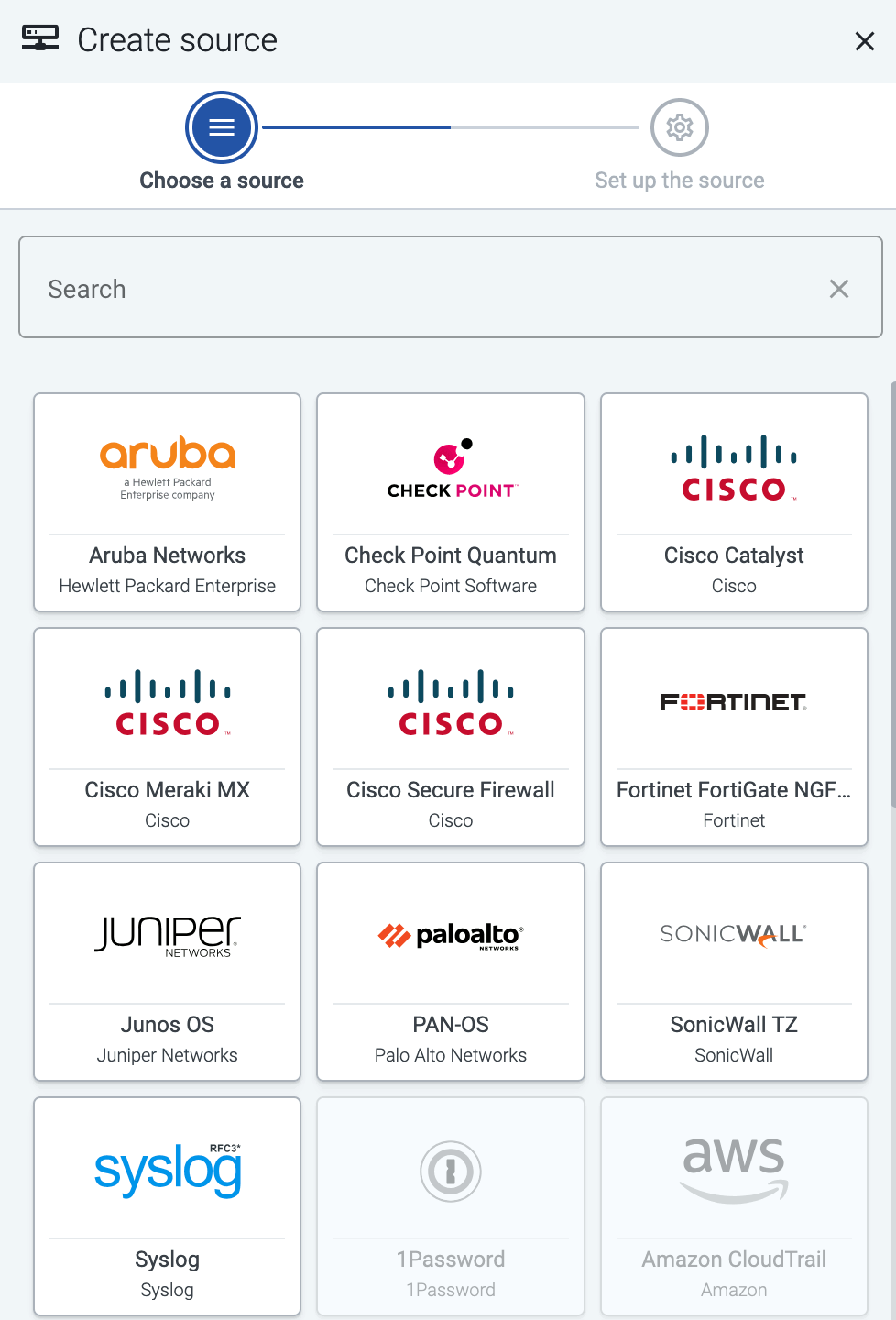

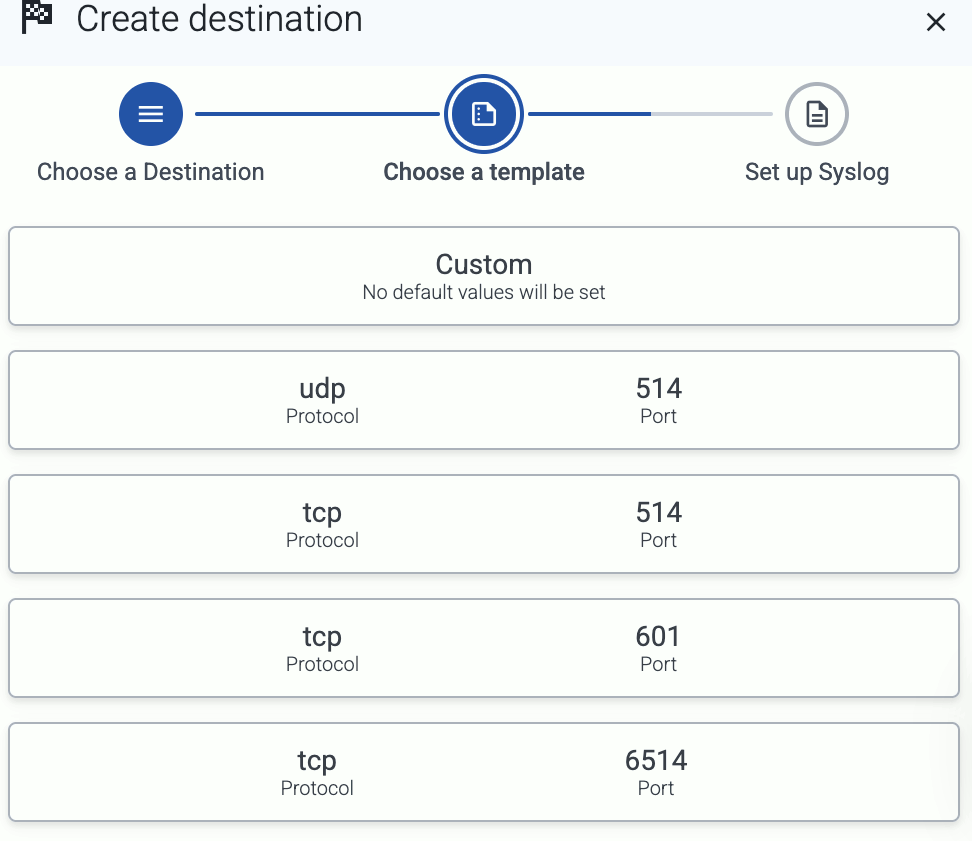

Add a source

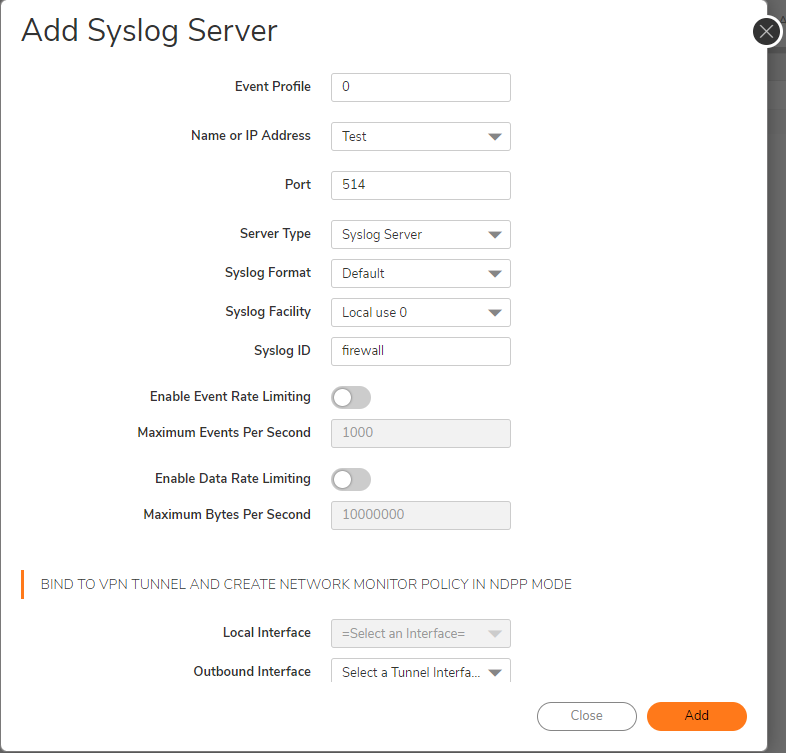

Configure a host to send data to AxoRouter.

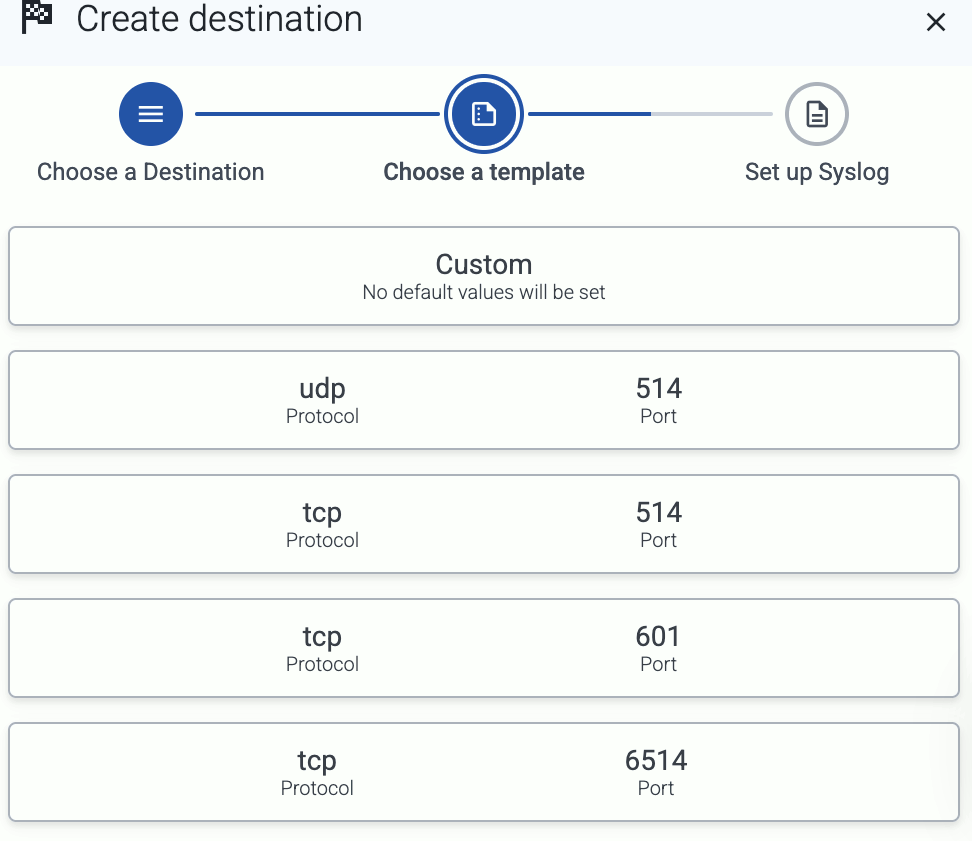

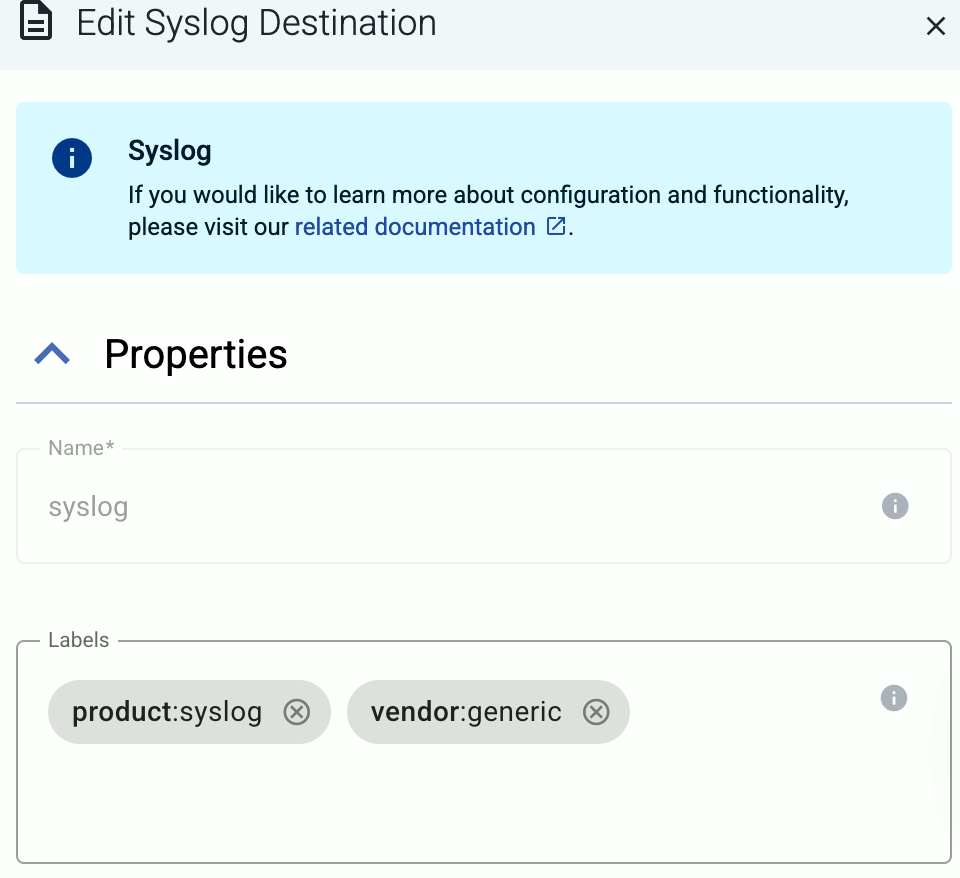

Configure a generic syslog host. For sources that are specifically supported by Axoflow, see Sources.

-

Log in to your device. You need administrator privileges to perform the configuration.

-

If needed, enable syslog forwarding on the device.

-

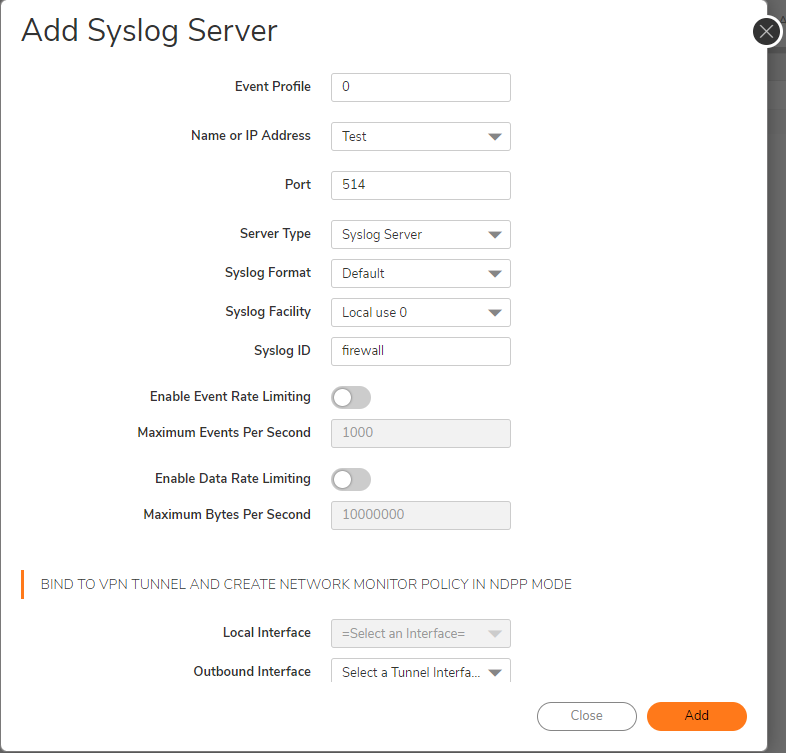

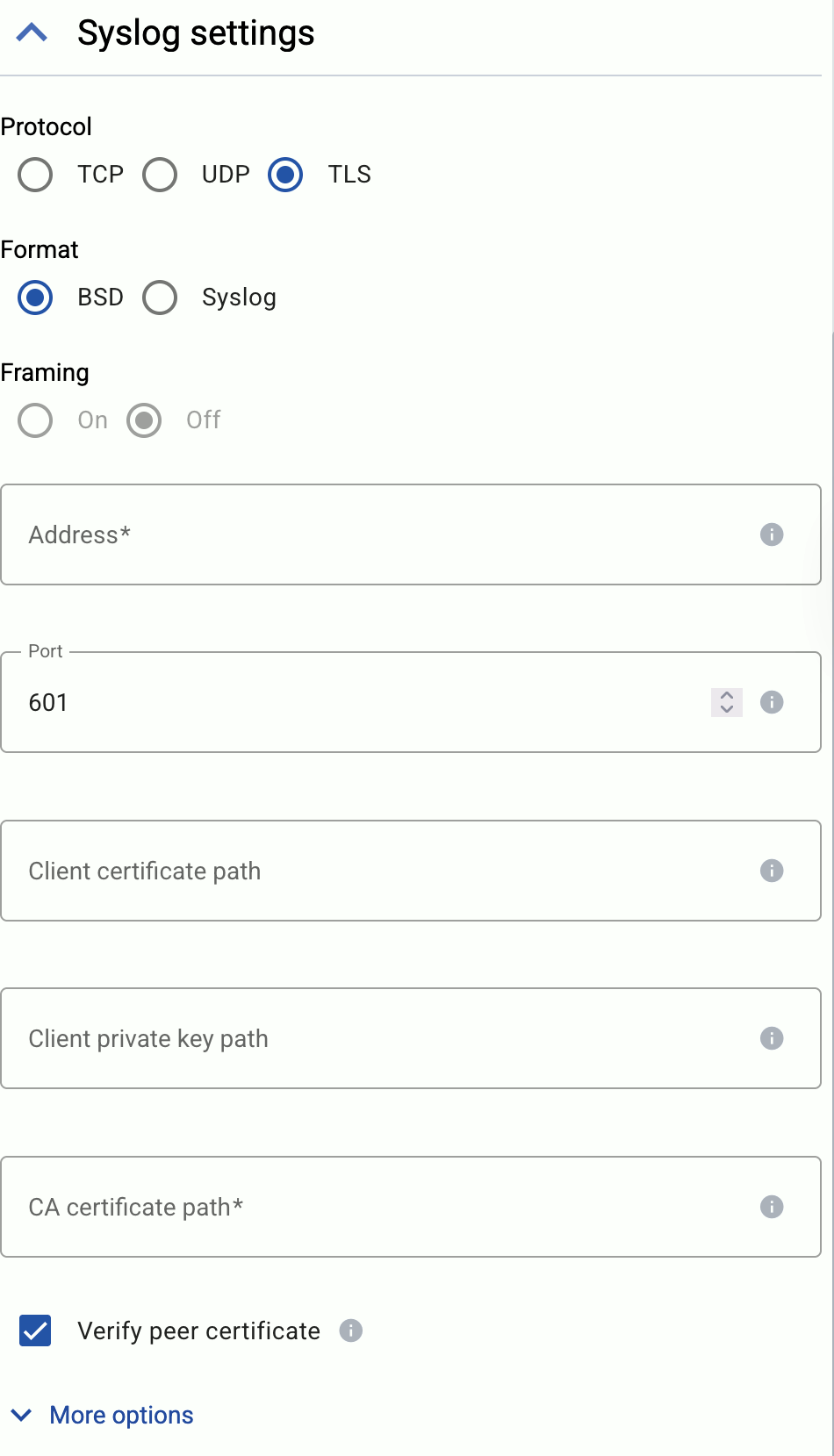

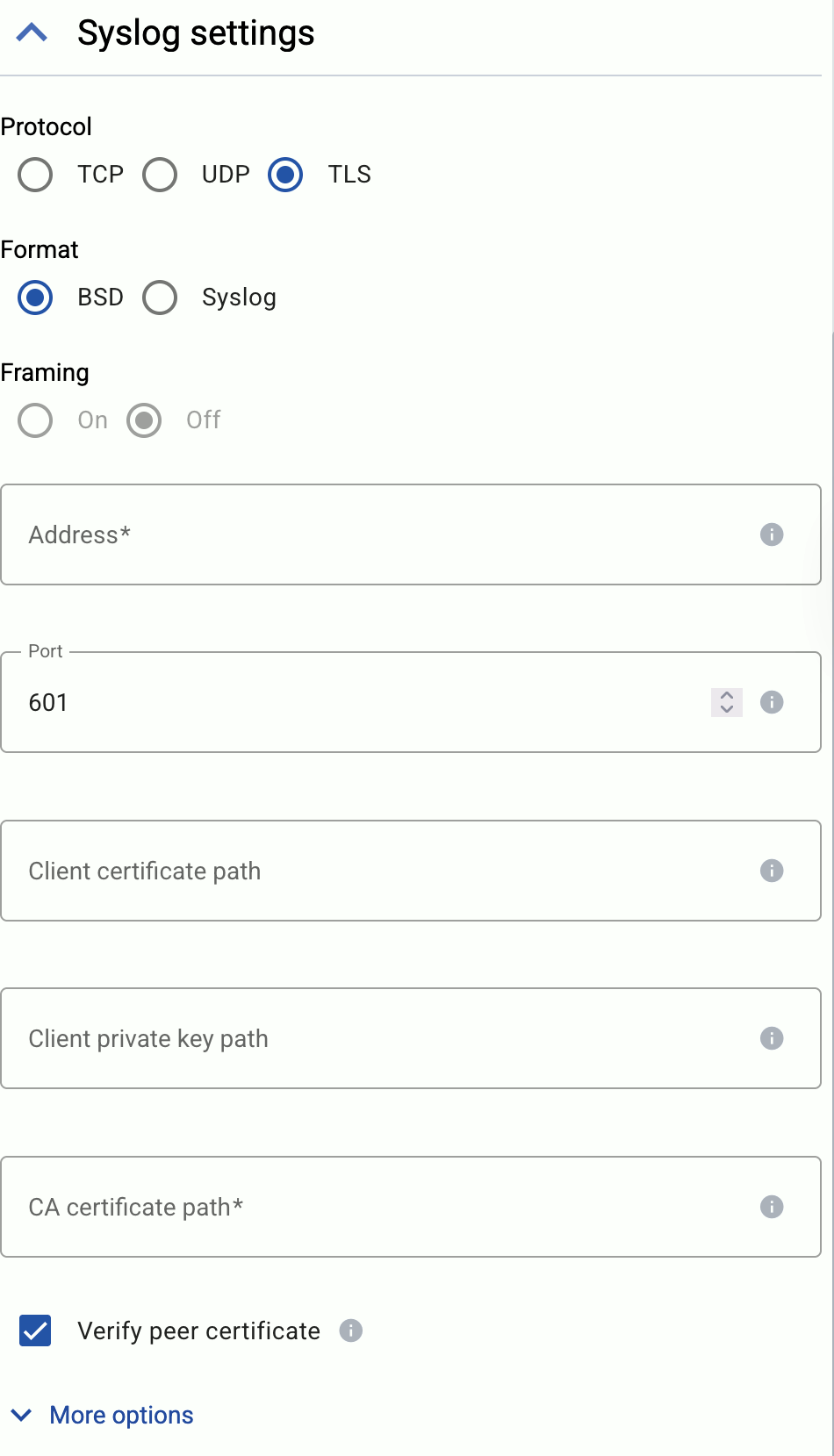

Set AxoRouter as the syslog server. Typically, you can configure the following parameters:

-

Name or IP Address of the syslog server: Set the address of your AxoRouter.

-

Protocol: If possible, set TCP or TLS.

-

Syslog Format: If possible, set RFC5424 (or equivalent); otherwise, leave the default.

-

Port: Set a port appropriate for the protocol and syslog format you have configured.

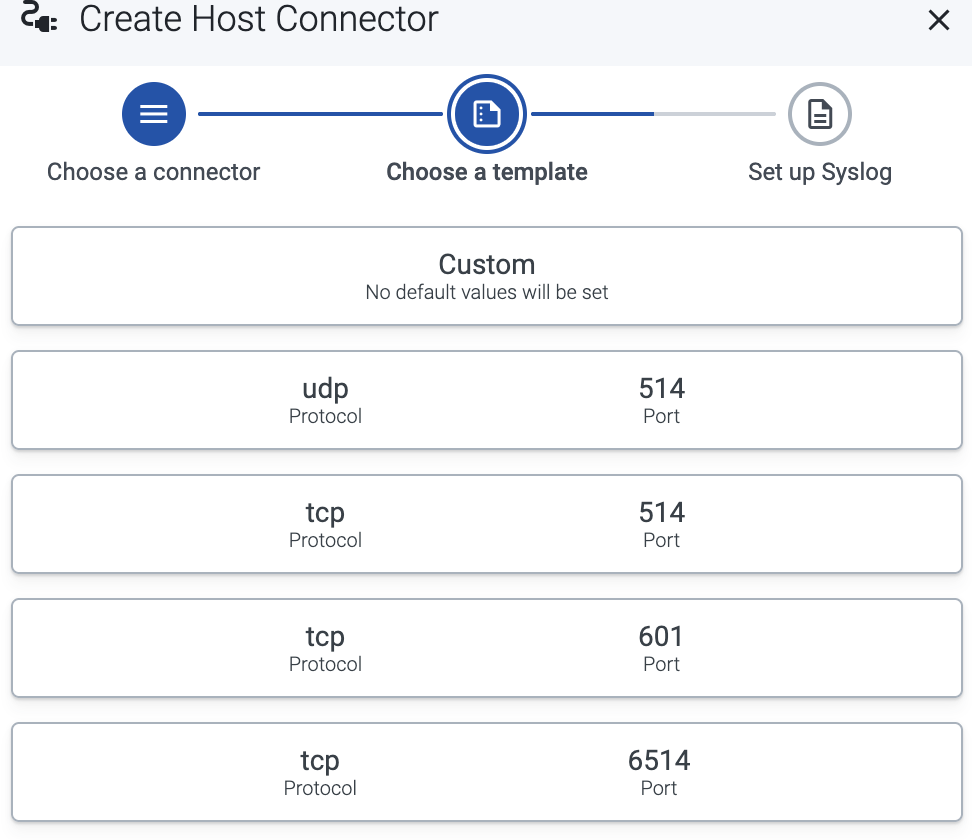

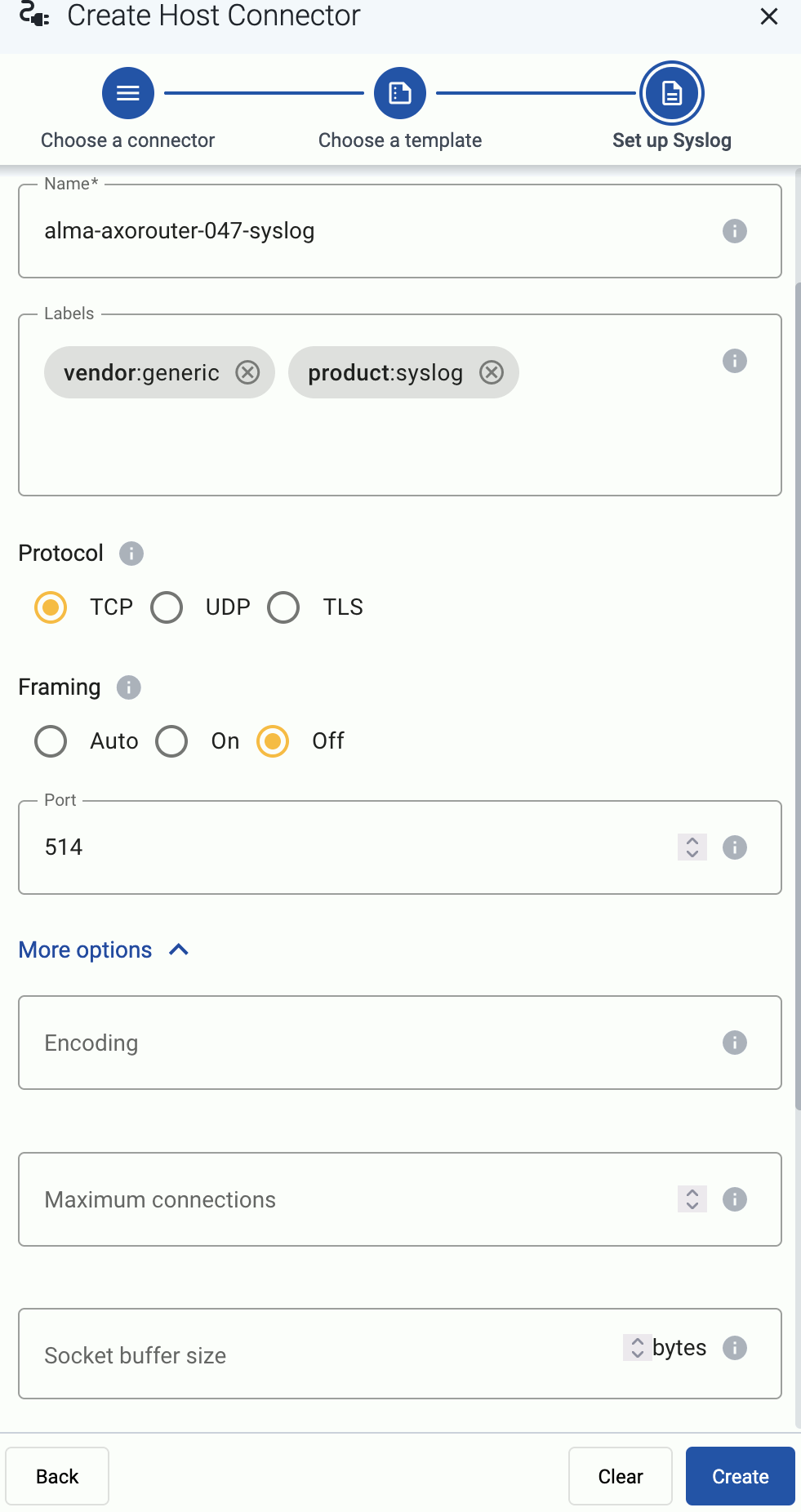

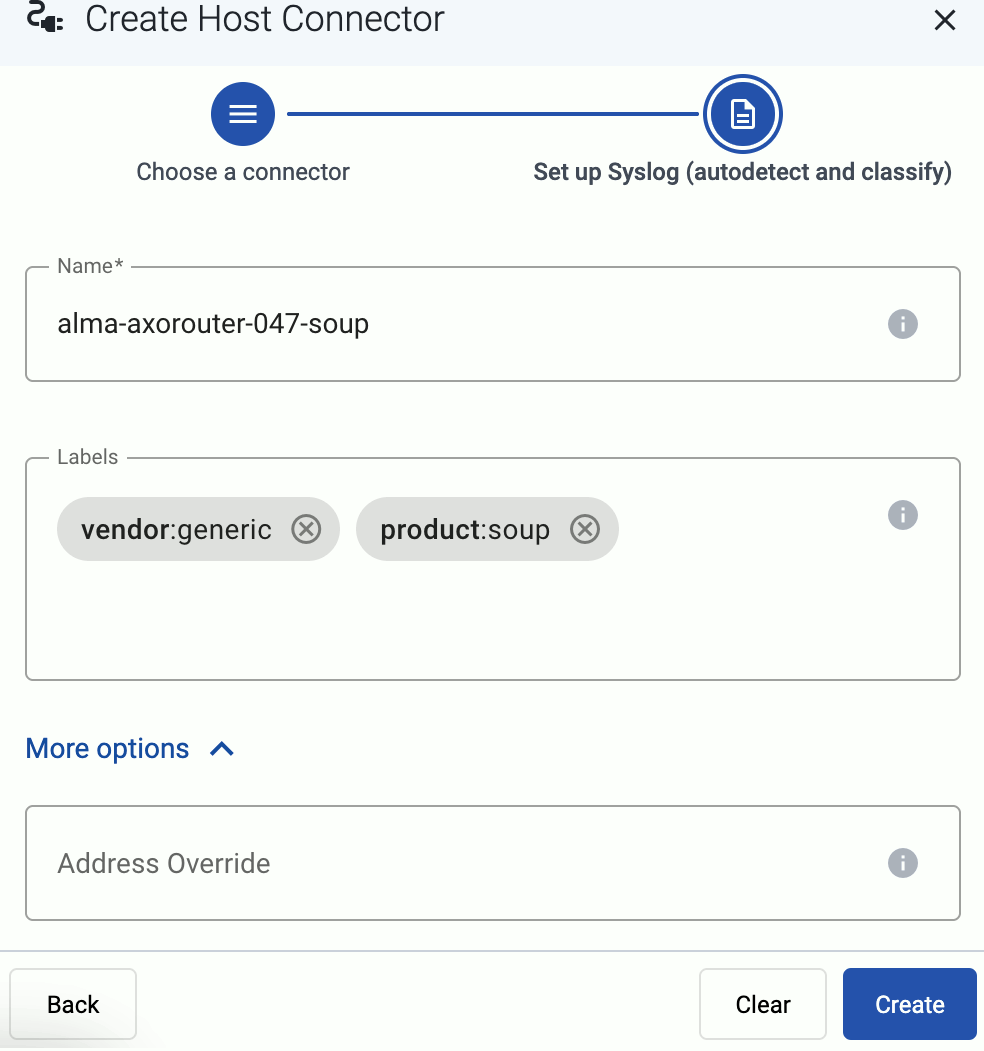

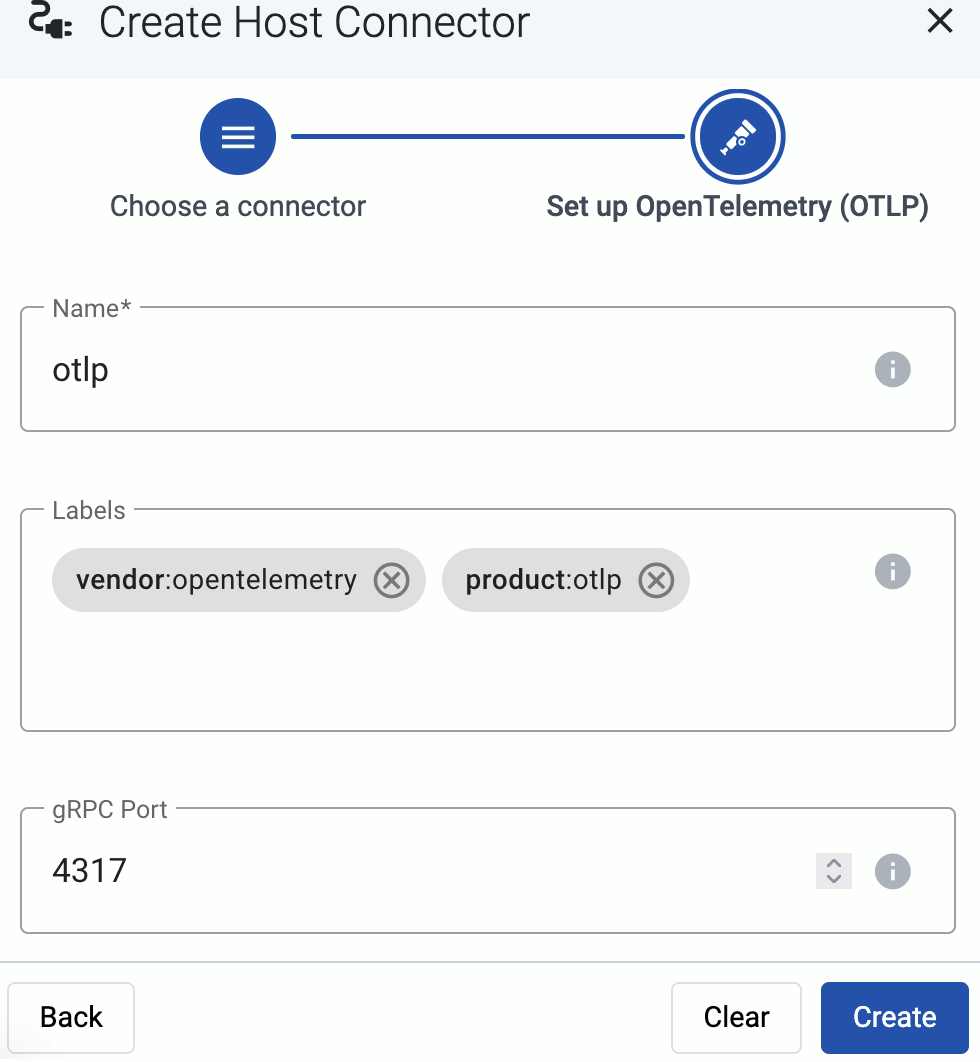

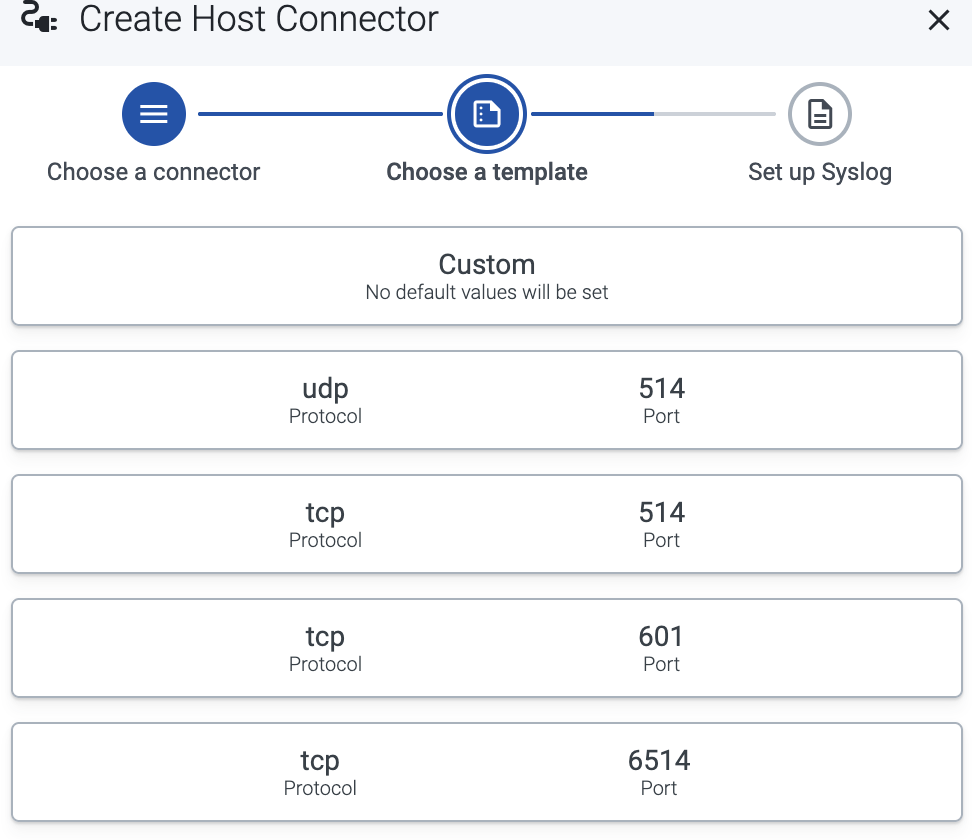

By default, AxoRouter accepts data on the following ports (unless you’ve modified the default connector rules):

- 514 TCP and UDP for RFC3164 (BSD-syslog) formatted traffic.

- 601 TCP for RFC5424 (IETF-syslog) formatted traffic.

- 6514 TCP for TLS-encrypted syslog traffic.

- 4317 TCP for OpenTelemetry log data.

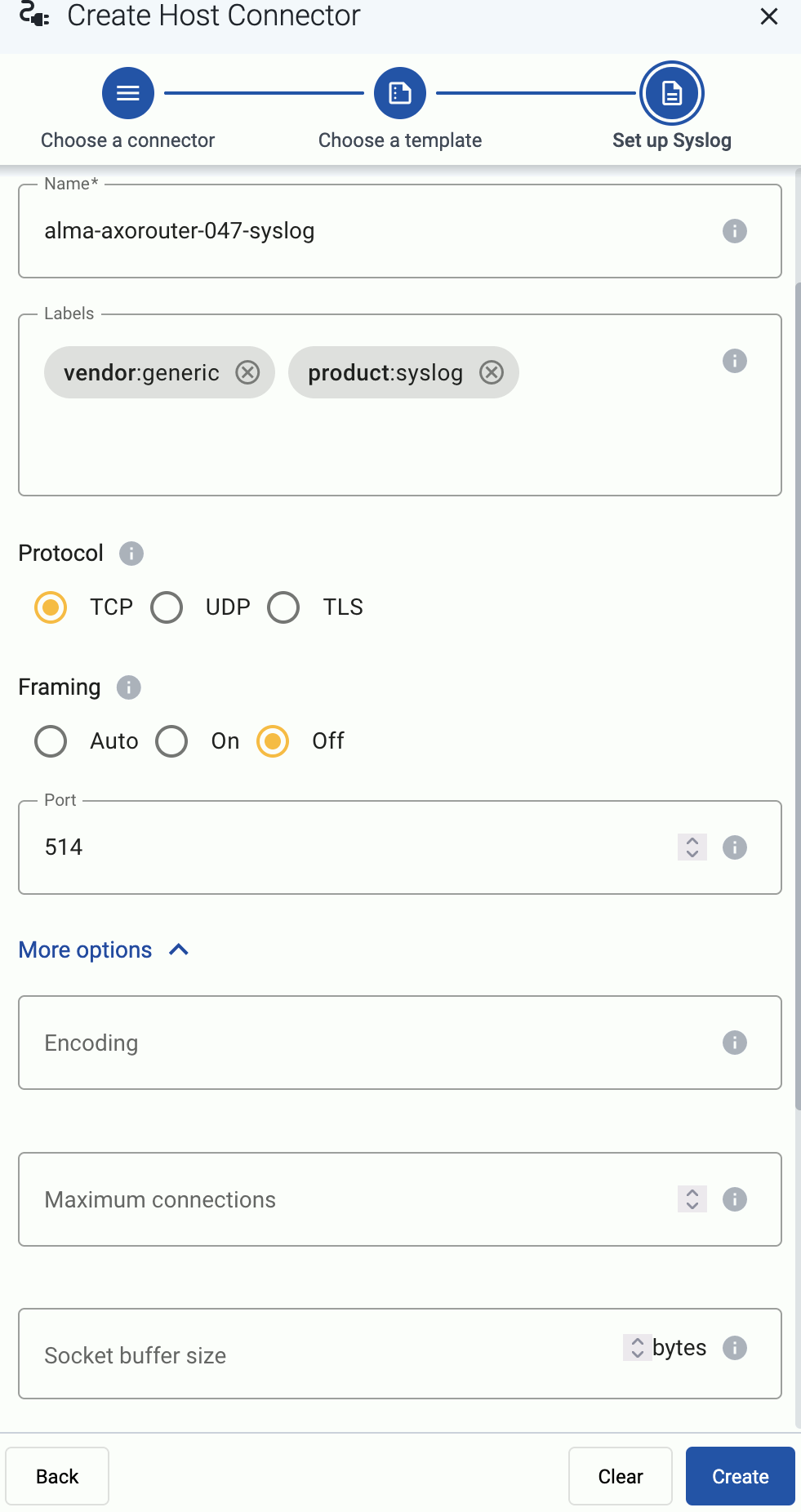

To receive data on other ports or other protocols, configure other connector rules for the AxoRouter host.

Make sure to enable the ports you’re using on the firewall of your host.

-

Add the source to Axoflow Console.

-

Open the Axoflow Console and select Topology.

-

Select + > Source.

- If the source is actively sending data to an AxoRouter instance, select Detected, then select your source.

- Otherwise, select the vendor and product corresponding to your source from the Predefined sources, then enter the parameters of the source, like IP address and FQDN.

Note

During [log tapping](/docs/axoflow/onboard-hosts/log-tapping/), you can add hosts that are actively sending data to an AxoRouter instance by clicking **Register source**.

-

(Optional) Add custom labels as needed.

-

Select Create.

Note

If your syslog source is running syslog-ng, Splunk Connect for Syslog (SC4S), or AxoSyslog as its log forwarder agent, consider installing Axolet on the host and instrumenting the configuration of the log forwarder to receive detailed metrics about the host and the processed data. For details, see Manage and monitor the pipeline.
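Before configuring a real device, you can check the path to AxoRouter by sending a test message yourself. The following Python sketch formats an RFC5424 message and wraps it in RFC6587 octet-counting framing (the byte length, a space, then the message), which syslog-ng-based receivers commonly expect on TCP syslog ports; whether your connector expects this framing is an assumption to verify. The router address `axorouter.example.com` and the app name `axo-test` are hypothetical placeholders.

```python
import socket
from datetime import datetime, timezone


def format_rfc5424(msg: str, hostname: str, app_name: str = "axo-test",
                   facility: int = 1, severity: int = 5) -> str:
    """Build an RFC5424 line: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG."""
    pri = facility * 8 + severity  # facility 1 (user), severity 5 (notice) gives <13>
    ts = datetime.now(timezone.utc).isoformat(timespec="milliseconds")
    ts = ts.replace("+00:00", "Z")
    # "-" is the RFC5424 NILVALUE, used here for PROCID, MSGID, and STRUCTURED-DATA.
    return f"<{pri}>1 {ts} {hostname} {app_name} - - - {msg}"


def frame_octet_counted(message: str) -> bytes:
    """RFC6587 octet counting: '<length in bytes> <message>'."""
    payload = message.encode("utf-8")
    return str(len(payload)).encode("ascii") + b" " + payload


def send_test_message(router: str, port: int = 601) -> None:
    """Open a TCP connection to the router and send one framed test message."""
    line = format_rfc5424("Hello from a test source", socket.gethostname())
    with socket.create_connection((router, port), timeout=5) as sock:
        sock.sendall(frame_octet_counted(line))


if __name__ == "__main__":
    print(frame_octet_counted(format_rfc5424("Hello from a test source", "test-host")))
    # Uncomment and replace the hypothetical address with your AxoRouter host:
    # send_test_message("axorouter.example.com")
```

Port 601 is the default RFC5424 port of AxoRouter listed above; if you modified the connector rules, pass your port to `send_test_message` instead.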

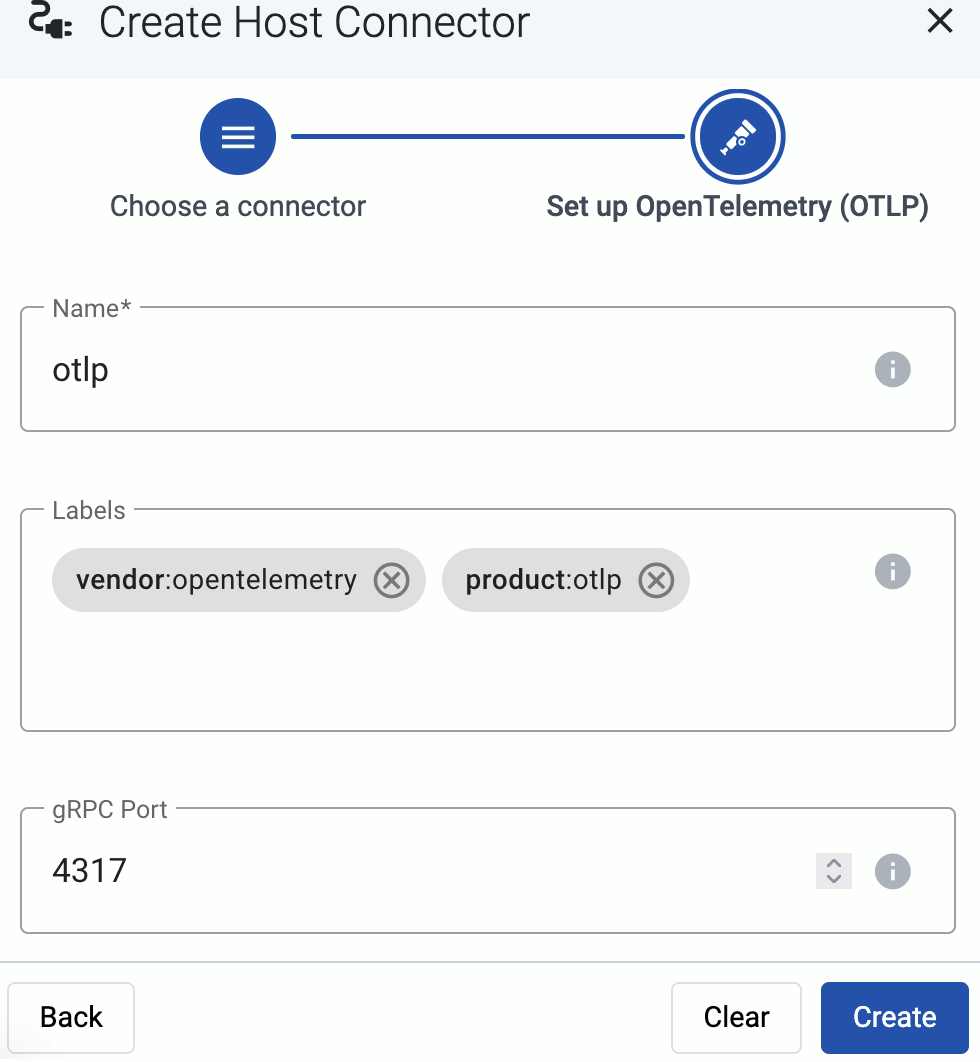

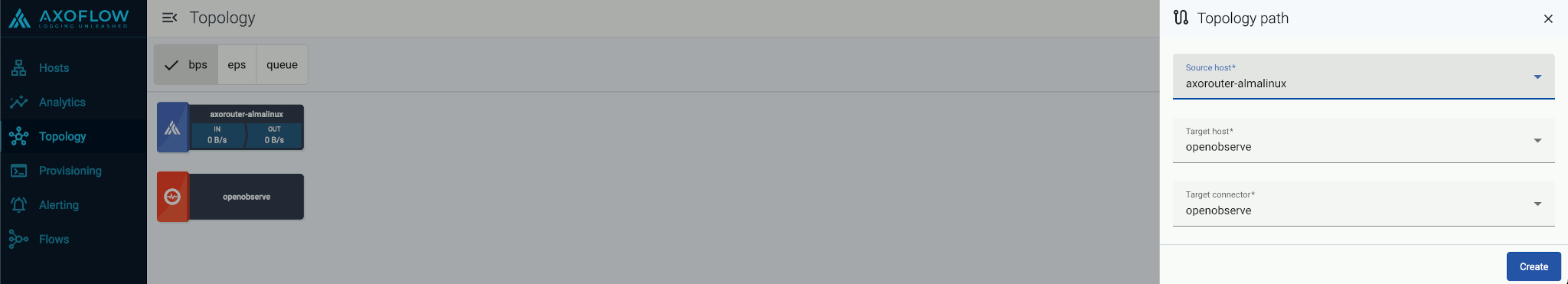

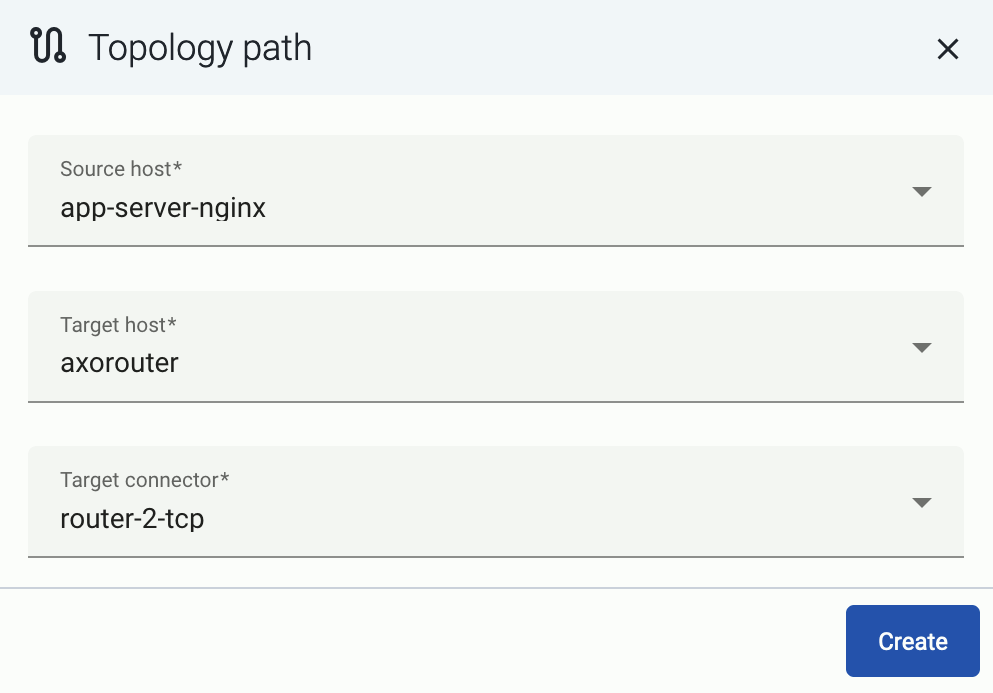

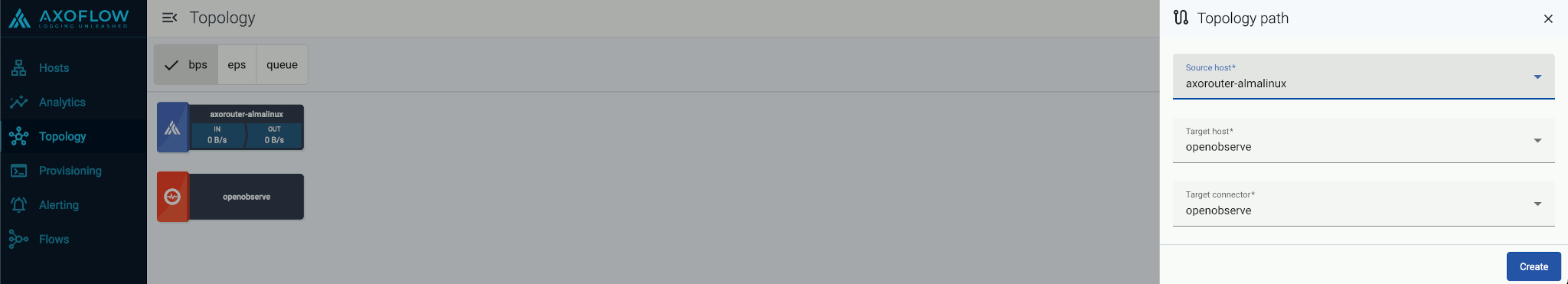

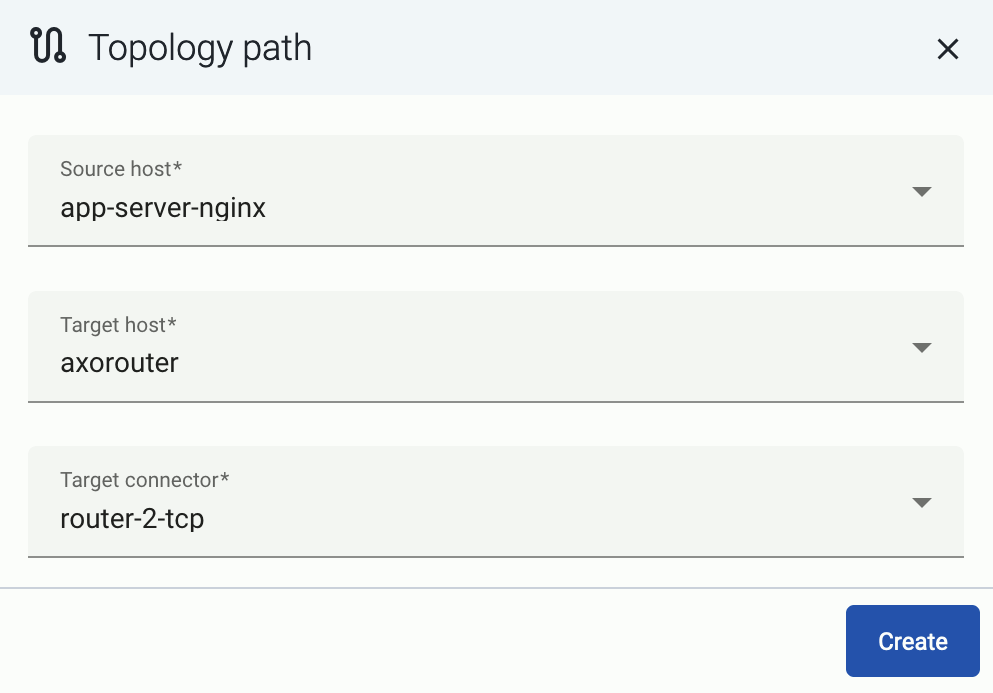

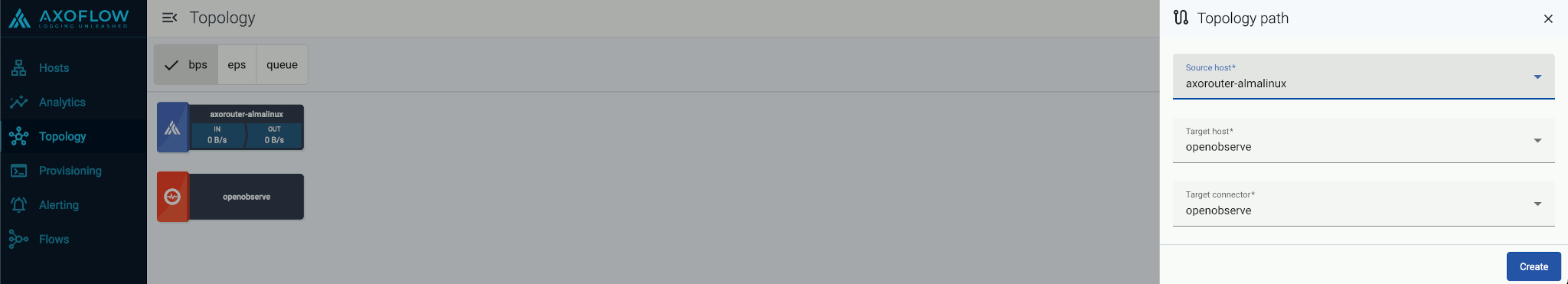

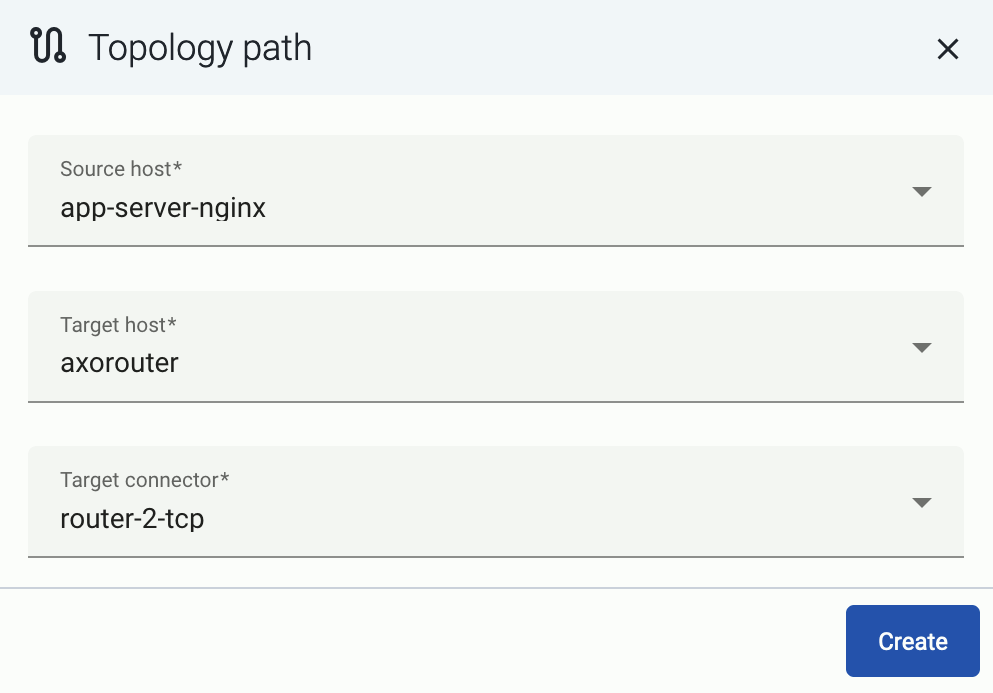

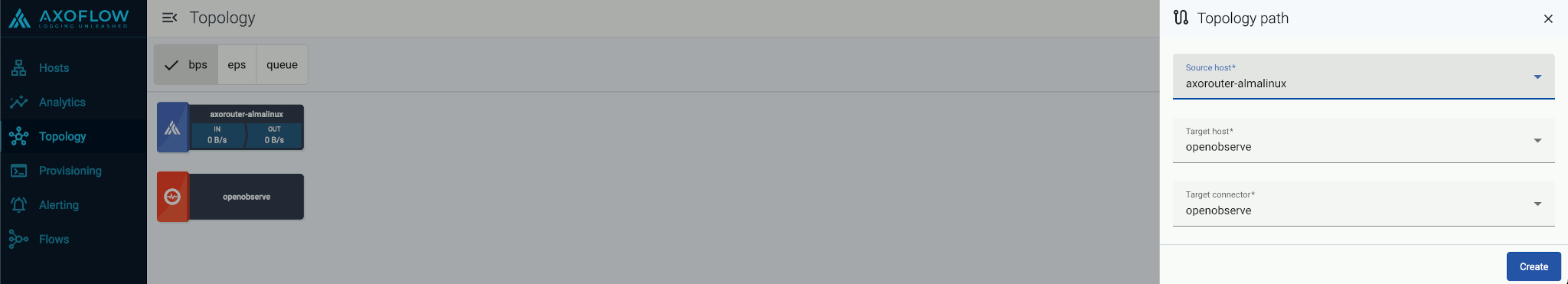

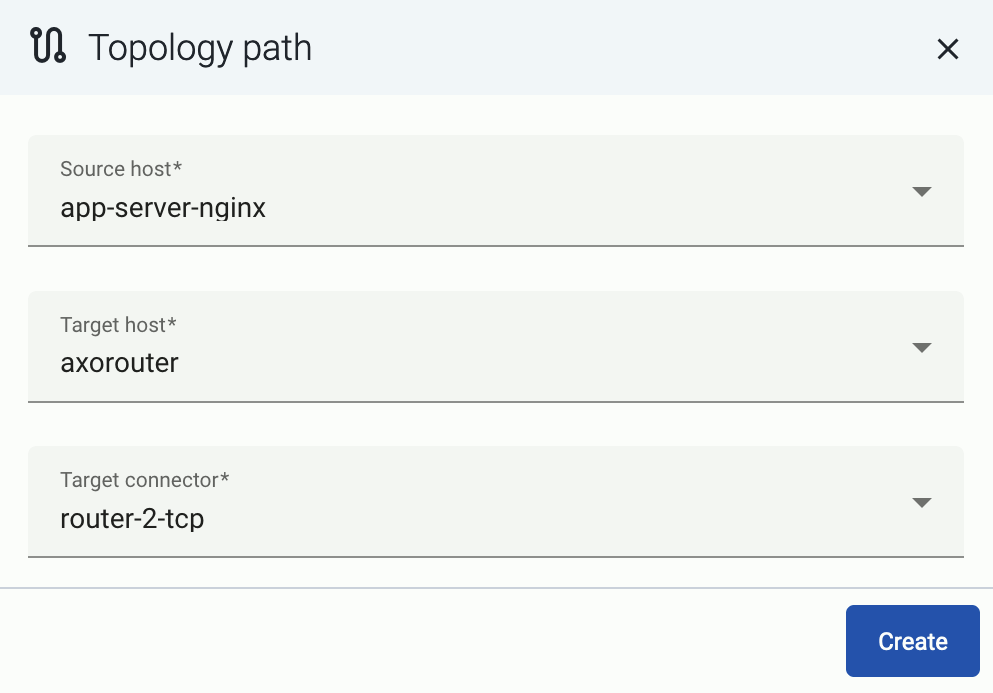

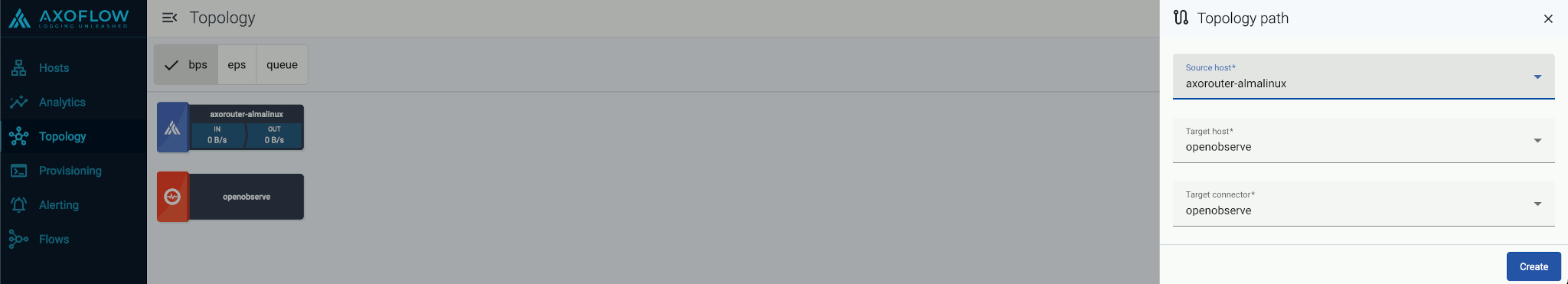

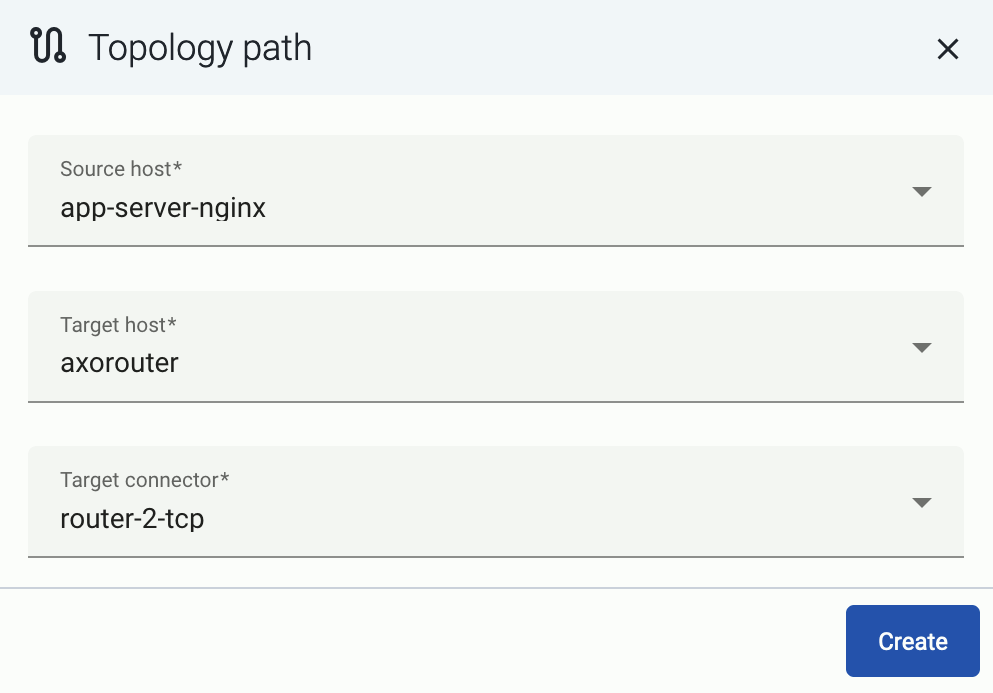

Add a path

Create a path between the source and the AxoRouter instance.

-

Select Topology > + > Path.

-

Select your data source in the Source host field.

-

Select the target router or aggregator this source is sending its data to in the Target host field, for example, axorouter.

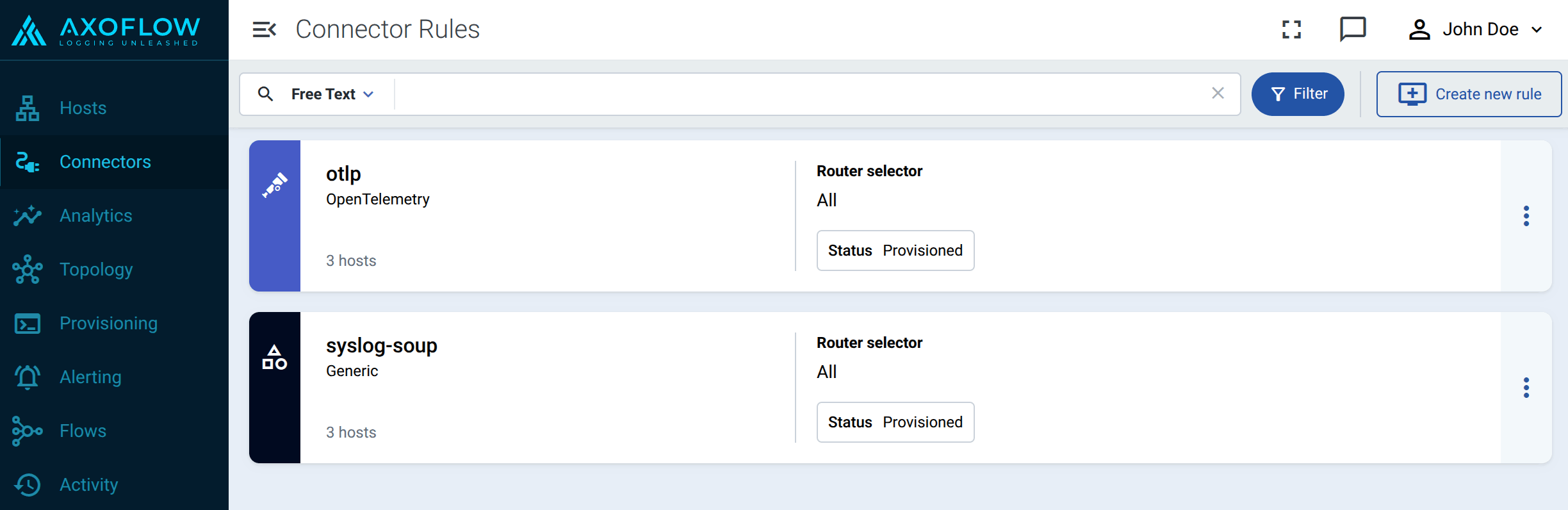

-

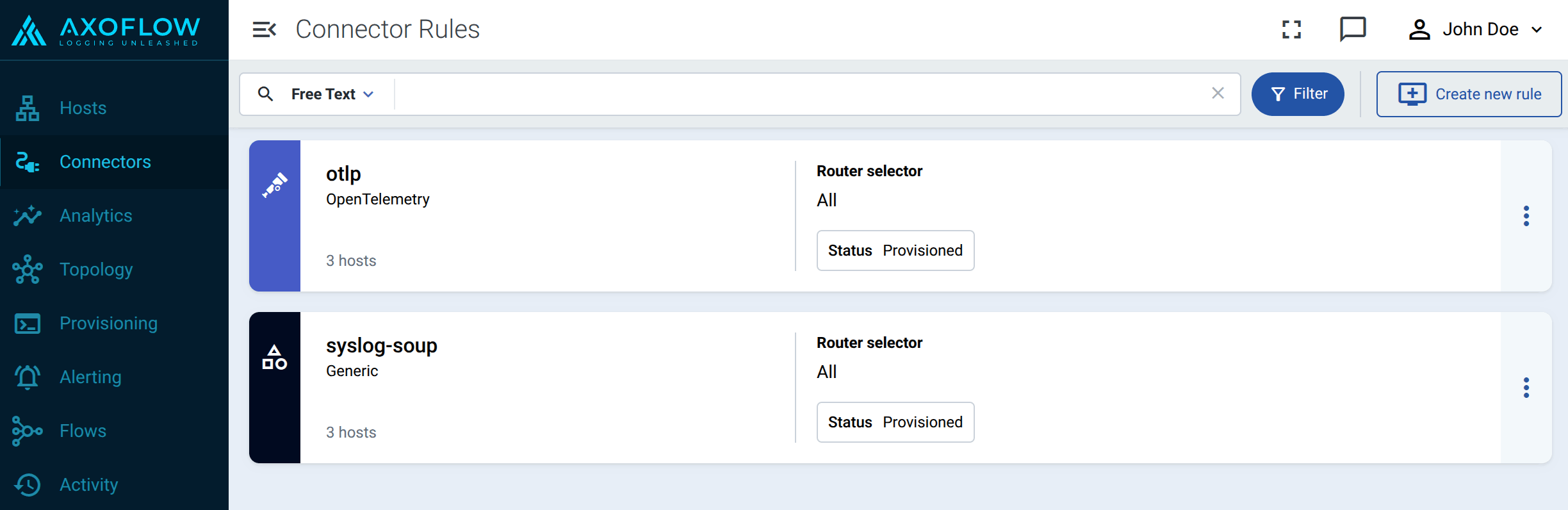

Select the Target connector. The connector determines how the destination receives the data (for example, using which protocol or port).

-

Select Create. The new path appears on the Topology page.

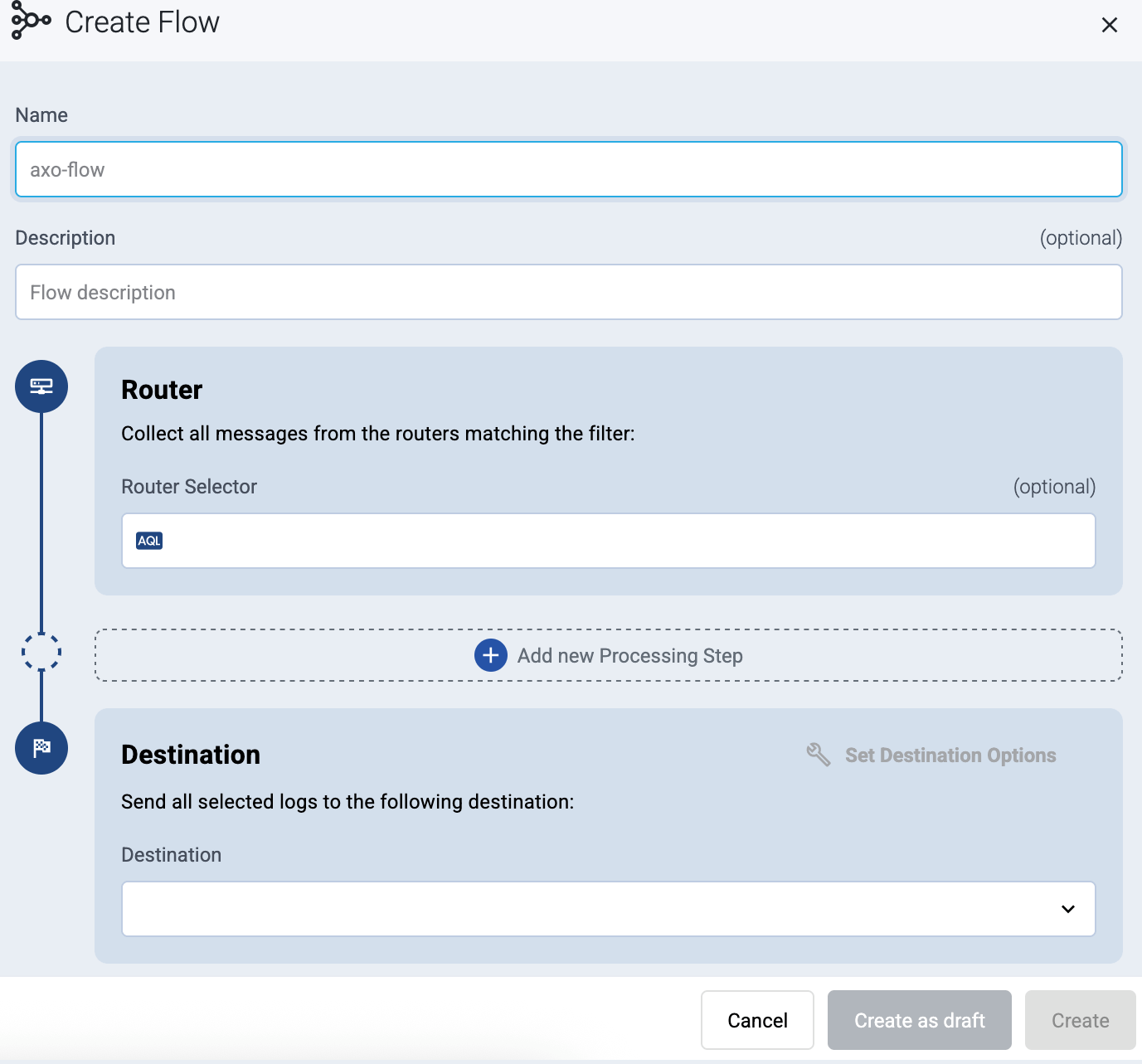

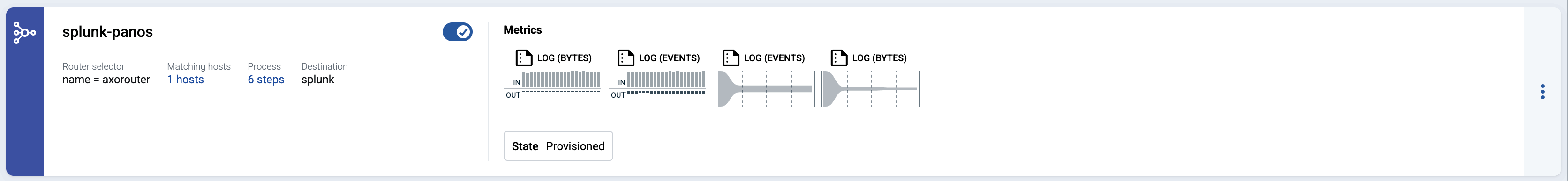

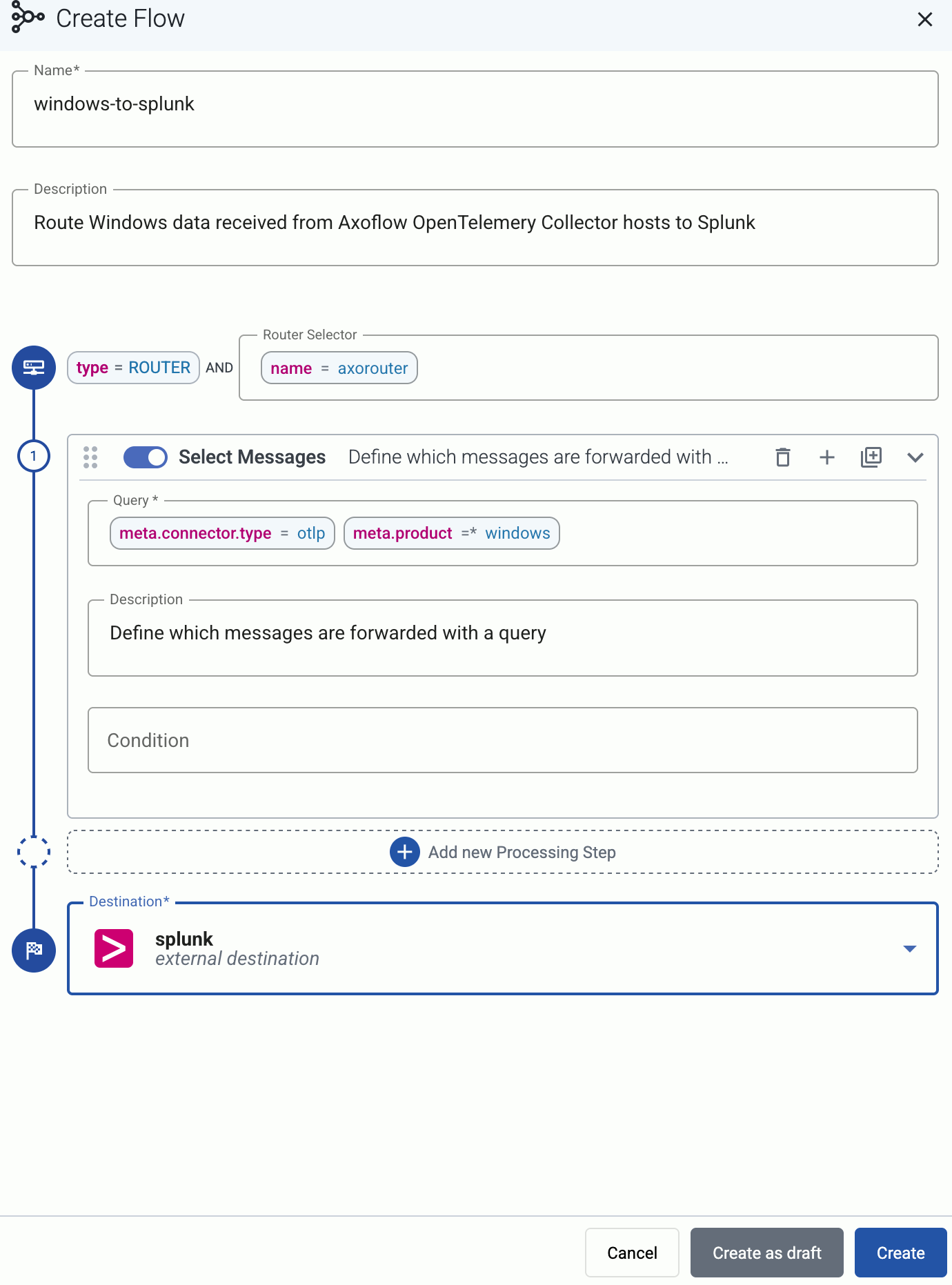

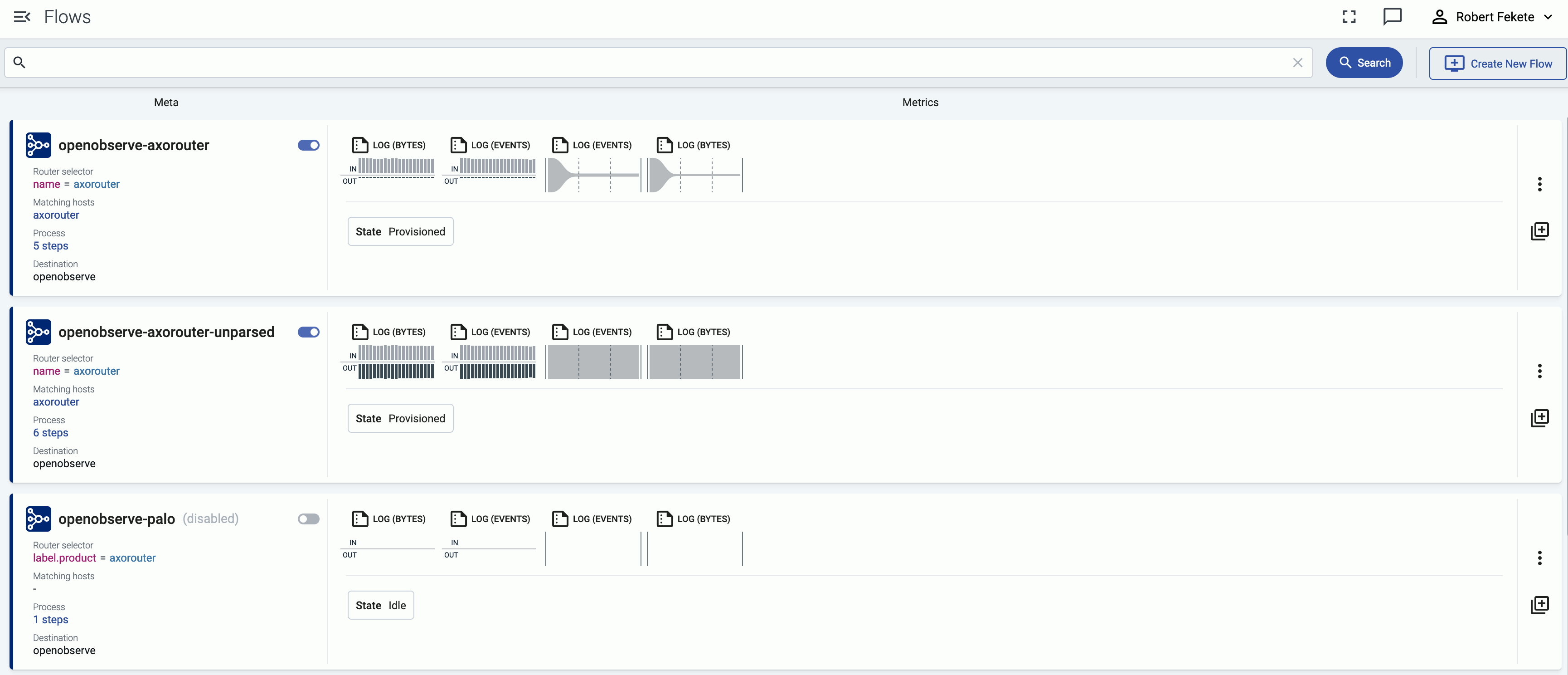

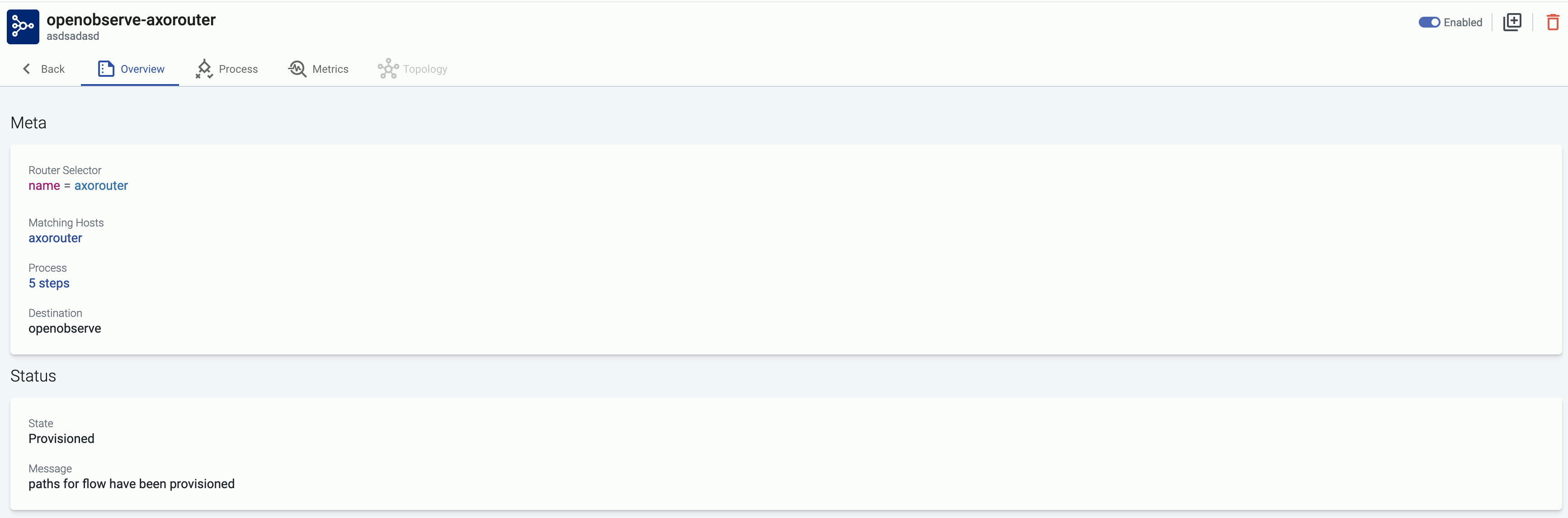

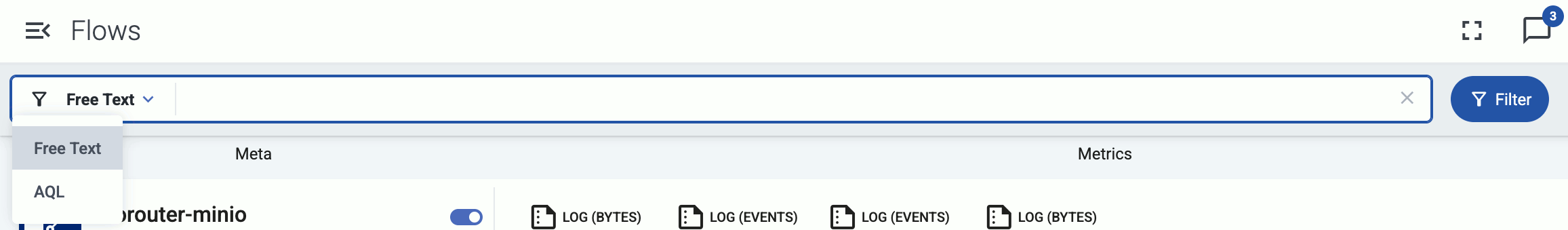

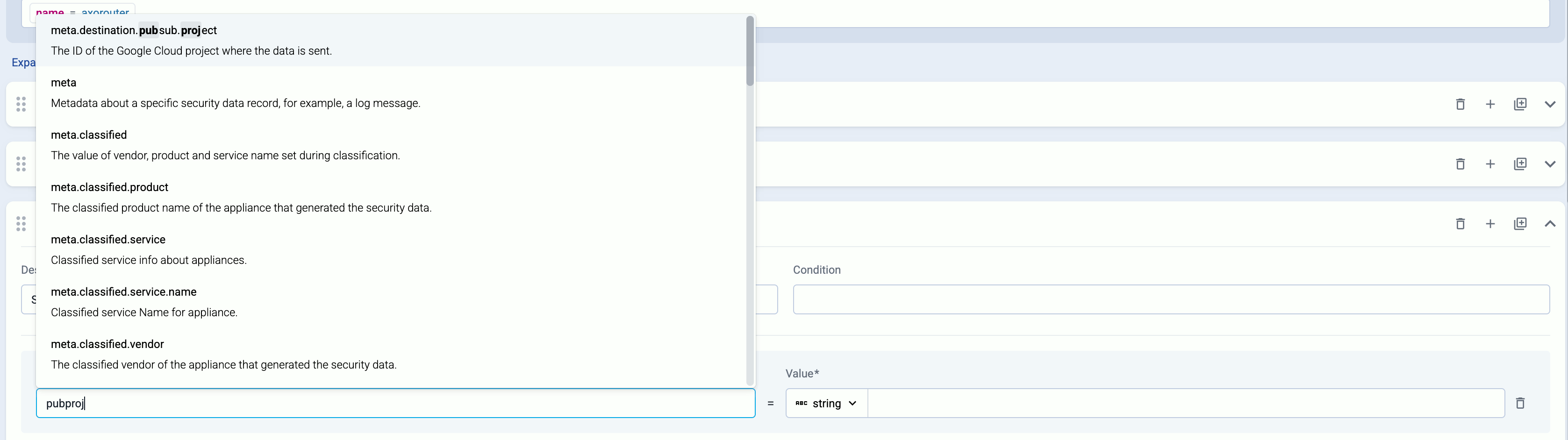

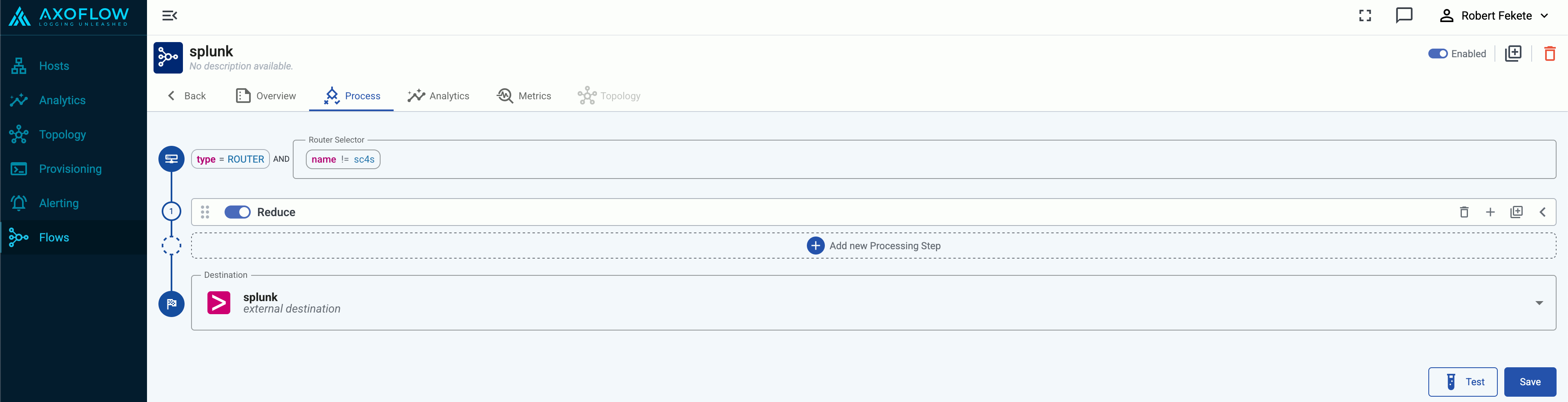

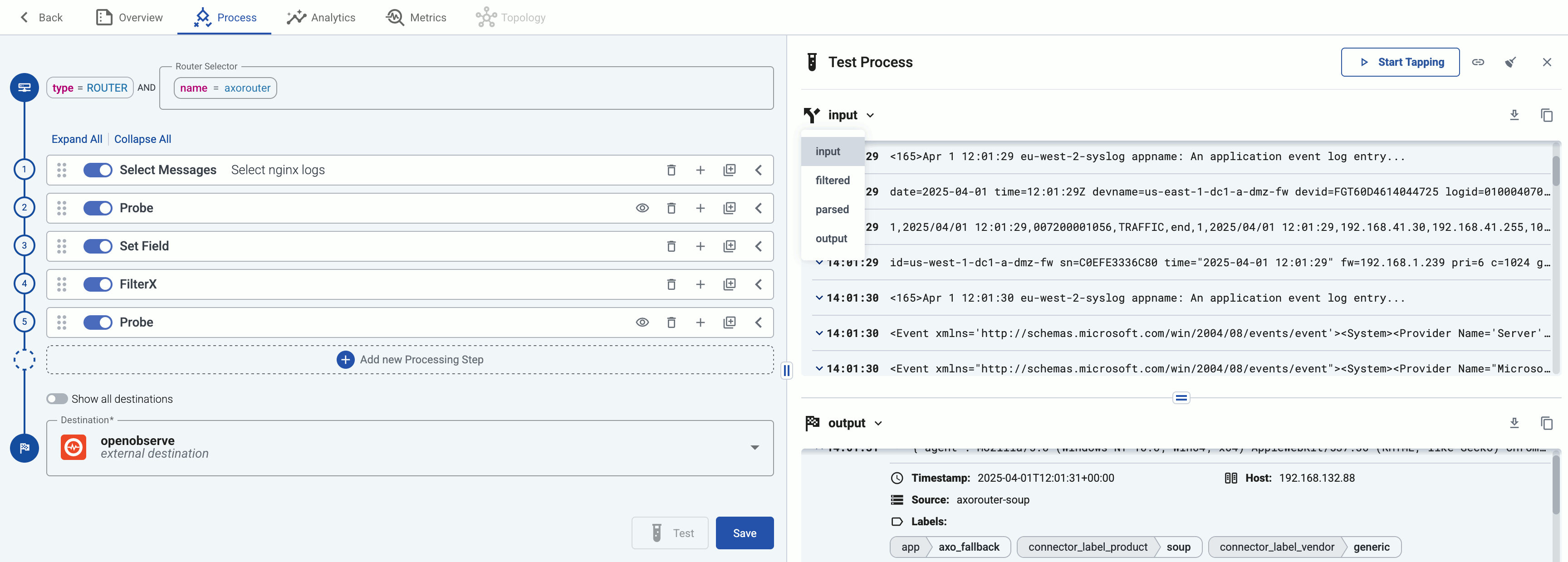

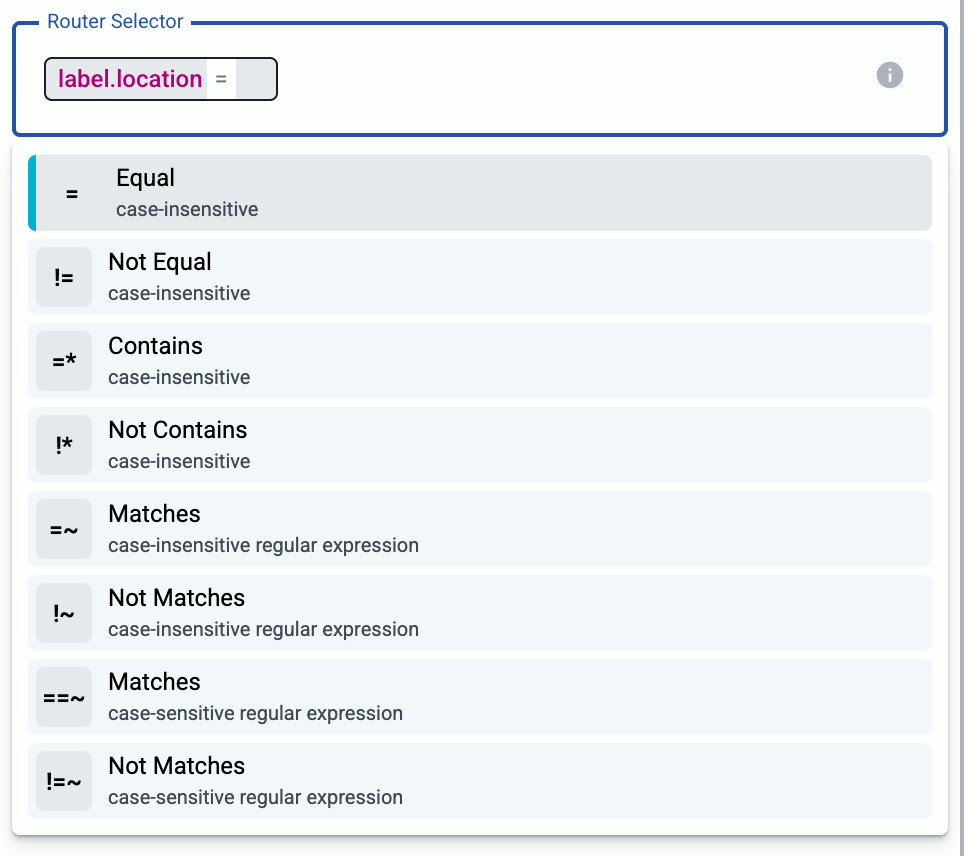

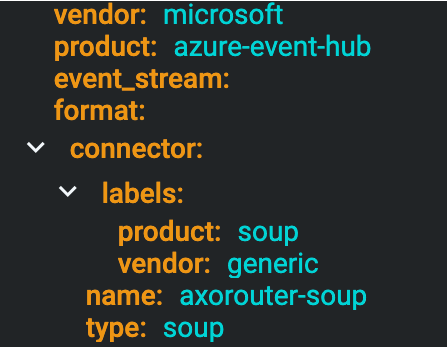

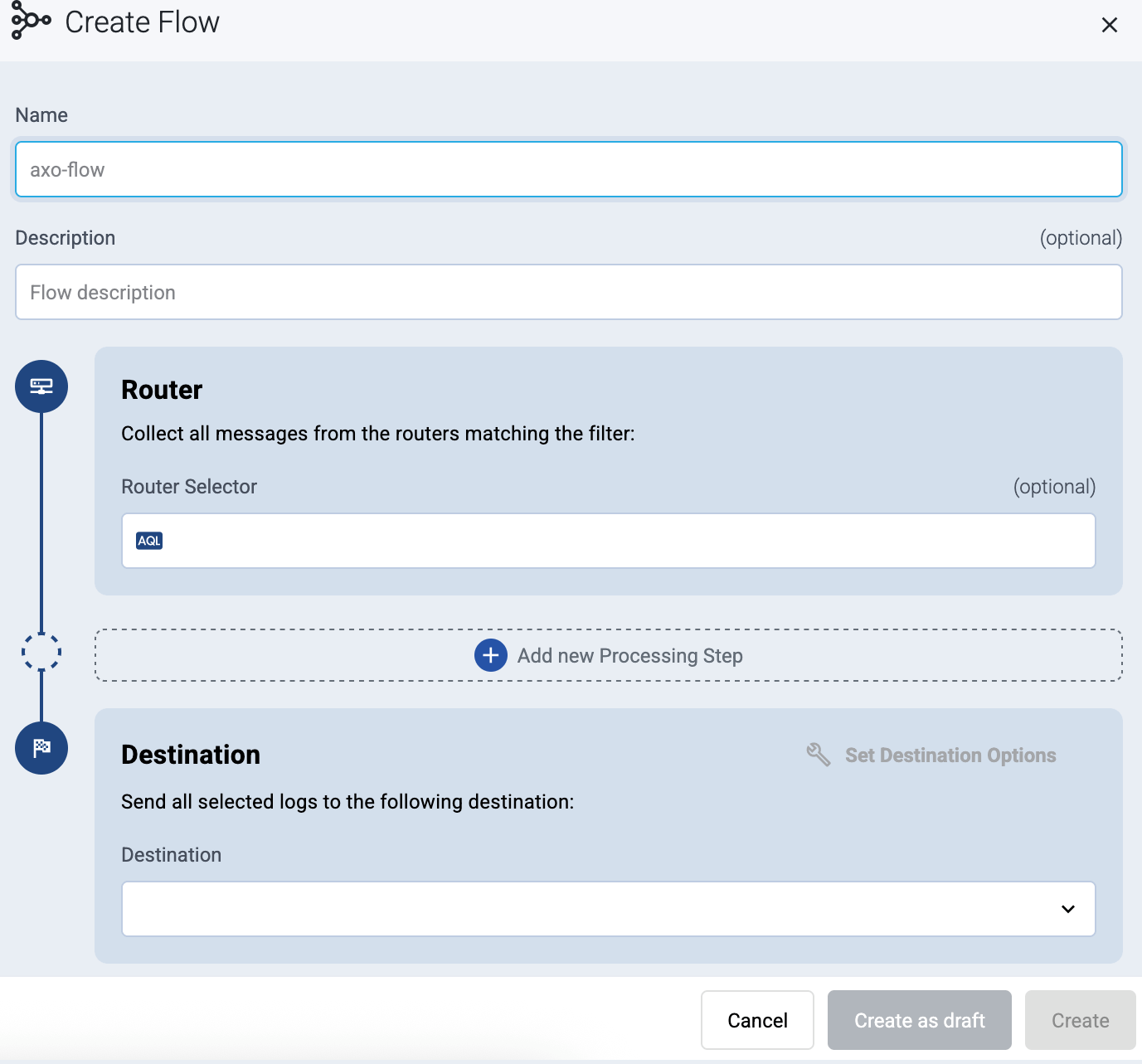

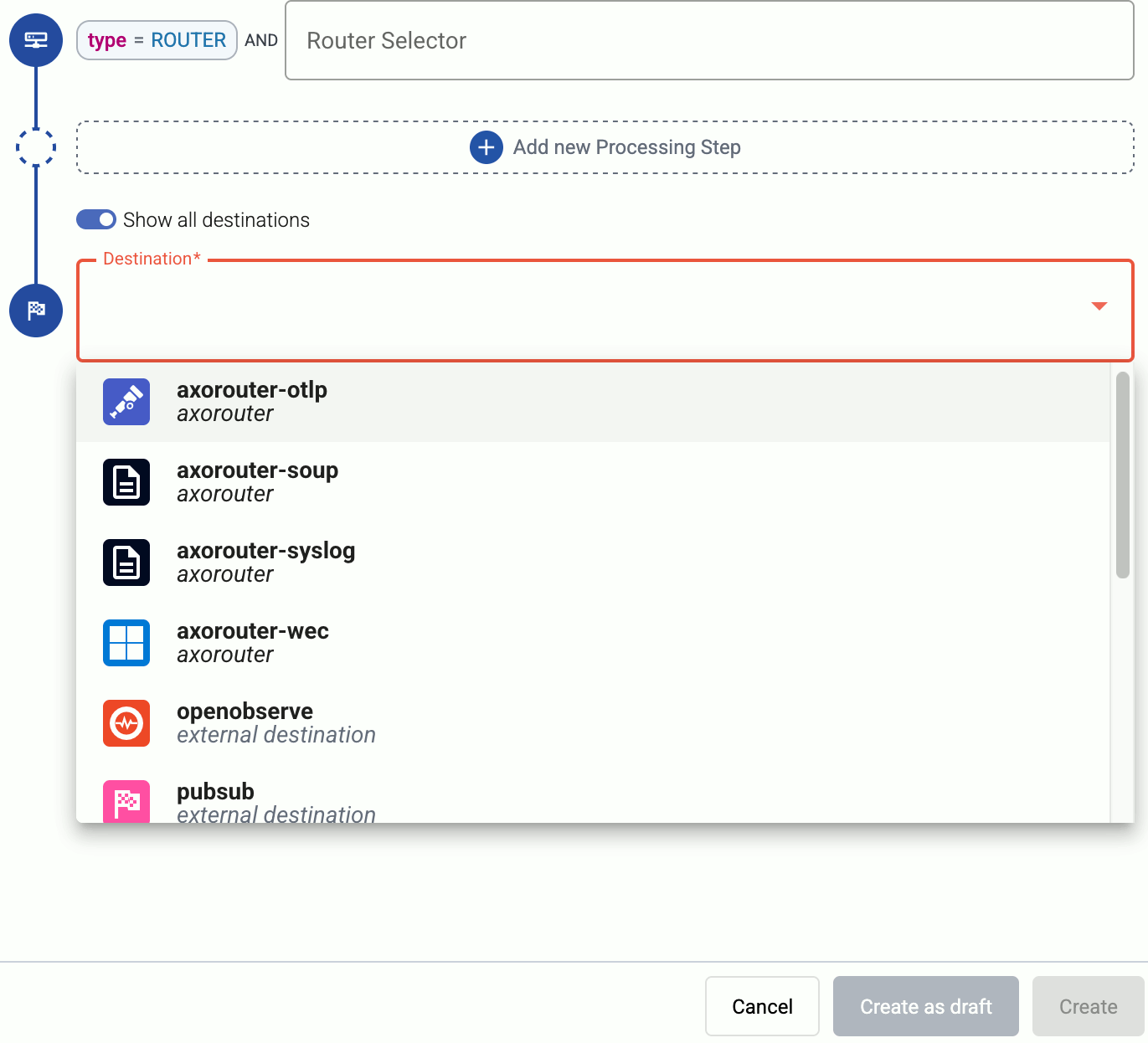

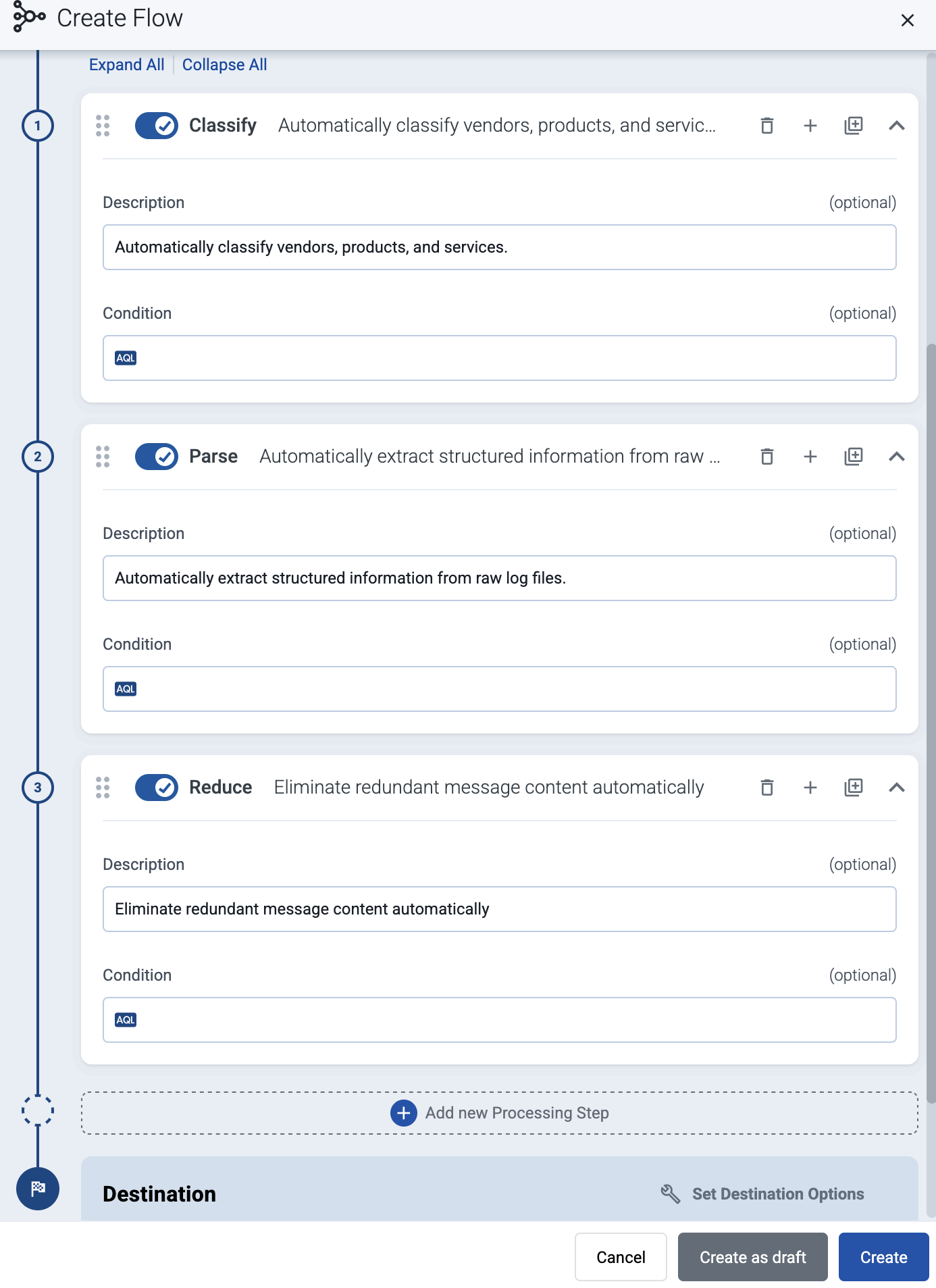

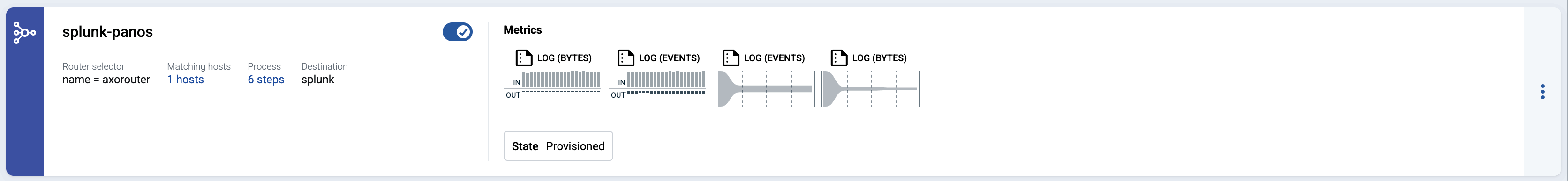

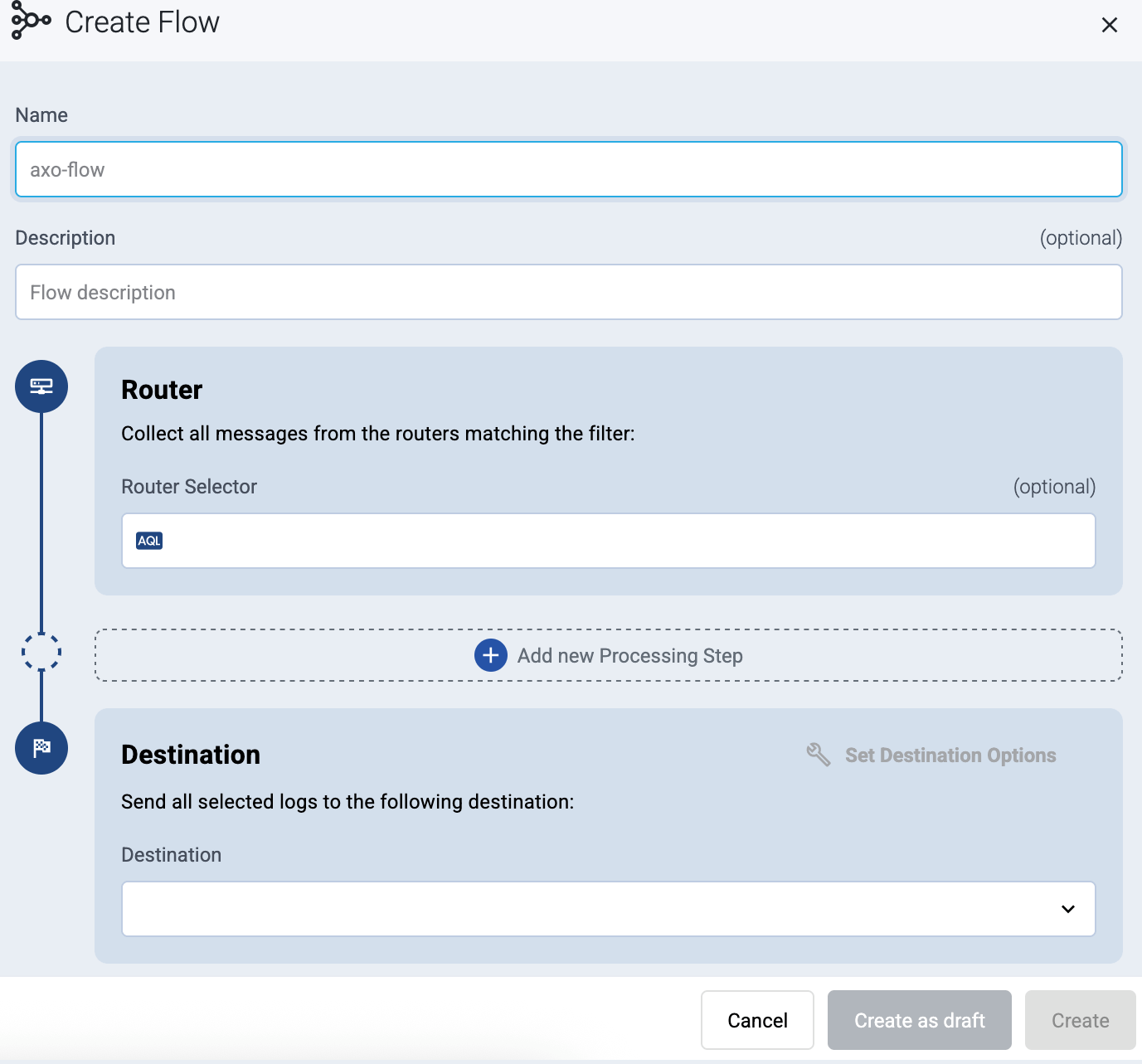

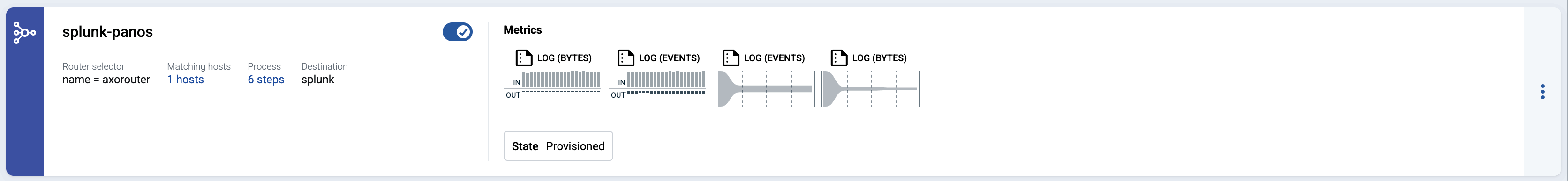

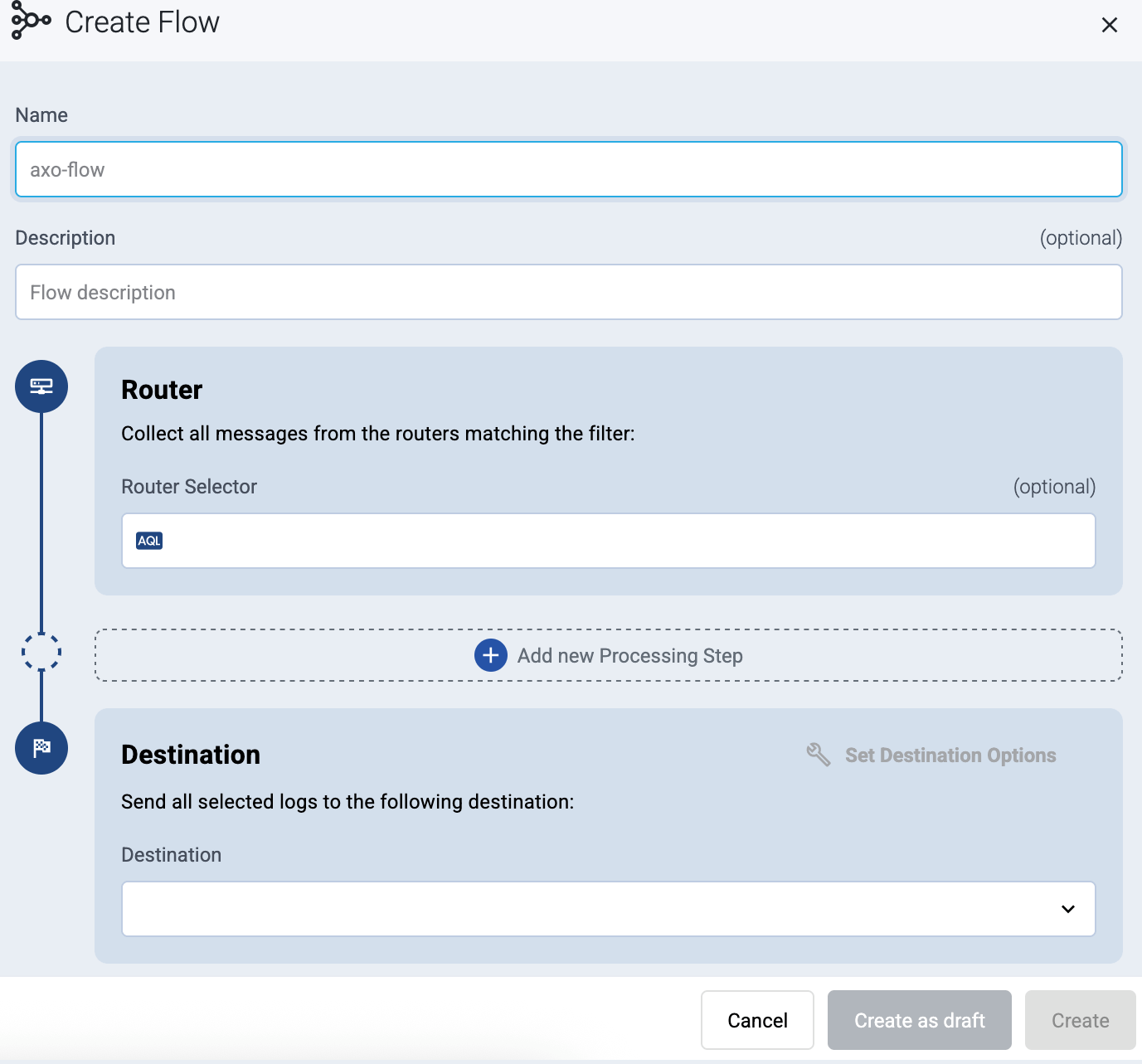

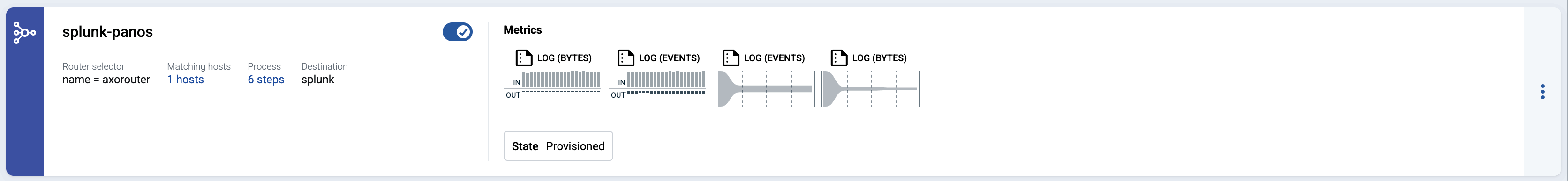

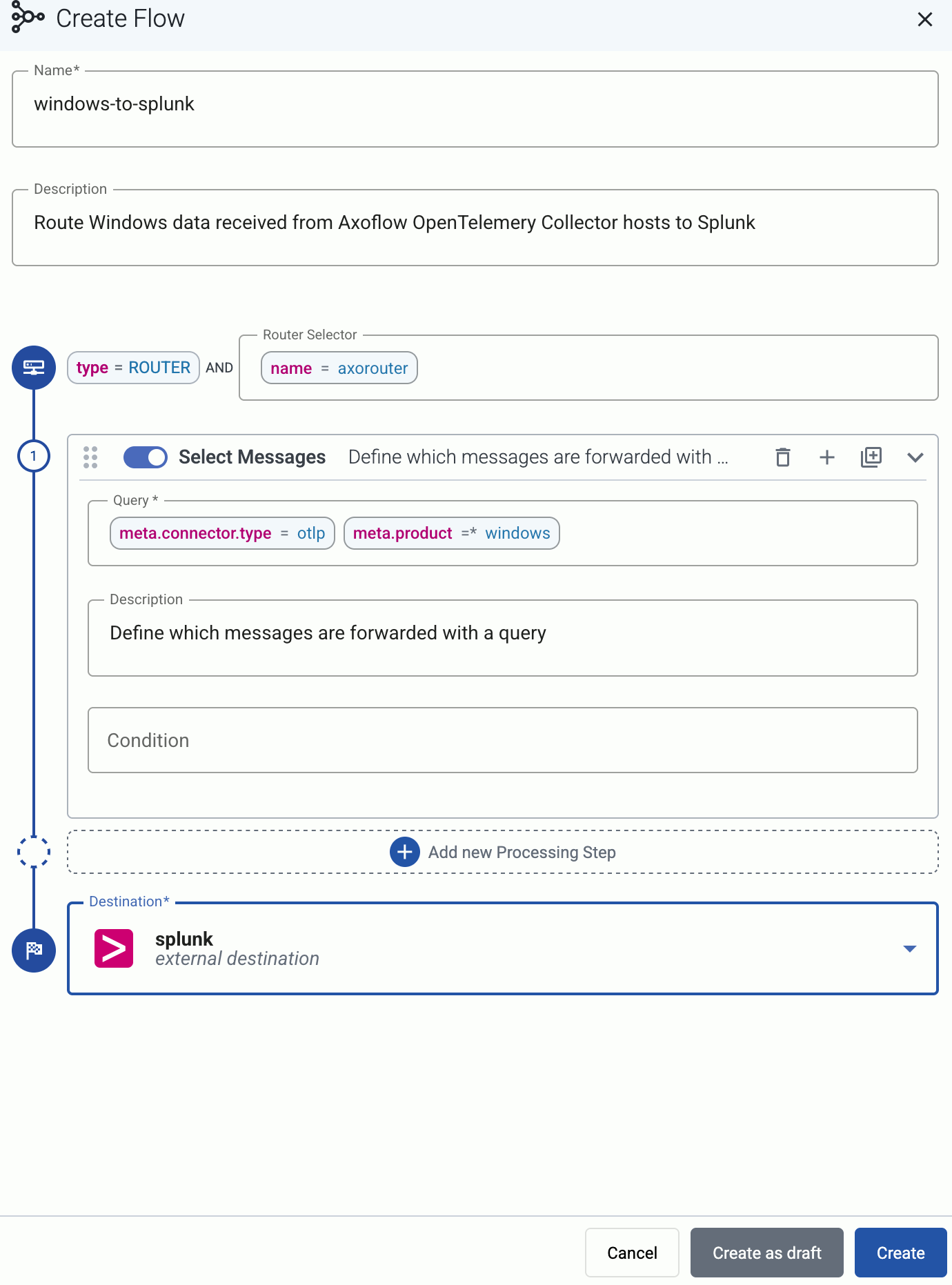

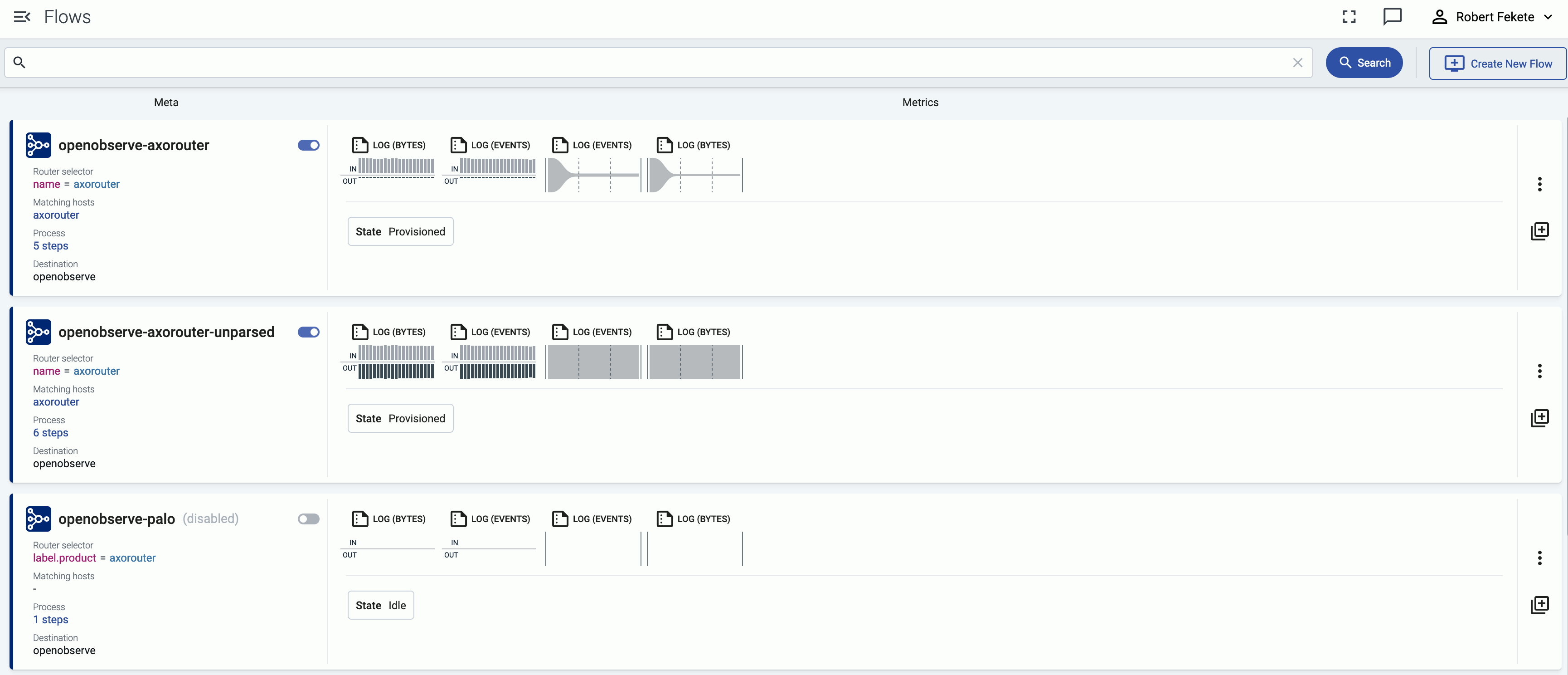

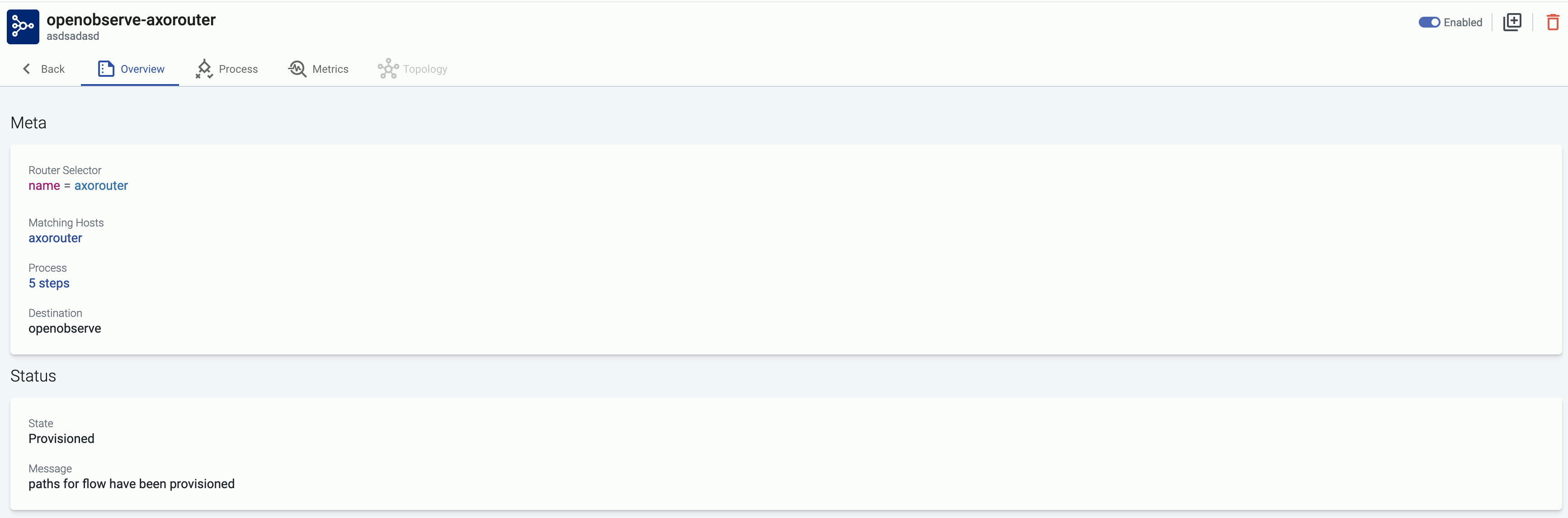

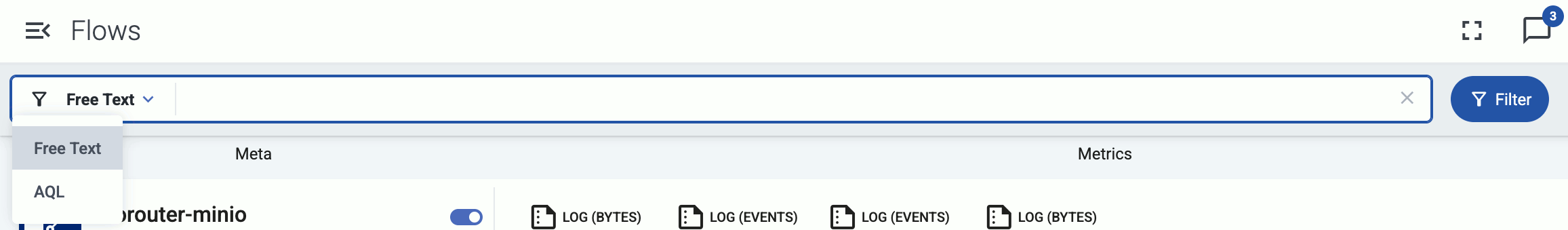

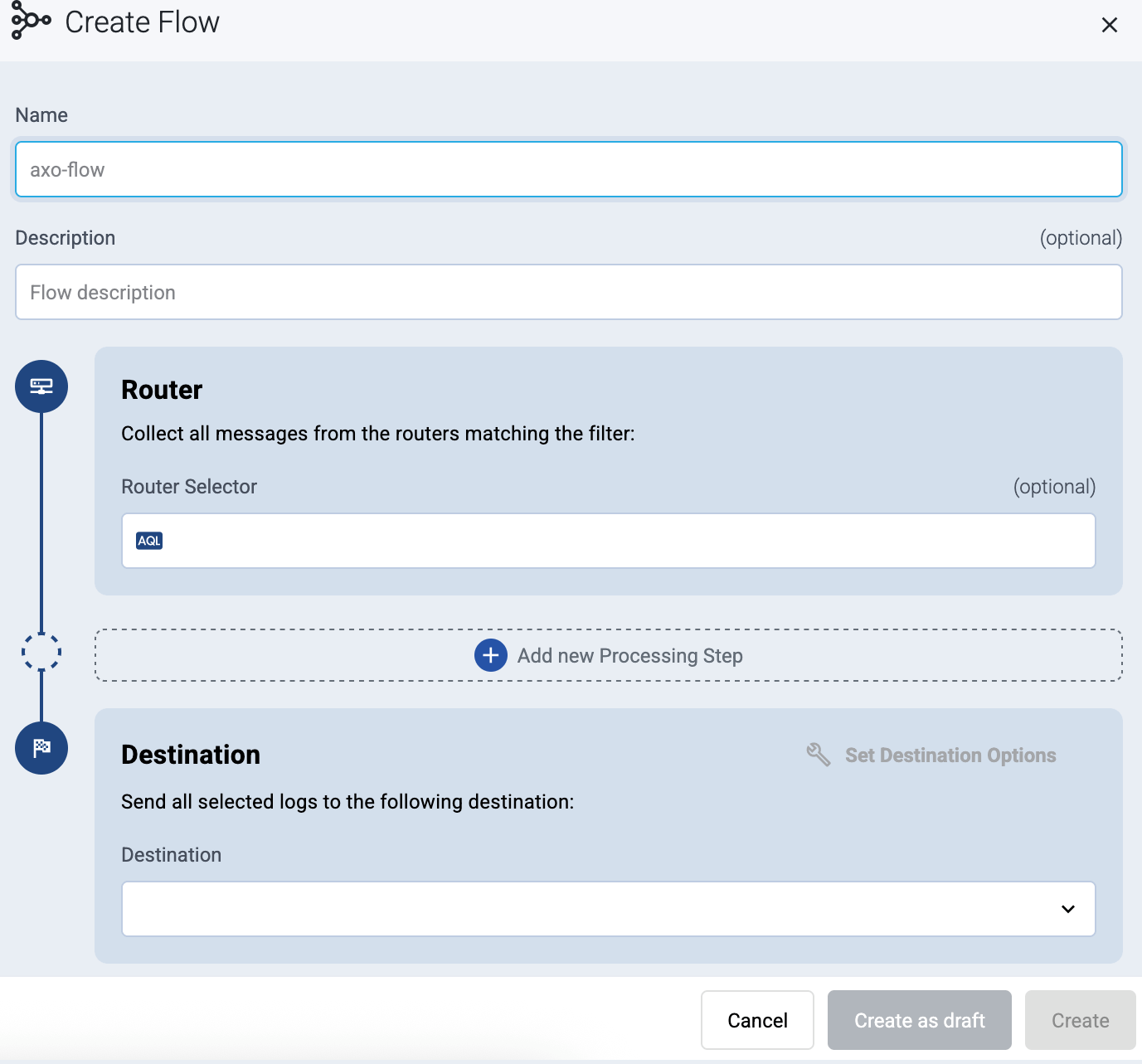

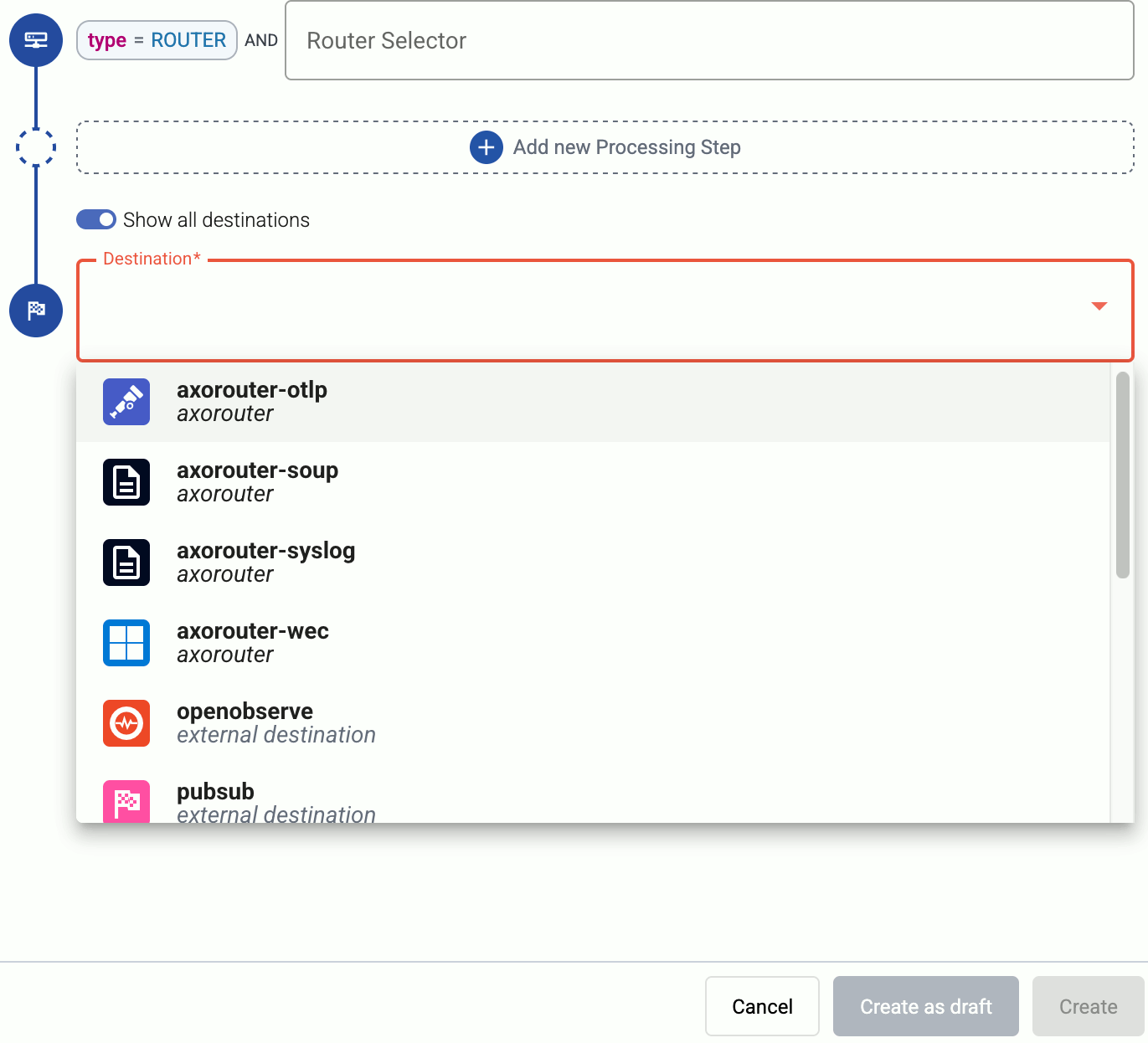

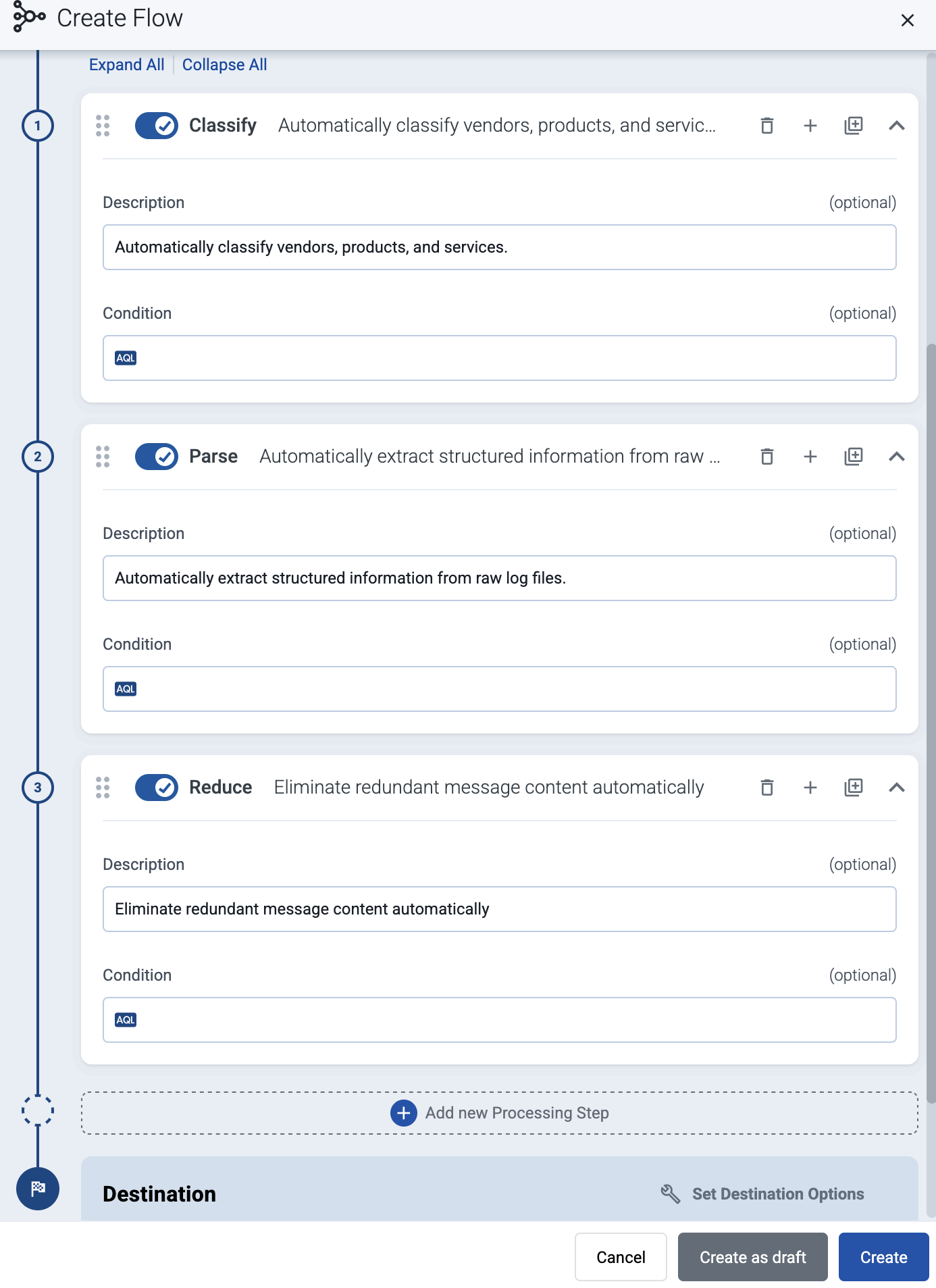

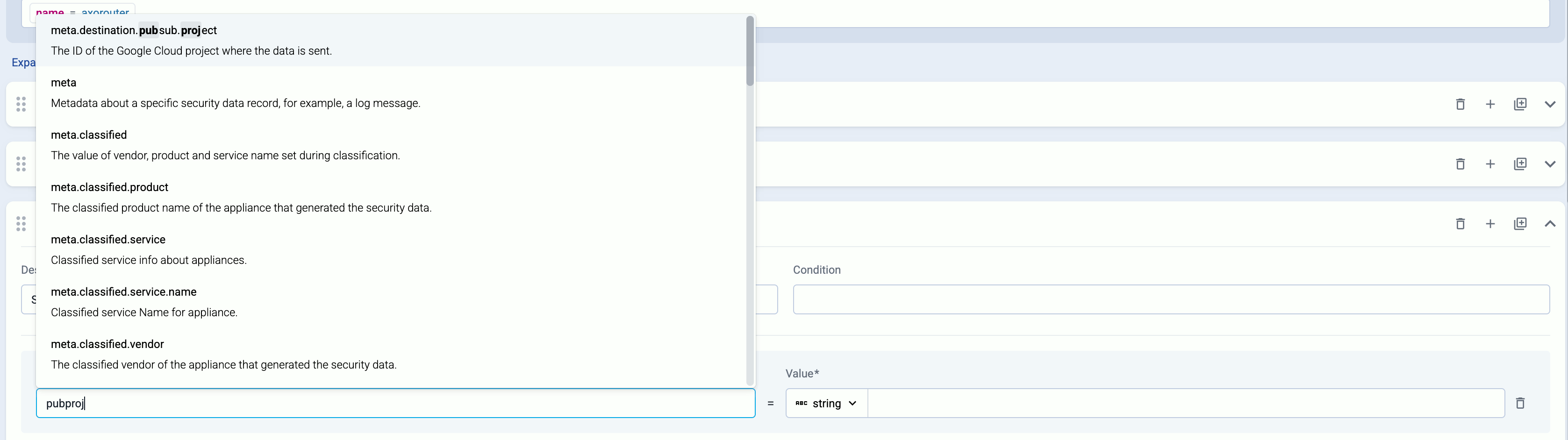

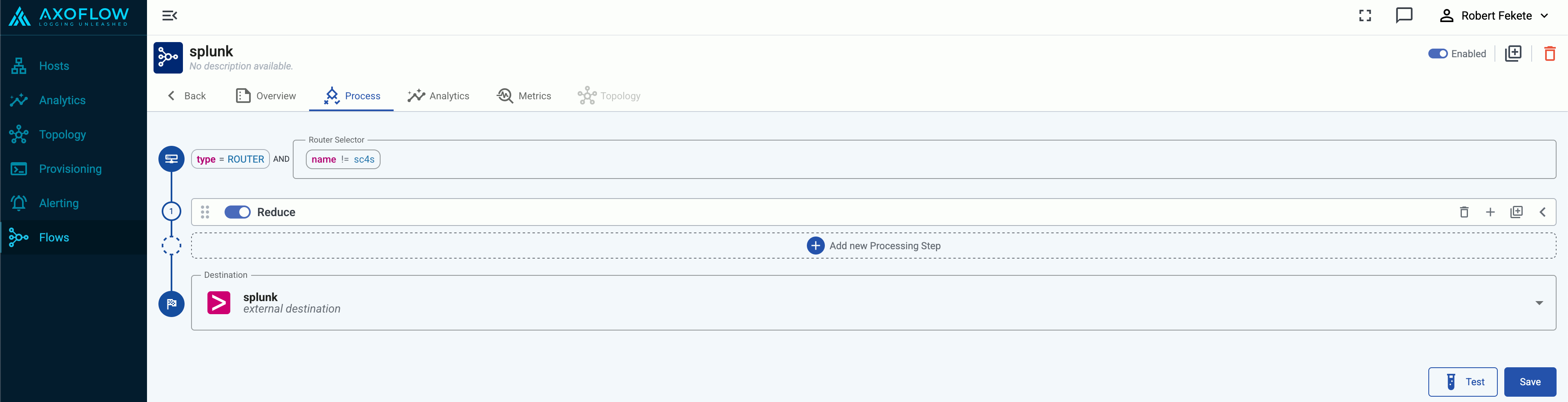

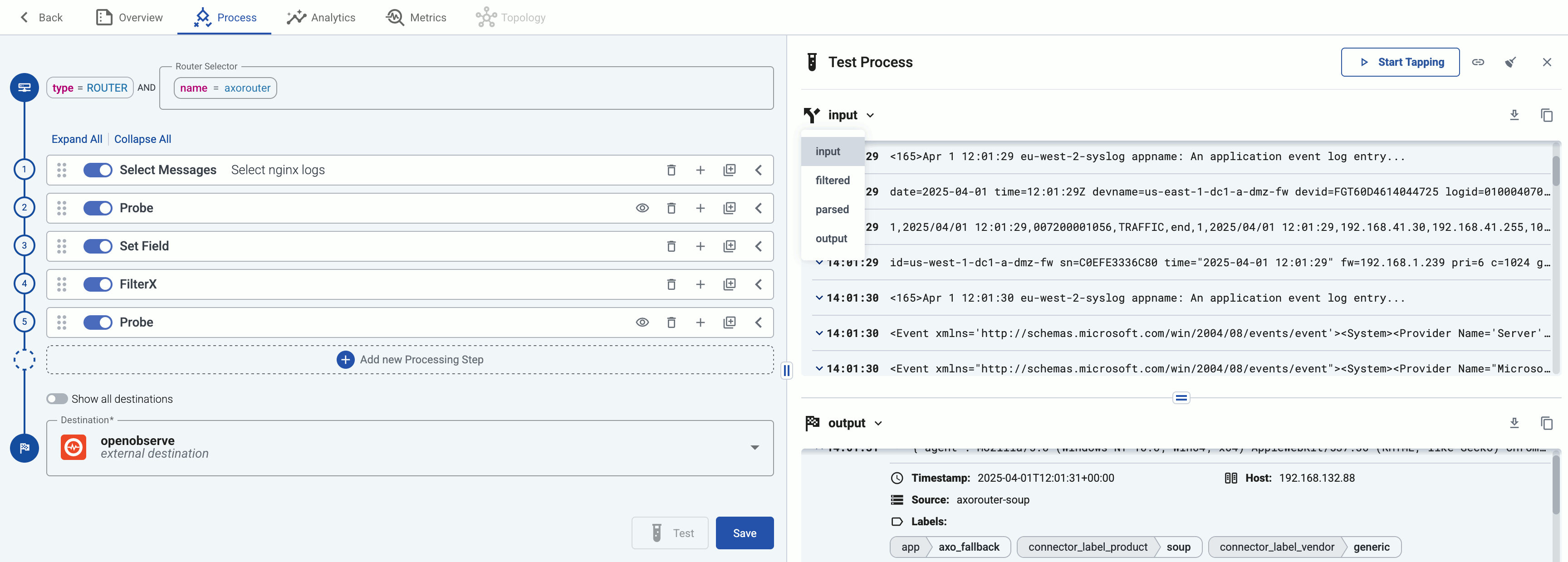

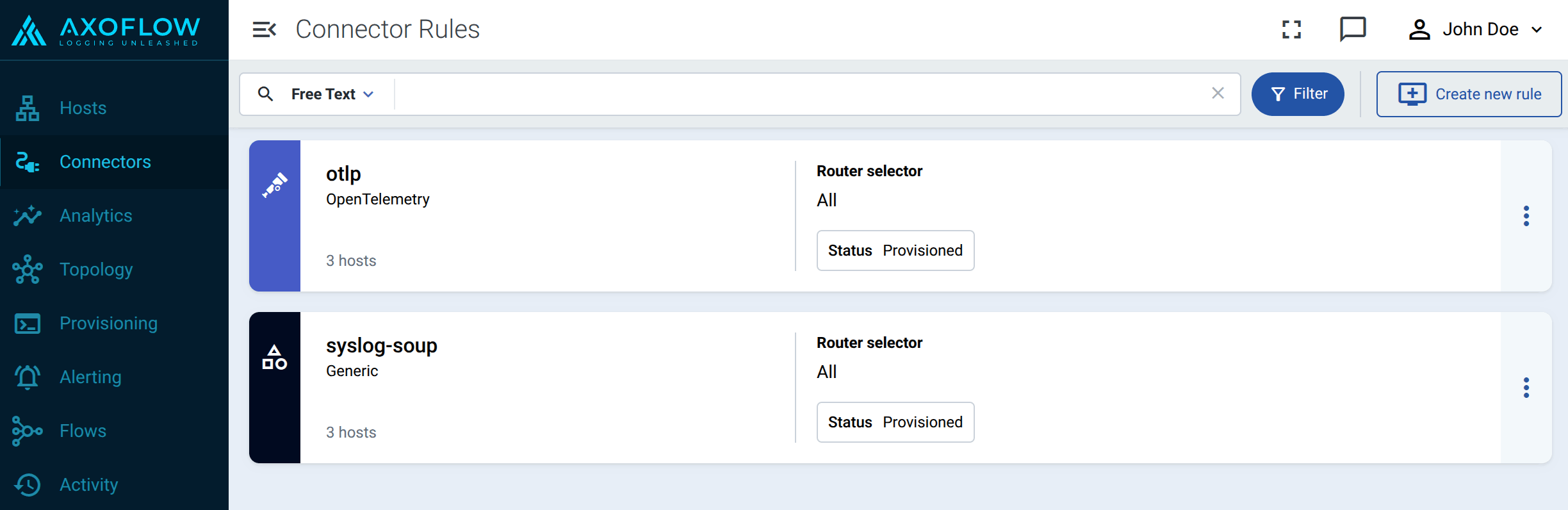

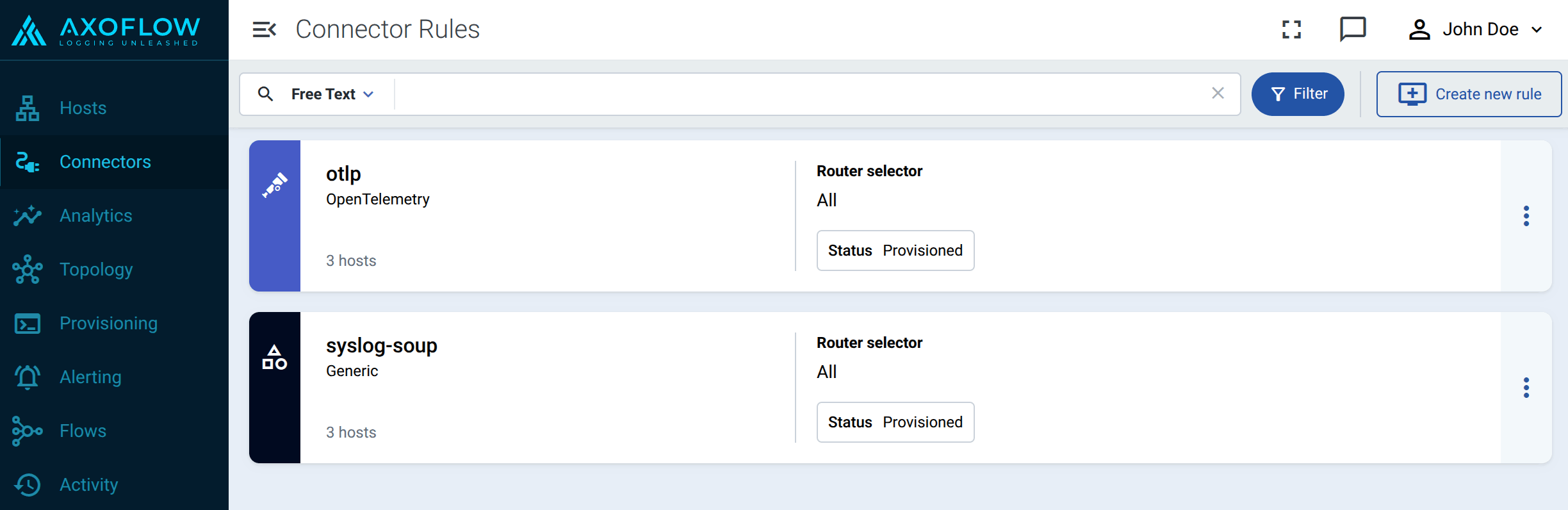

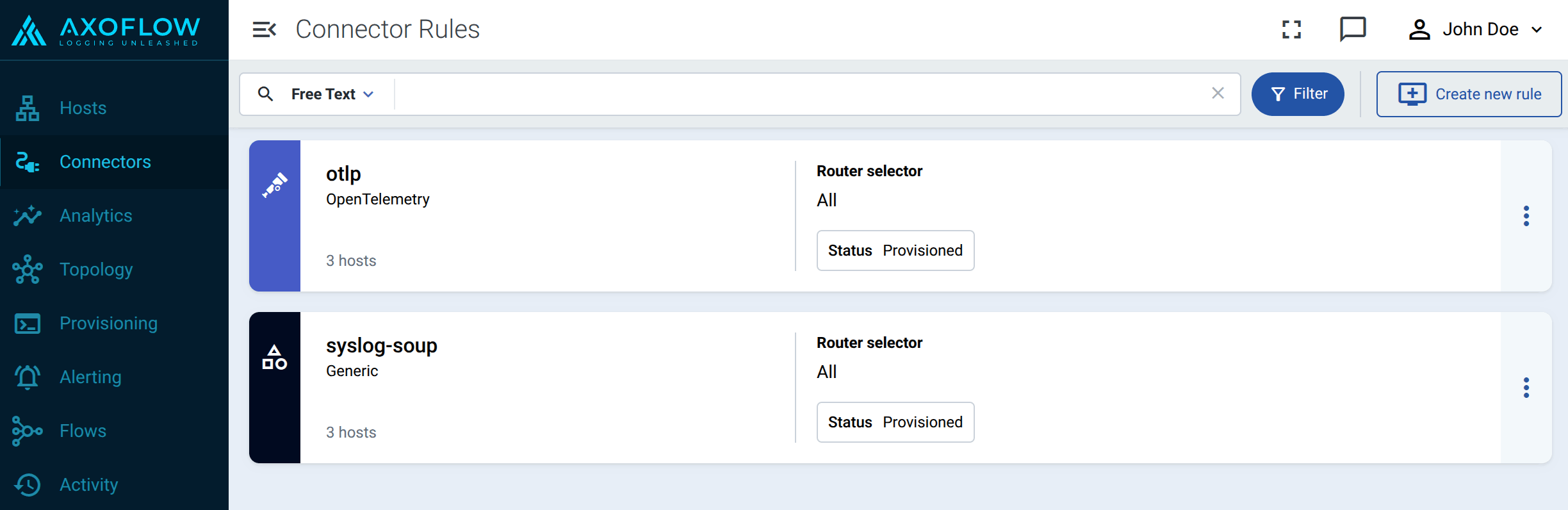

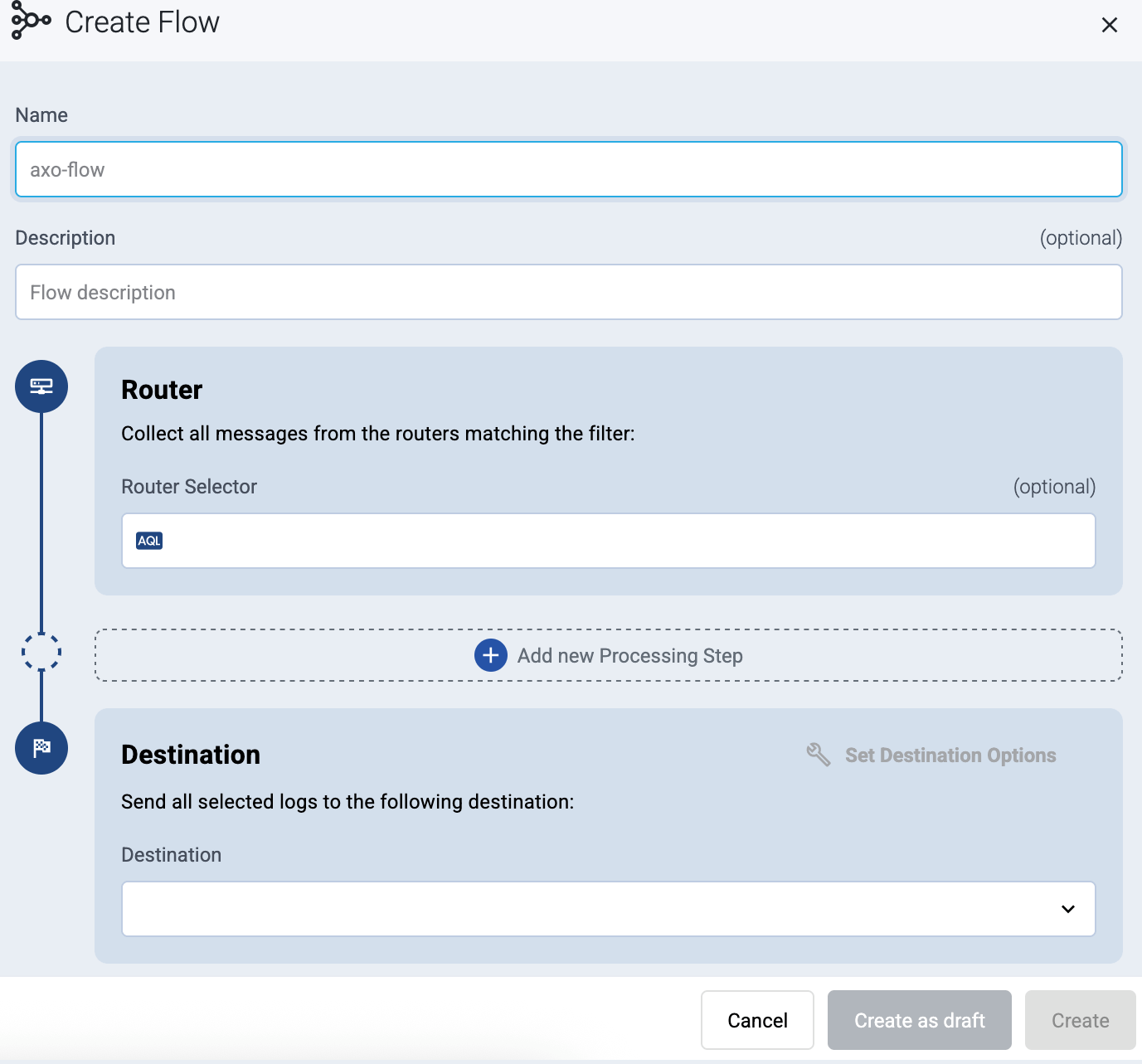

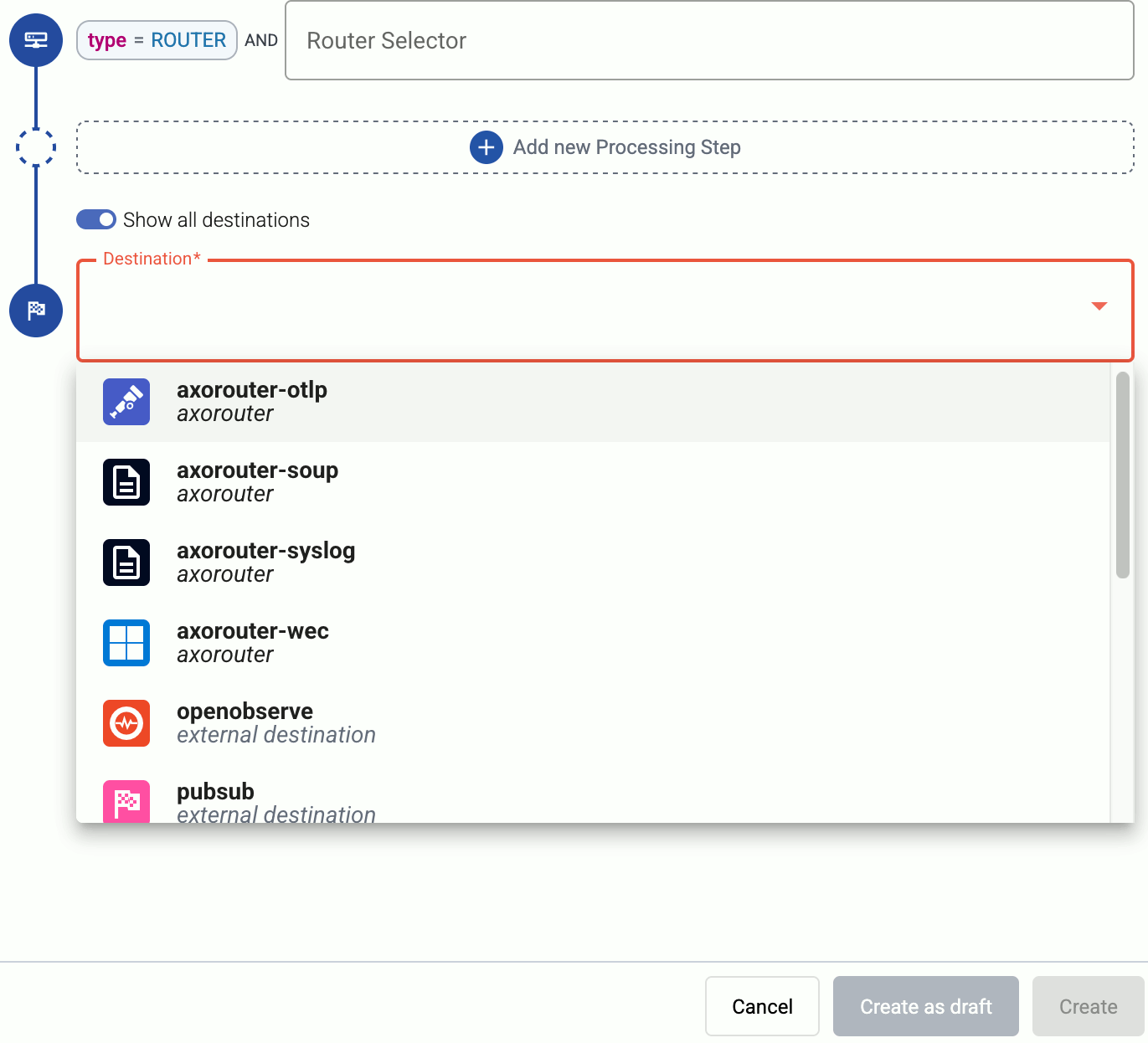

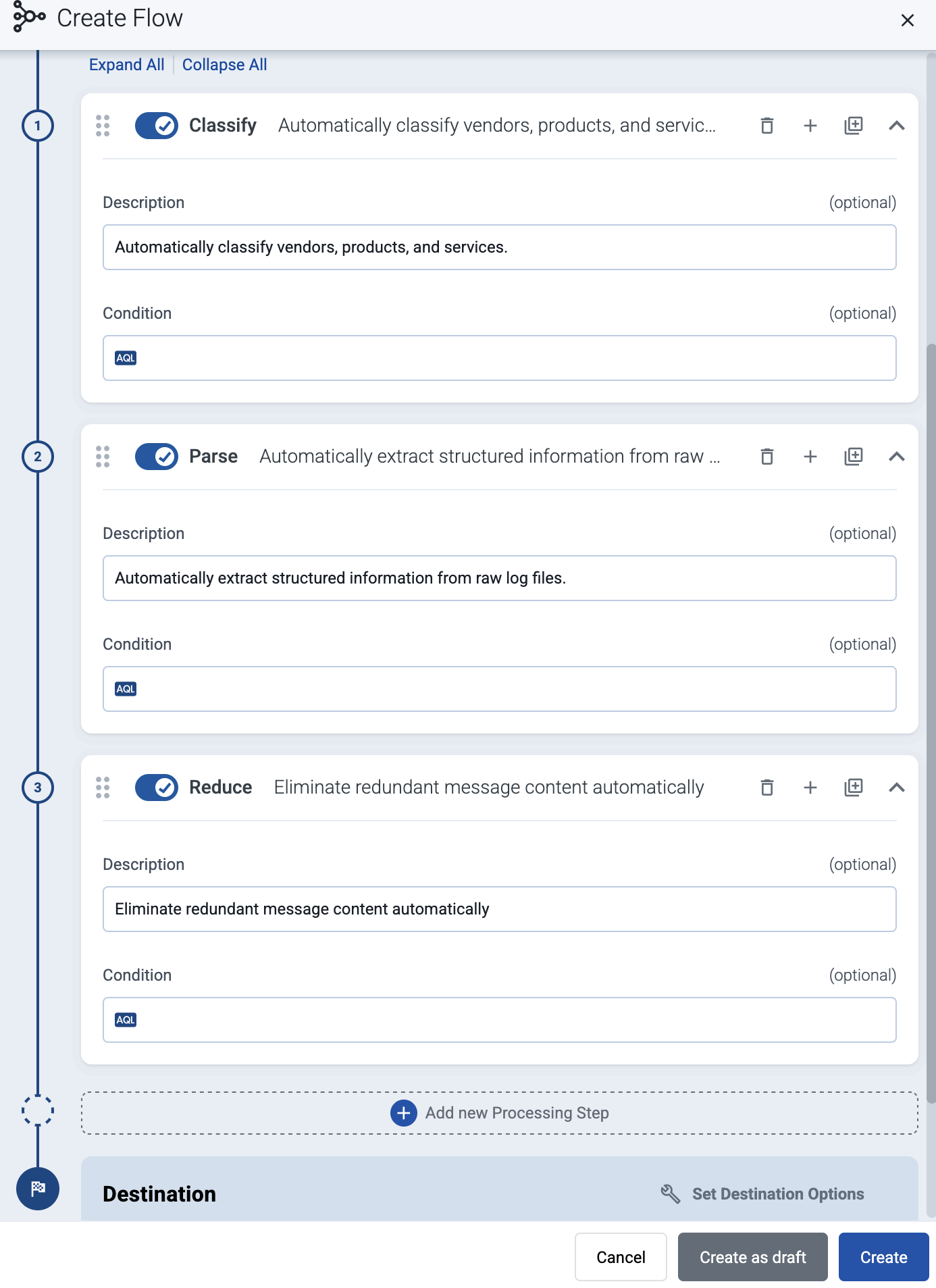

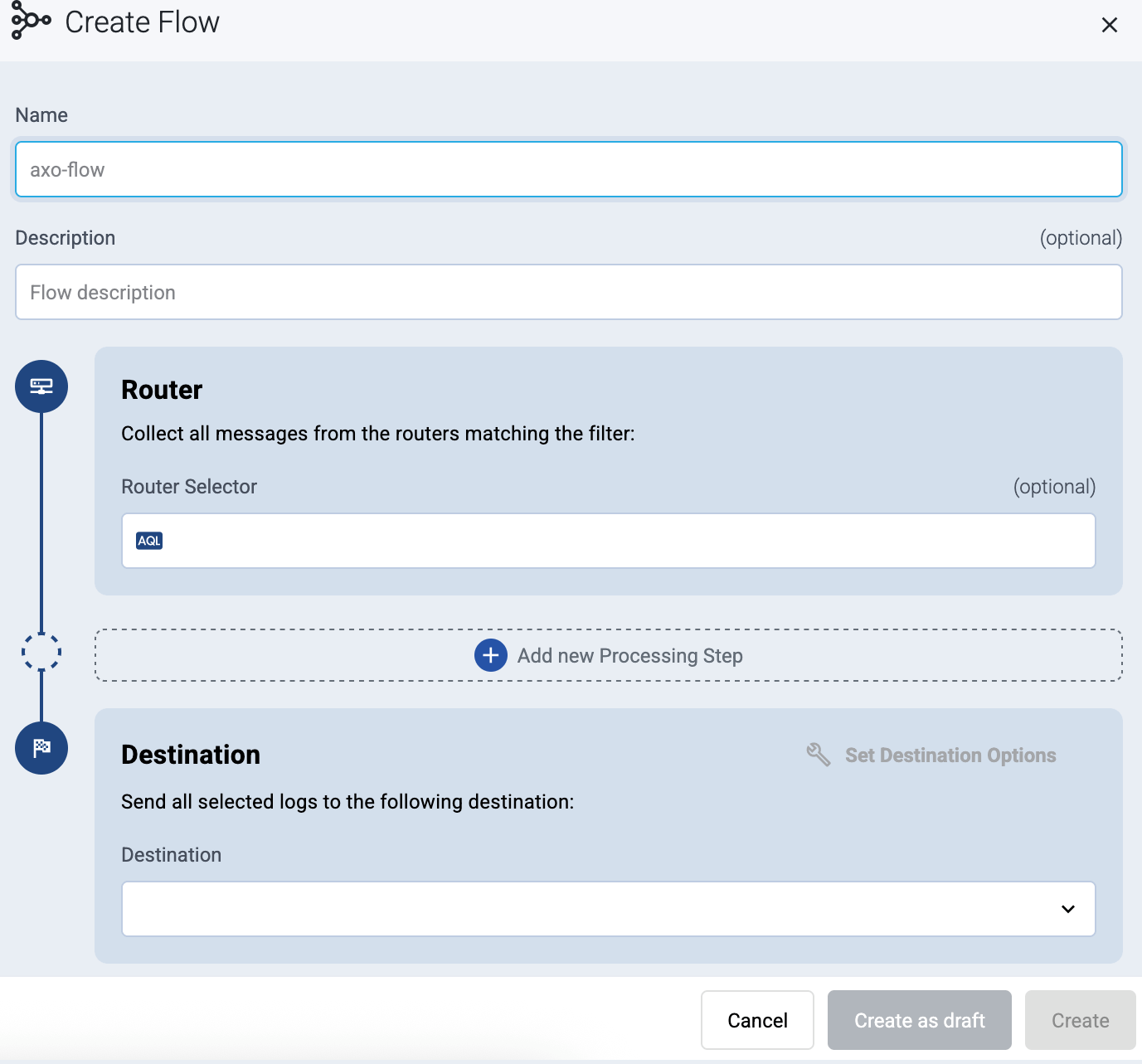

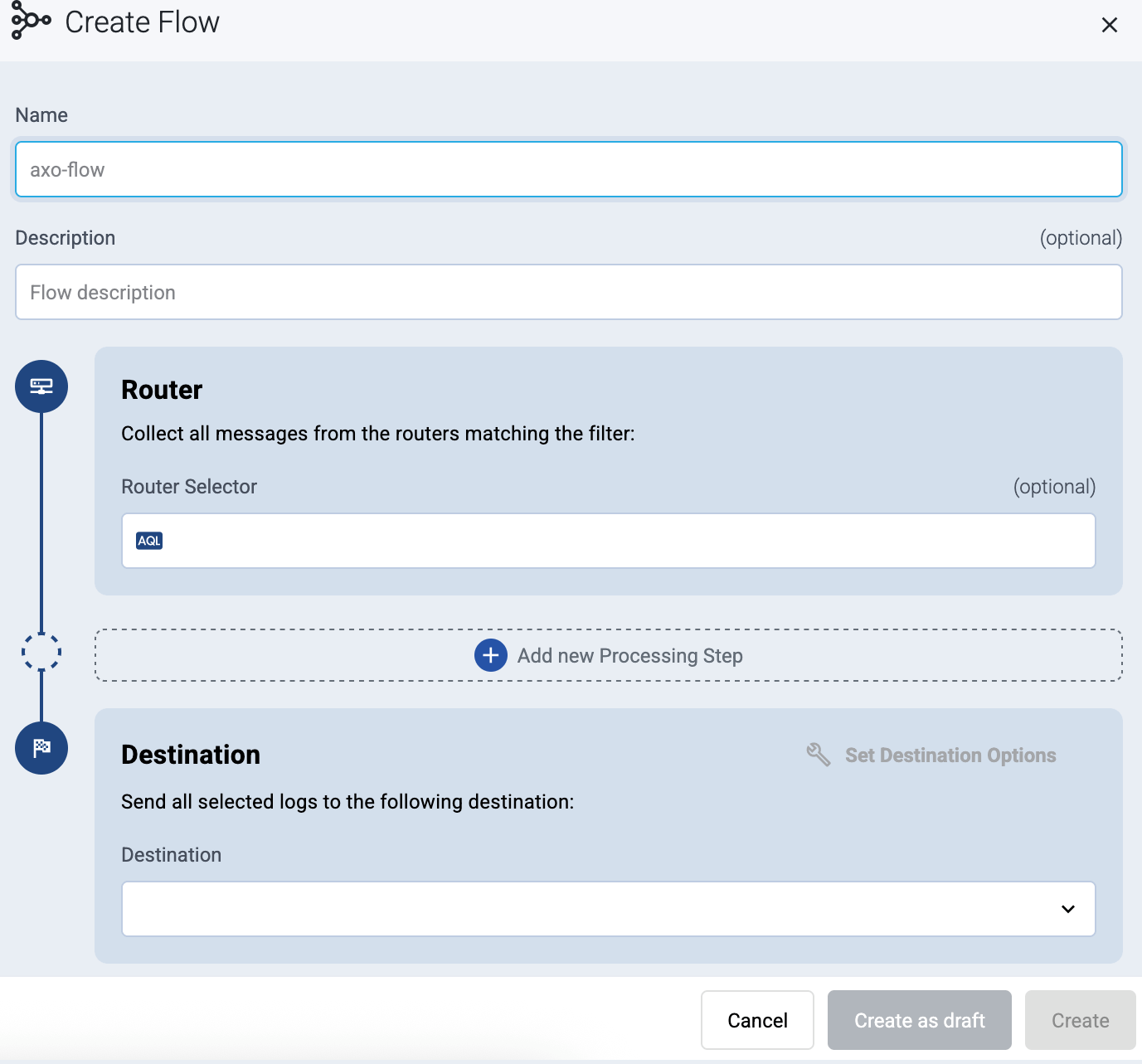

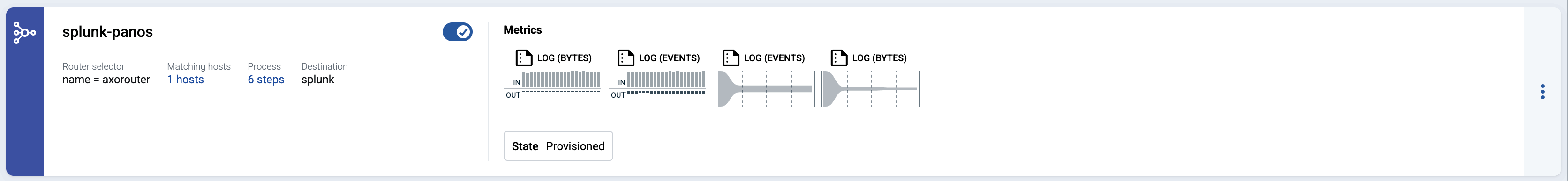

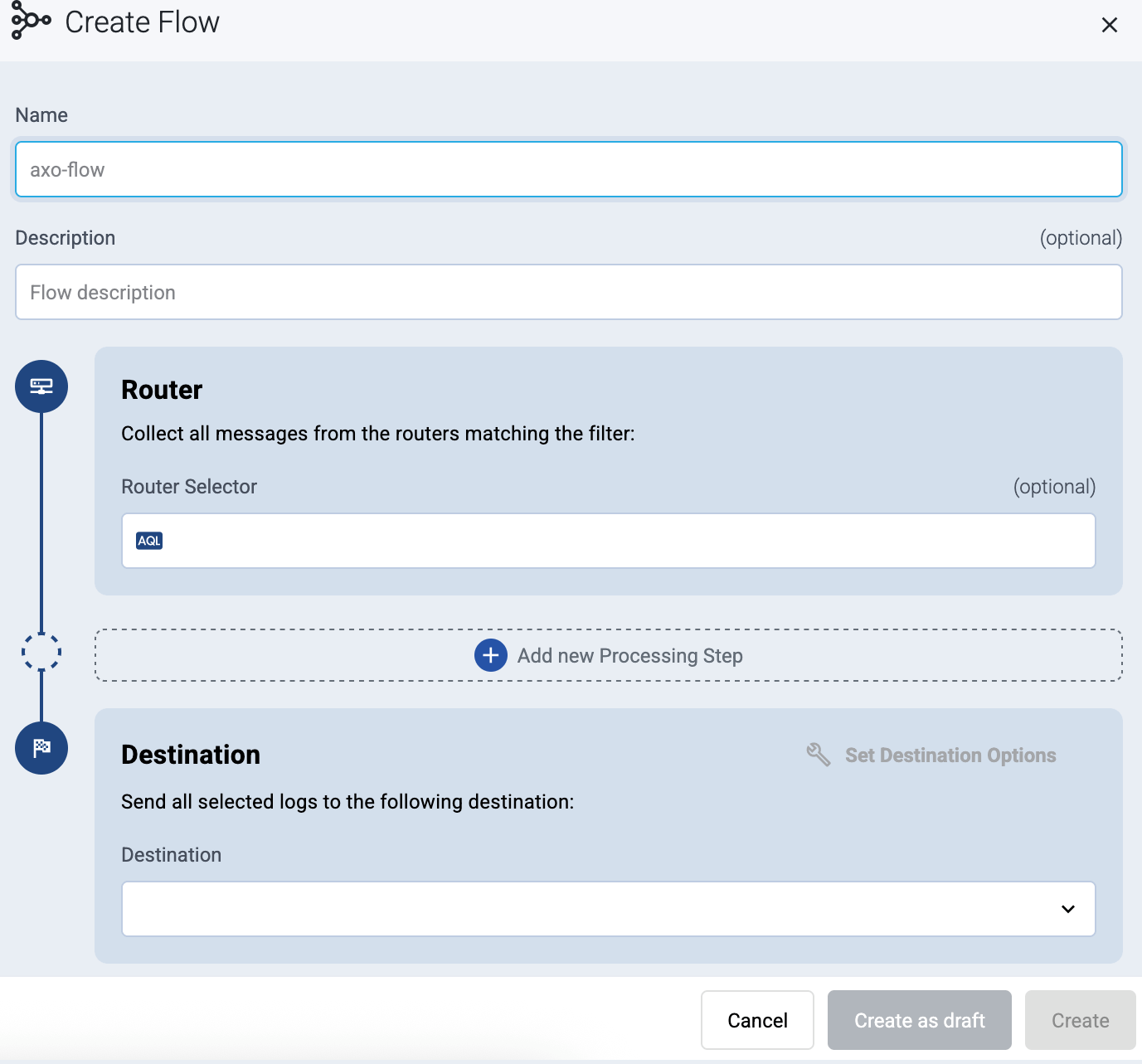

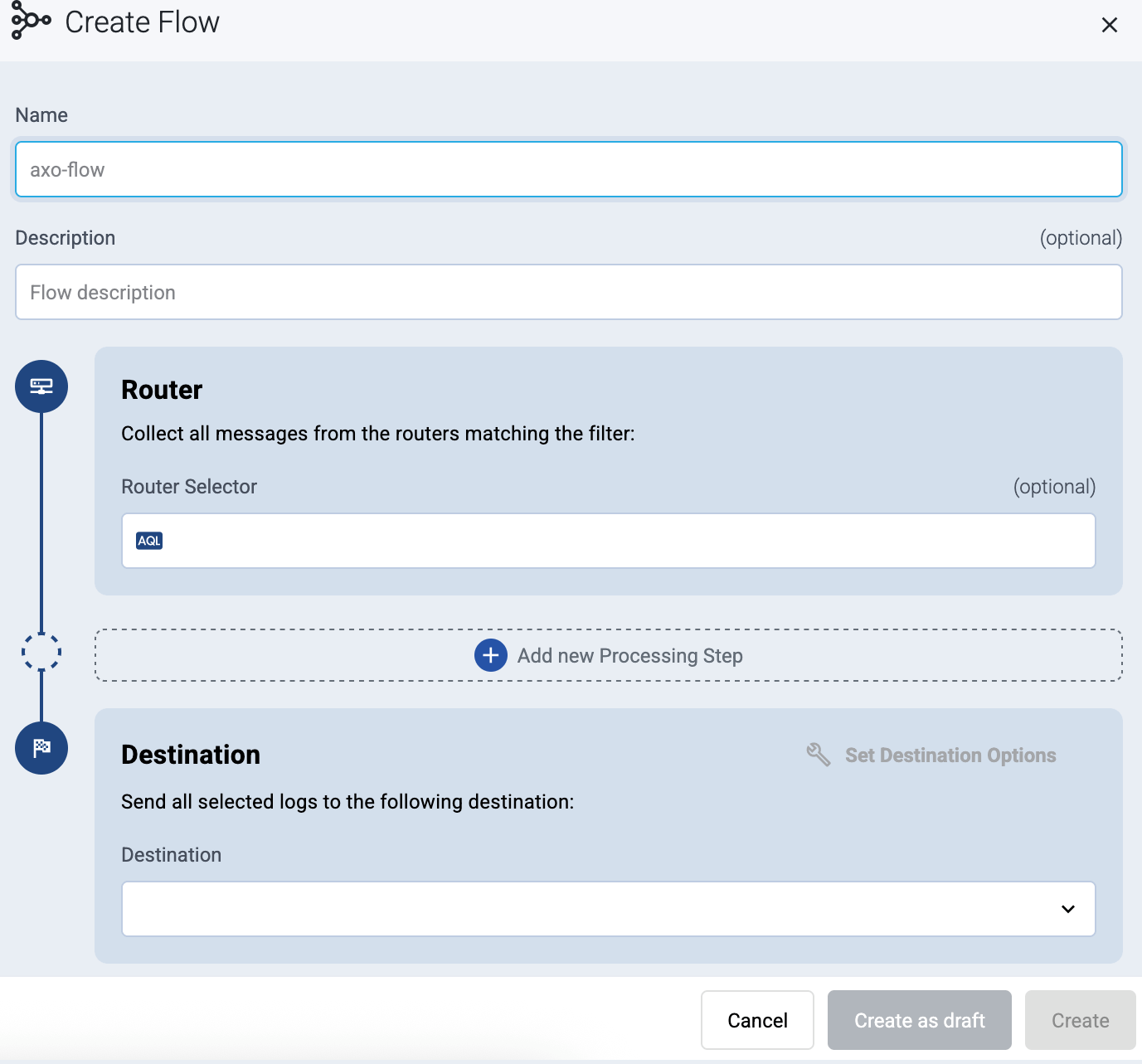

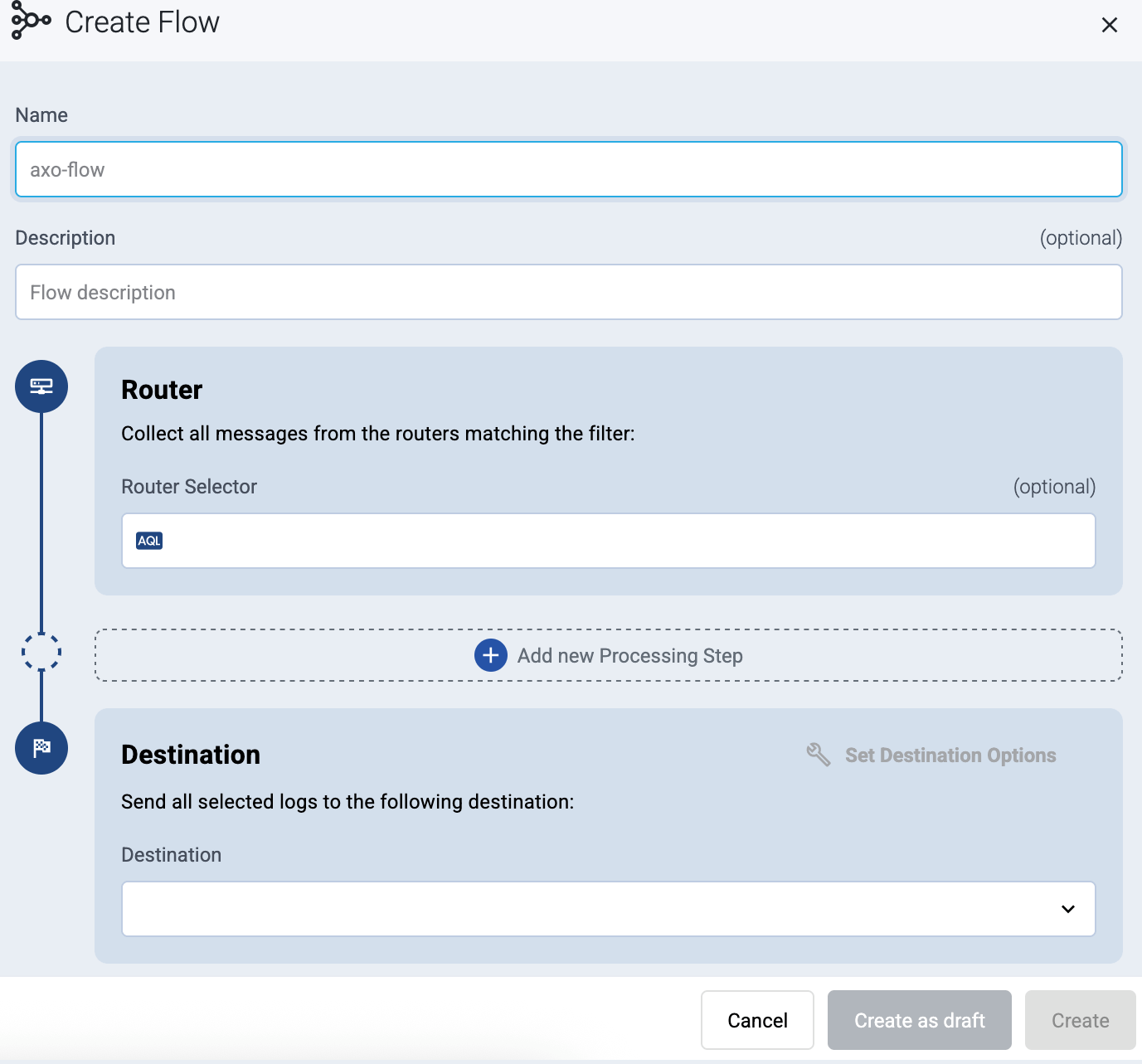

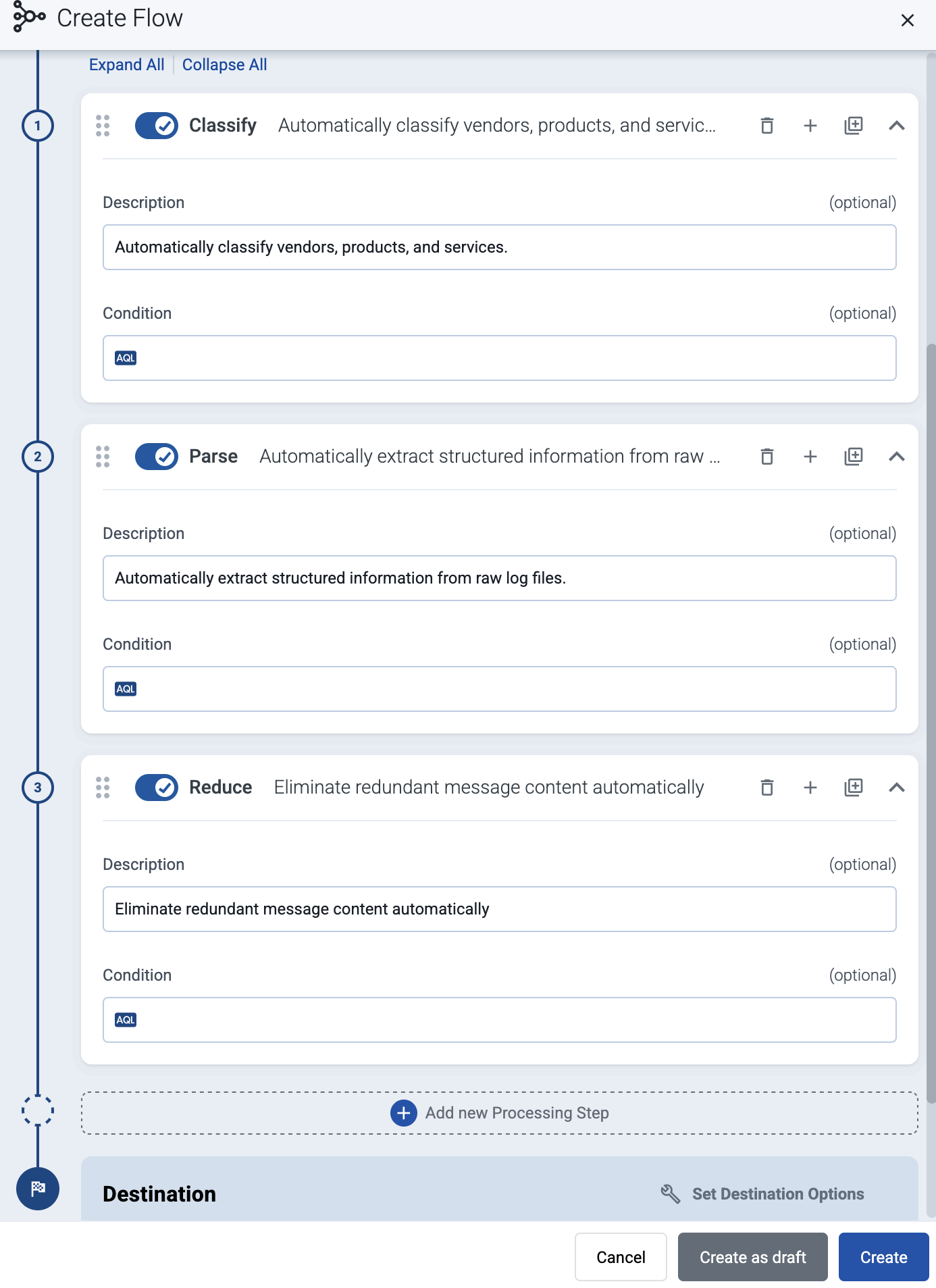

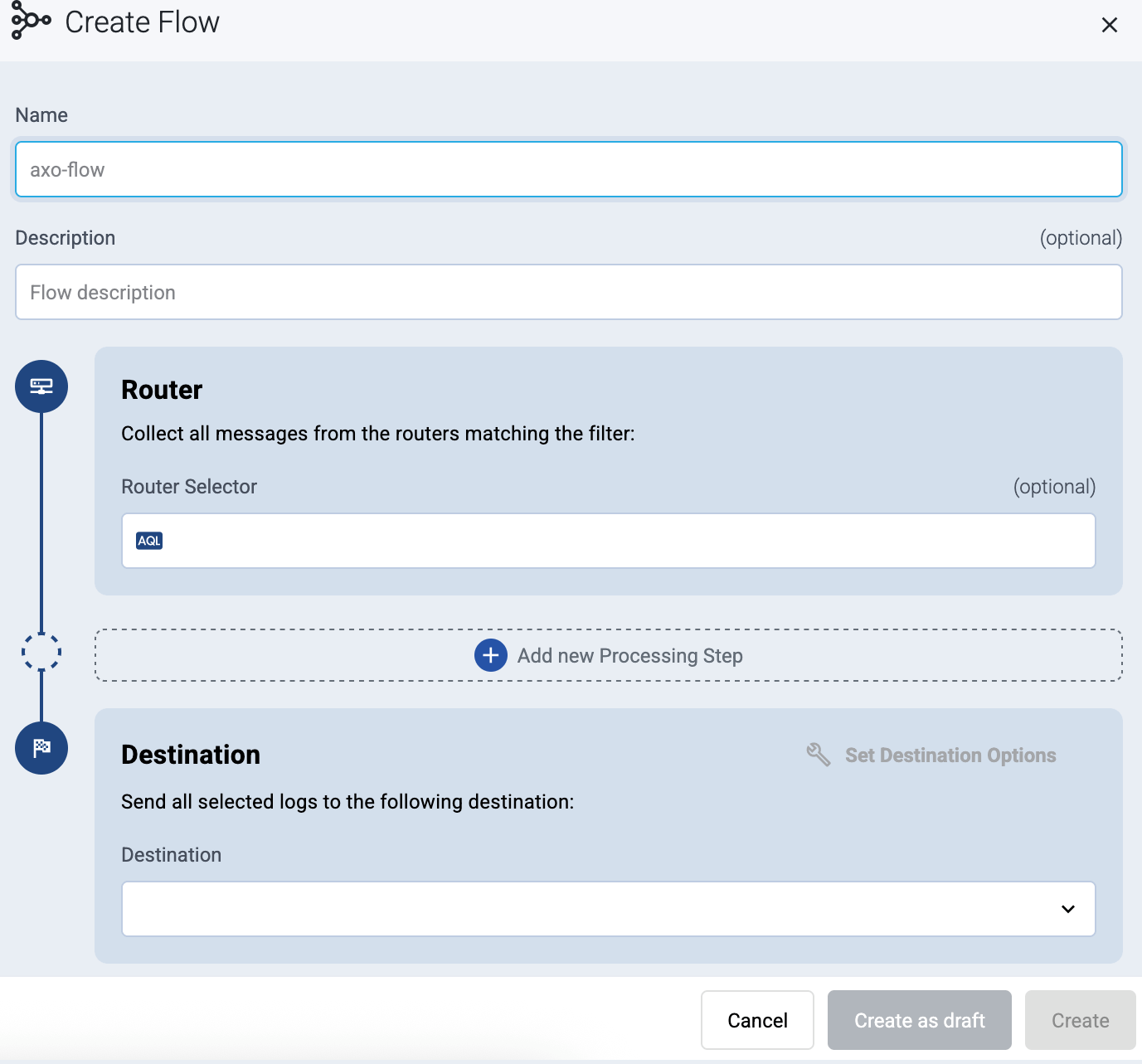

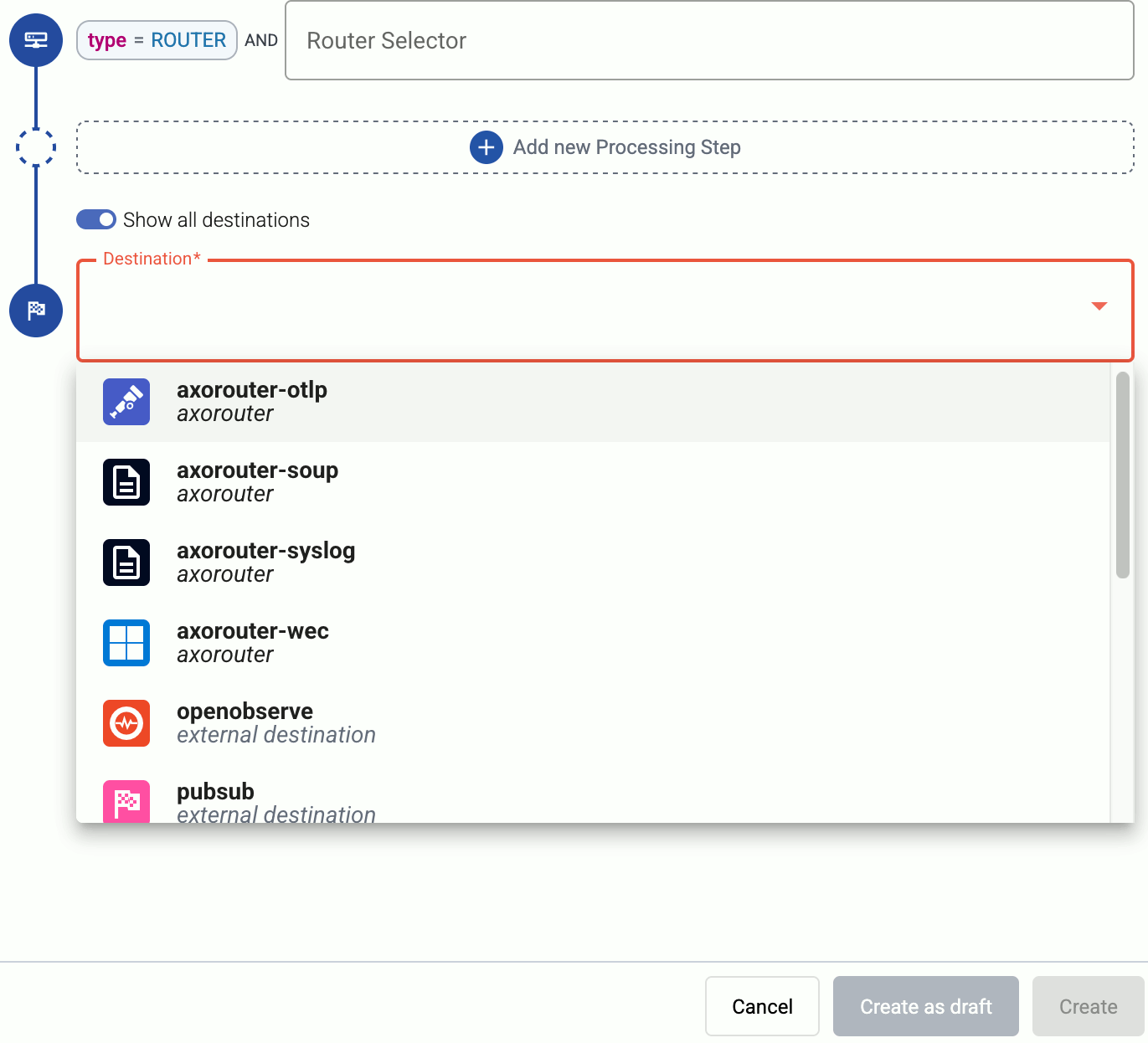

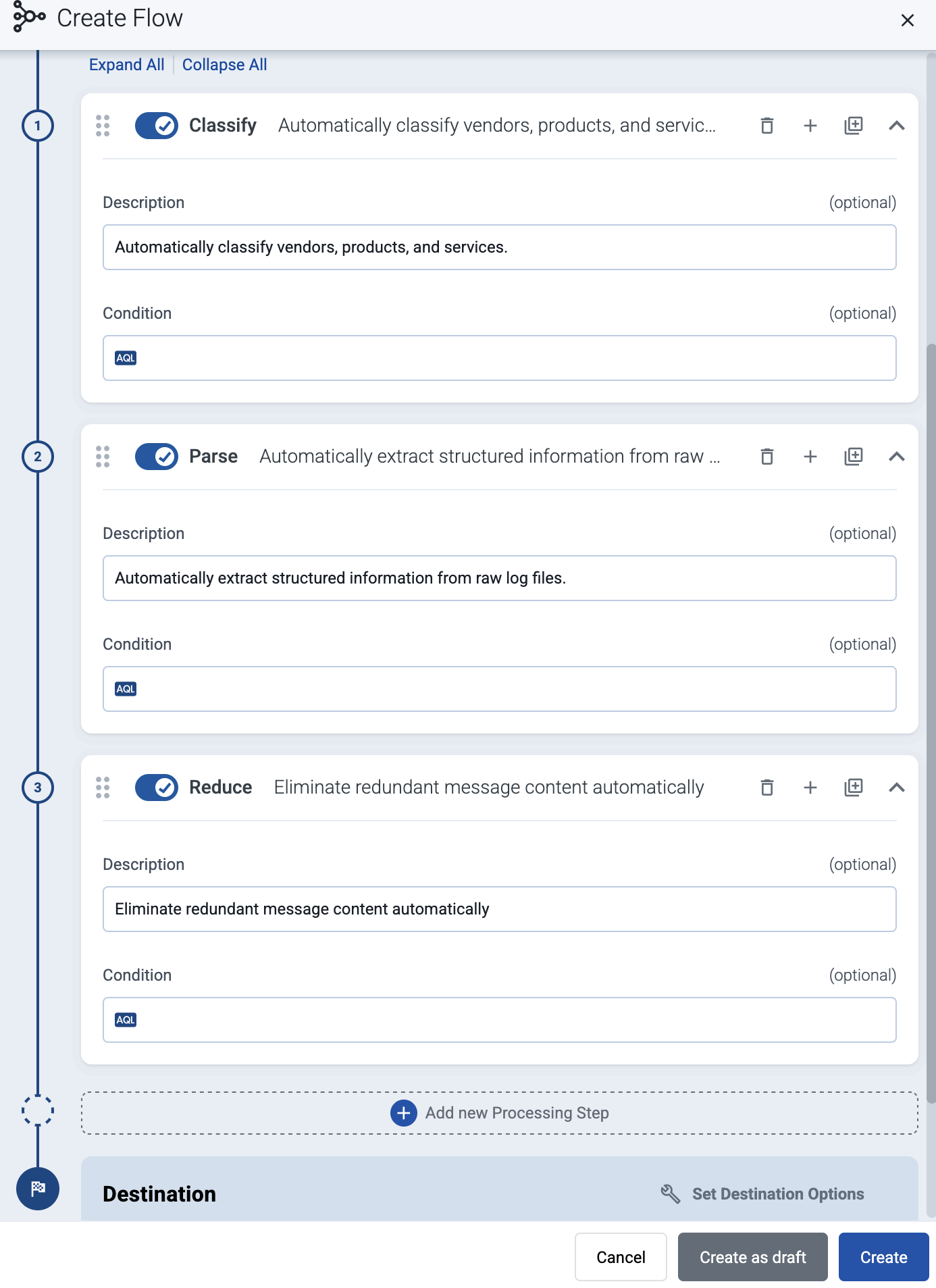

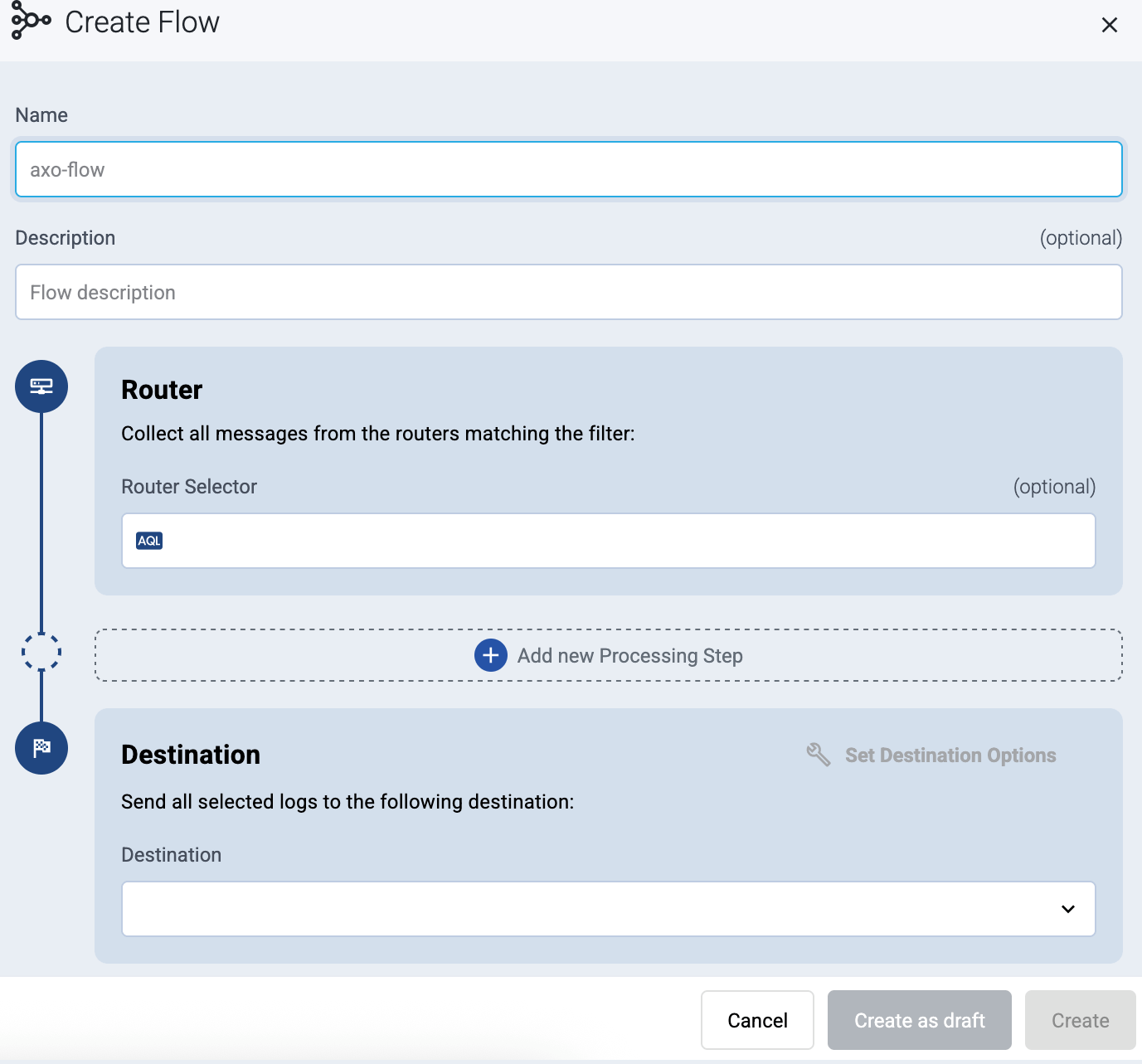

Create a flow

Create a flow to route the traffic from your AxoRouter instance to your destination.

-

Select Flows.

-

Select Create New Flow.

-

Enter a name for the flow, for example, my-test-flow.

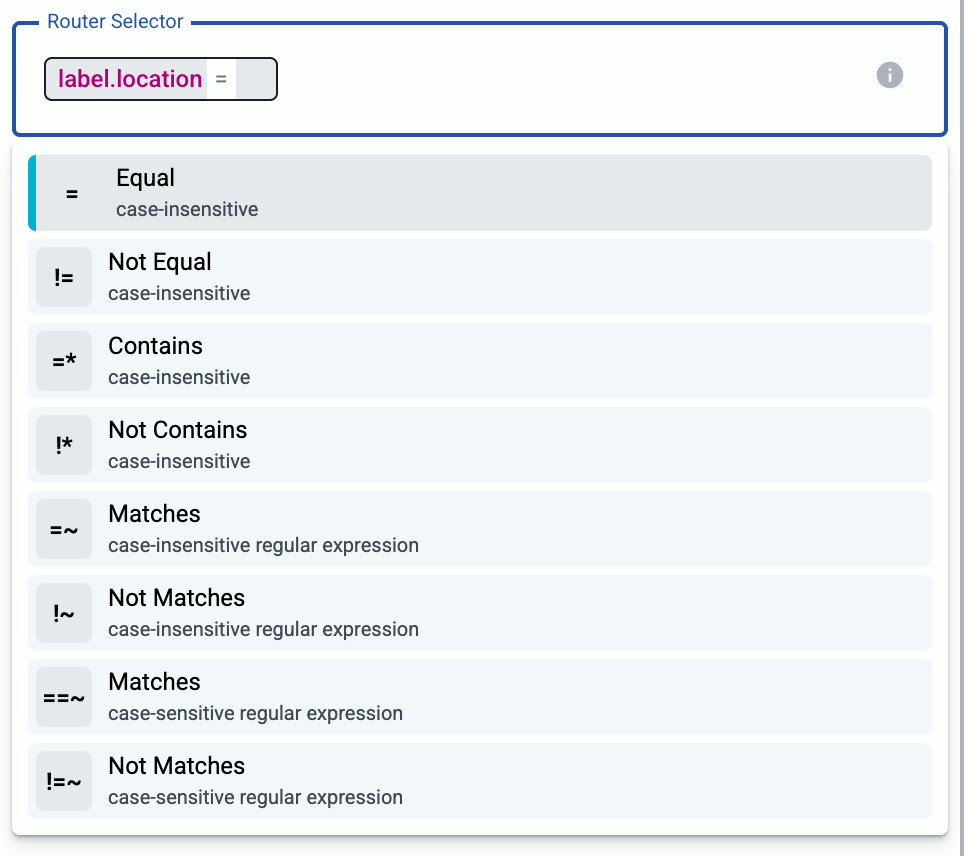

-

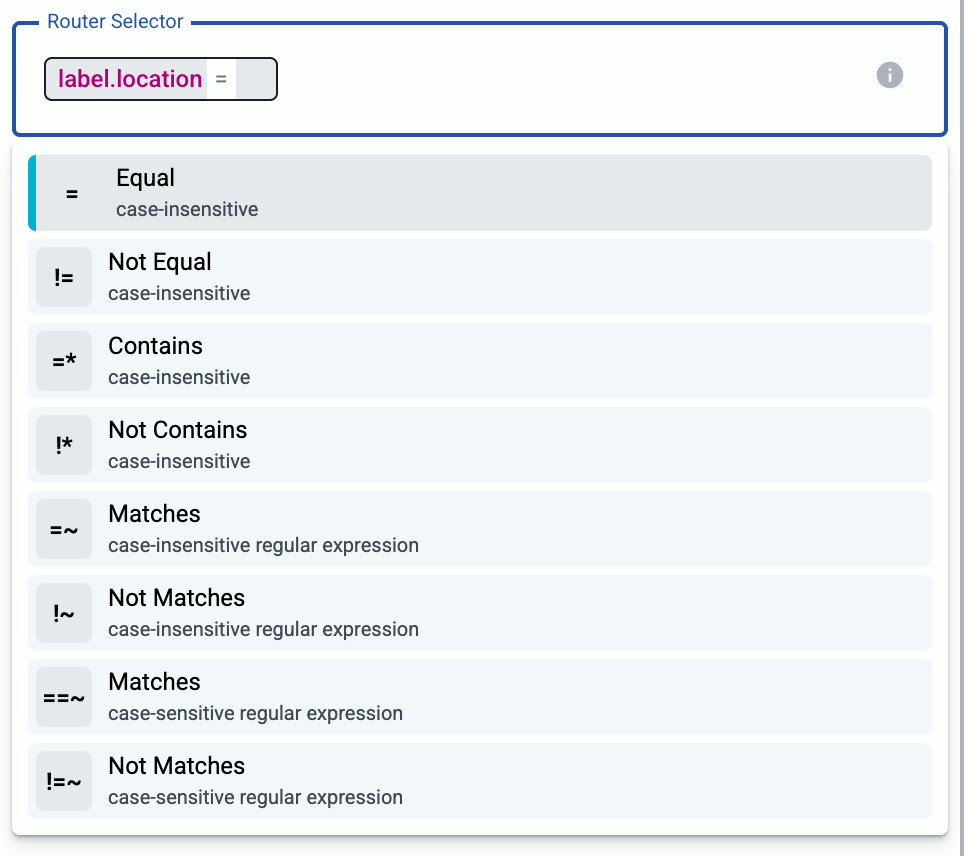

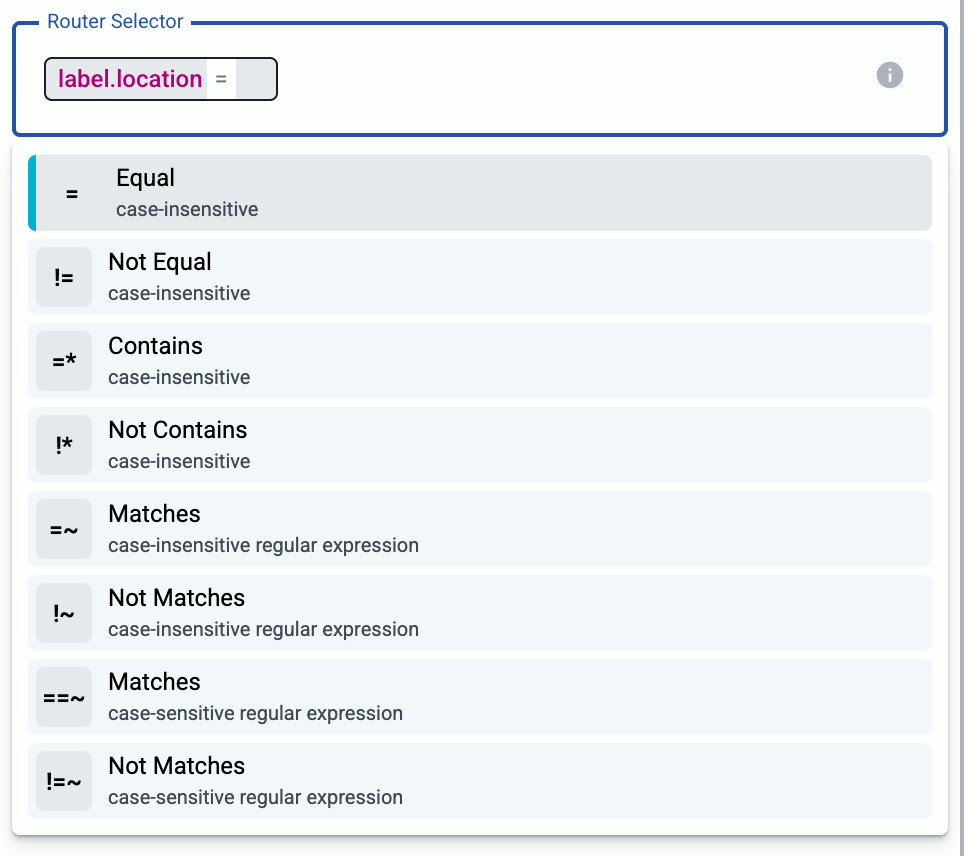

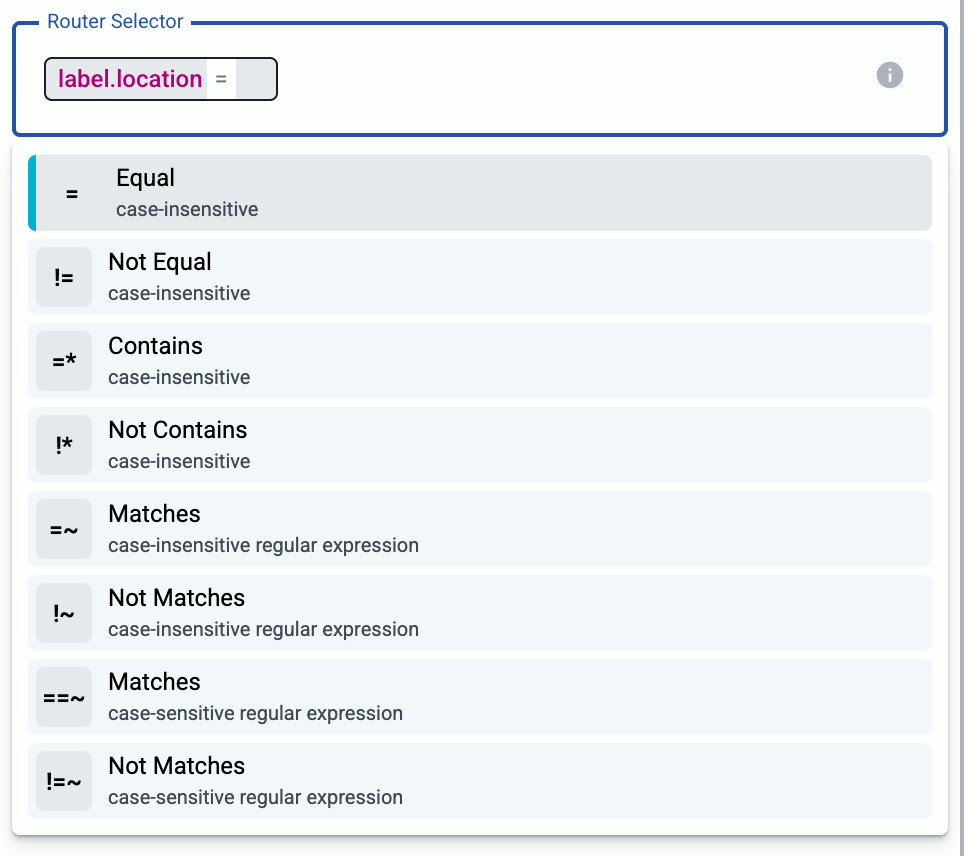

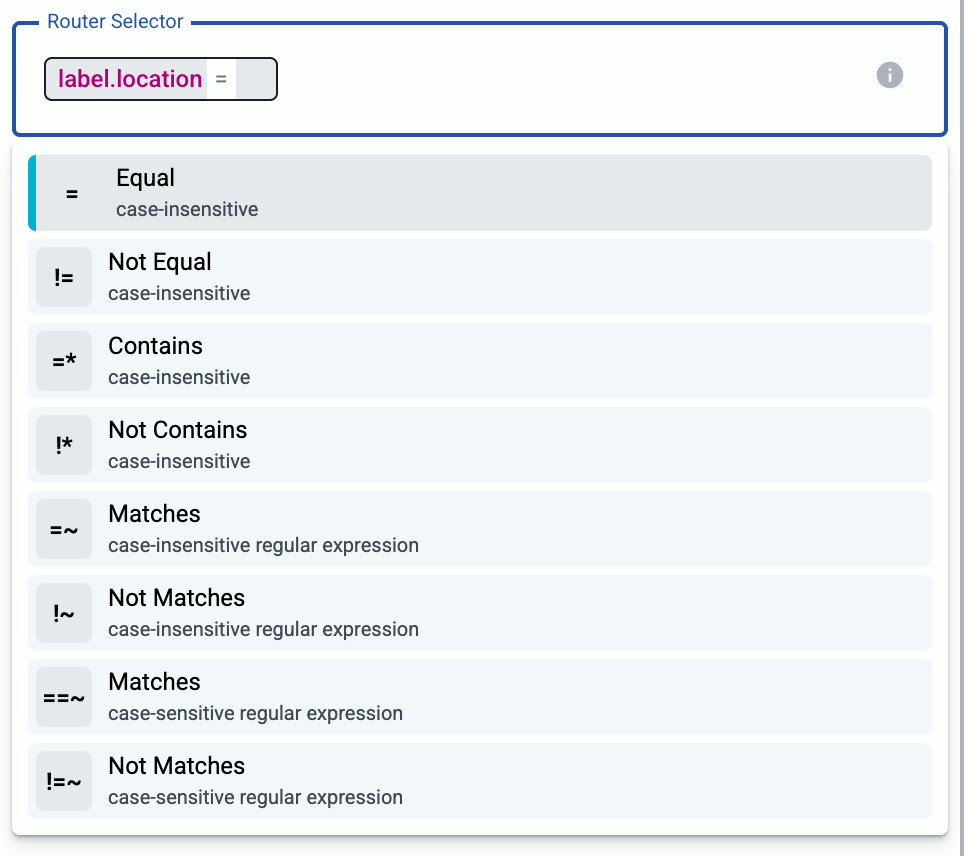

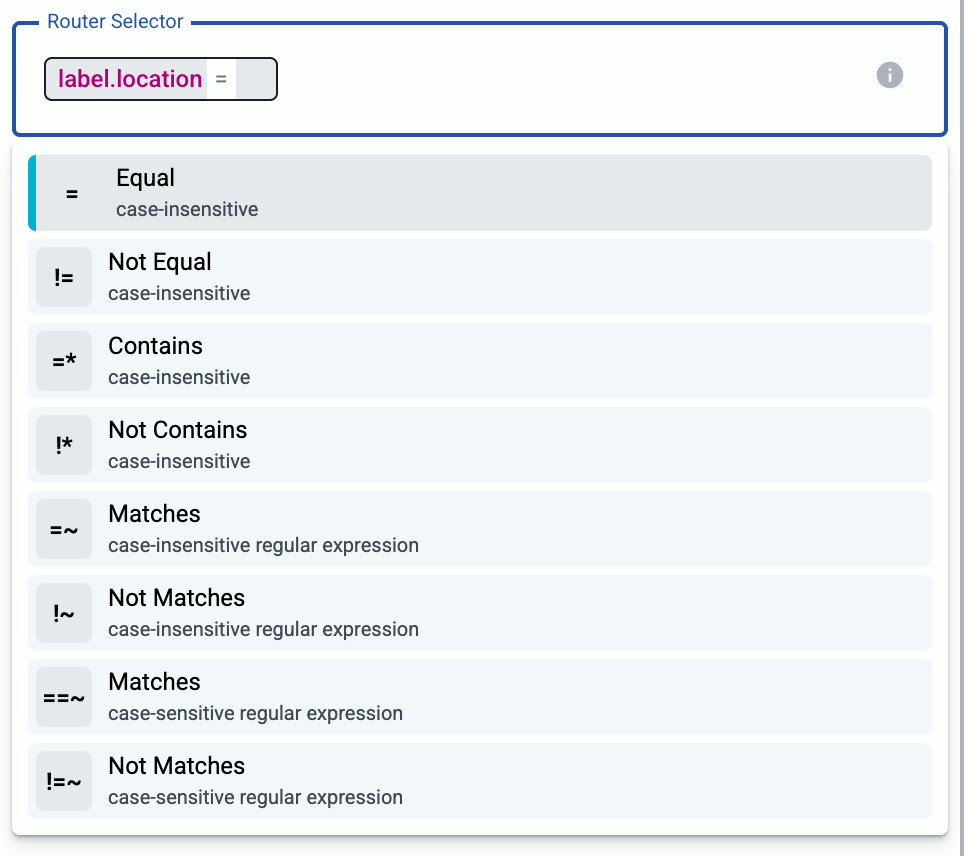

In the Router Selector field, enter an expression that matches the router(s) you want to apply the flow to. To select a specific router, use a name selector, for example, name = my-axorouter-hostname.

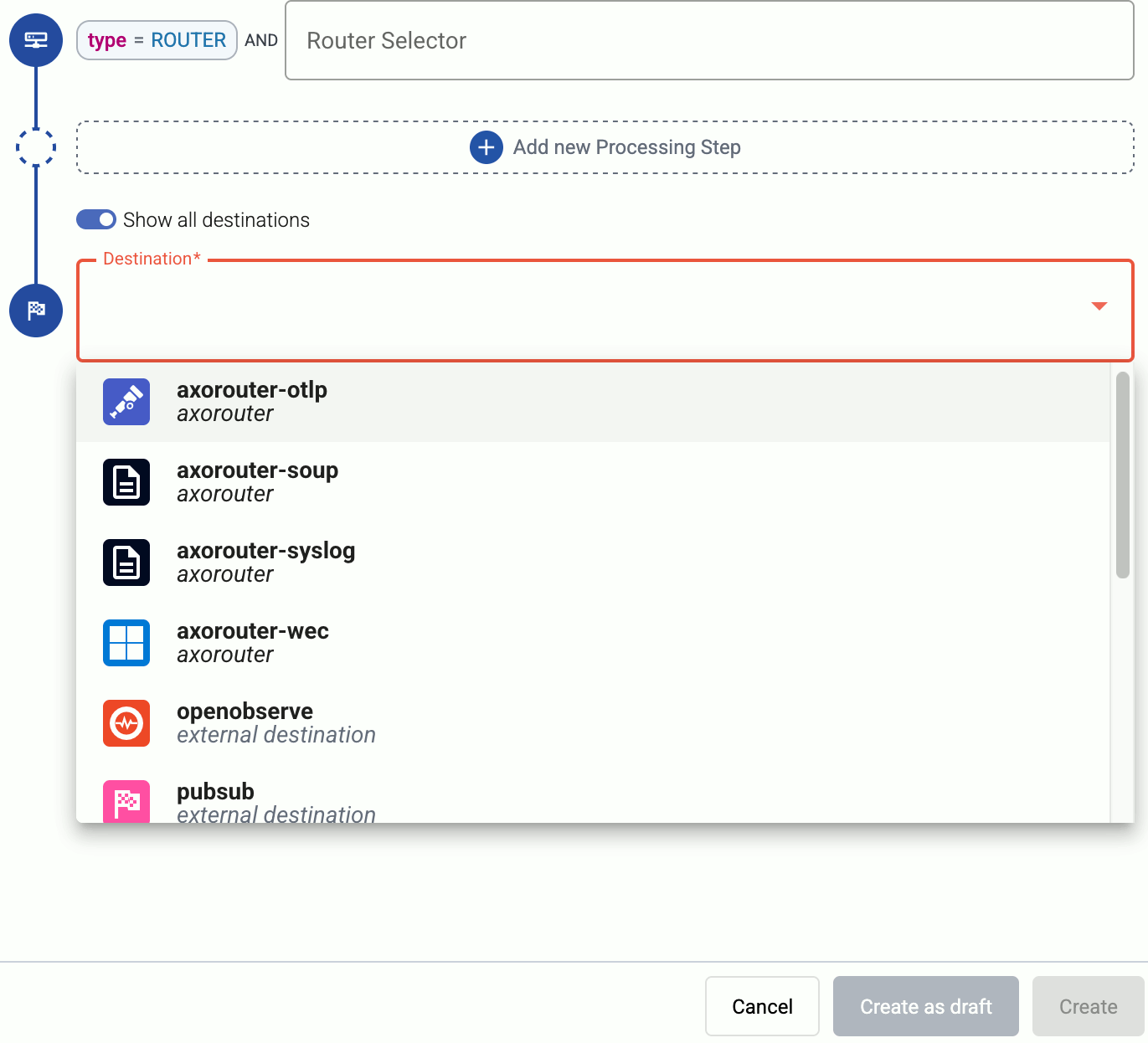

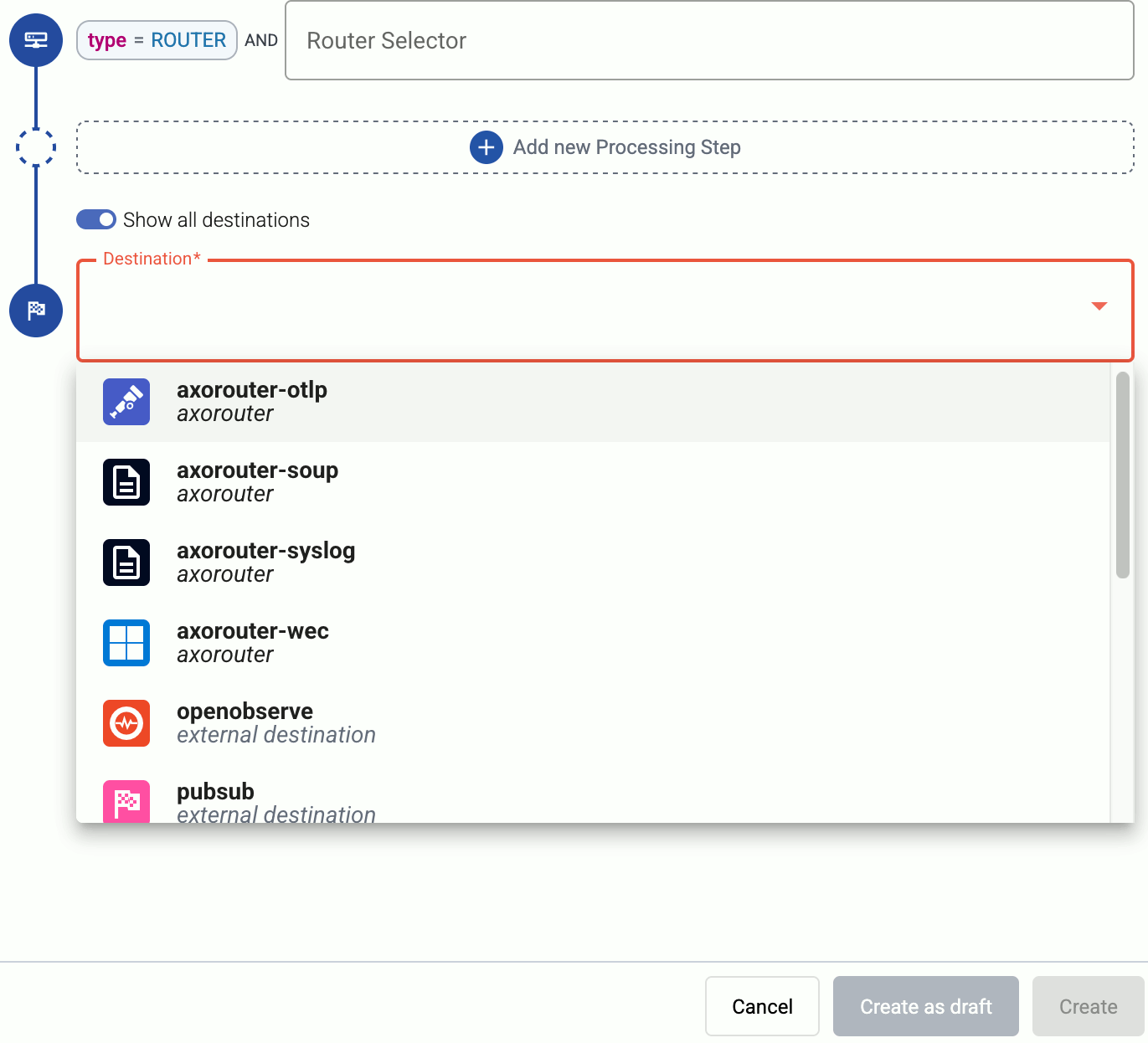

-

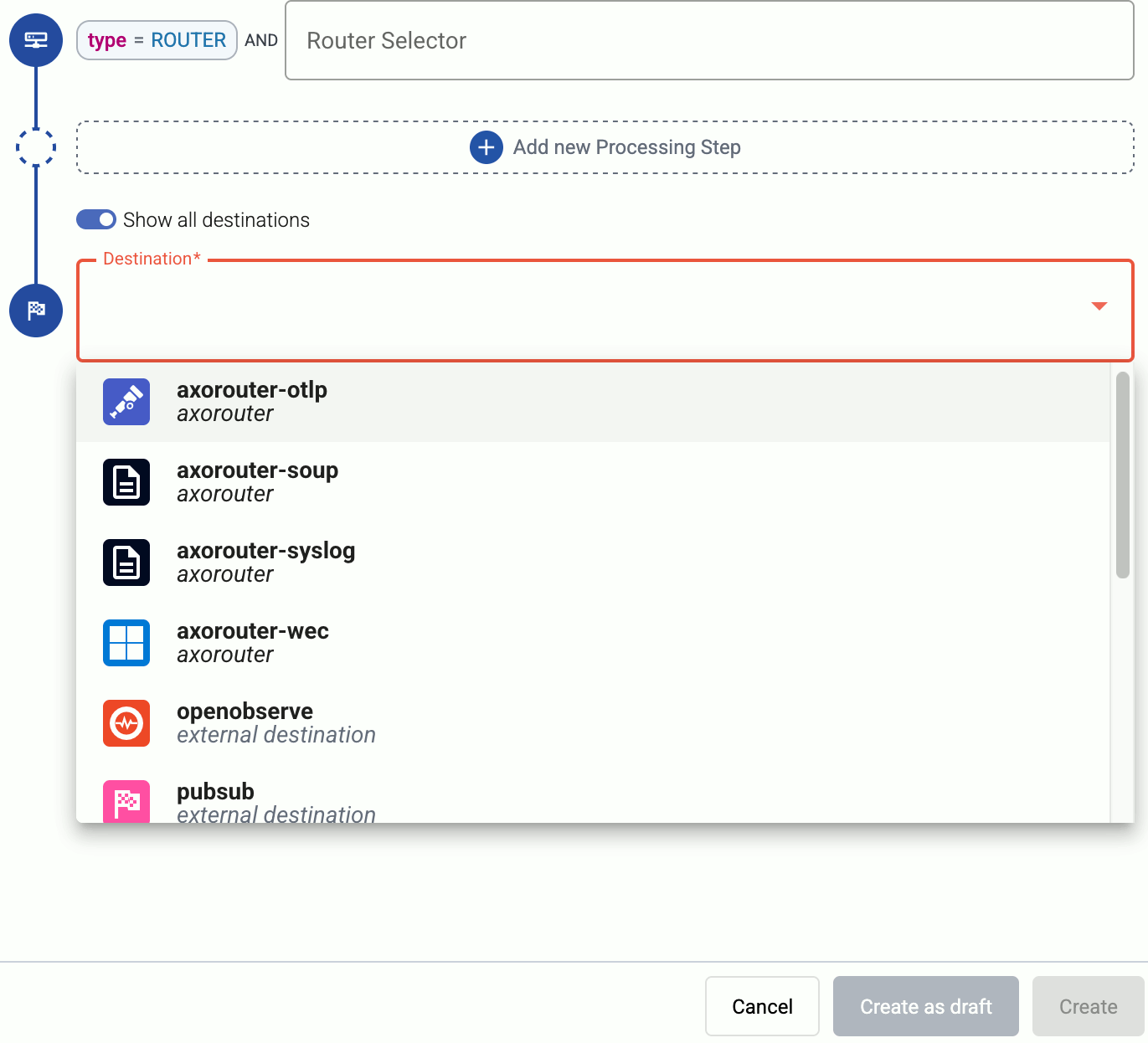

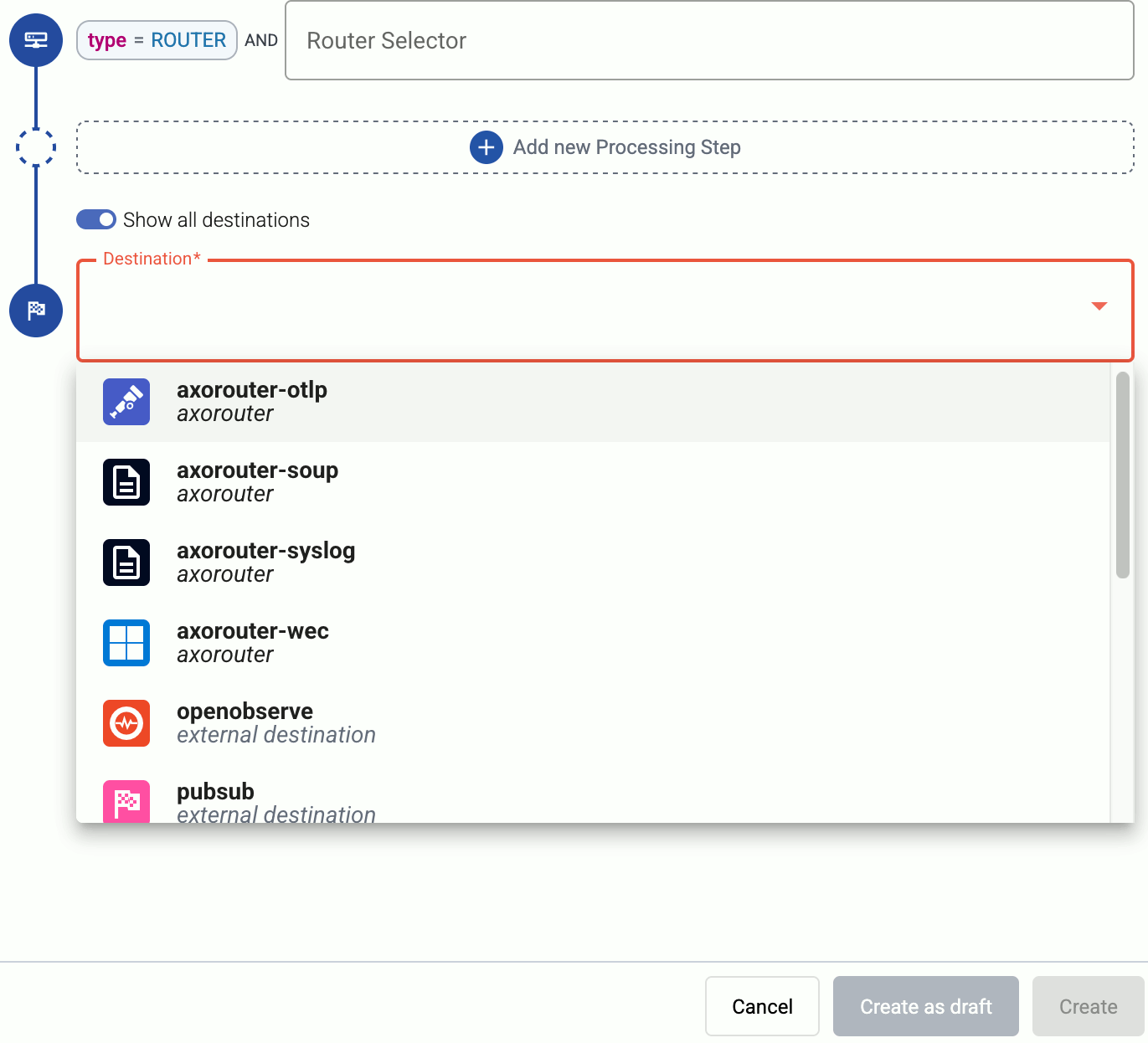

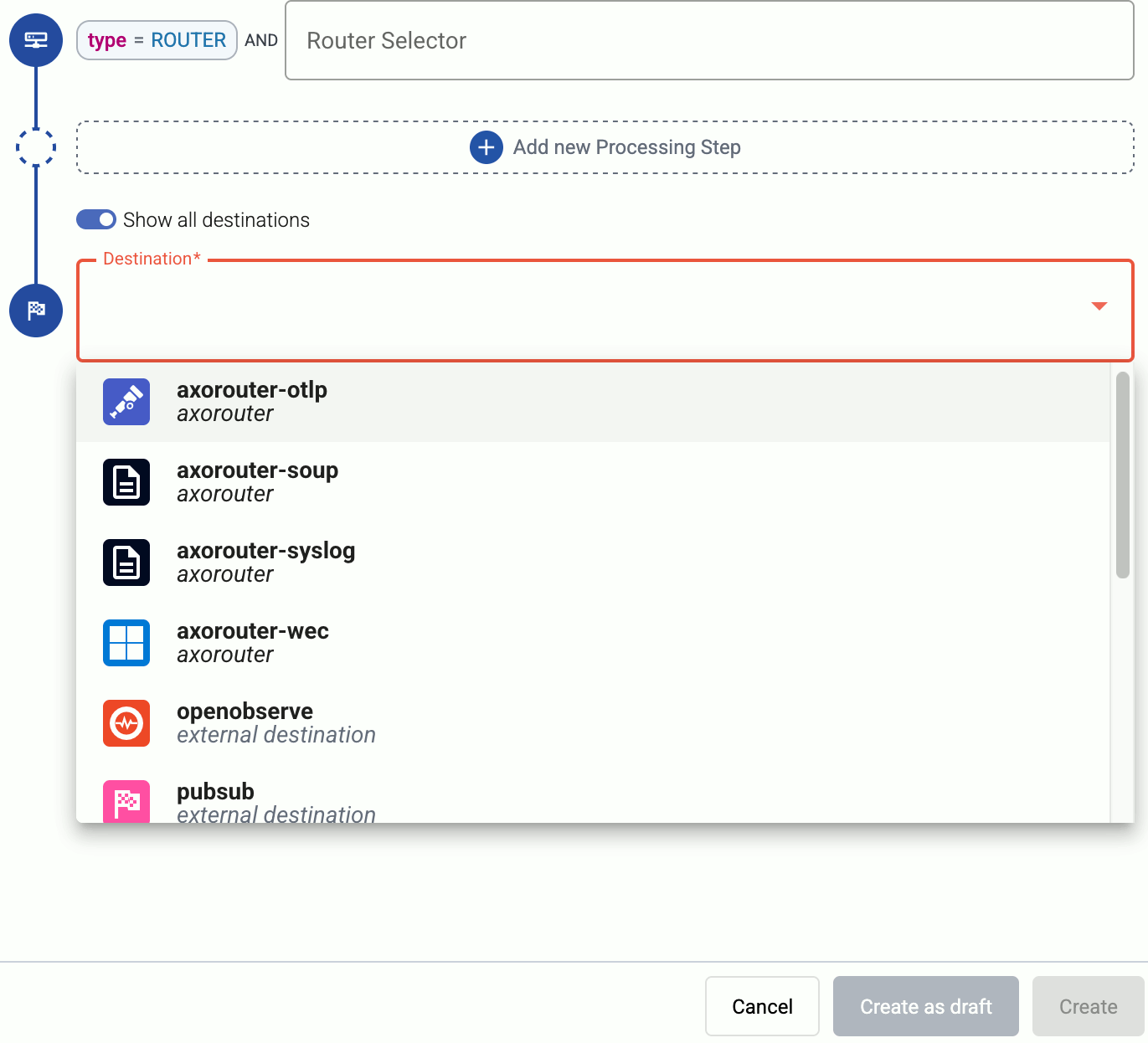

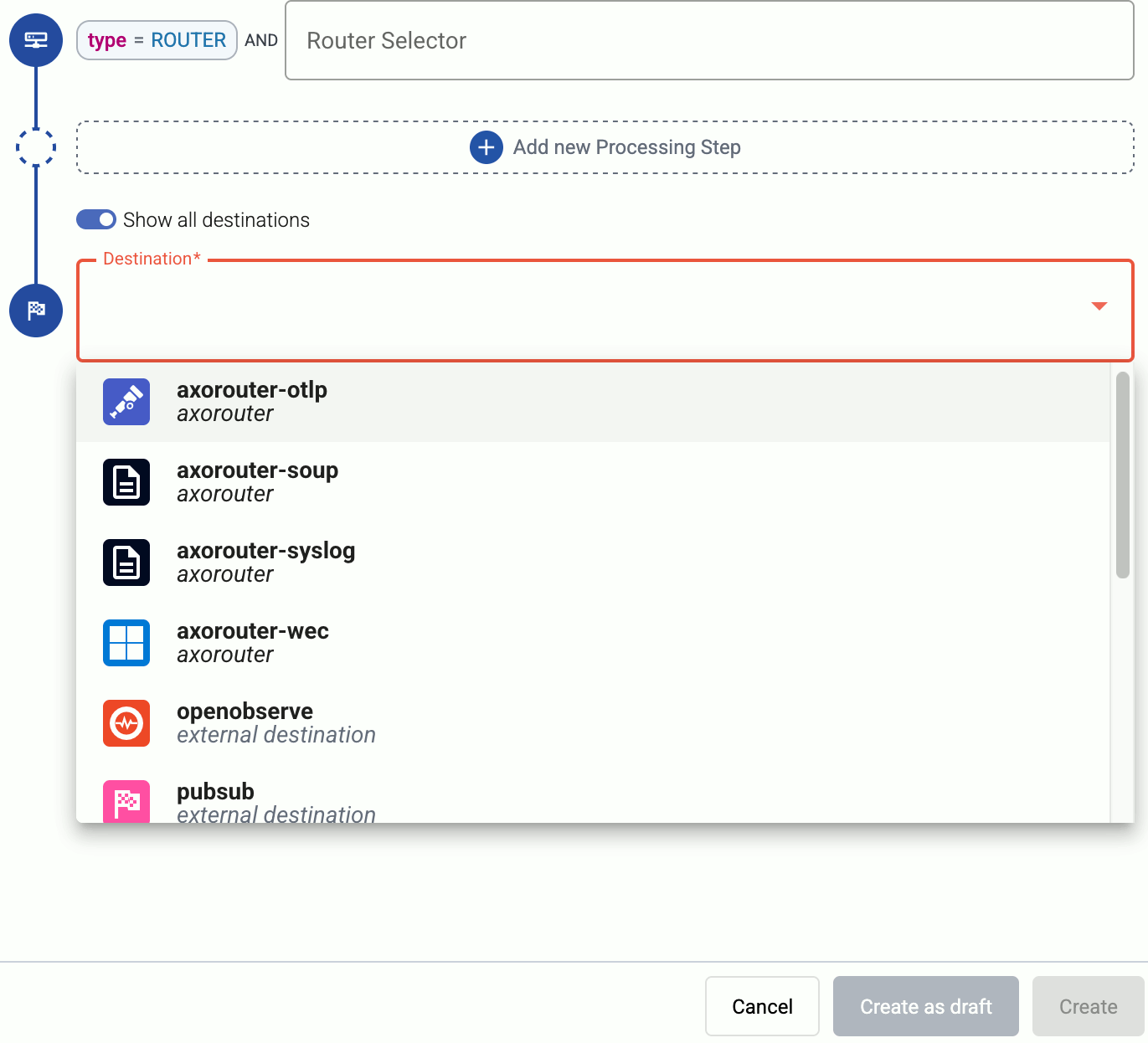

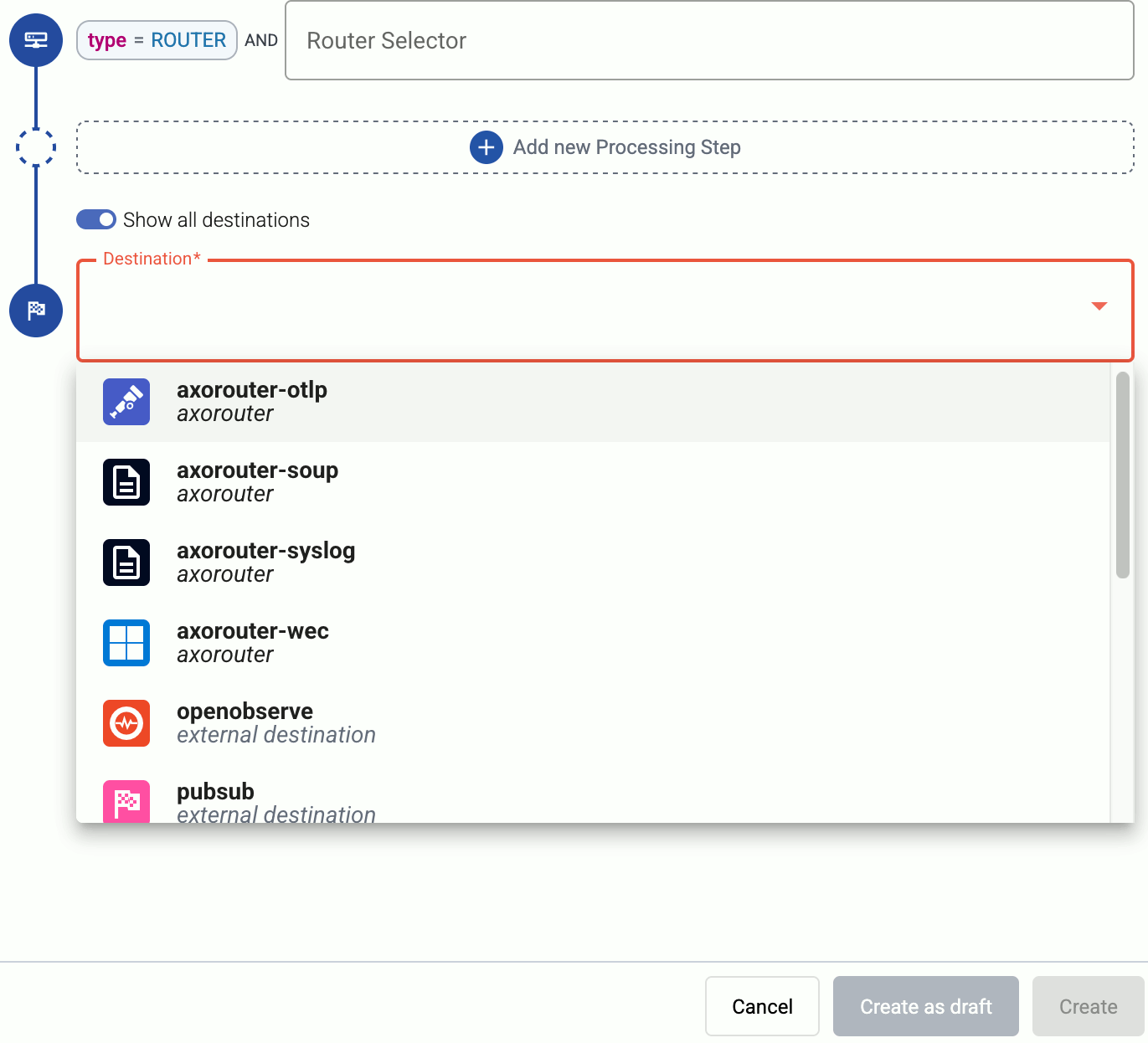

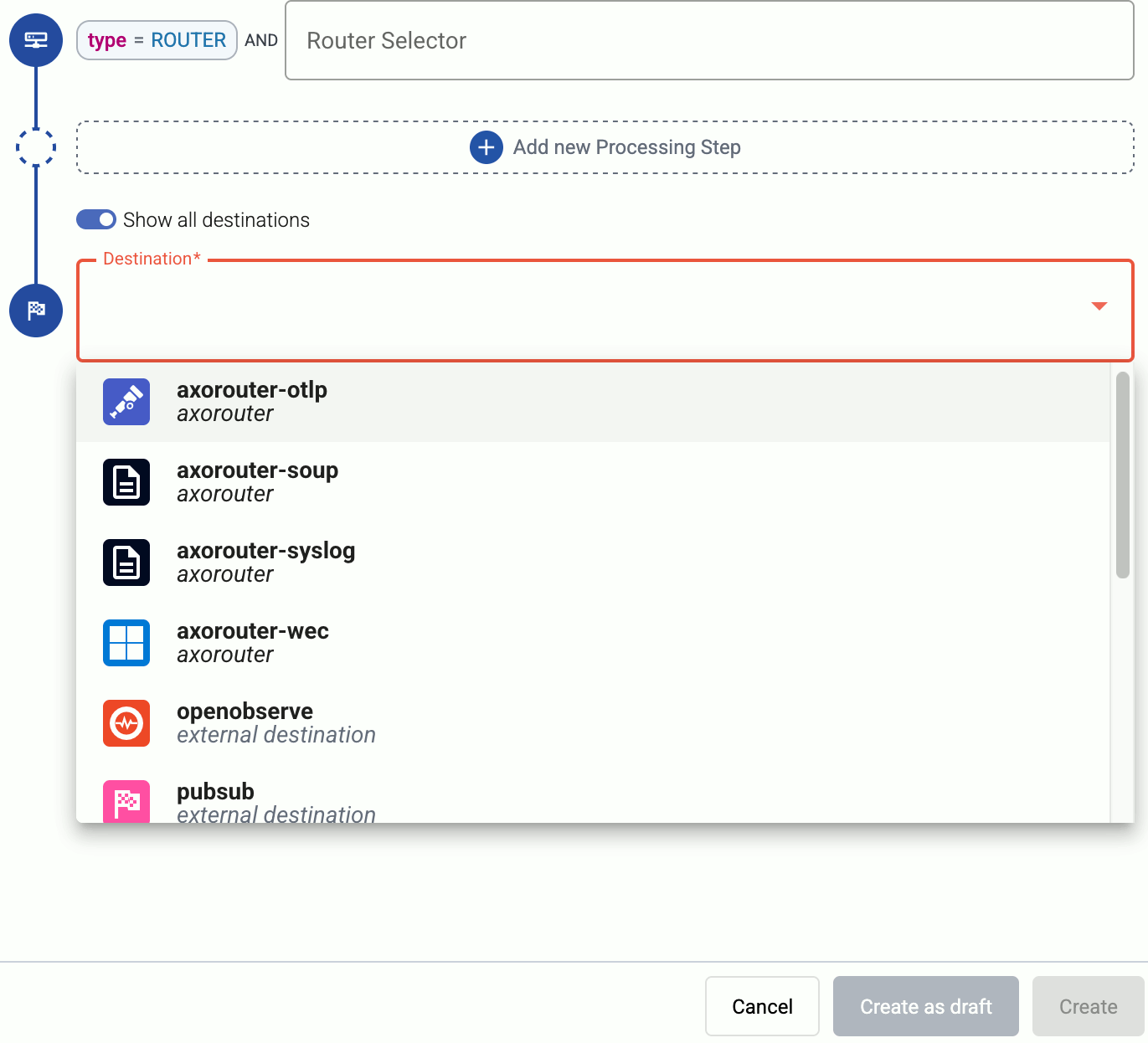

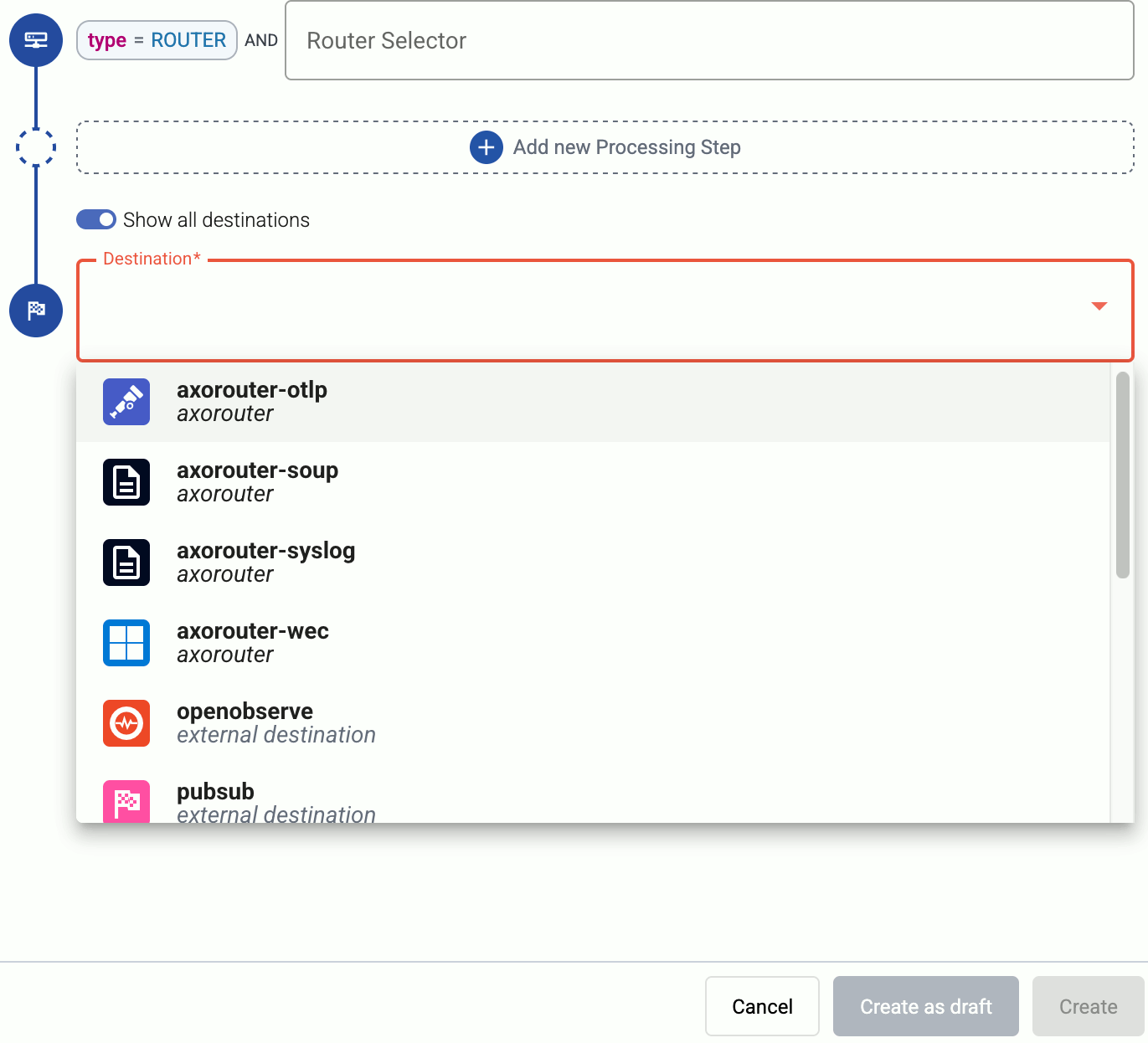

Select the Destination where you want to send your data. If you don’t have any destination configured, see Destinations.

By default, you can select only external destinations. If you want to send data to another AxoRouter, enable the Show all destinations option, and select the connector of the AxoRouter where you want to send the data.

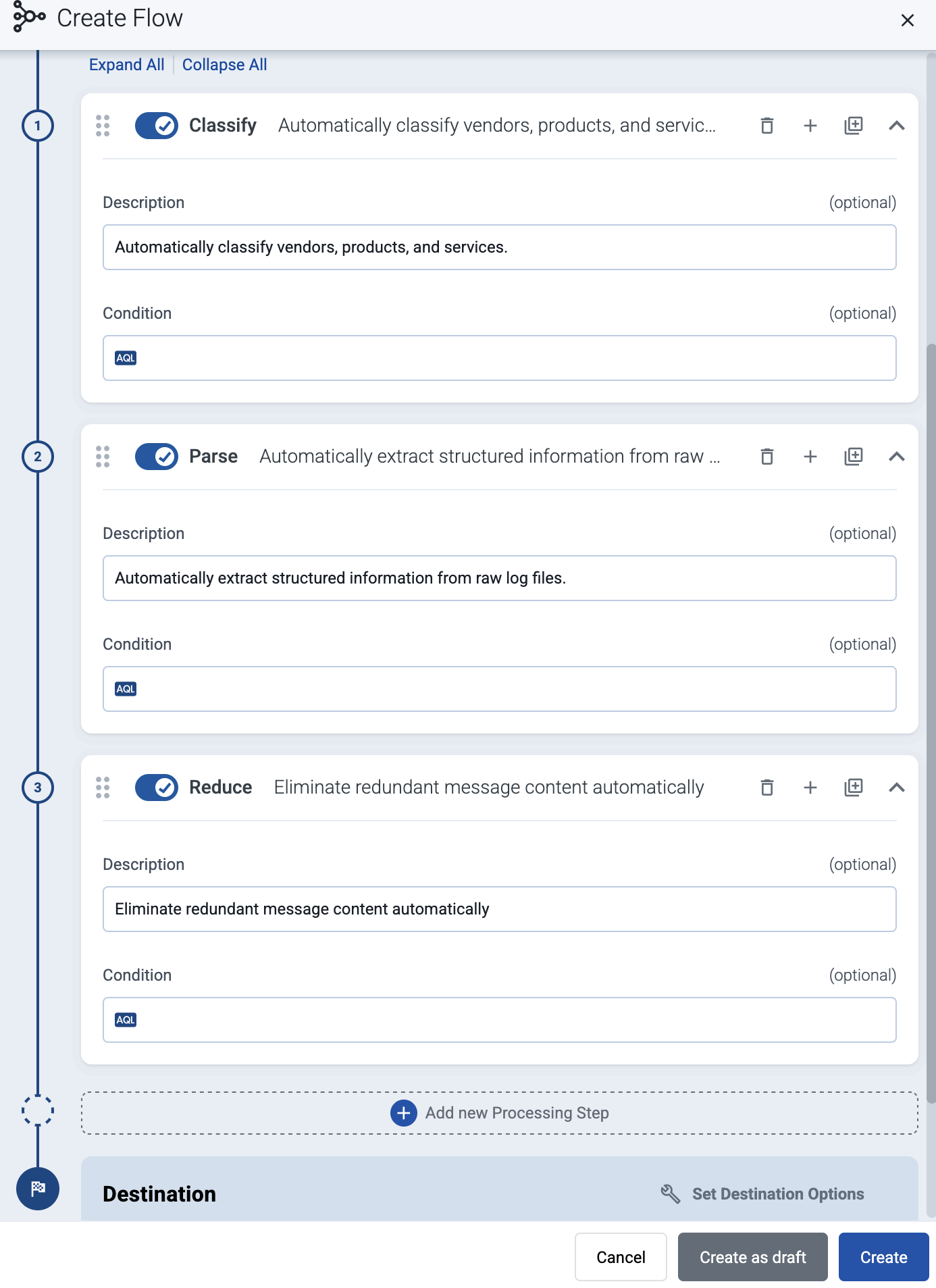

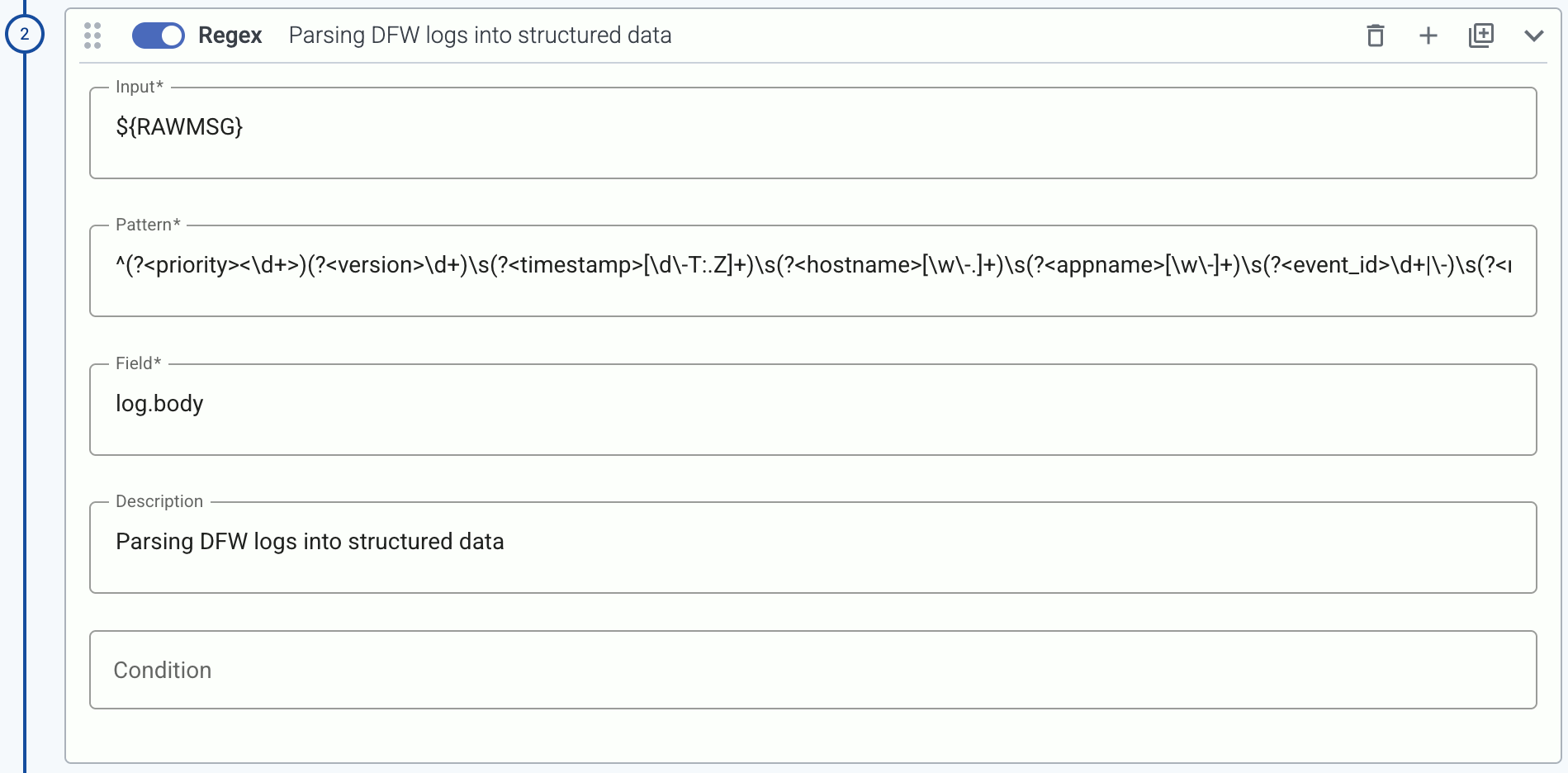

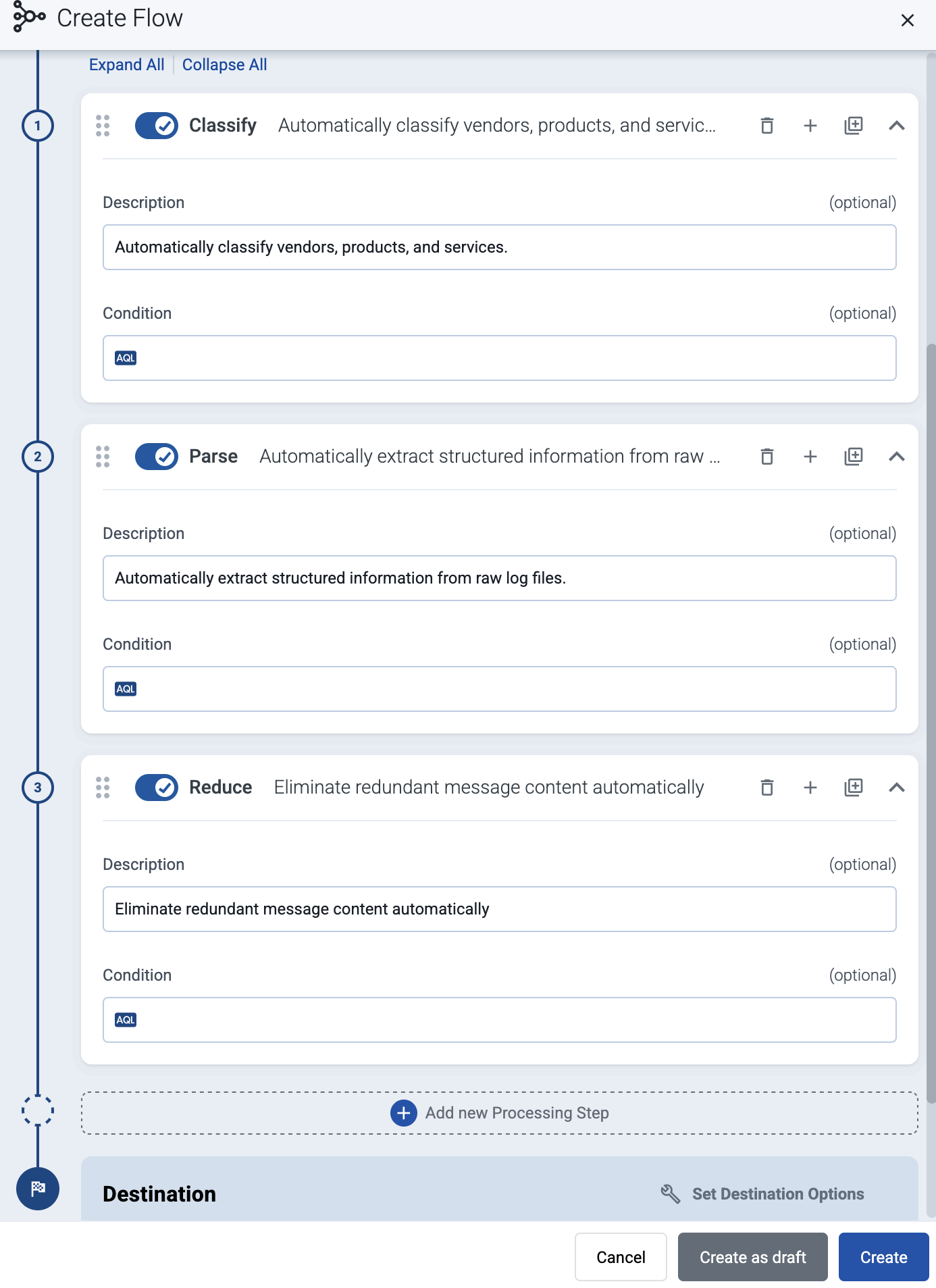

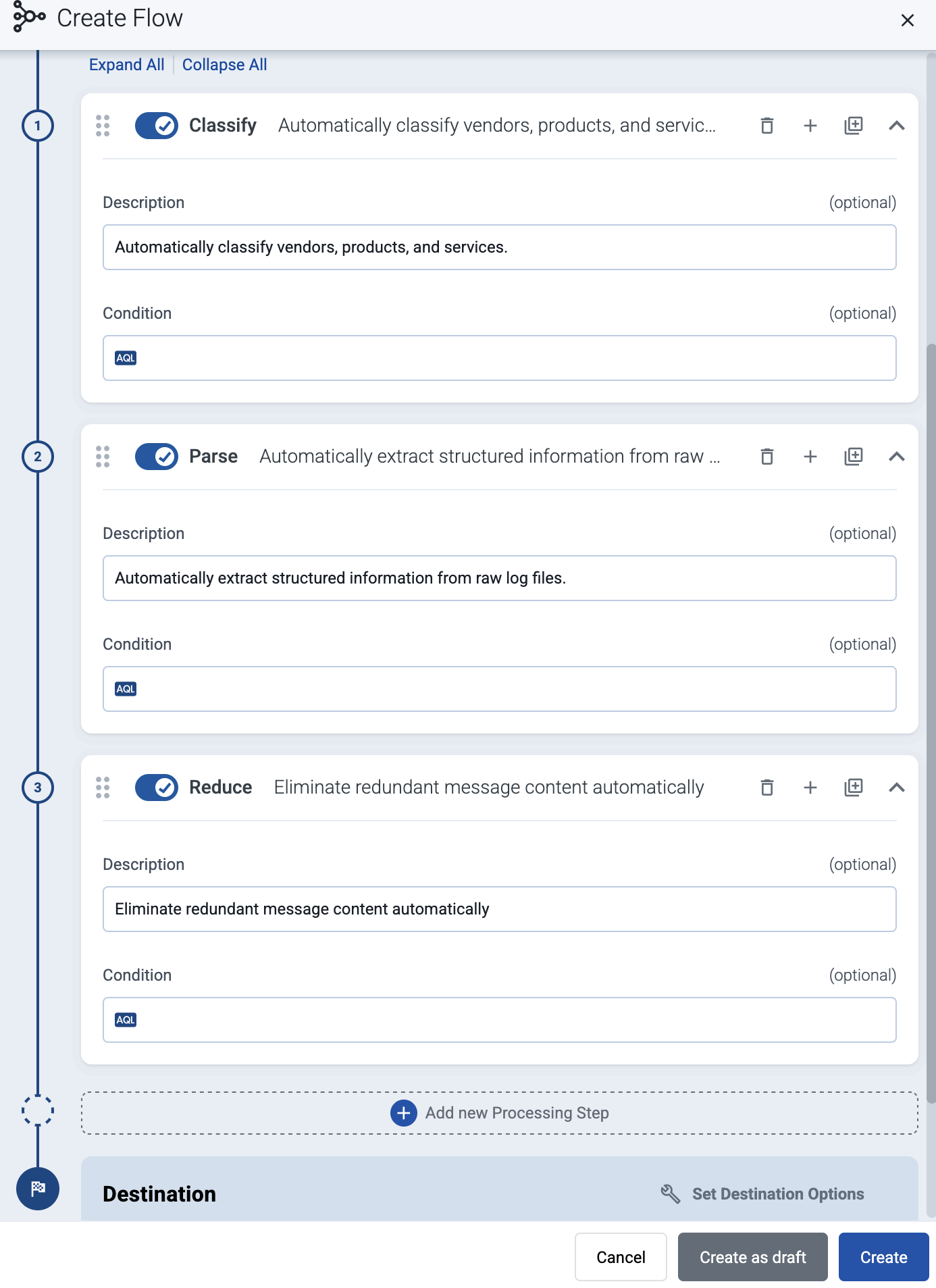

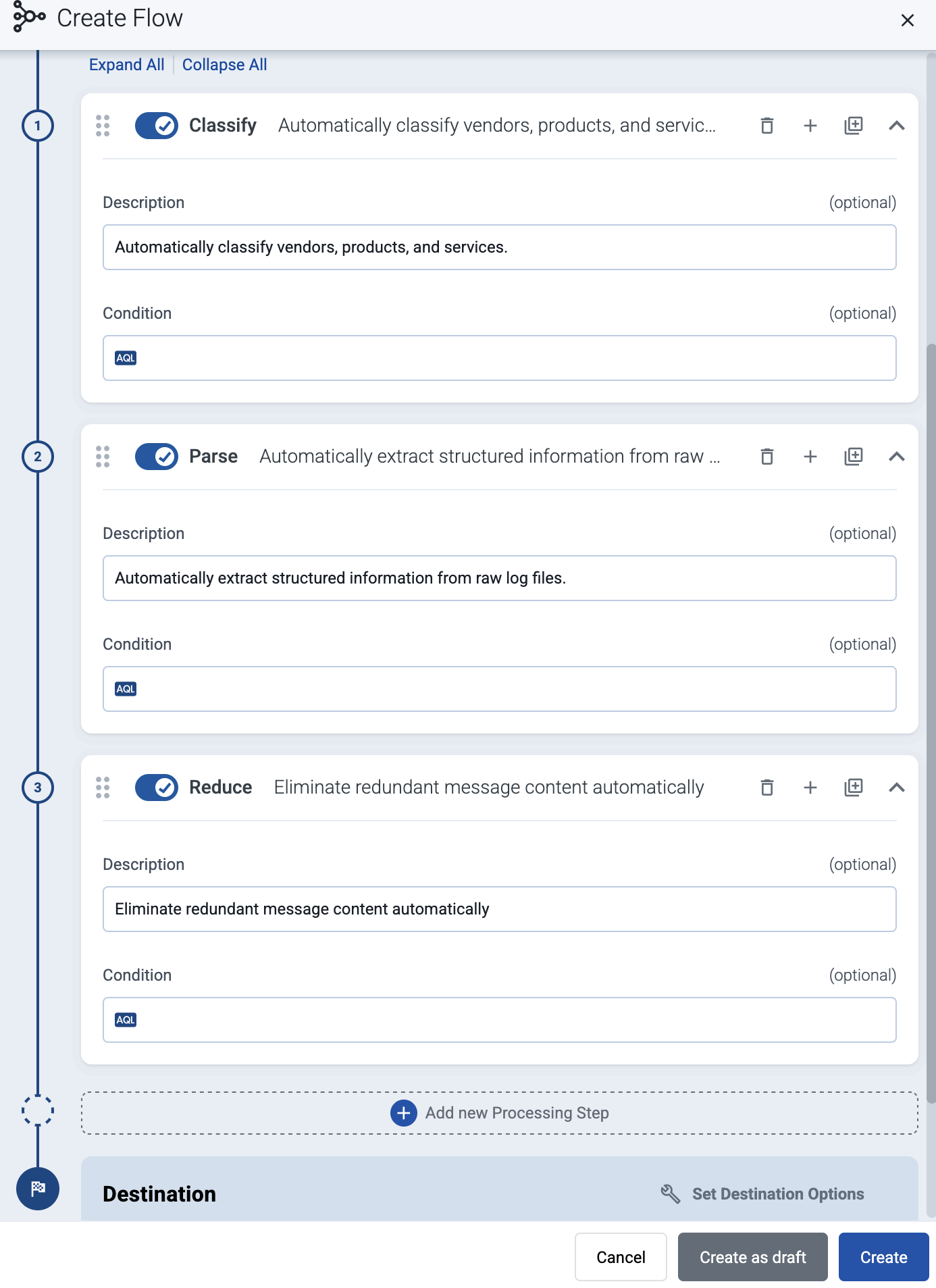

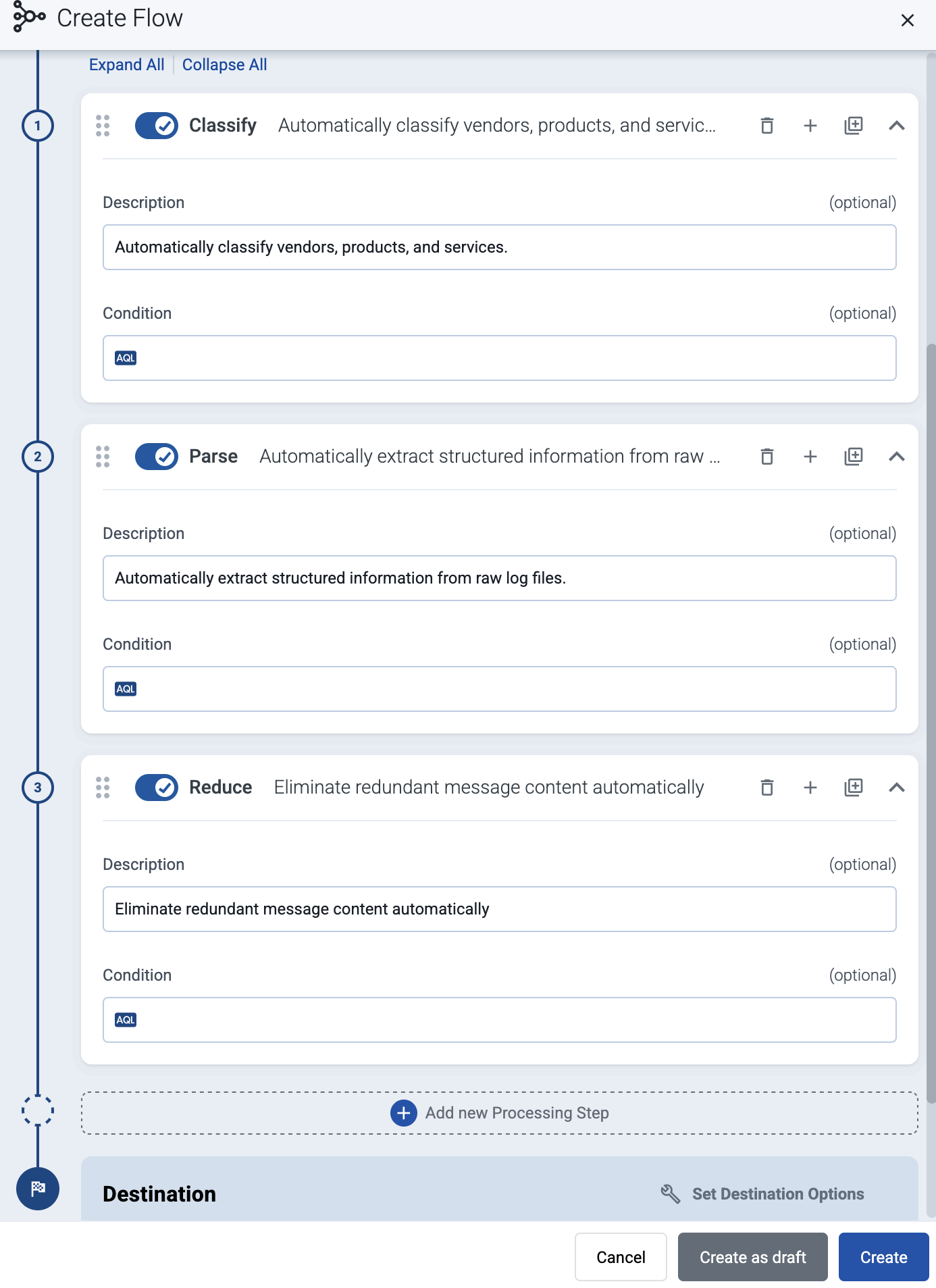

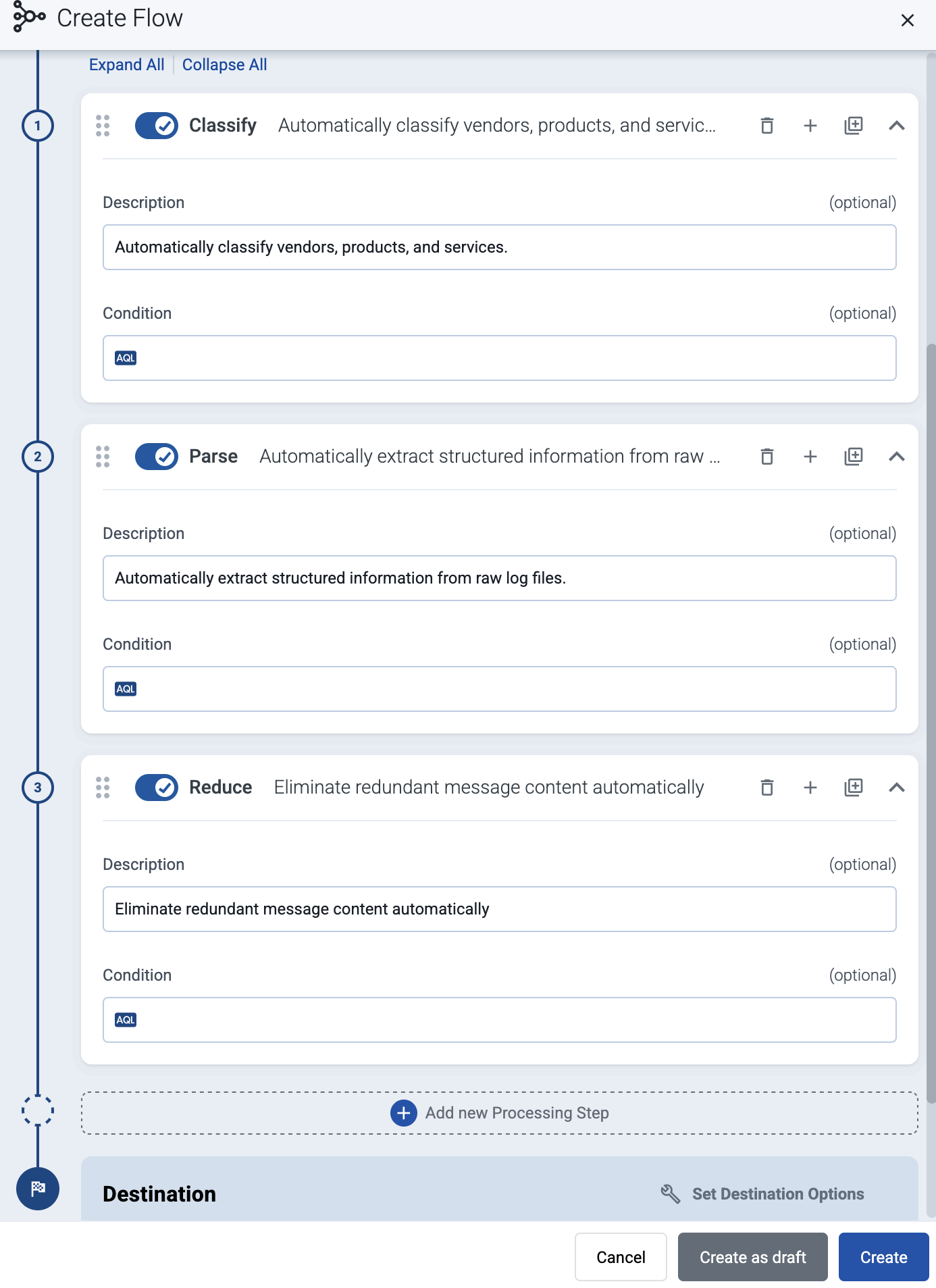

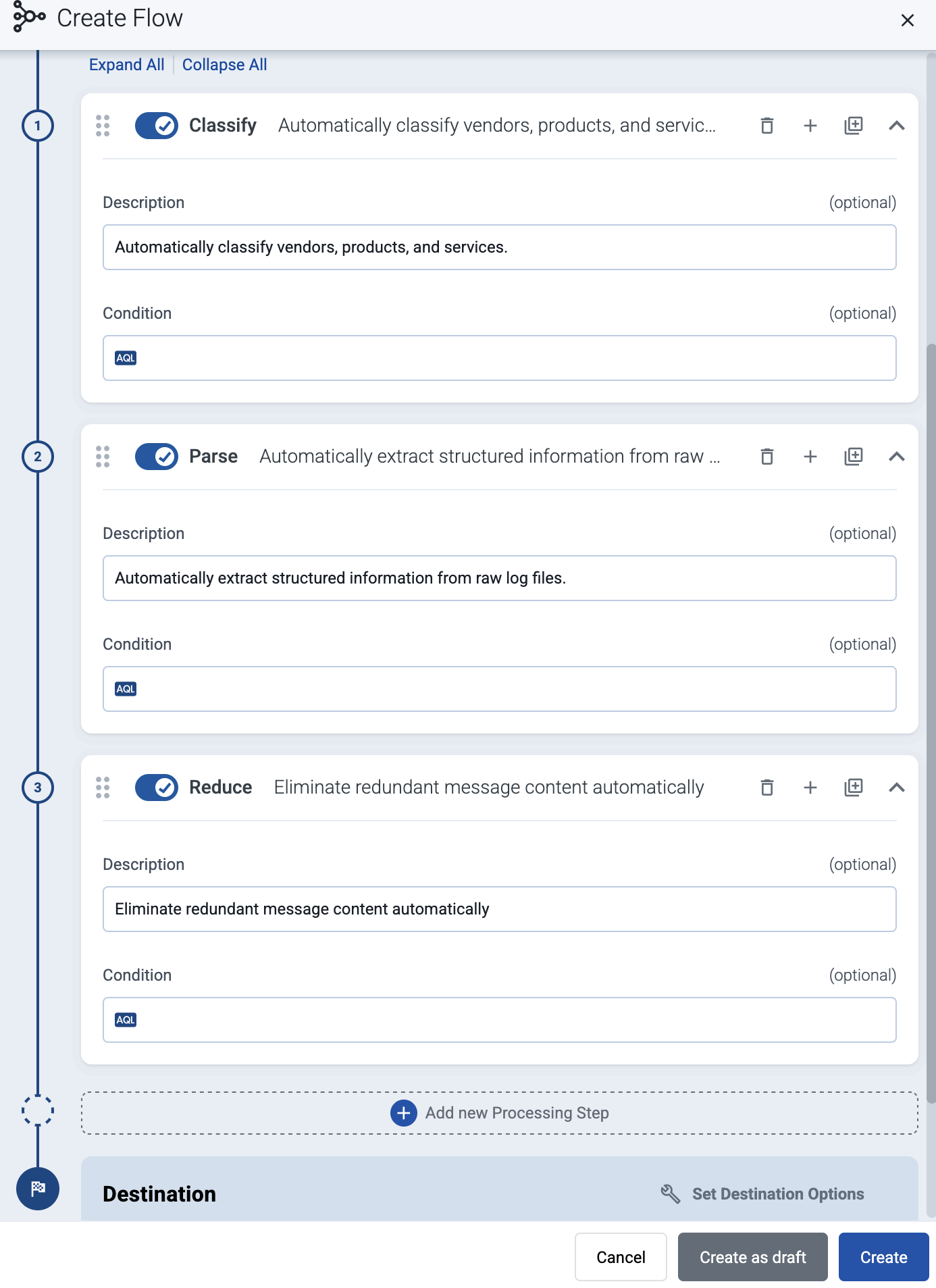

-

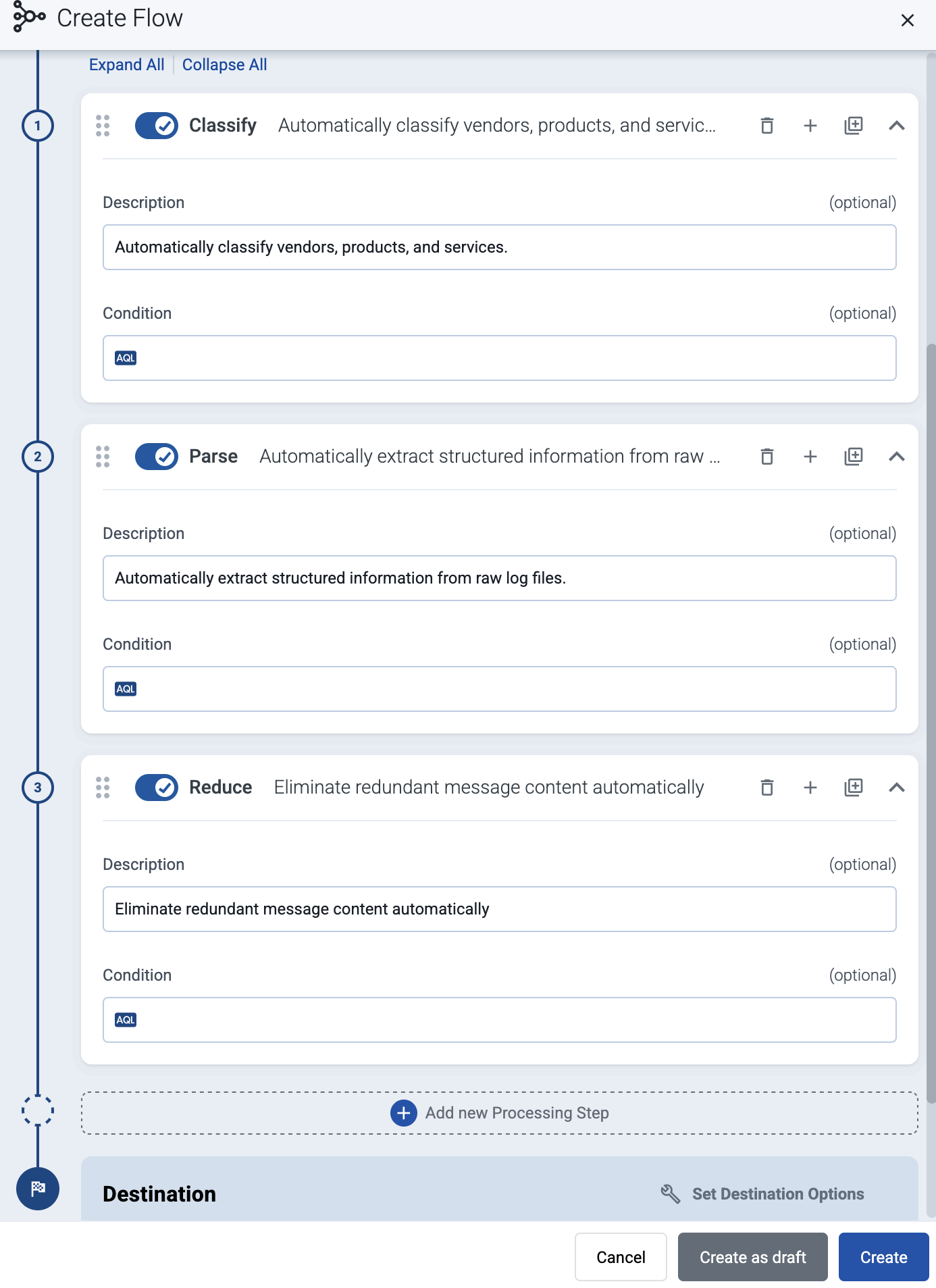

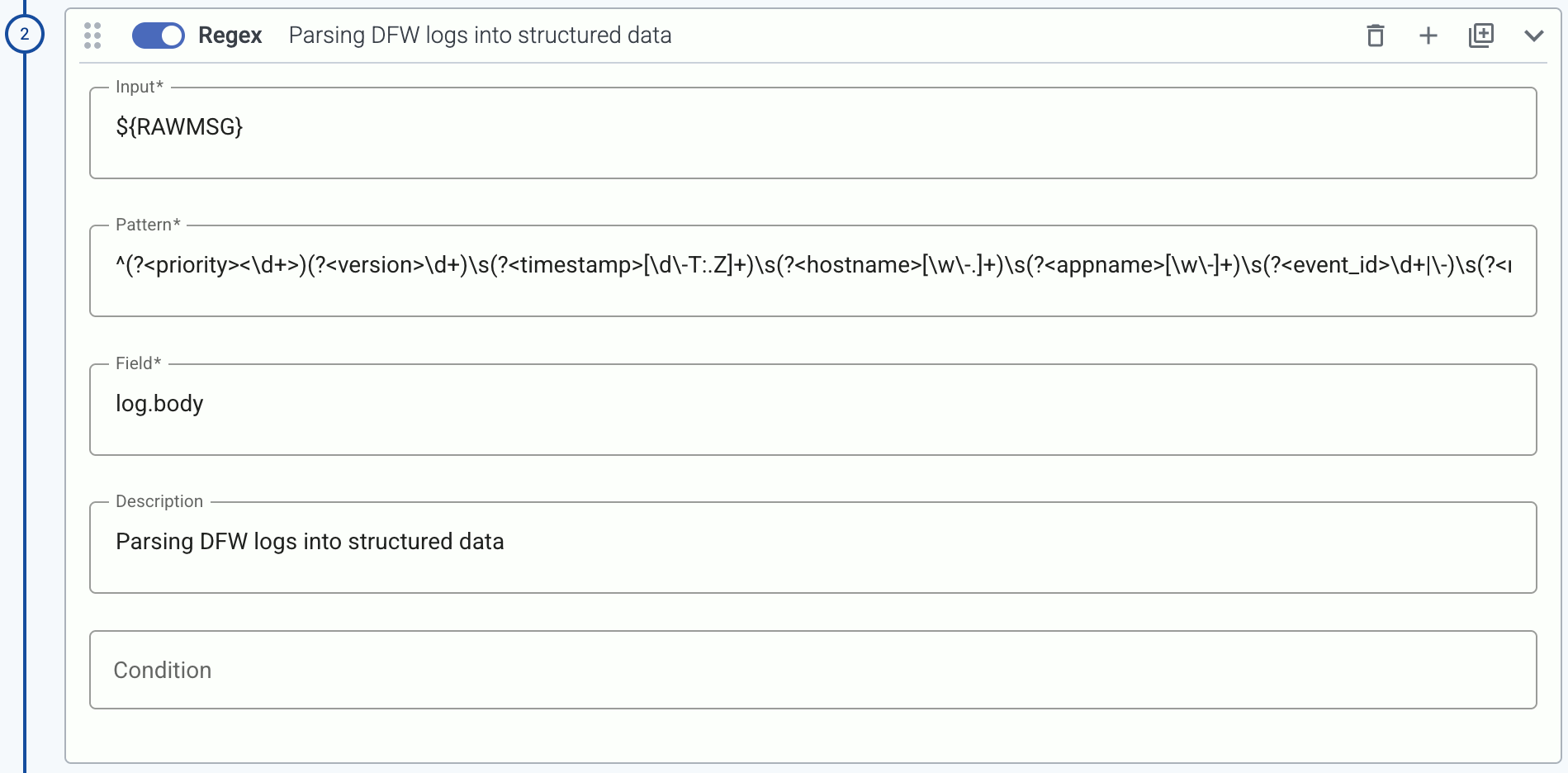

(Optional) To process the data transferred in the flow, select Add New Processing Step. For details, see Processing steps. For example:

- Add a Reduce step to automatically remove redundant and empty fields from your data.

- To select which messages are processed by the flow, add a Select Messages step, and enter a filter into the Query field. For example, to select only the messages received from Fortinet FortiGate firewalls, use the meta.vendor = fortinet + meta.product = fortigate query.

- Save the processing steps.

-

Select Create.

-

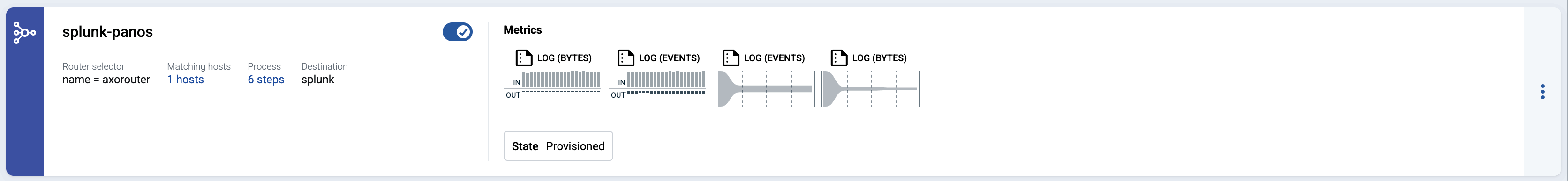

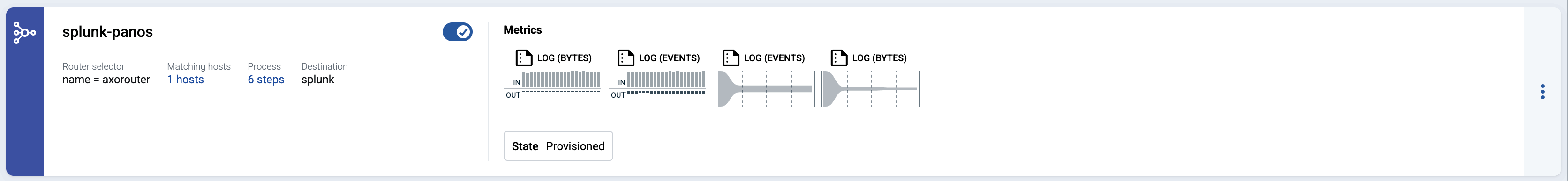

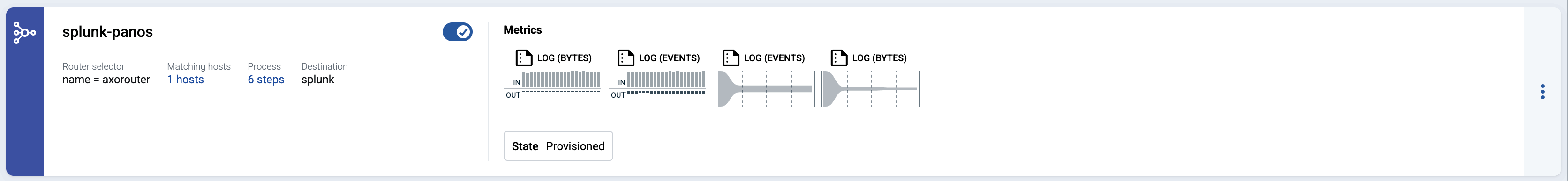

The new flow appears in the Flows list.
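The example Select Messages query combines two conditions on message metadata. As a conceptual sketch only, the following Python code assumes that `+` joins conditions that must all match (logical AND) and that each condition is a `field = value` equality test; Axoflow's actual query language may support more and behave differently. Messages are modeled as flat dictionaries keyed by label name, which is also an assumption.

```python
def parse_query(query: str) -> list[tuple[str, str]]:
    """Split 'a = x + b = y' into [('a', 'x'), ('b', 'y')] (assumed syntax)."""
    conditions = []
    for clause in query.split("+"):
        field, sep, value = clause.partition("=")
        if not sep:
            raise ValueError(f"expected 'field = value', got {clause.strip()!r}")
        conditions.append((field.strip(), value.strip()))
    return conditions


def matches(labels: dict[str, str], conditions: list[tuple[str, str]]) -> bool:
    """Return True only if every condition holds (assumed AND semantics)."""
    return all(labels.get(field) == value for field, value in conditions)


query = parse_query("meta.vendor = fortinet + meta.product = fortigate")
messages = [
    {"meta.vendor": "fortinet", "meta.product": "fortigate"},
    {"meta.vendor": "fortinet", "meta.product": "fortiweb"},
    {"meta.vendor": "paloalto", "meta.product": "panos"},
]
selected = [m for m in messages if matches(m, query)]
print(selected)  # only the FortiGate message remains
```

Under these assumptions, a FortiWeb message from the same vendor is not selected, because both conditions must hold.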

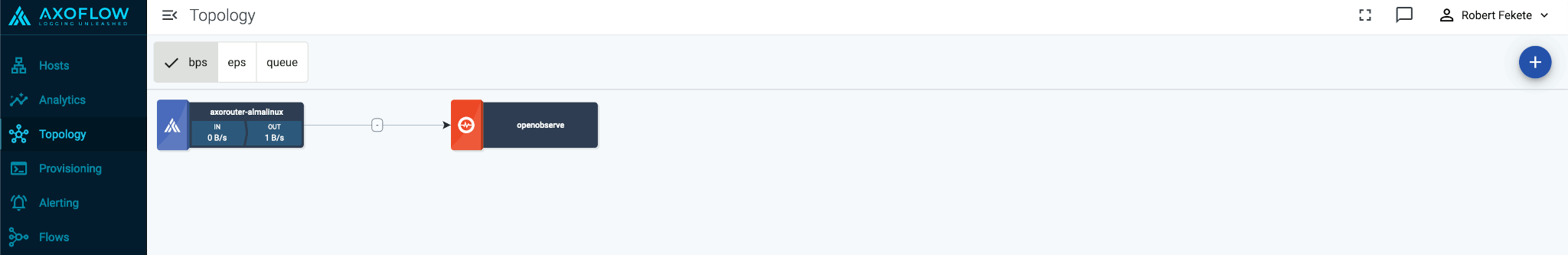

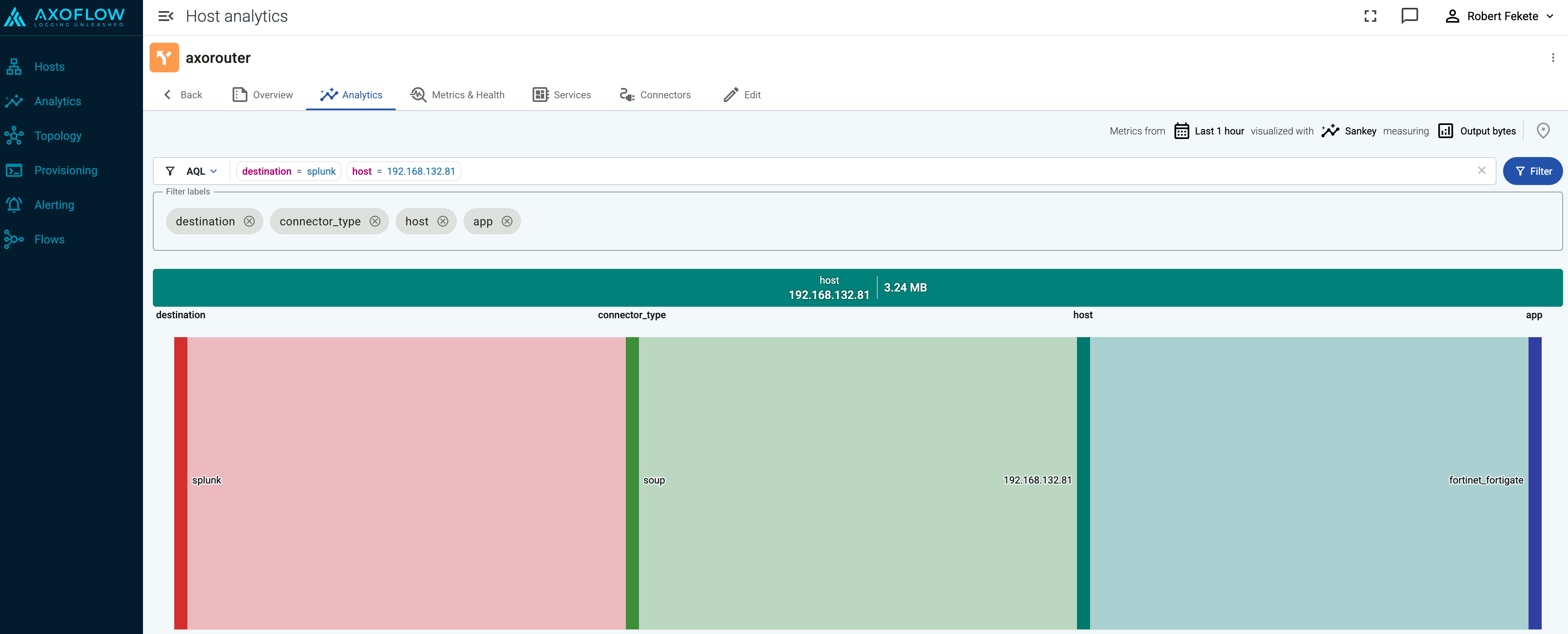

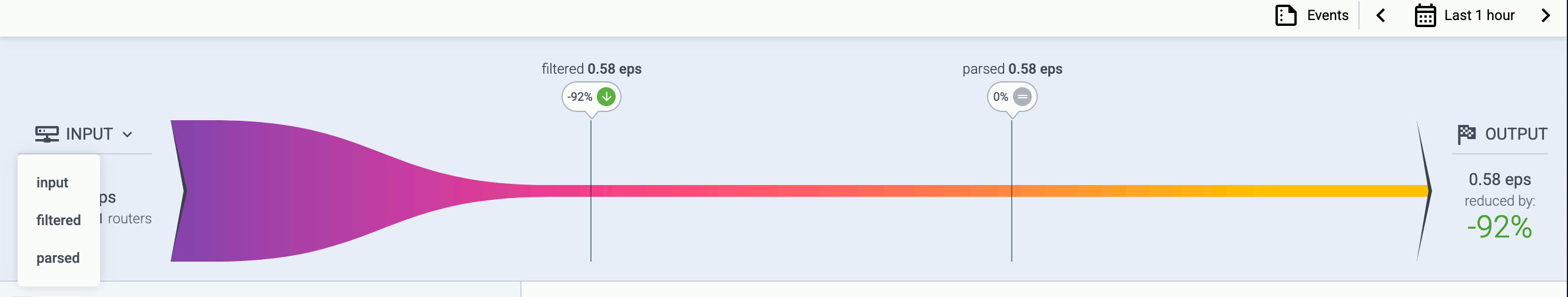

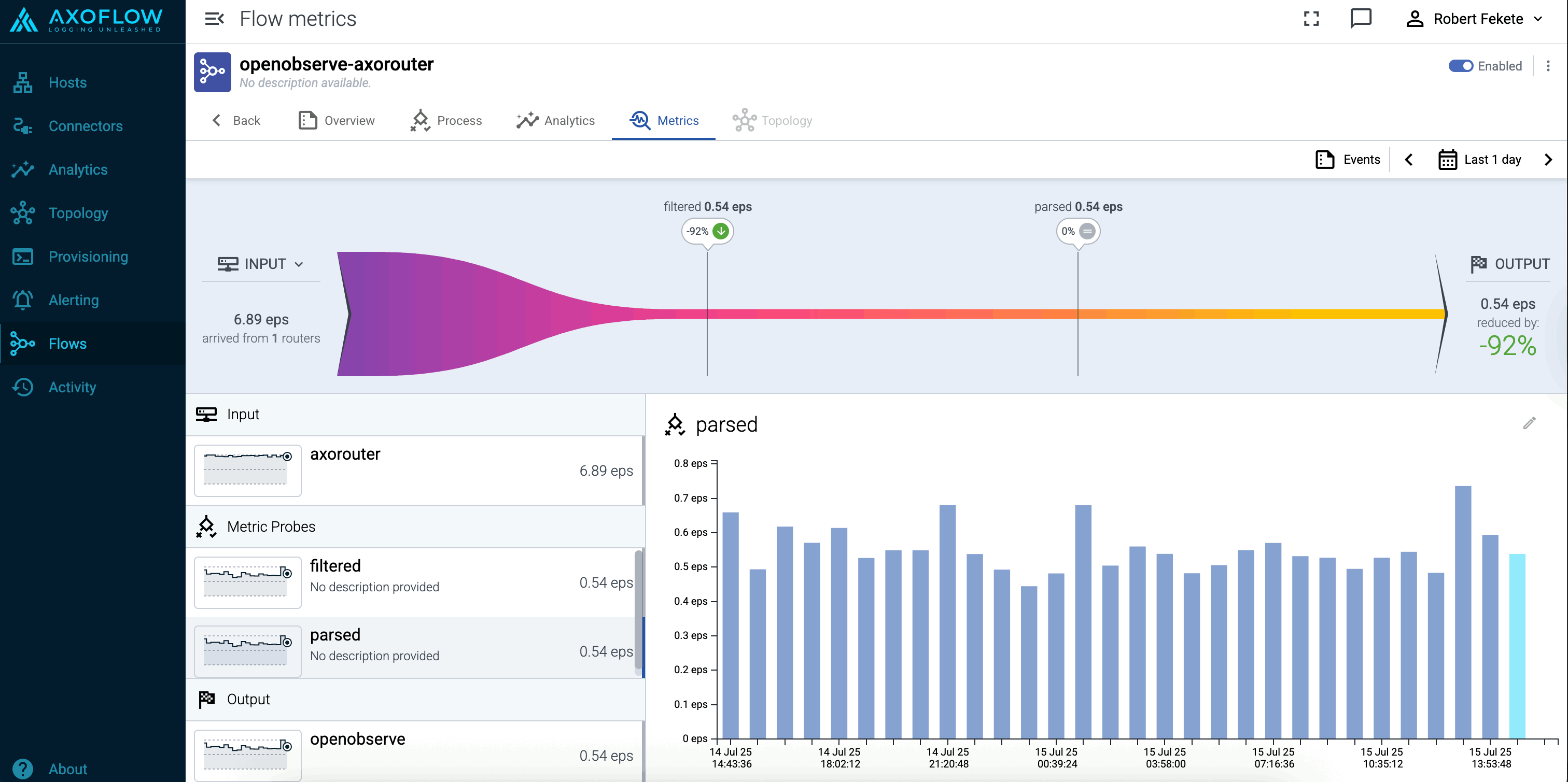

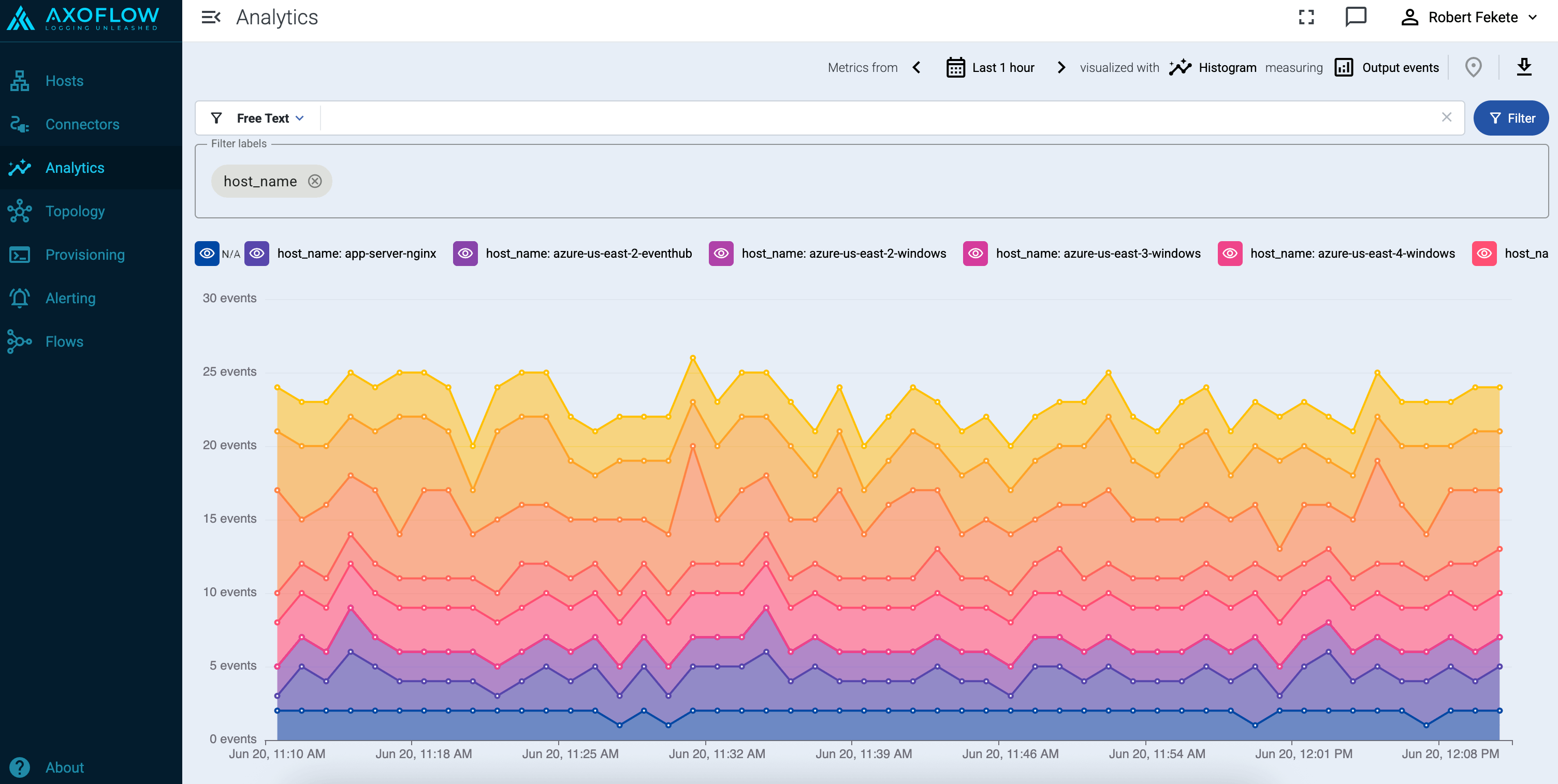

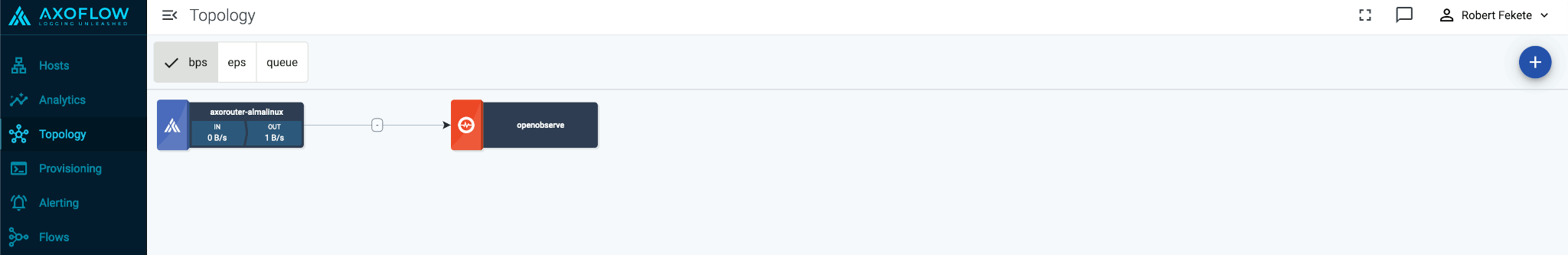

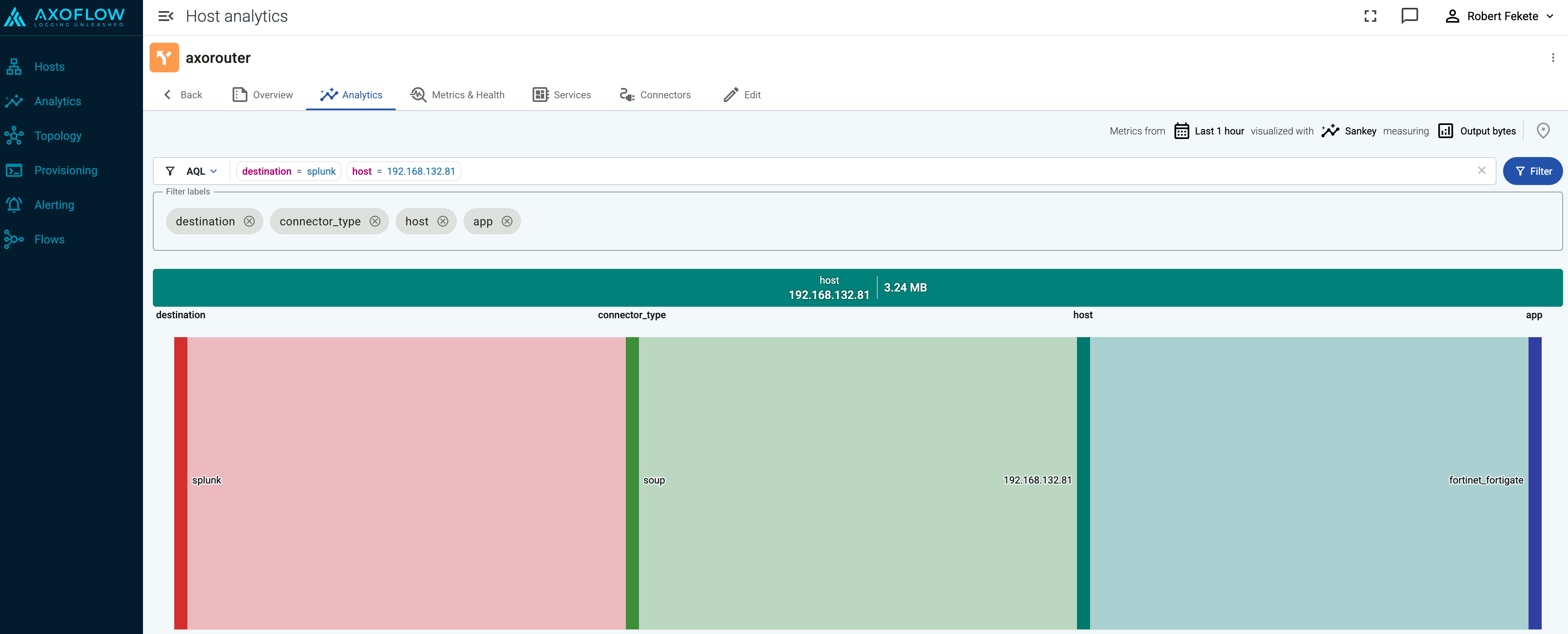

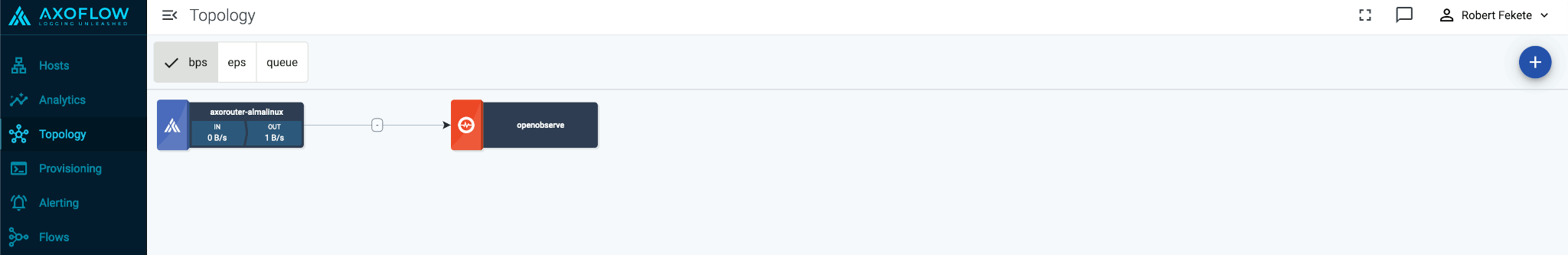

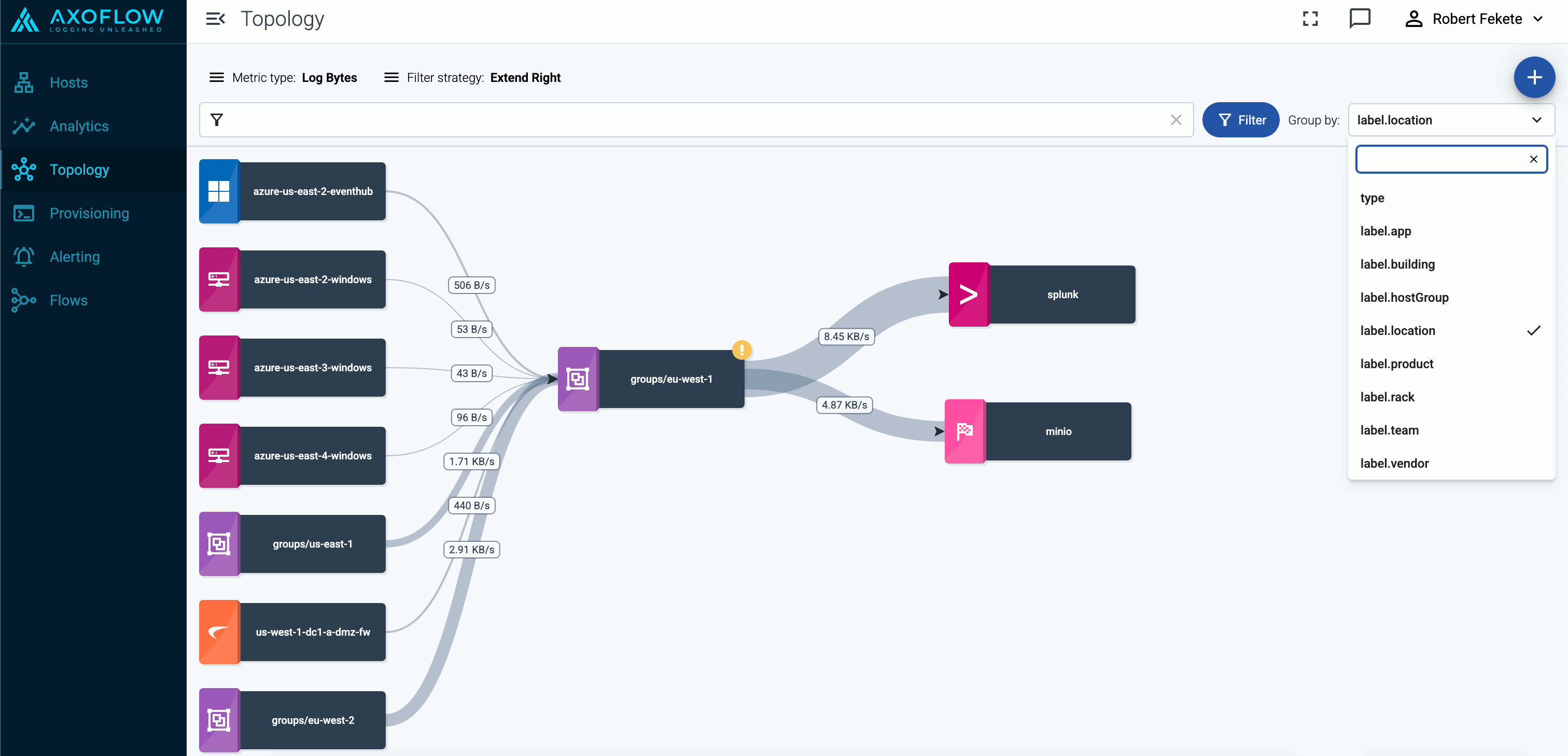

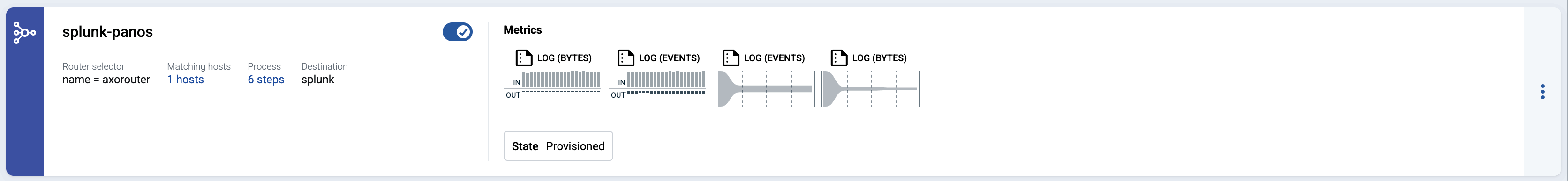

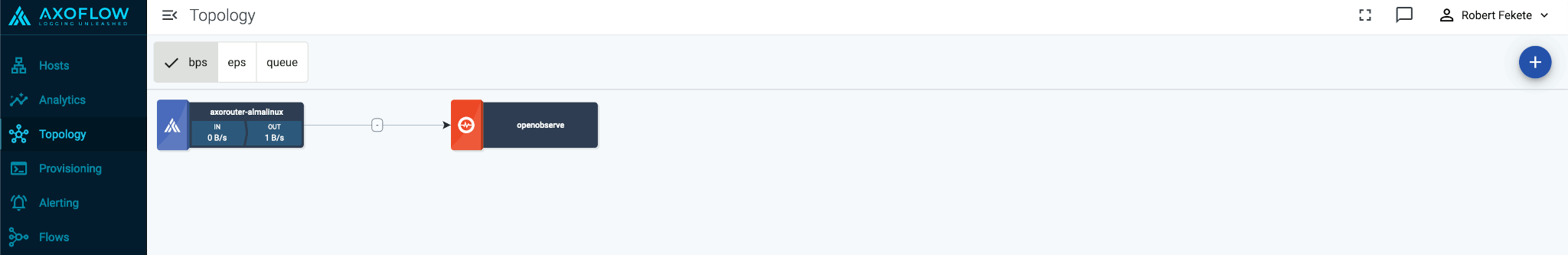

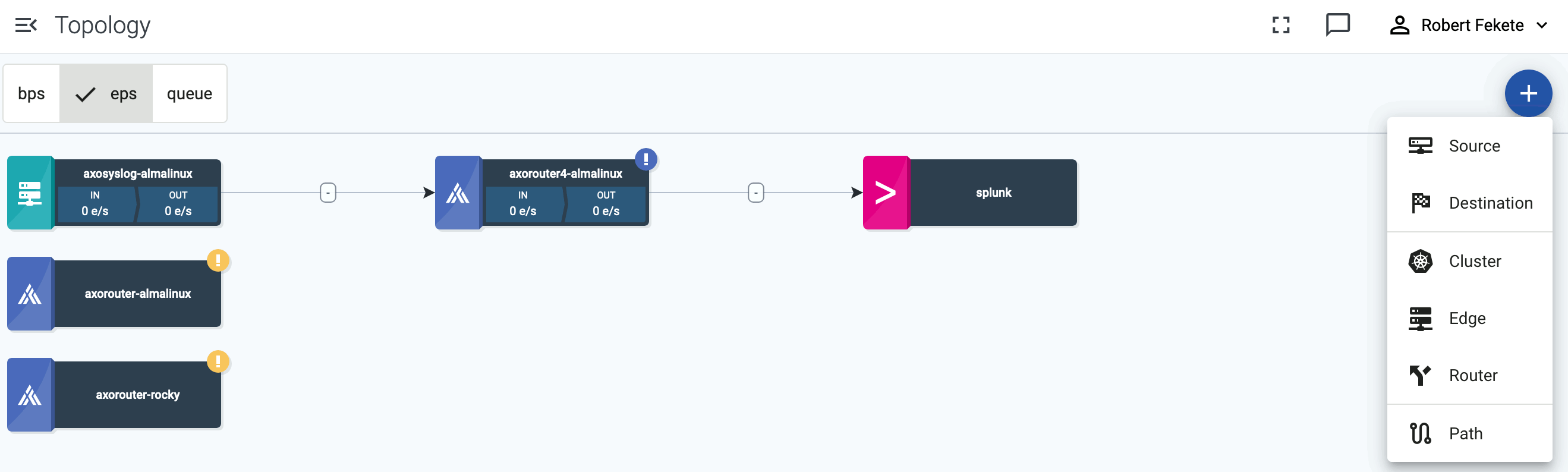

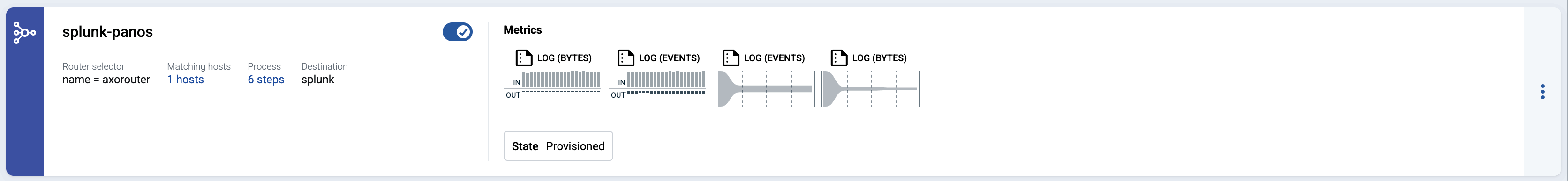

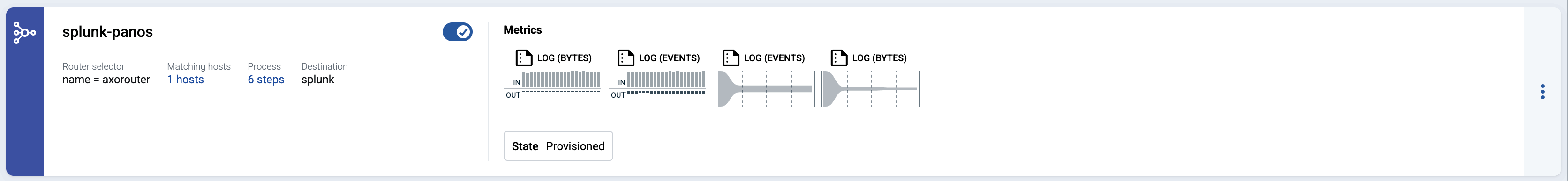

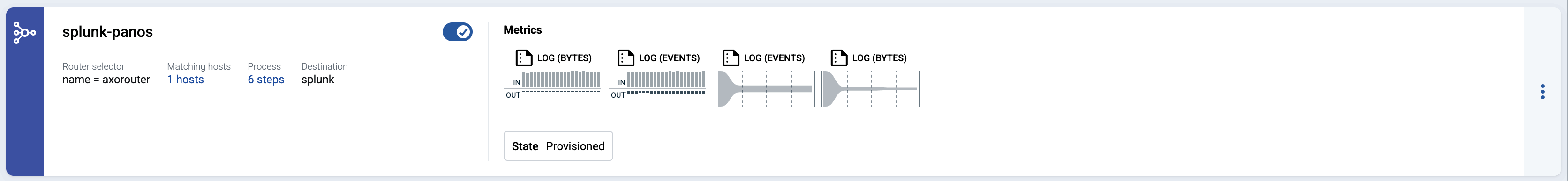

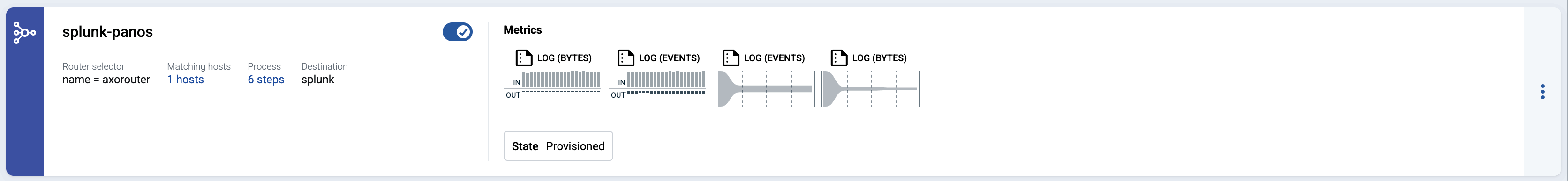

Check the metrics on the Topology page

Open the Topology page and verify that your AxoRouter instance is connected both to the source and the destination.

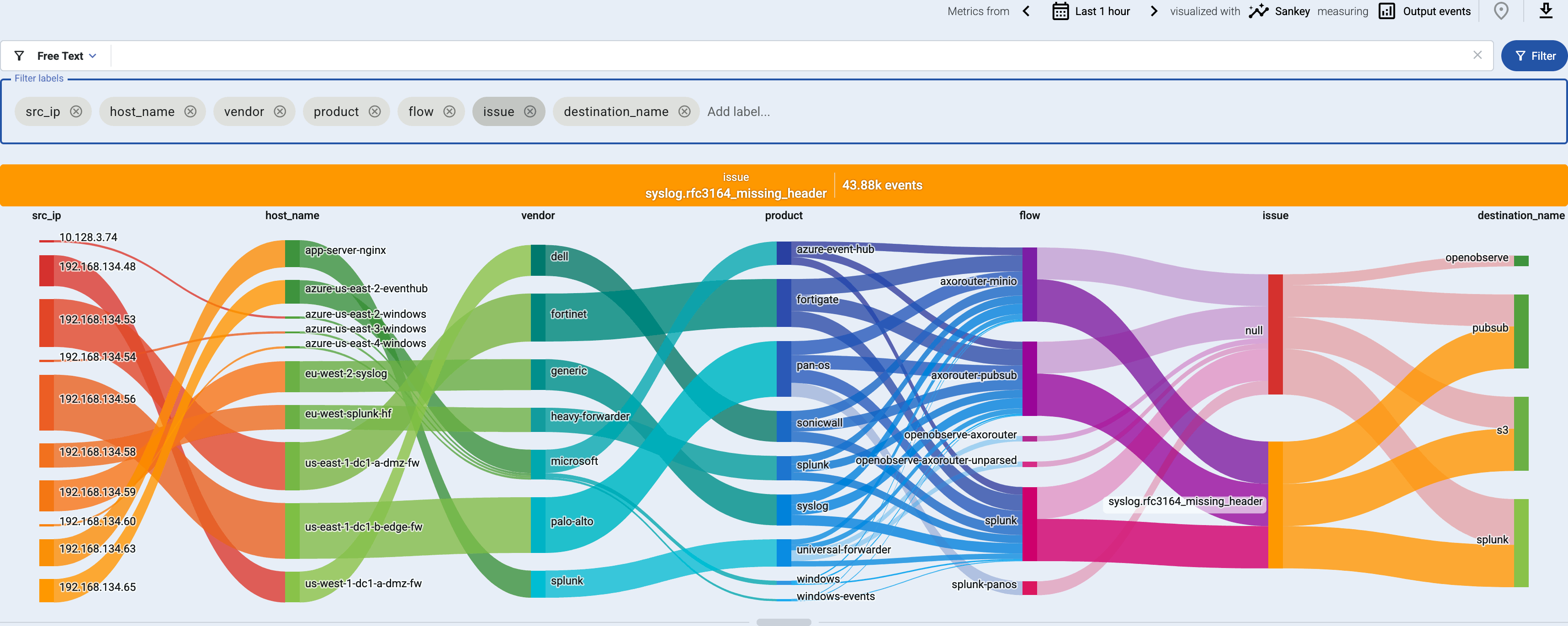

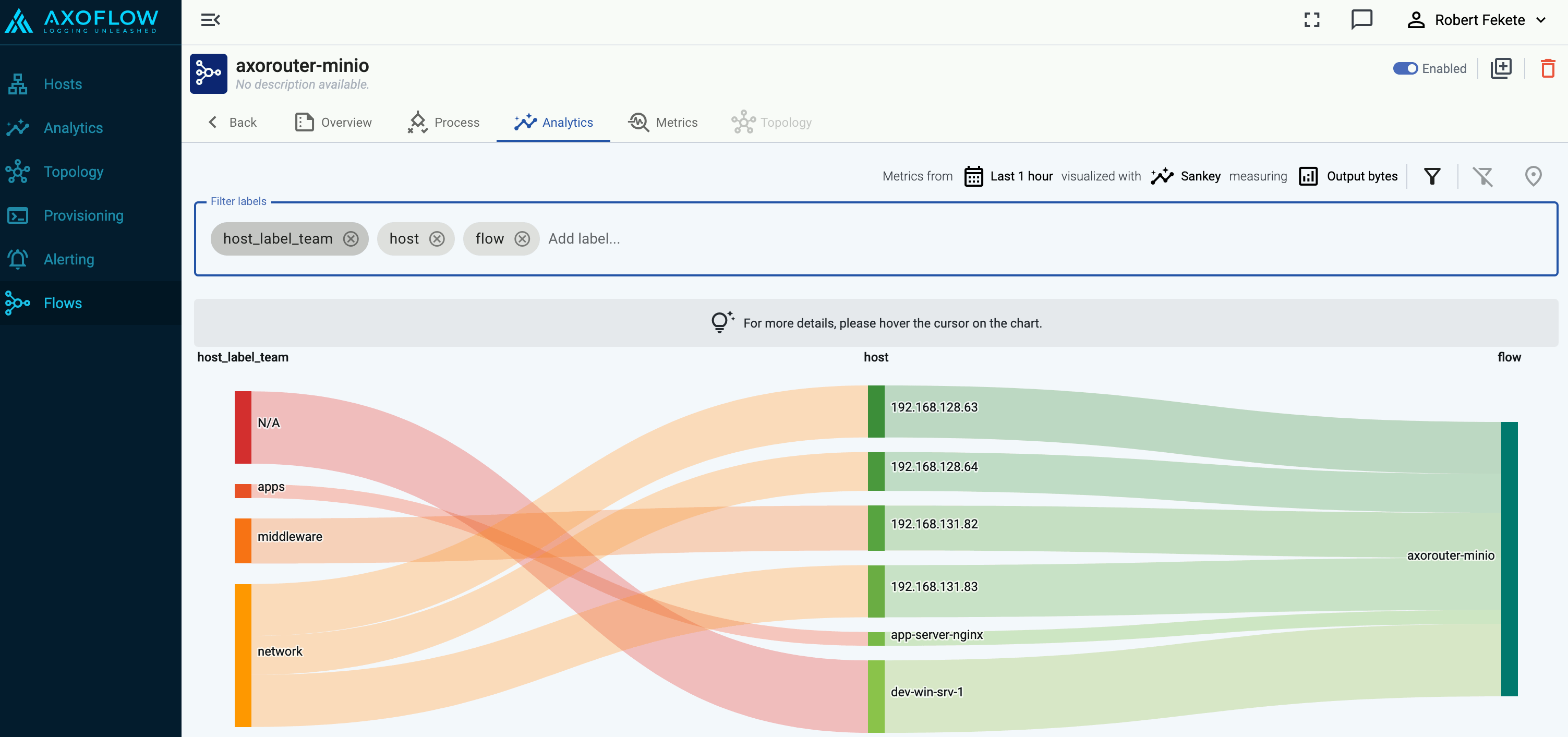

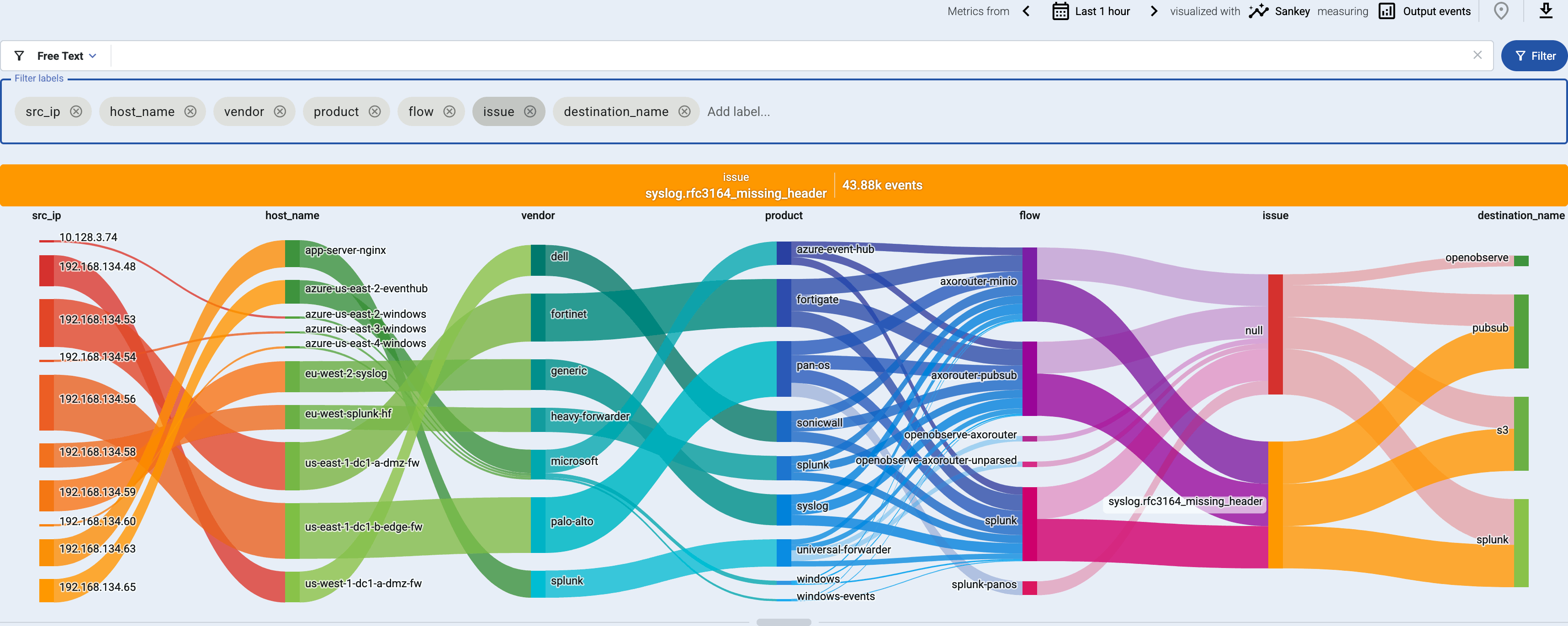

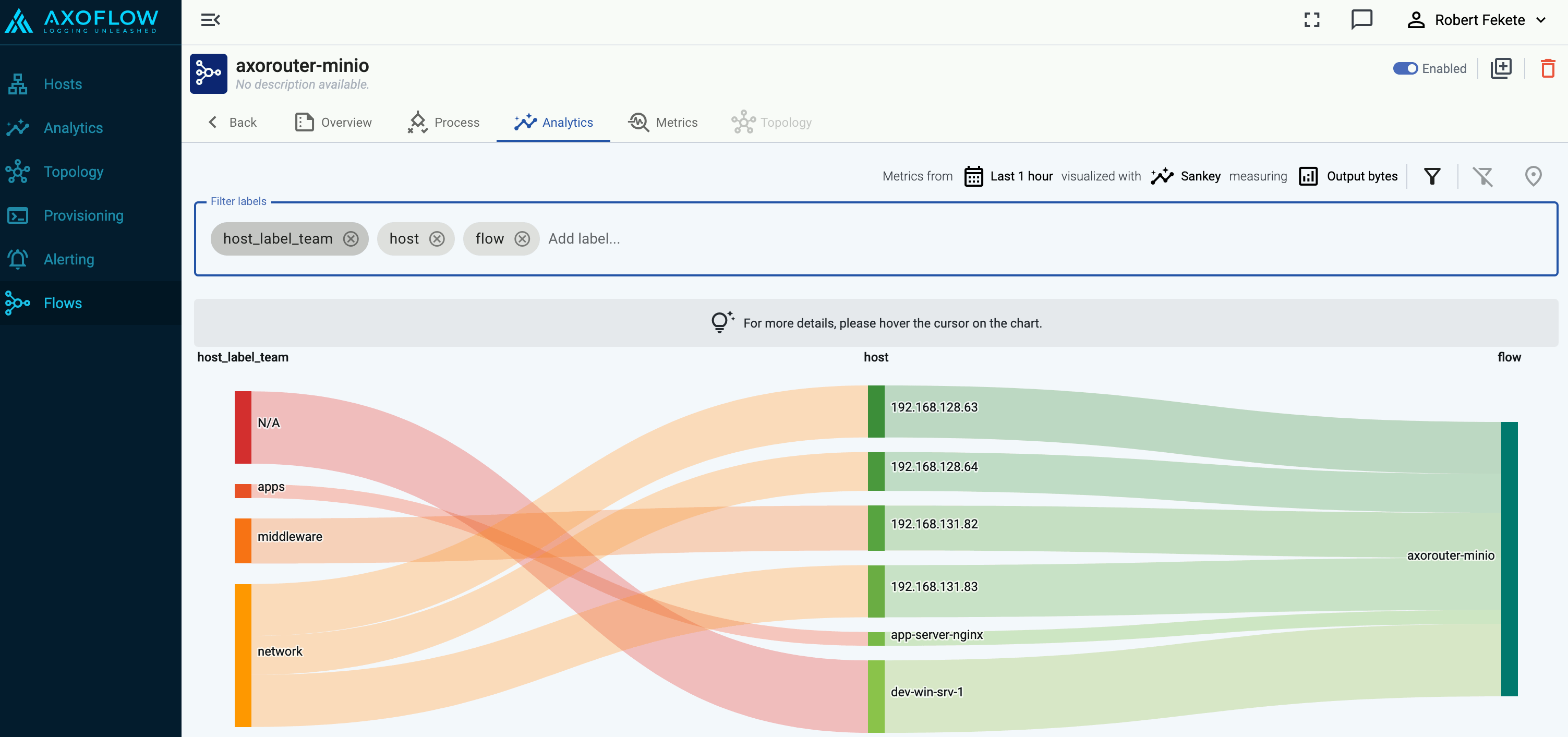

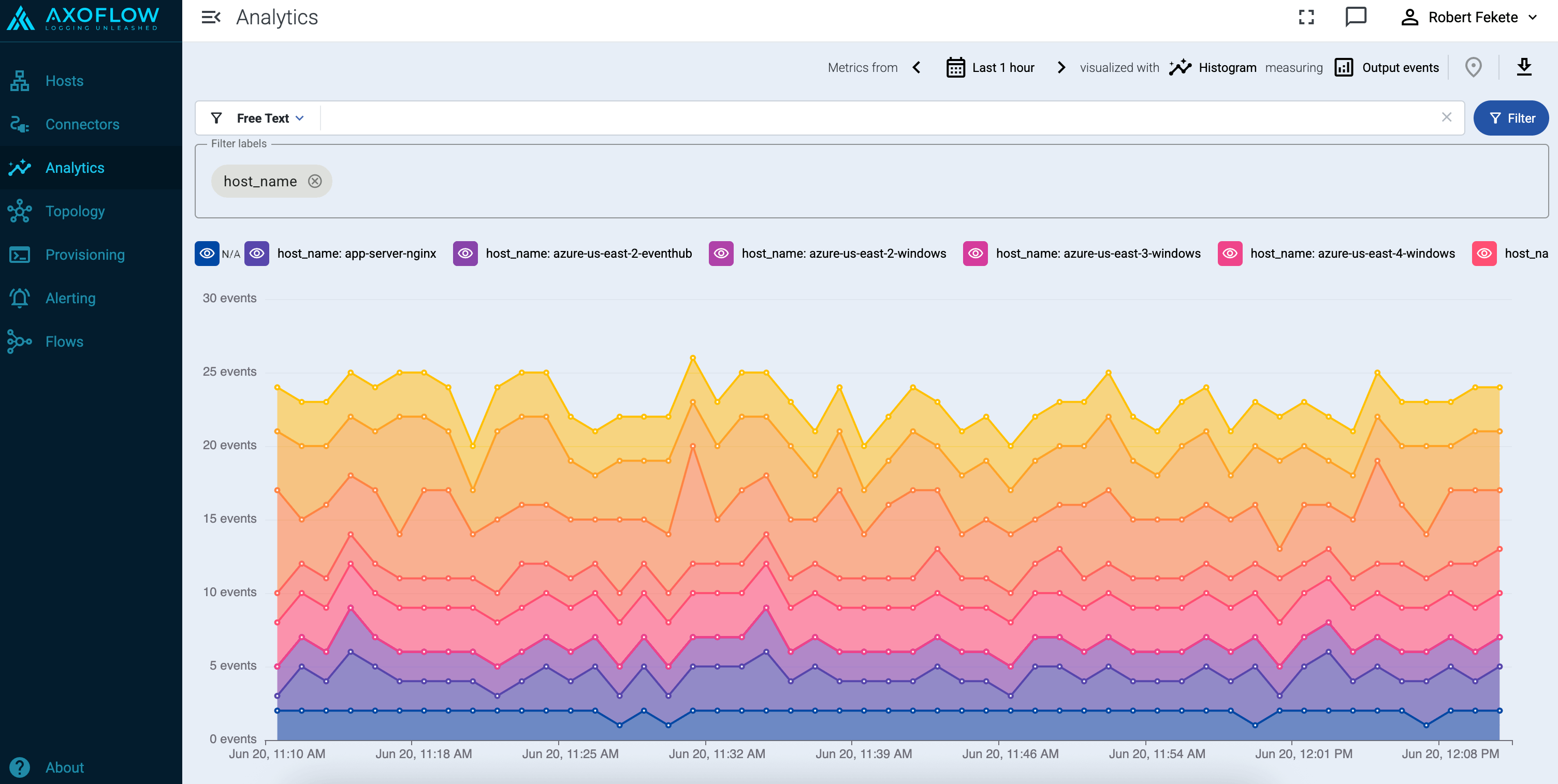

If you have traffic flowing from the source to your AxoRouter instance, the Topology page shows the amount of data flowing on the path. Click the AxoRouter instance, then select Analytics to visualize the data flow.

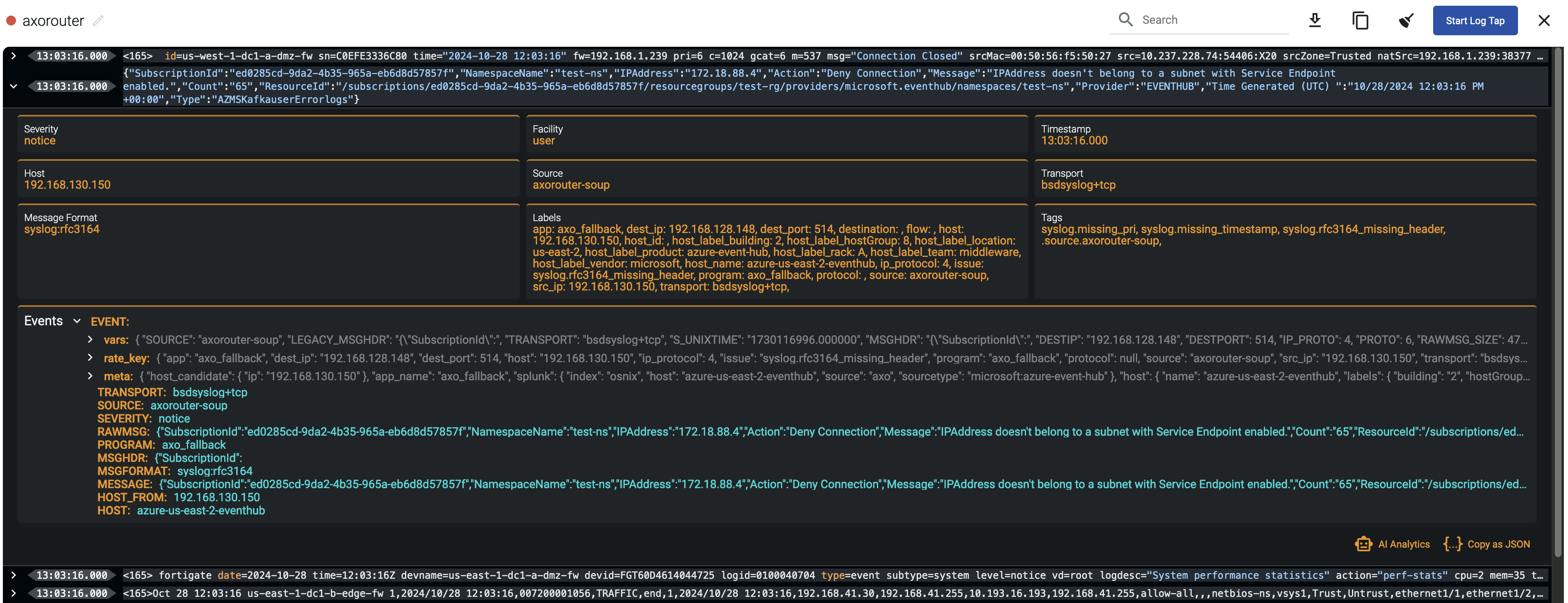

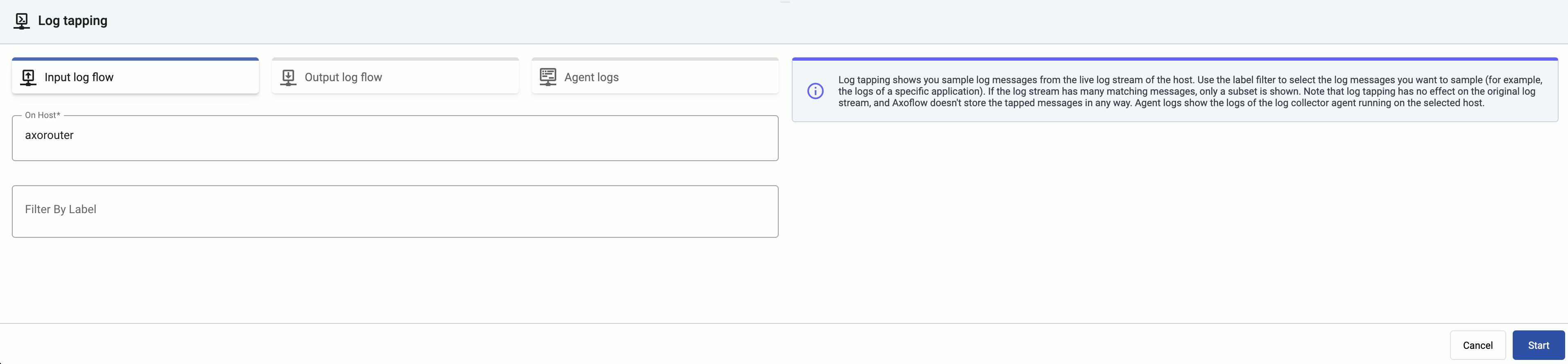

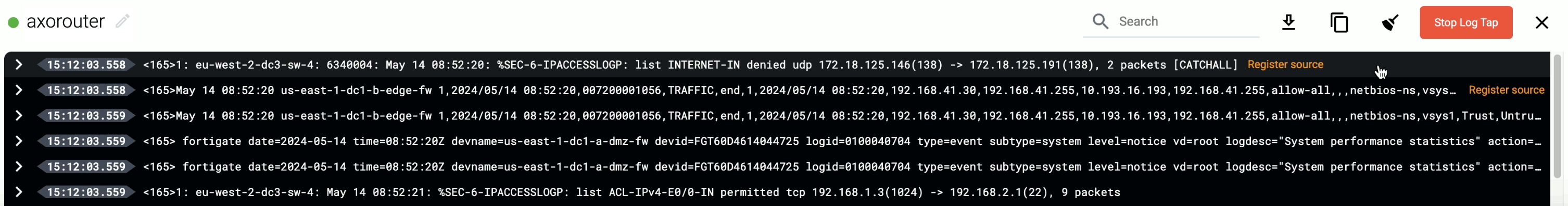

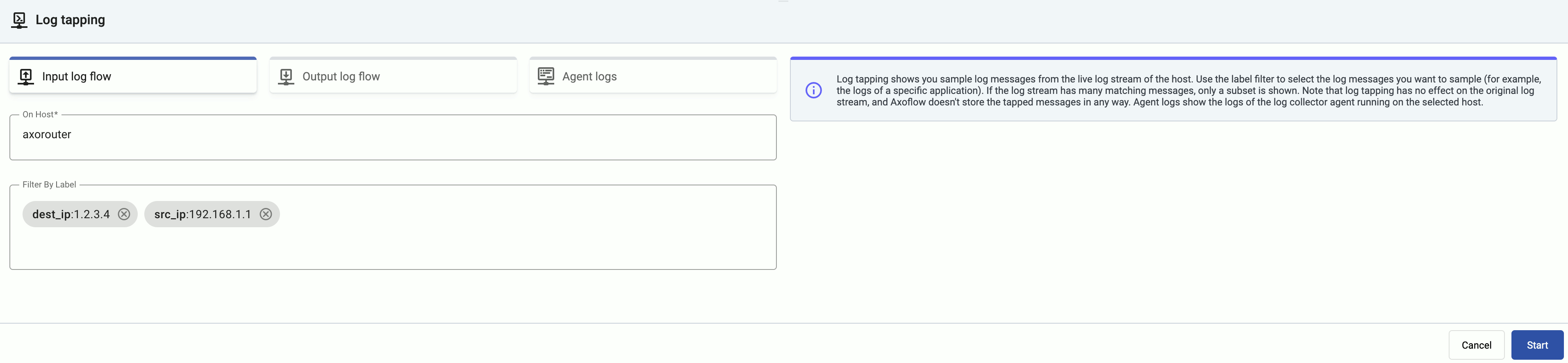

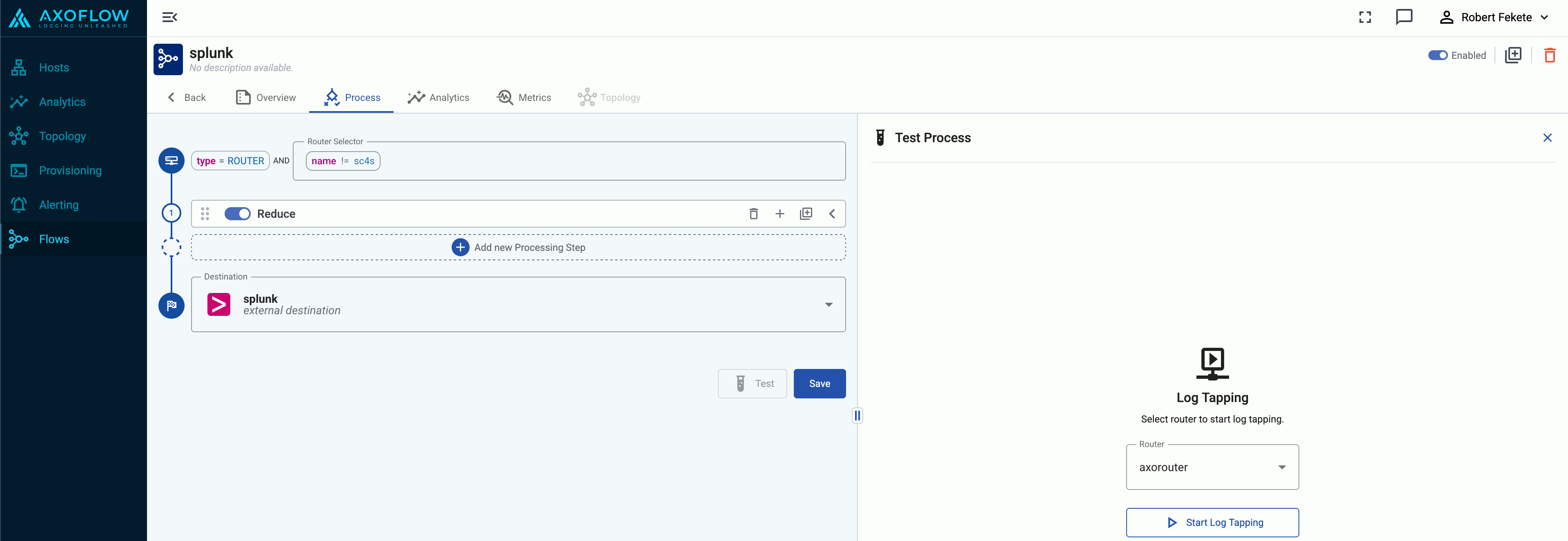

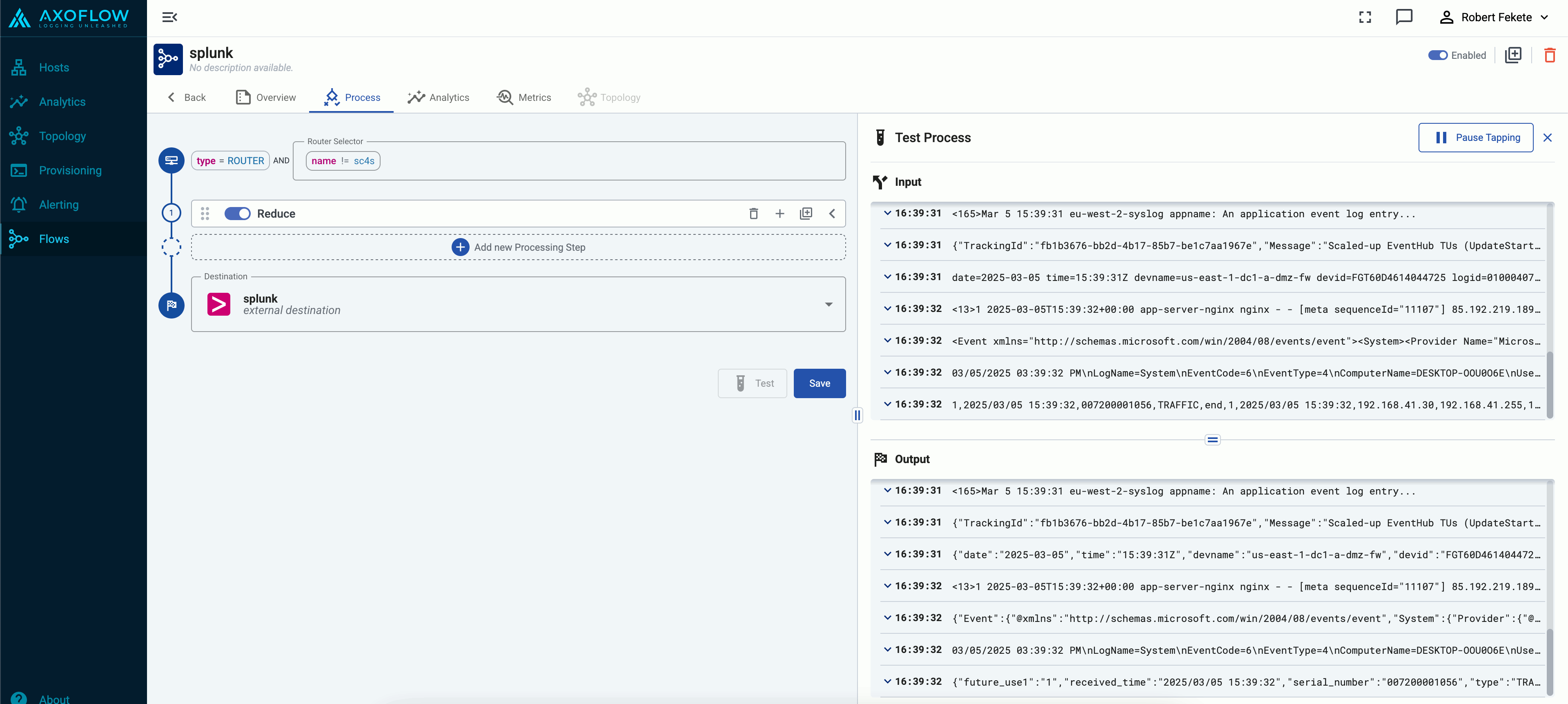

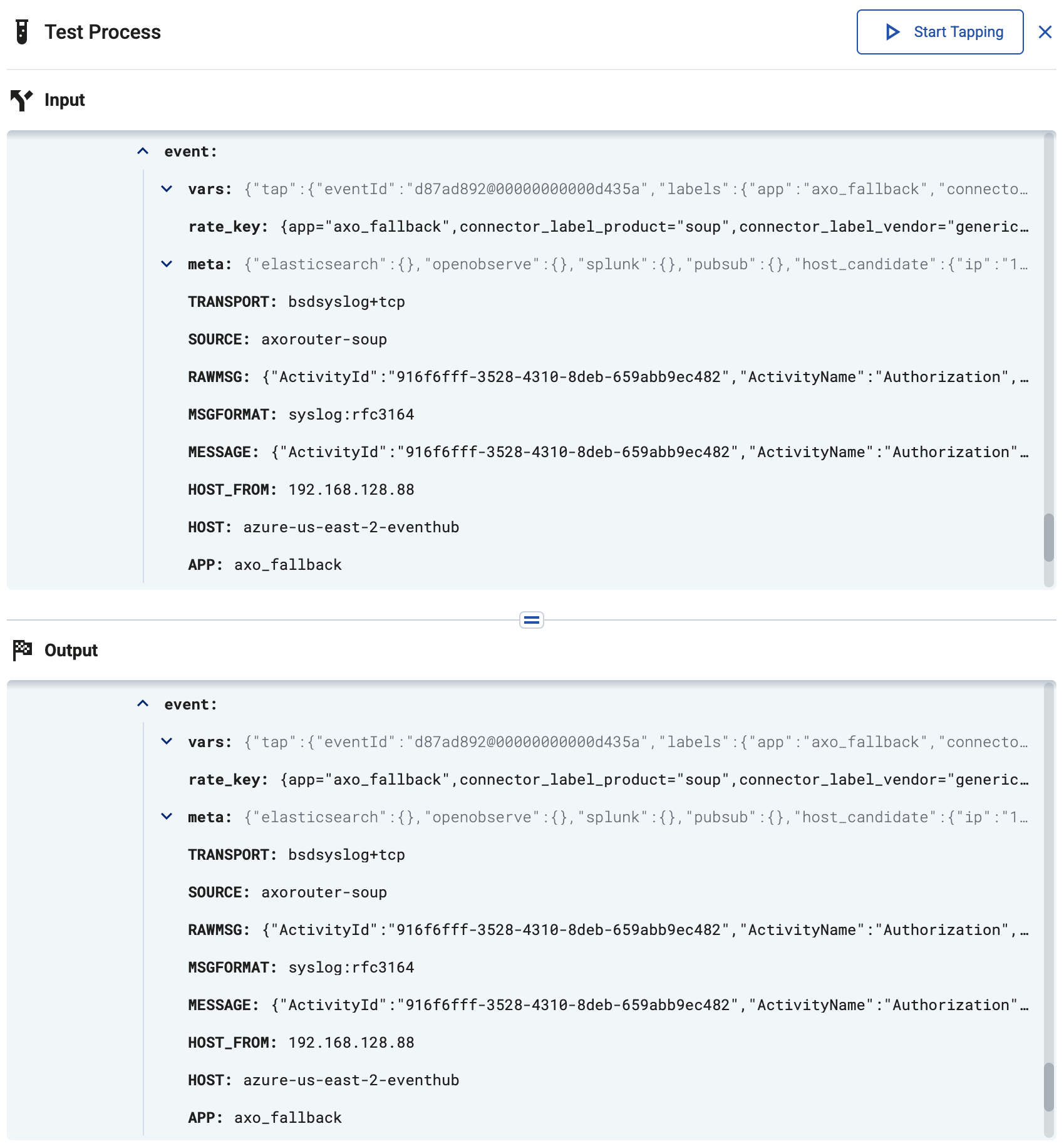

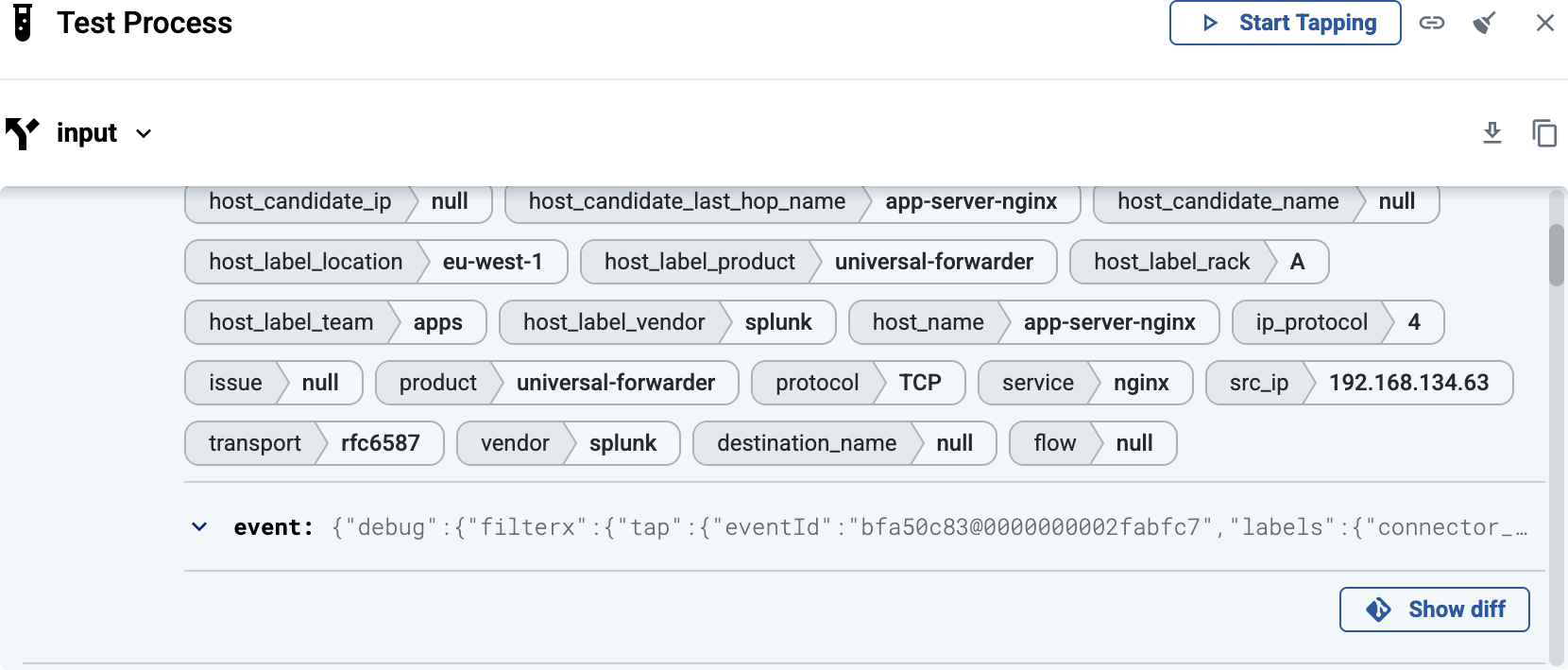

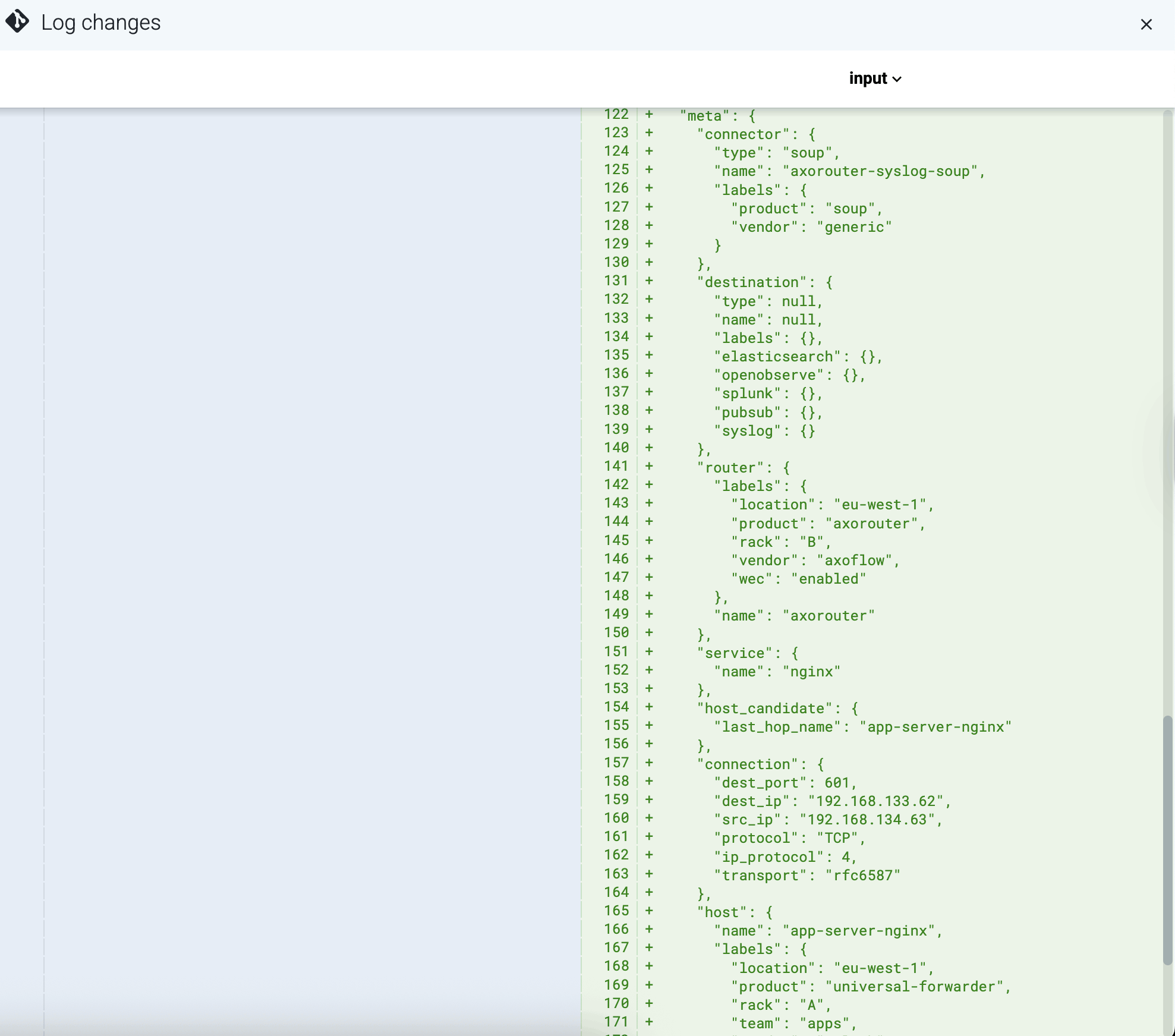

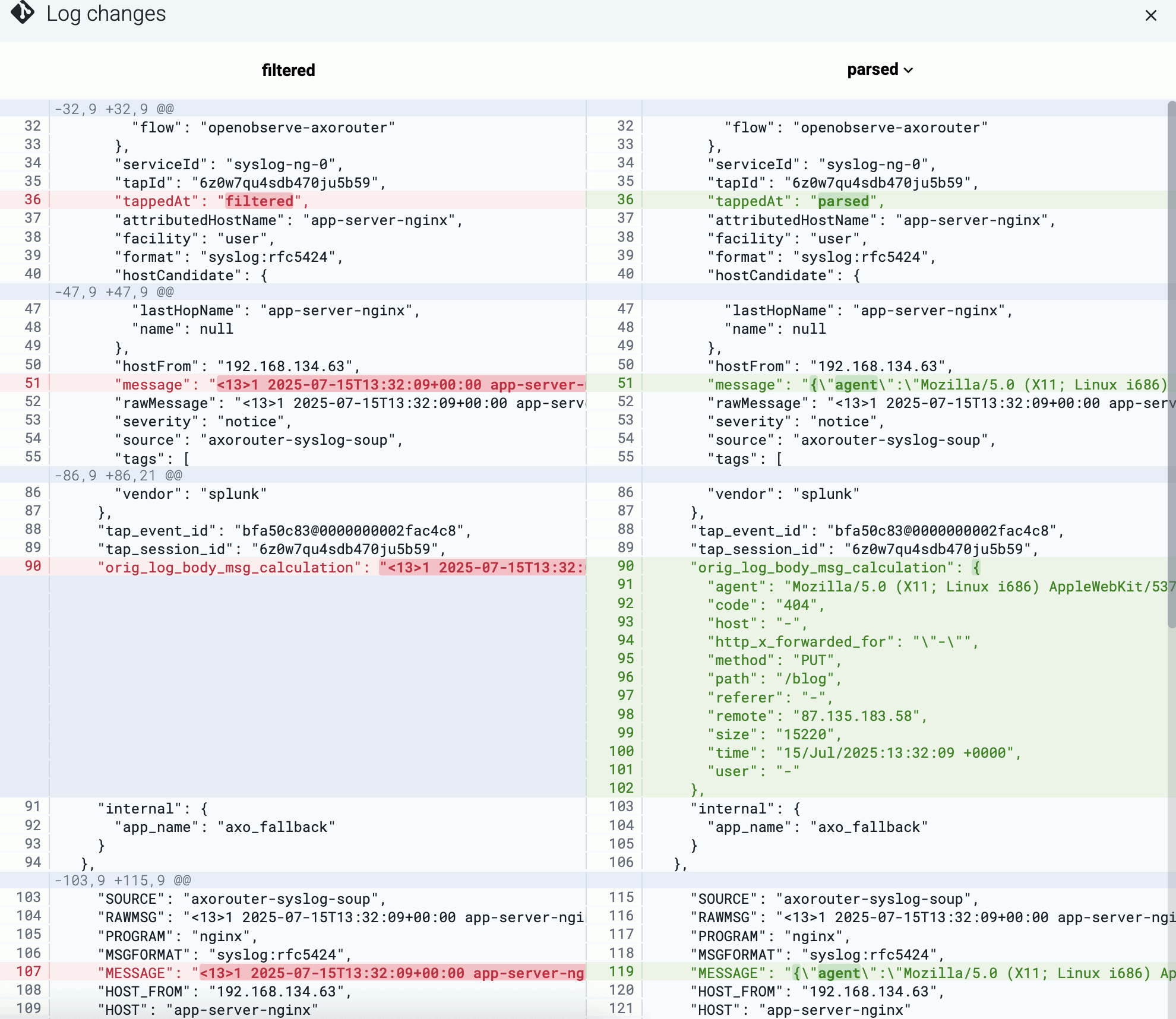

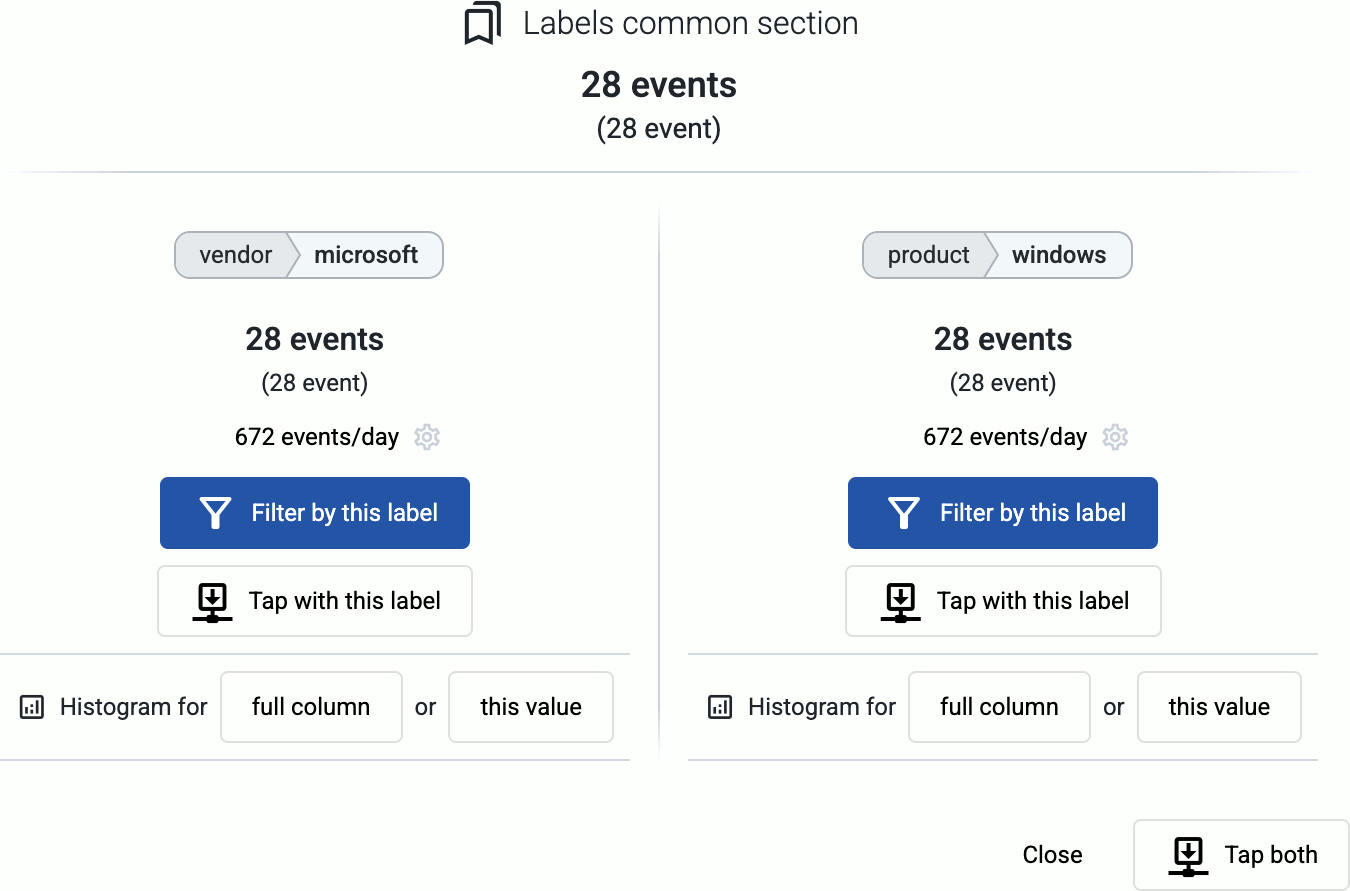

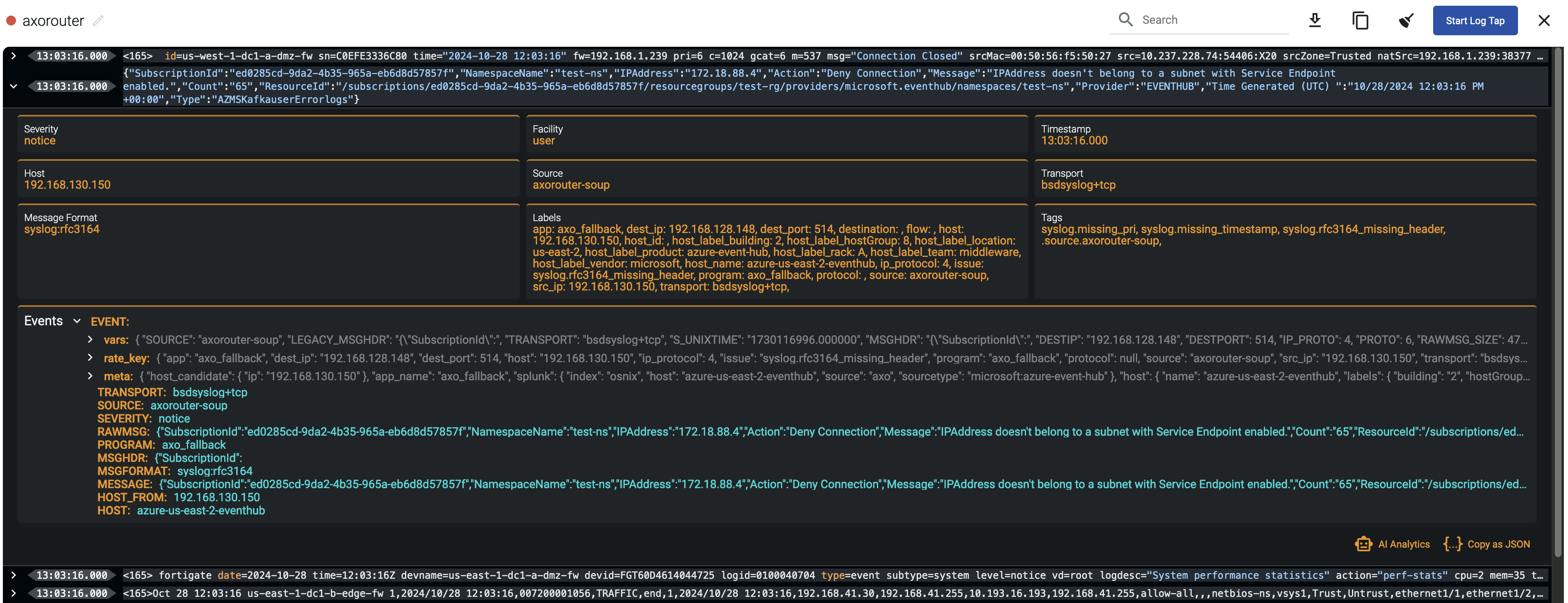

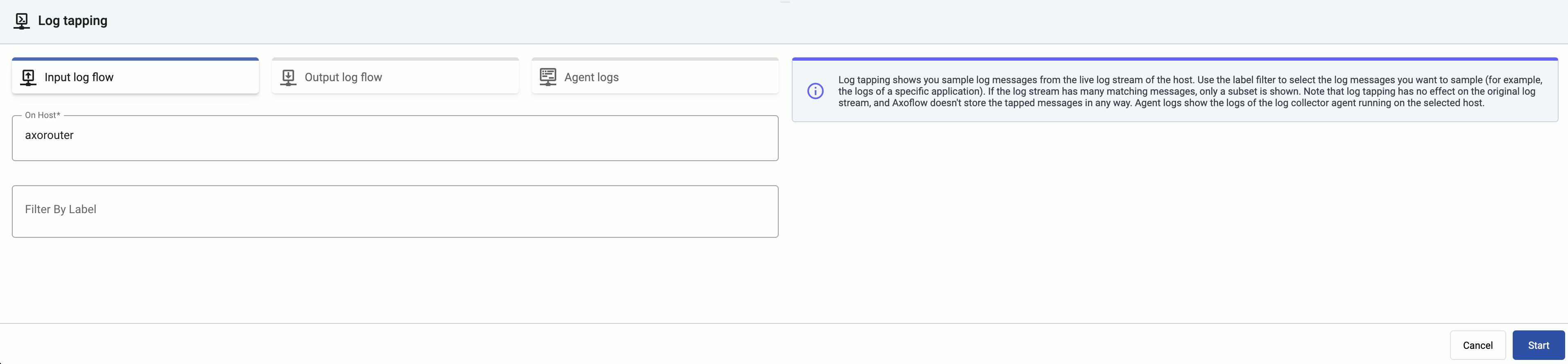

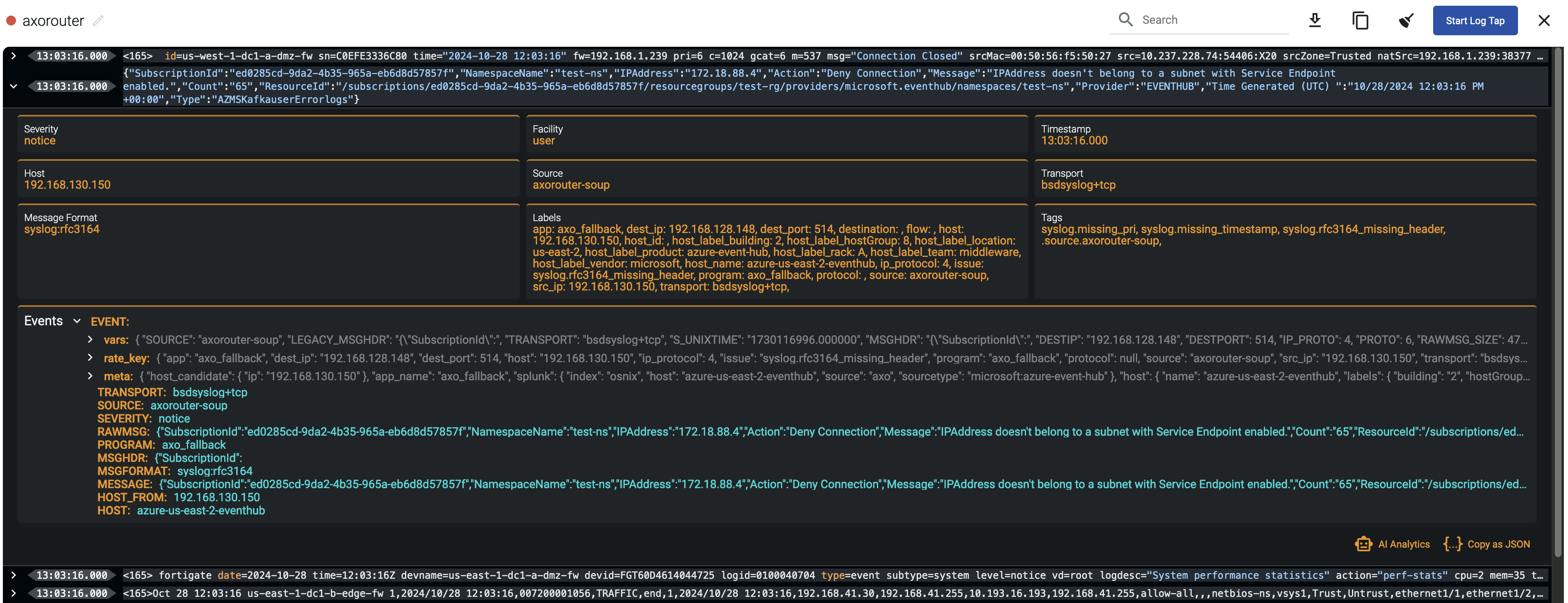

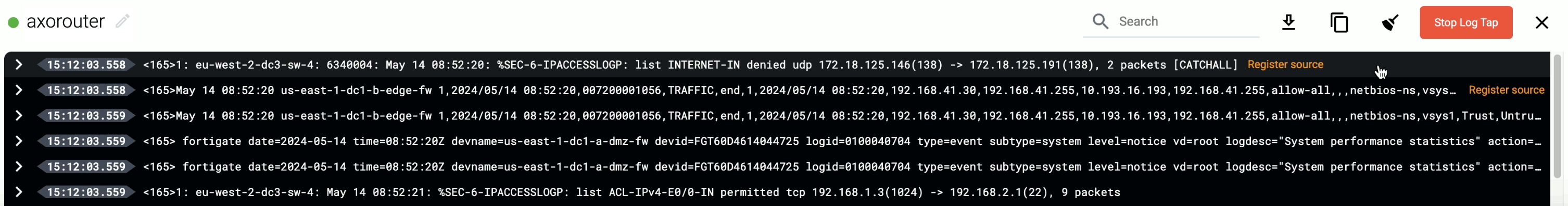

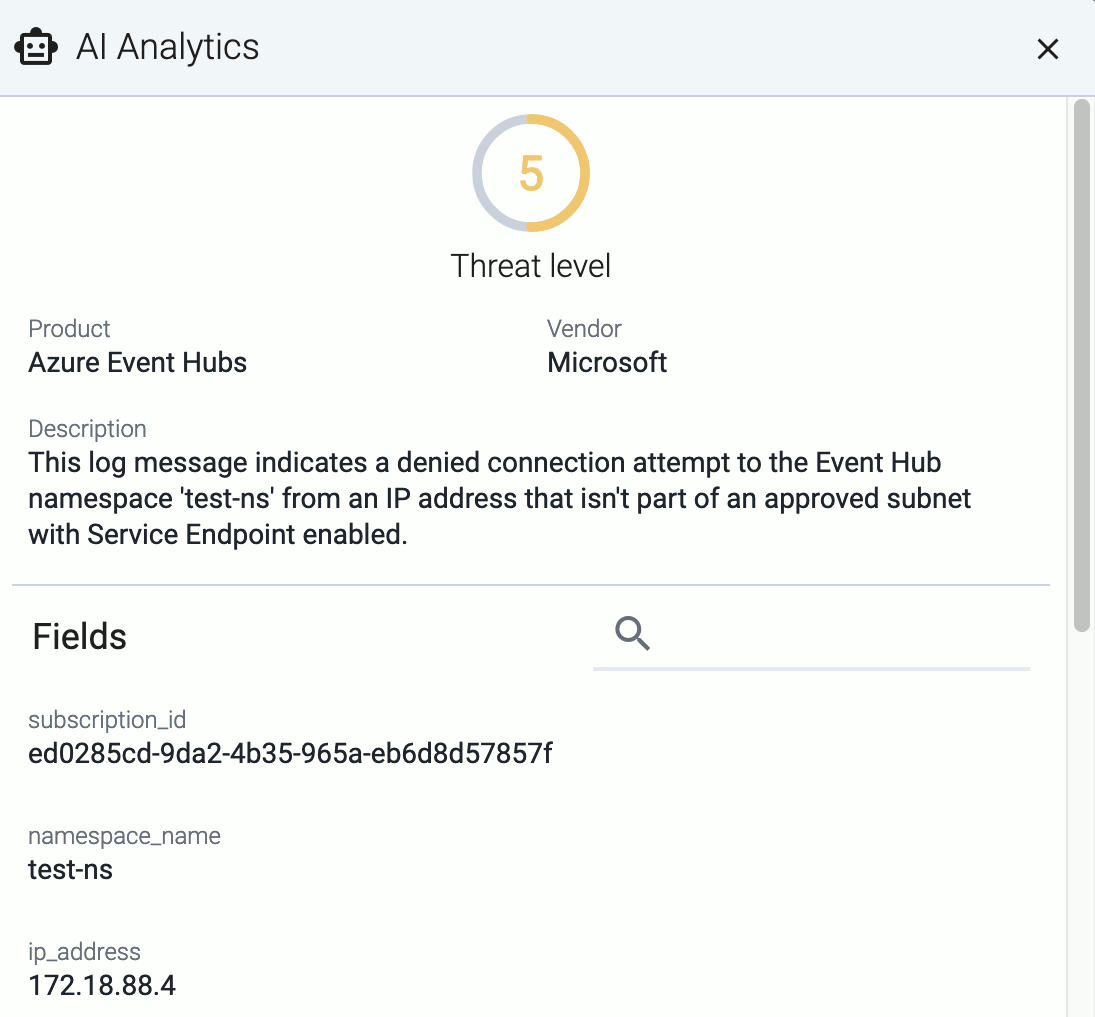

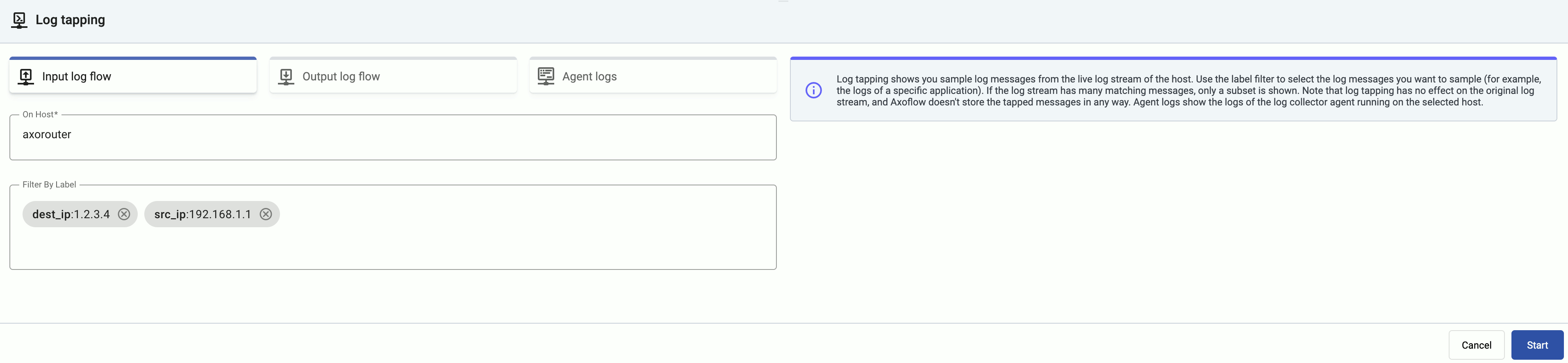

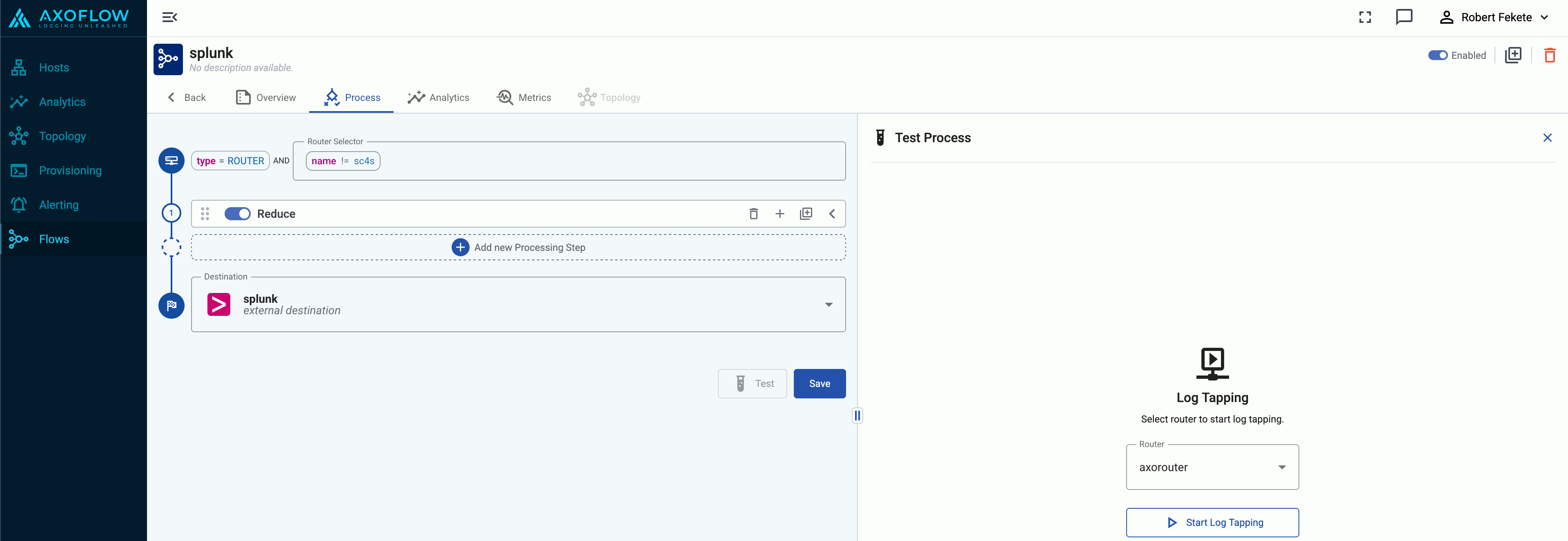

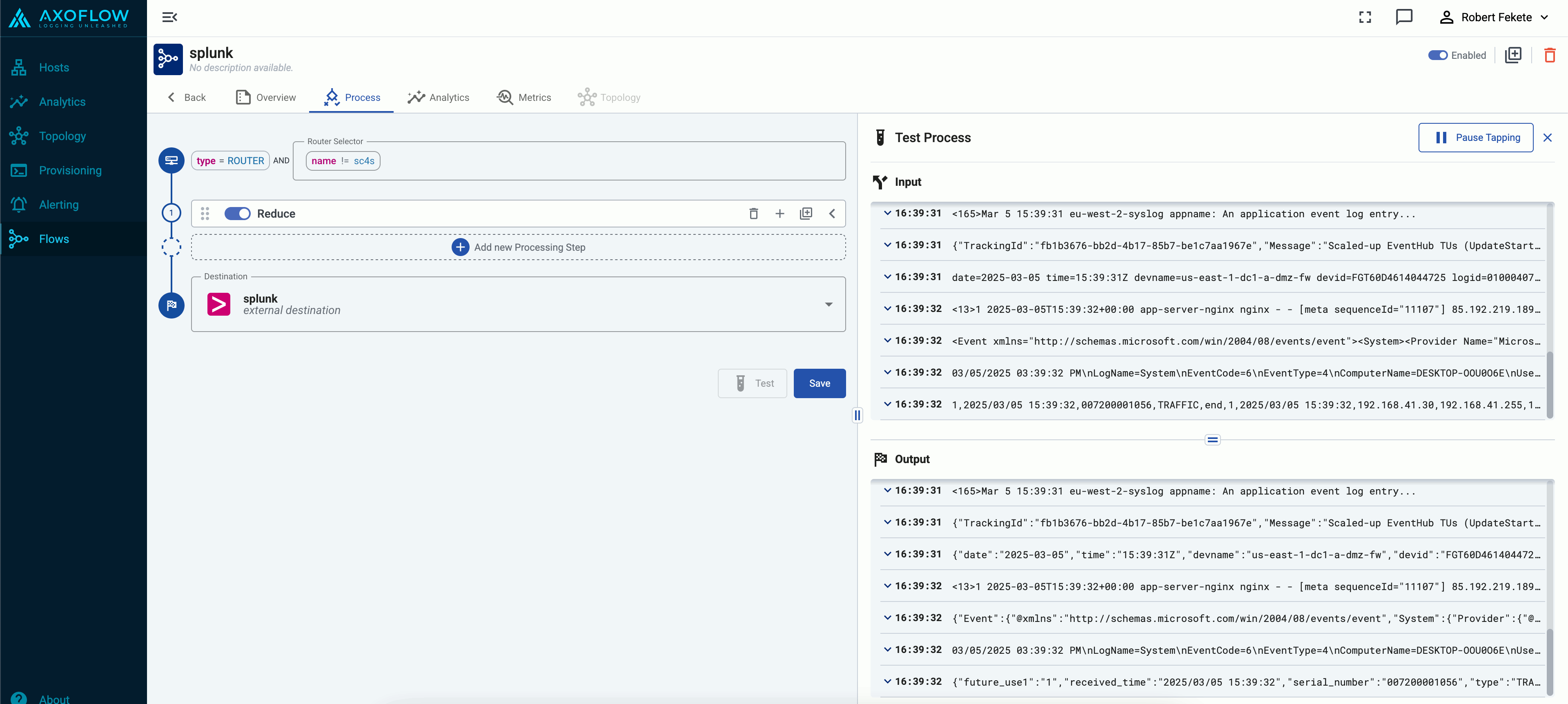

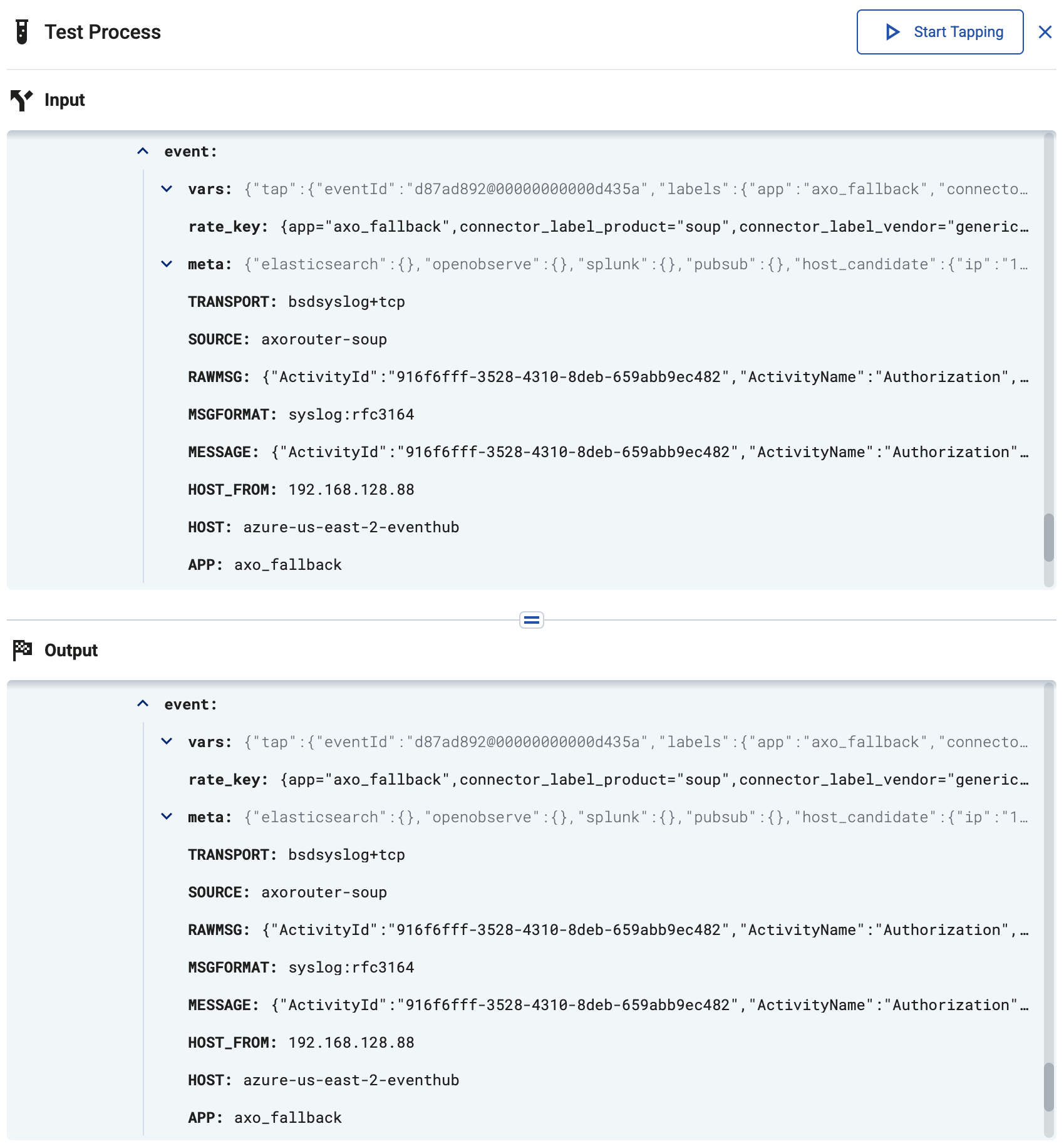

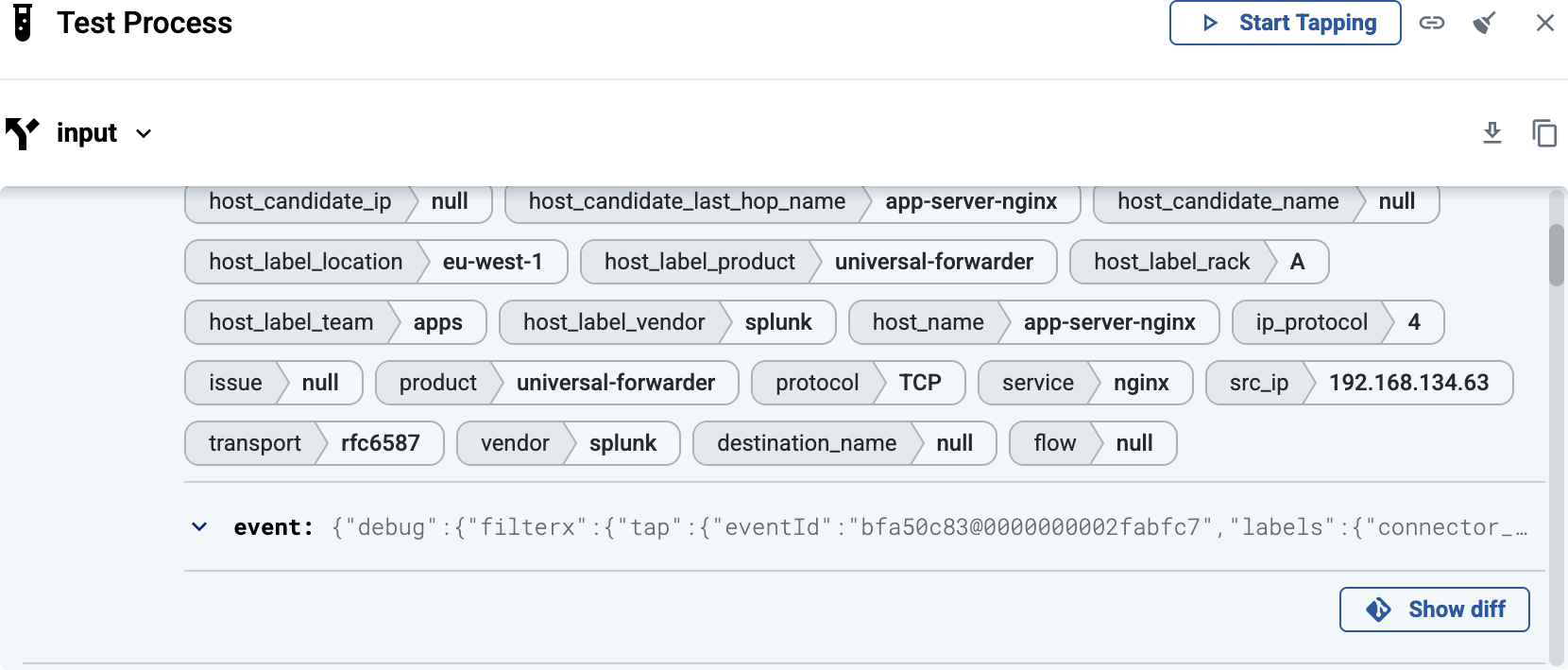

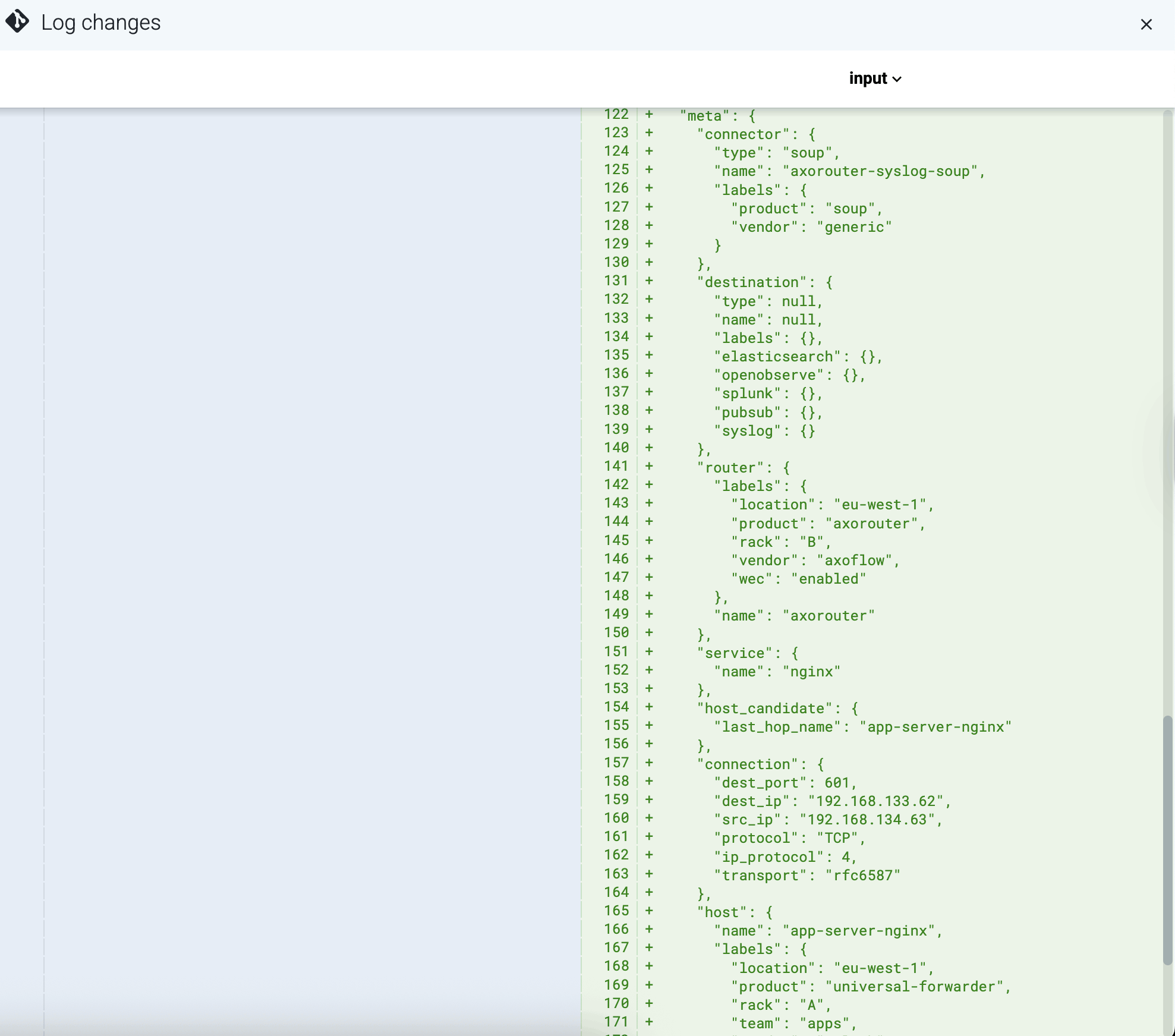

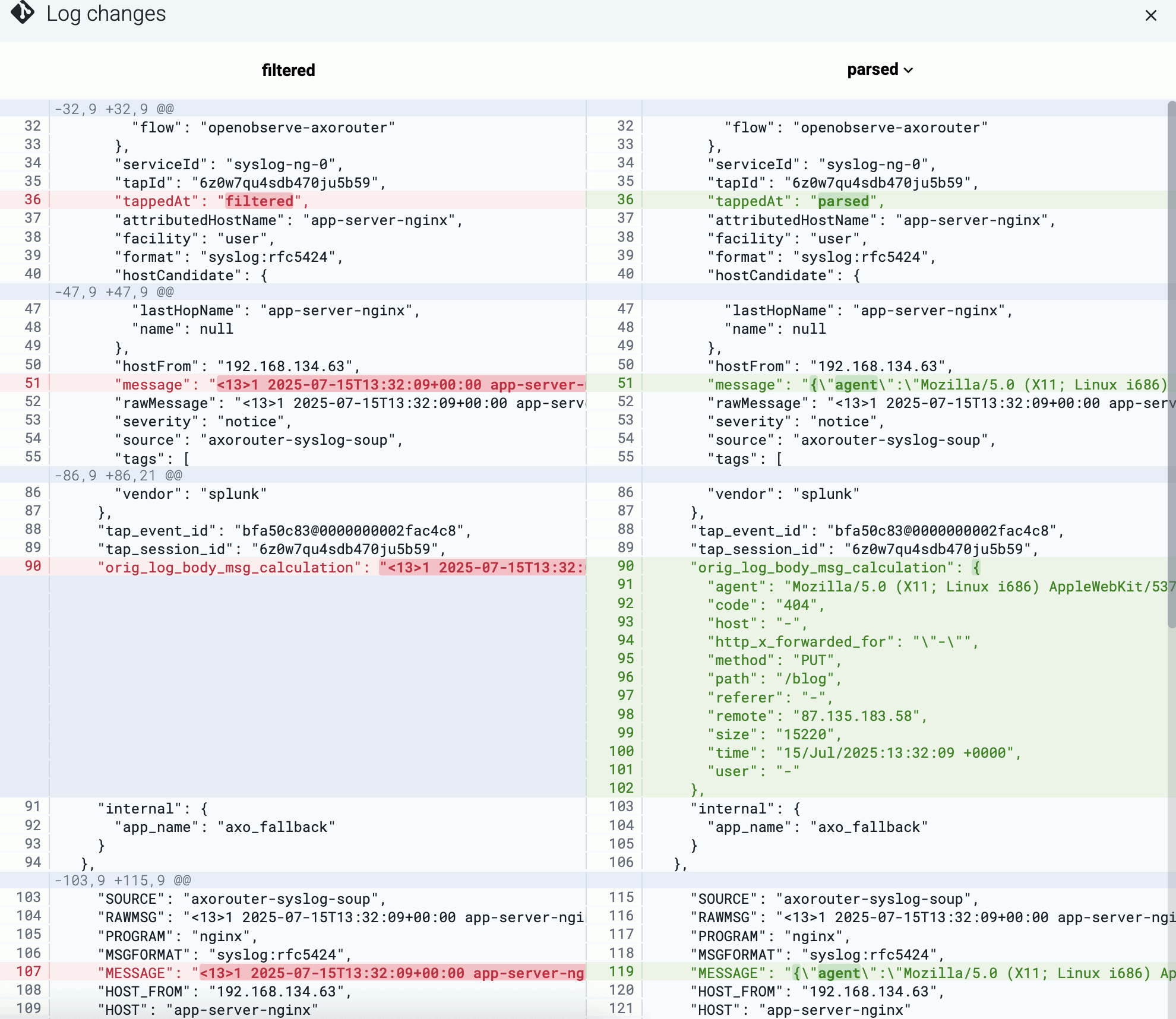

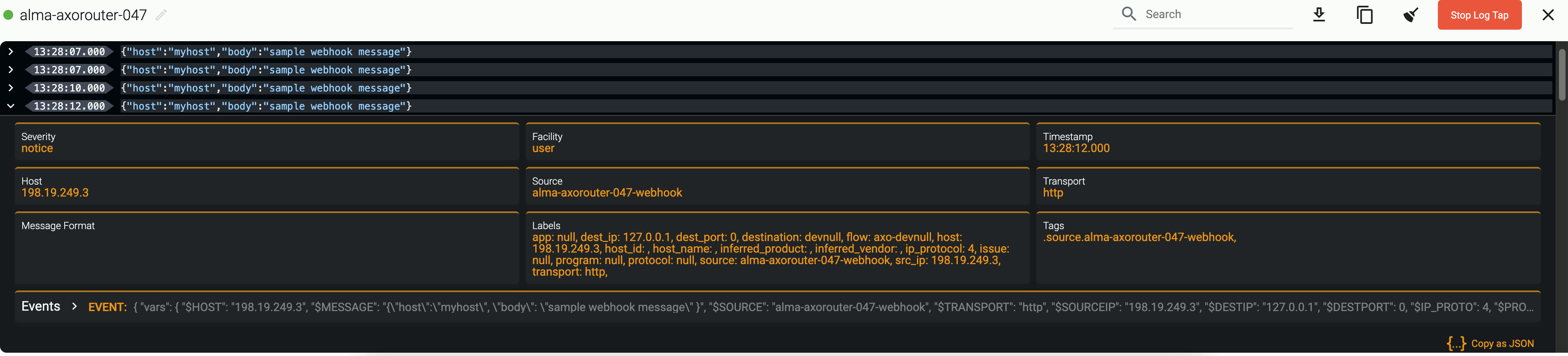

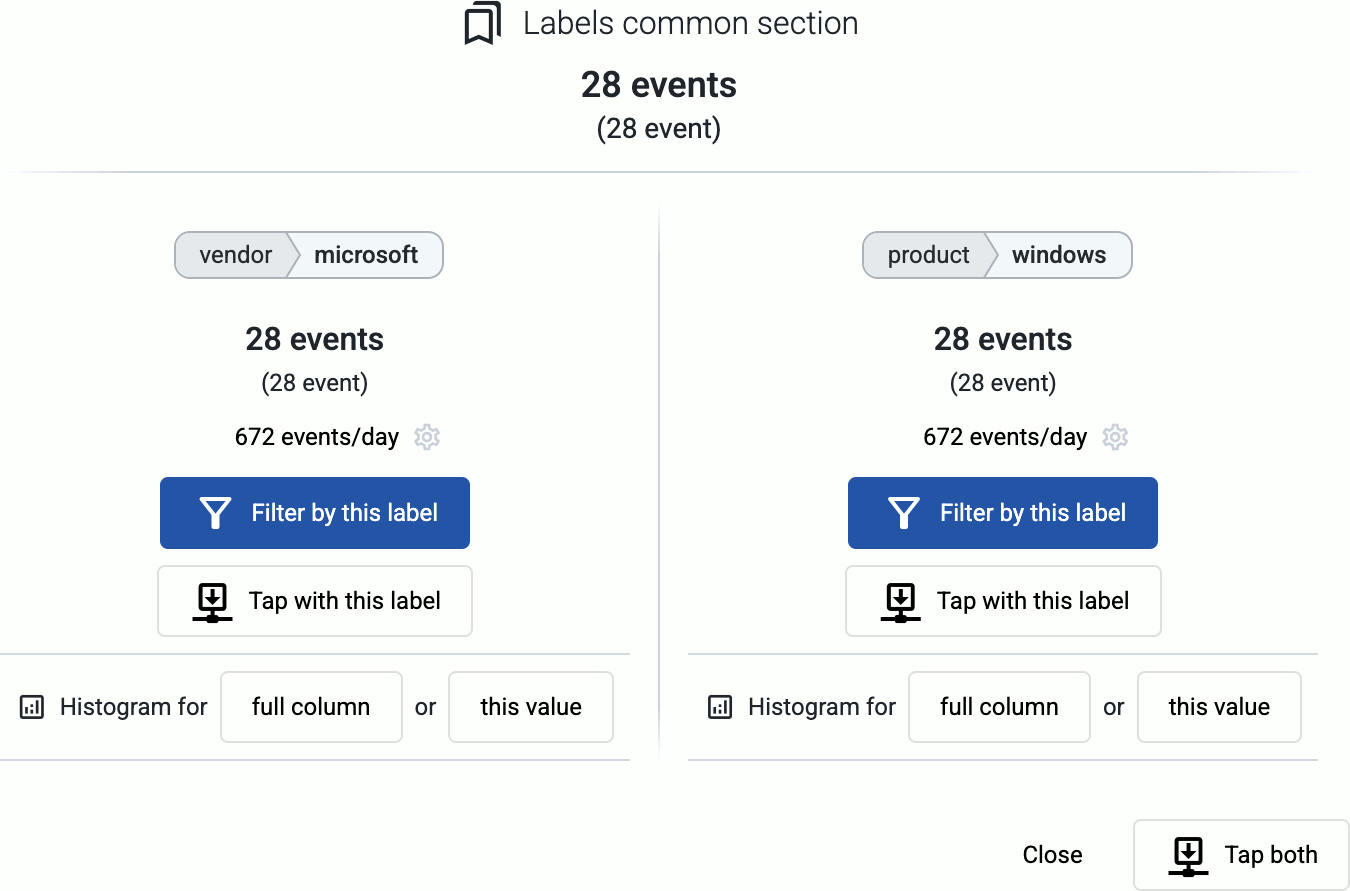

Tap into the log flow

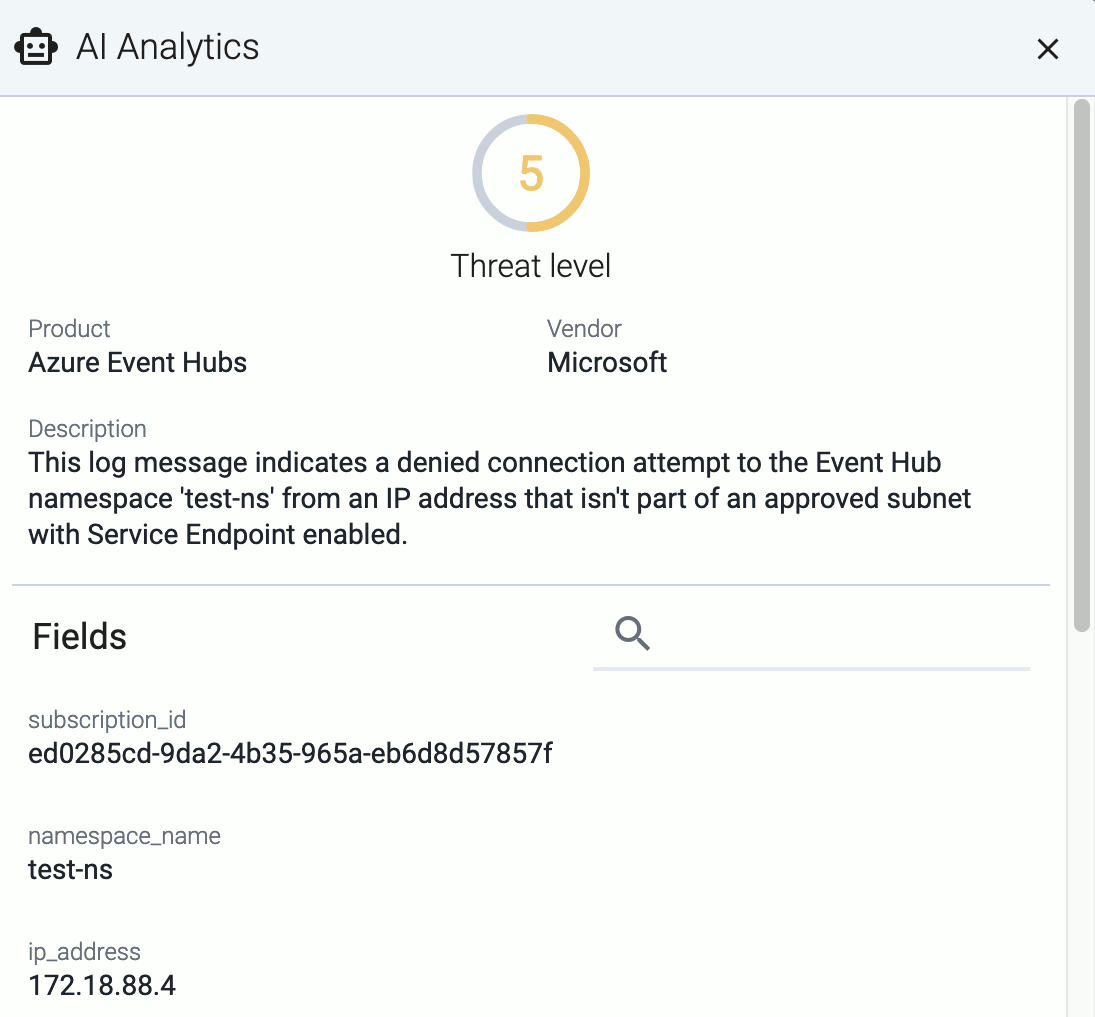

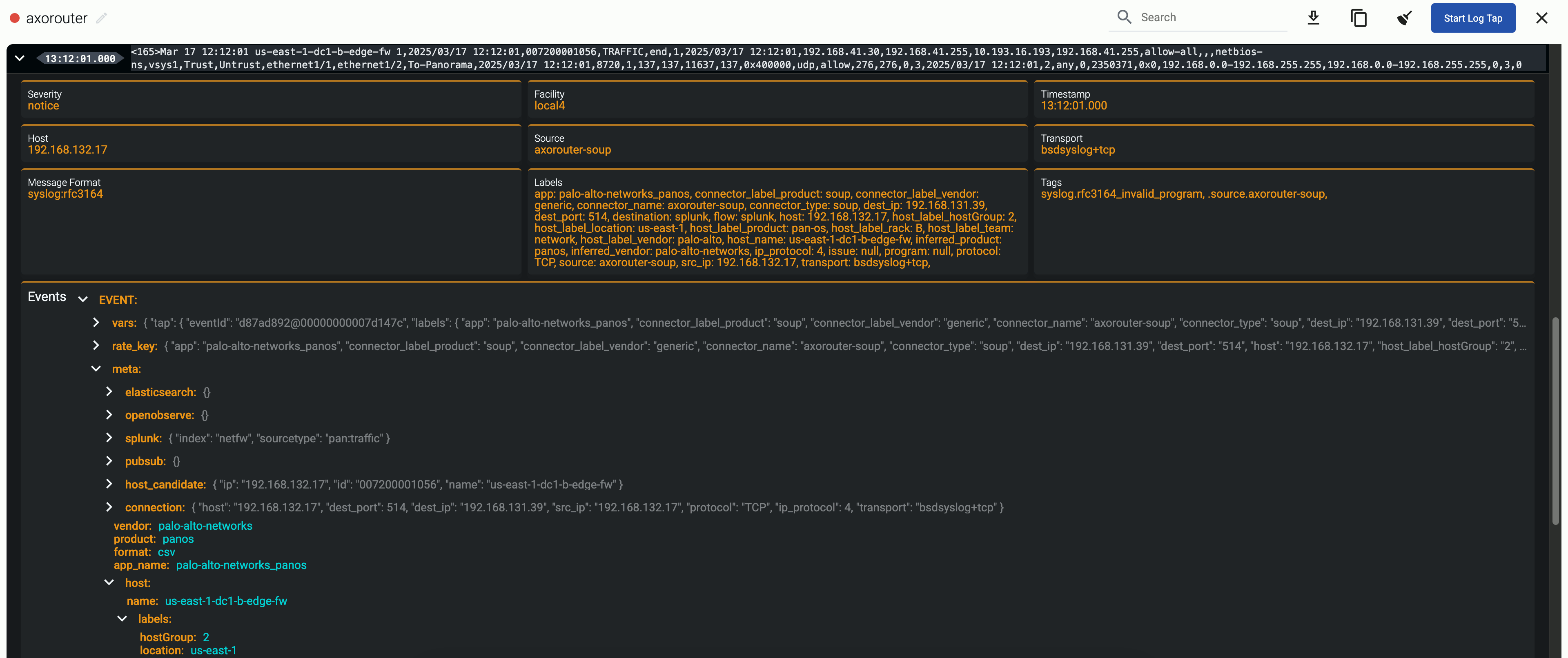

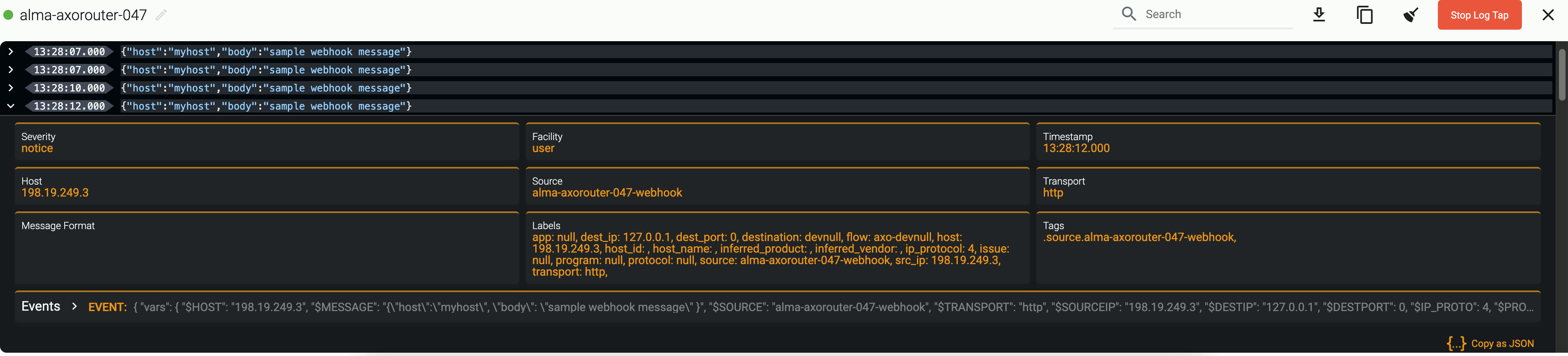

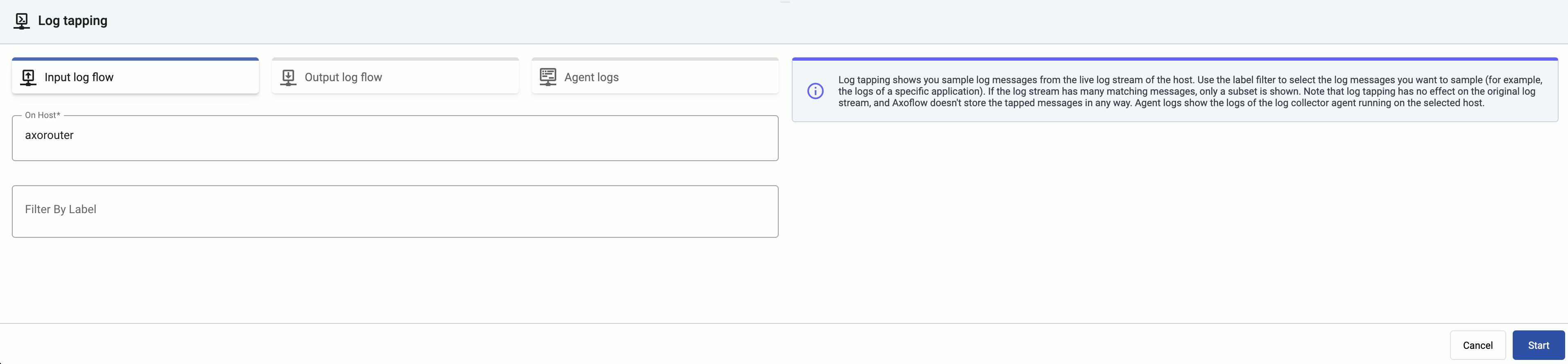

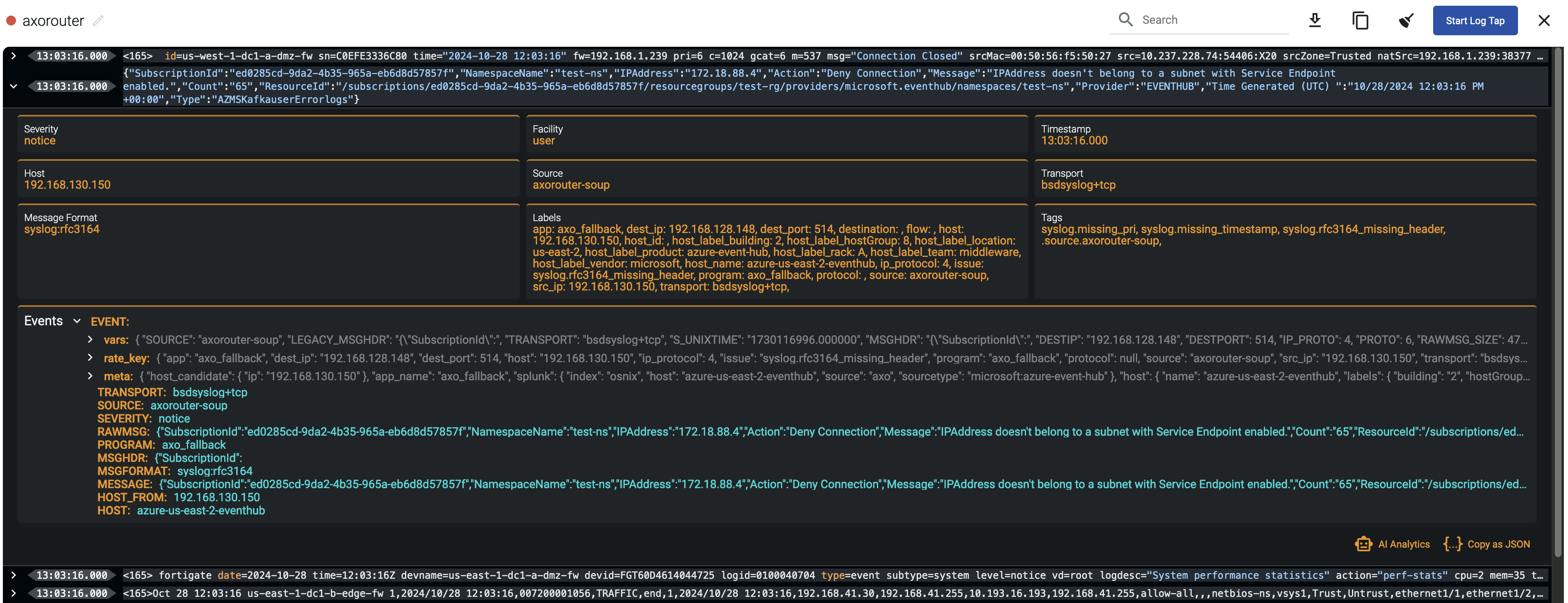

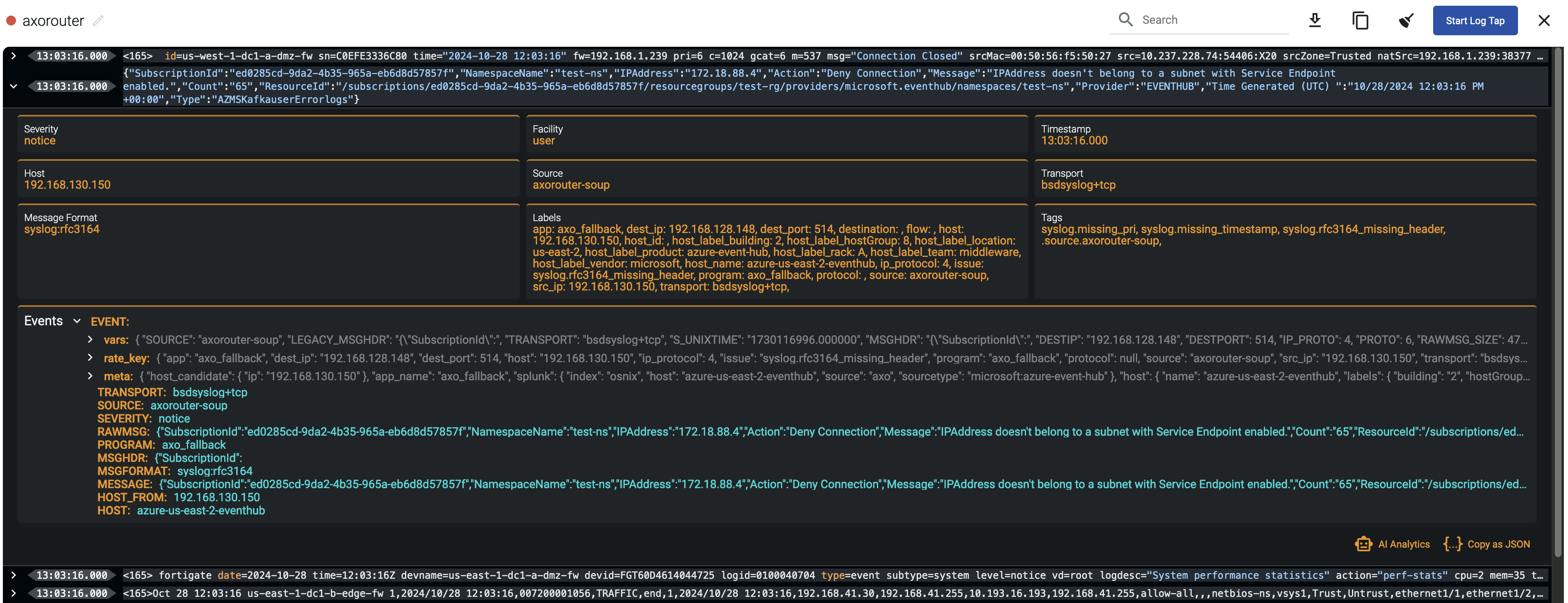

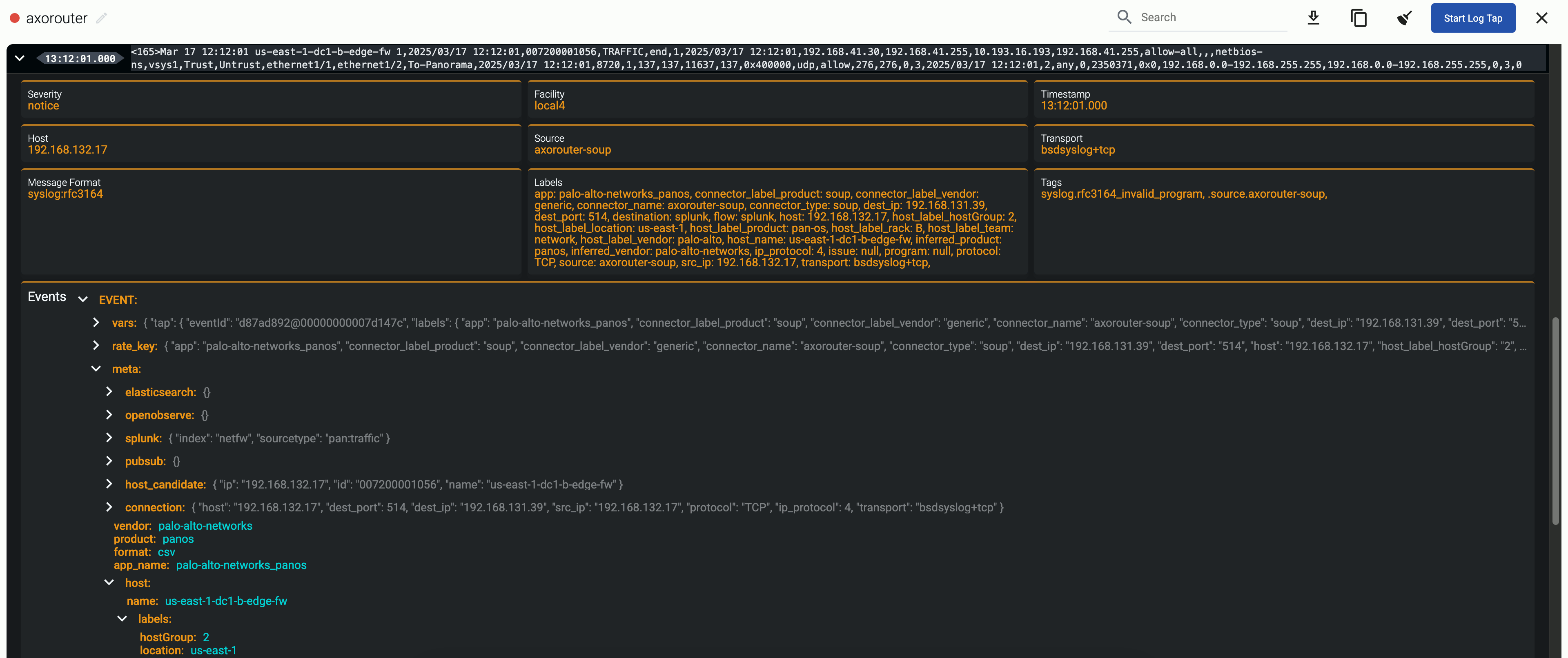

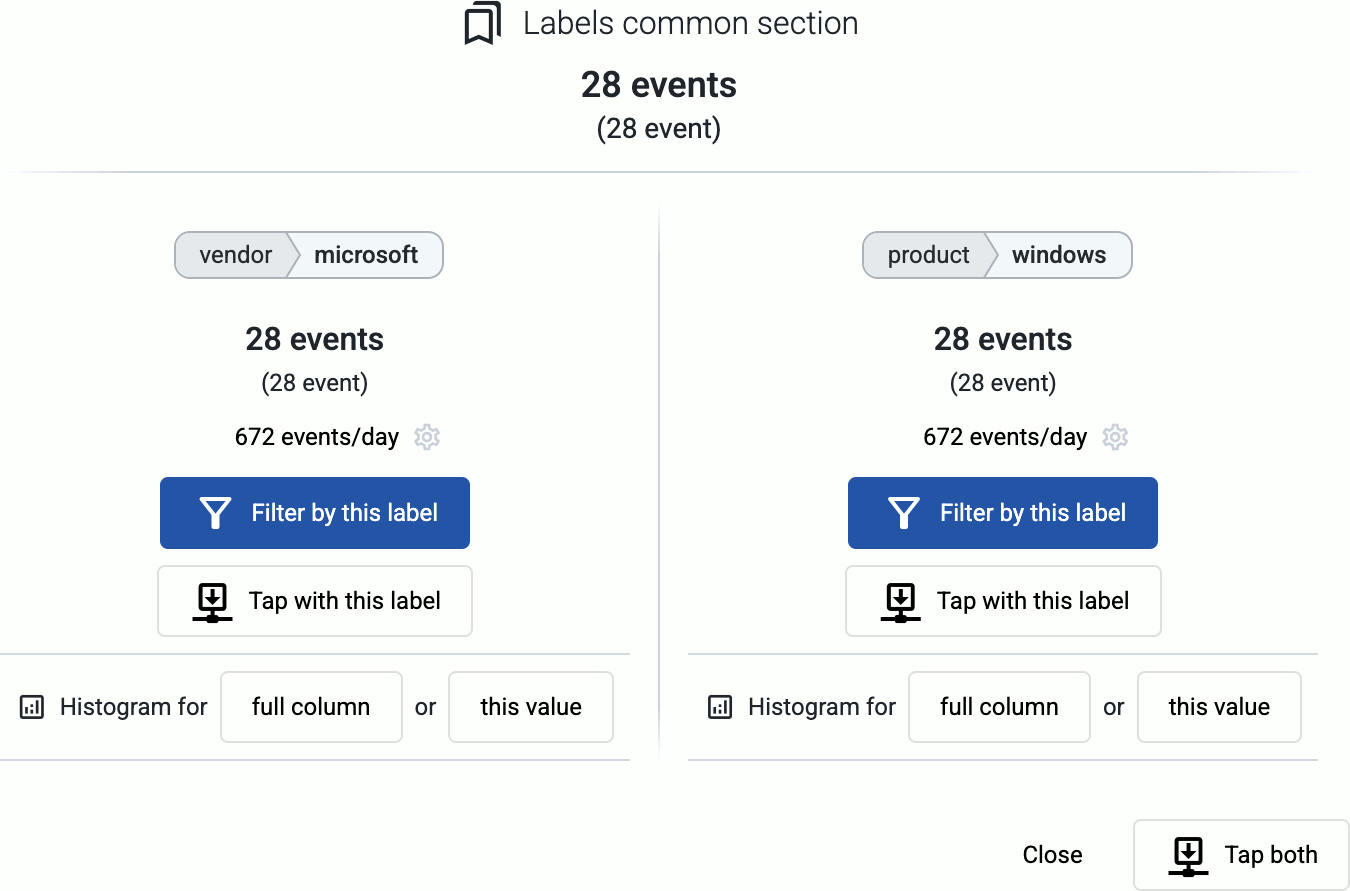

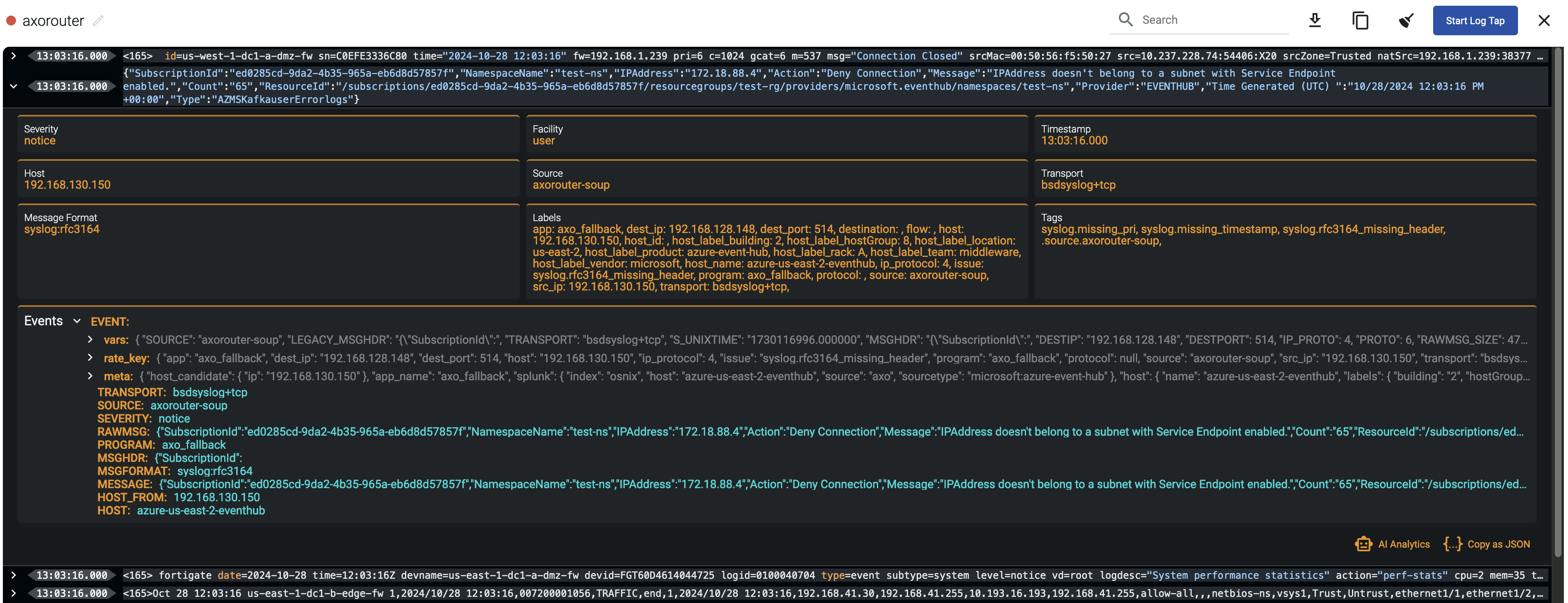

Log tapping in Axoflow samples the log flow. You can use labels to filter for specific messages (like ones with parse errors) and tap only those messages. To avoid overwhelming you with events, Axoflow automatically samples the output: if many messages match the selected filter, only a subset is shown (about 1 message per second). Using log tapping, you can quickly troubleshoot both parsing/curation errors and destination ingest (API) errors, and check:

- What was in the original message?

- What is sent in the final payload to the destination?
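Showing roughly one matching message per second is a form of rate-limited sampling. The following Python sketch illustrates the general idea with a simple per-interval limiter; it is not Axoflow's implementation, and the one-second default simply mirrors the approximate rate mentioned above. Timestamps are passed in explicitly so that the behavior is easy to test.

```python
from typing import Iterable, Iterator, Tuple


def sample_per_interval(events: Iterable[Tuple[float, str]],
                        interval: float = 1.0) -> Iterator[Tuple[float, str]]:
    """Yield at most one event per `interval` seconds and drop the rest.

    `events` is an iterable of (timestamp_in_seconds, message) pairs in time order.
    """
    next_allowed = float("-inf")
    for ts, msg in events:
        if ts >= next_allowed:
            next_allowed = ts + interval
            yield ts, msg


# Ten matching messages arrive within 2.5 seconds; only a few are shown.
burst = [(i * 0.25, f"msg-{i}") for i in range(10)]
shown = list(sample_per_interval(burst))
print([m for _, m in shown])
```

Dropping rather than queueing the excess messages keeps the view responsive during a burst, at the cost of showing only a subset.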

To tap into the log flow:

-

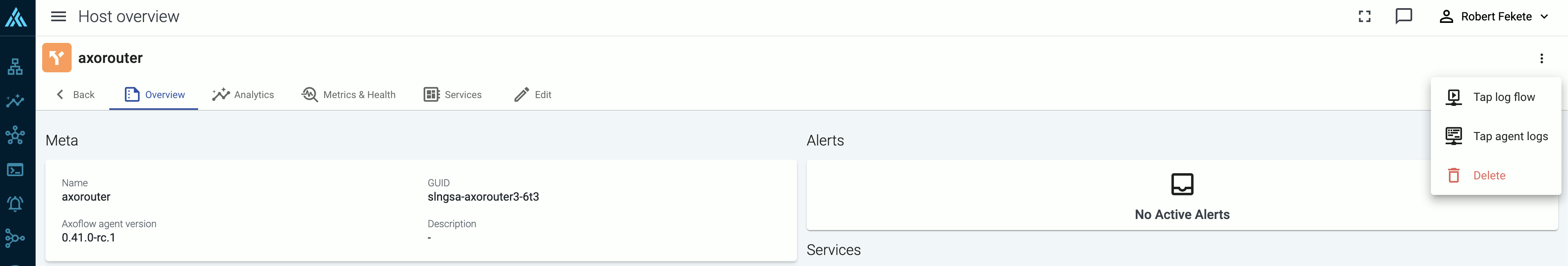

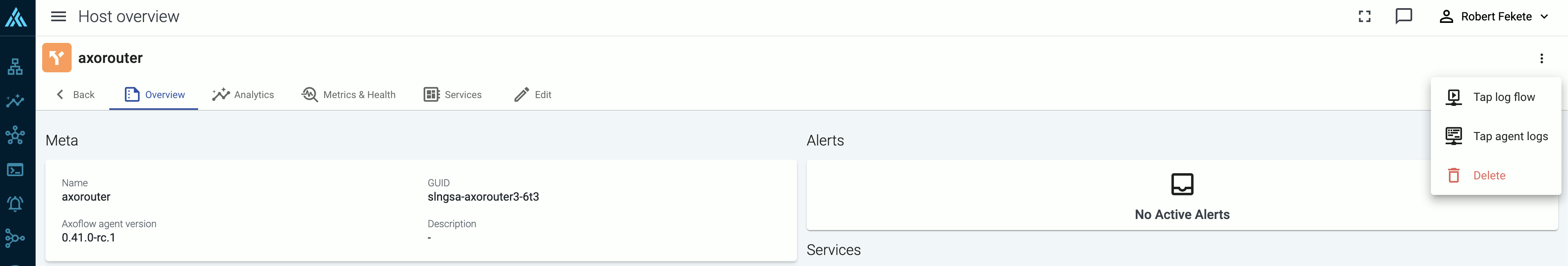

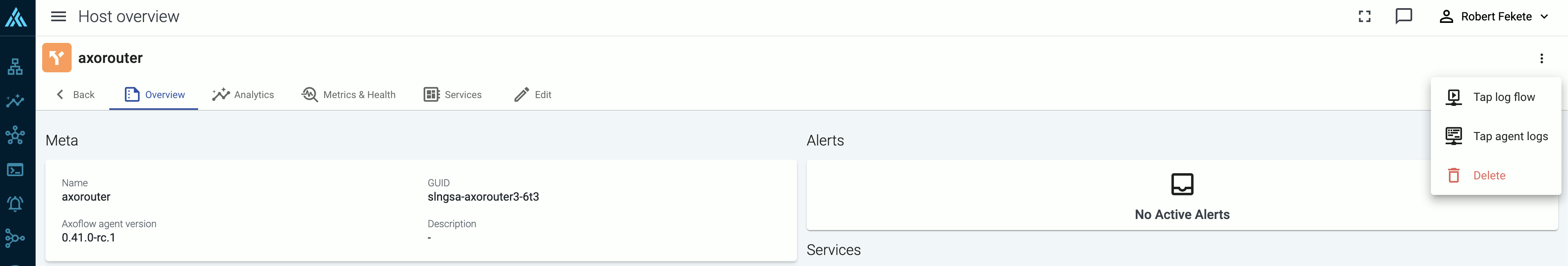

Click your AxoRouter instance on the Topology page, then select ⋮ > Tap log flow.

-

Tap into the log flow.

- To see the input data, select Input log flow > Start.

- To see the output data, select Output log flow > Start.

You can use labels to filter the messages and sample only the matching ones.

-

When the logs you’re interested in show up, click Stop Log Tap, then click a log message to see its details.

-

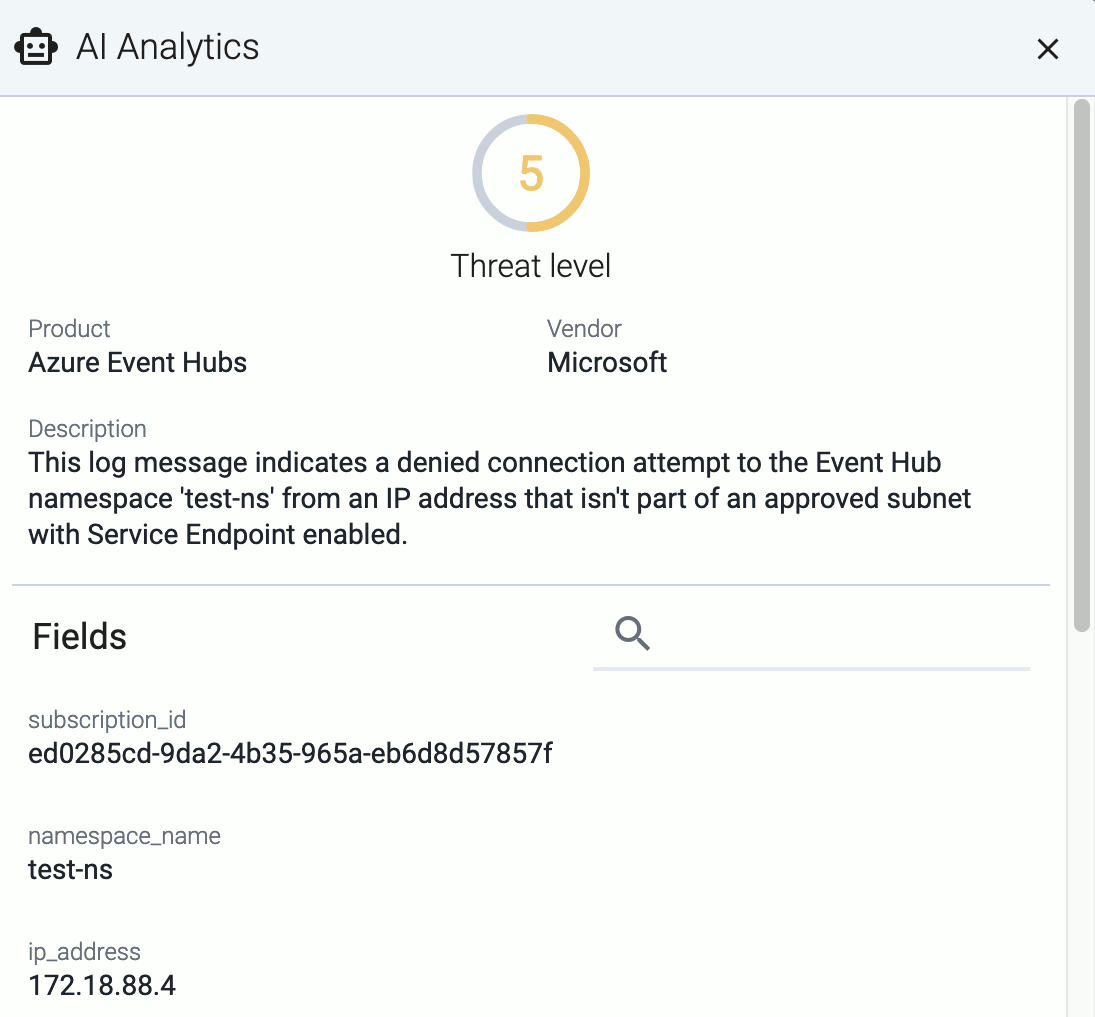

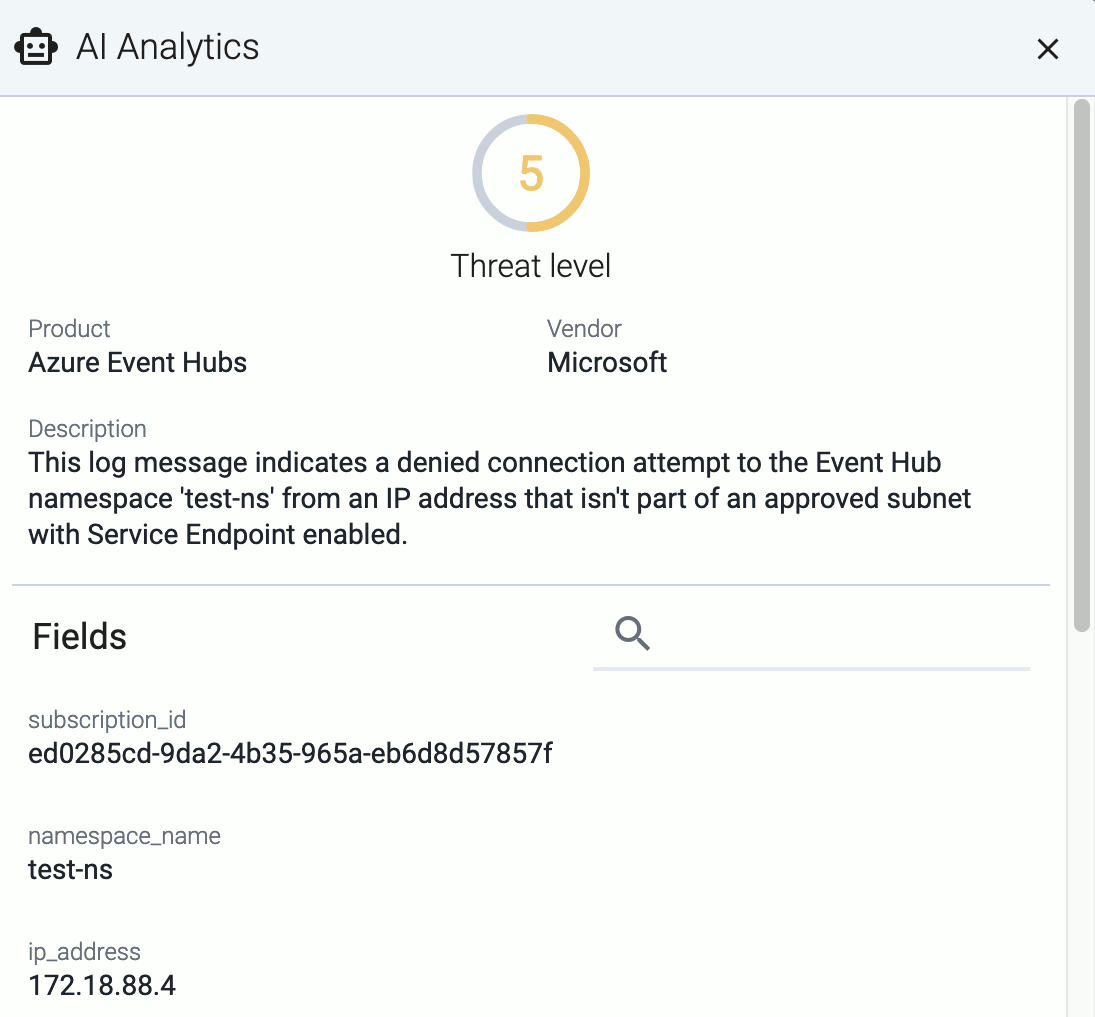

If you don’t know what the message means, select AI Analytics to ask our AI to interpret it.

Troubleshooting

In case you run into problems, or you’re not getting any data in Splunk, check the logs of your AxoRouter instance:

-

Select Topology, then select your AxoRouter instance.

-

Select ⋮ > Tap agent logs > Start. Axoflow displays the log messages of AxoRouter. Check the logs for error messages. Some common errors include:

- `Redirected event for unconfigured/disabled/deleted index=netops with source="source::axo" host="host::axosyslog-almalinux" sourcetype="sourcetype::fortigate_event" into the LastChanceIndex. So far received events from 1 missing index(es).`: The Splunk index where AxoRouter is trying to send data doesn’t exist. Check which index is missing in the error message and create it in Splunk. (For a list of recommended indexes, see the Splunk destination prerequisites.)

- `http: error sending HTTP request; url='https://prd-p-sp2id.splunkcloud.com:8088/services/collector/event/1.0?index=&source=&sourcetype=', error='SSL peer certificate or SSH remote key was not OK', worker_index='0', driver='splunk--flow-axorouter4-almalinux#0', location='/usr/share/syslog-ng/include/scl/splunk/splunk.conf:104:3'`: Your Splunk deployment uses an invalid or self-signed certificate, and the Verify server certificate option is enabled in the Splunk destination of Axoflow. Either fix the certificate in Splunk, or select Topology > <your-splunk-destination>, disable Verify server certificate, then select Update.

6 - Concepts

This section describes the main concepts of Axoflow.

6.1 - Automatic data processing

When forwarding data to your SIEM, poor quality data and malformed logs that lack critical fields like timestamps or hostnames need to be fixed. The usual solutions fix the problem in the SIEM, and involve complex regular expressions, which are difficult to create and maintain. SIEM users often rely on vendors to manage these rules, but support is limited, especially for less popular devices. Axoflow offers a unique solution by automatically processing, curating, and classifying data before it’s sent to the SIEM, ensuring accurate, structured, and optimized data, reducing ingestion costs and improving SIEM performance.

The problem

The main issue related to classification is that many devices send malformed messages: missing timestamp, missing hostname, invalid message format, and so on. Such errors can cause different kinds of problems:

- Log messages are often routed to different destinations based on the sender hostname. Missing or invalid hostnames mean that the message is not attributed to the right host, and often doesn’t arrive at its intended destination.

- An incorrect timestamp or timezone hampers investigations during an incident, resulting in potentially critical data failing to show up (or extraneous data appearing) in queries for a particular period.

- Invalid data can lead to memory leaks or resource overload in the processing software (and to unusable monitoring dashboards) when a sequence number or other rapidly varying field is mistakenly parsed as the hostname, program name, or other low-cardinality field.

Overall, these errors decrease the quality of the security data you send to your SIEM tools, which increases false positives, requires secondary data processing to clean, and increases query time, all of which ends up costing firms a lot more.

For instance, this is a log message from a SonicWall firewall appliance:

<133> id=firewall sn=C0EFE33057B0 time="2024-10-07 14:56:47 UTC" fw=172.18.88.37 pri=6 c=1024 m=537 msg="Connection Closed" f=2 n=316039228 src=192.0.0.159:61254:X1: dst=10.0.0.7:53:X3:SIMILDC01 proto=udp/dns sent=59 rcvd=134 vpnpolicy="ELG Main"

A well-formed syslog message should look like this:

<priority>timestamp hostname application: message body

As you can see, the SonicWall format is completely invalid after the initial <priority> field. Instead of the timestamp, hostname, and application name comes the free-form part of the message (in this case a whitespace-separated key=value list). Unless you extract the hostname and timestamp from the content of this malformed message, you won’t be able to reconstruct the course of events during a security incident.
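To illustrate what “extracting the hostname and timestamp from the content” means for the SonicWall sample, here is a minimal Python sketch. Axoflow’s connectors do this automatically; the helpers below are hypothetical and only show the idea. They assume that the body after `<PRI>` is a whitespace-separated key=value list with double-quoted values, that `time` carries a UTC timestamp, and (as an assumption) that the `fw` field, the firewall’s address, can stand in for the missing hostname.

```python
import shlex
from datetime import datetime, timezone

SONICWALL_SAMPLE = (
    '<133> id=firewall sn=C0EFE33057B0 time="2024-10-07 14:56:47 UTC" '
    'fw=172.18.88.37 pri=6 c=1024 m=537 msg="Connection Closed" f=2 '
    'n=316039228 src=192.0.0.159:61254:X1: dst=10.0.0.7:53:X3:SIMILDC01 '
    'proto=udp/dns sent=59 rcvd=134 vpnpolicy="ELG Main"'
)


def parse_key_values(message: str) -> dict:
    """Split the body after <PRI> into key=value pairs, honoring double quotes."""
    body = message[message.index(">") + 1:]
    fields = {}
    for token in shlex.split(body):
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


def recover_header(message: str) -> dict:
    """Rebuild the missing syslog header fields from the message content."""
    fields = parse_key_values(message)
    # In this format, the time field has an explicit " UTC" suffix.
    timestamp = datetime.strptime(fields["time"], "%Y-%m-%d %H:%M:%S UTC")
    return {
        "timestamp": timestamp.replace(tzinfo=timezone.utc),
        "host": fields["fw"],       # assumption: firewall address as host
        "device_id": fields["sn"],  # serial number: a stable device identifier
        "fields": fields,
    }
```

`shlex.split` keeps quoted values such as `msg="Connection Closed"` together, which a plain `str.split` would break apart.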

Axoflow provides data processing, curation, and classification intelligence that’s built into the data pipeline, so it processes and fixes the data before it’s sent to the SIEM.

Our solution

Our data engine and database solution automatically processes the incoming data: AxoRouter recognizes and classifies the incoming data, applies device-specific fixes for the errors, then enriches and optimizes the formatting for the specific destination (SIEM). This approach has several benefits:

- Cost reduction: All data is processed before it’s sent to the SIEM. That way, we can automatically reduce the amount of data sent to the SIEM (for example, by removing empty and redundant fields), cutting your data ingestion costs.

- Structured data: Axoflow recognizes the format of the incoming data payload (for example, JSON, CSV, LEEF, free text), and automatically parses the payload into a structured map. This allows us to have detailed, content-based metrics and alerts, and also makes it easy for you to add custom transformations if needed.

- SIEM-independent: The structured data representation allows us to support multiple SIEMs (and other destinations) and optimize the data for every destination.

- Performance: Compared to the commonly used regular expressions, it’s more robust and has better performance, allowing you to process more data with fewer resources.

- Maintained by Axoflow: We maintain the database; you don’t have to work with it. This includes updates for new product versions and adding new devices. We proactively monitor and check the new releases of main security devices for logging-related changes and update our database. (If something’s not working as expected, you can easily submit log samples and we’ll fix it ASAP.) Currently, we have over 80 application adapters in our database.
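The “Structured data” point above can be pictured with a small format detector that turns a raw payload into a map. This is a simplified Python sketch, not Axoflow’s classification engine: the detection order and the heuristics are assumptions, LEEF handling covers only LEEF 1.0 with tab-separated attributes, and CSV columns are keyed by position because CSV carries no field names.

```python
import csv
import io
import json

LEEF_HEADER = ("version", "vendor", "product", "product_version", "event_id")


def detect_and_parse(payload: str):
    """Guess the payload format and return (format_name, structured_map)."""
    text = payload.strip()
    if text.startswith("{"):
        try:
            return "json", json.loads(text)
        except json.JSONDecodeError:
            pass  # looked like JSON but isn't; fall through
    if text.startswith("LEEF:"):
        # LEEF 1.0: five pipe-separated header fields, then tab-separated key=value pairs.
        parts = text[len("LEEF:"):].split("|", 5)
        parsed = dict(zip(LEEF_HEADER, parts))
        attributes = {}
        if len(parts) > 5:
            for pair in parts[5].split("\t"):
                key, sep, value = pair.partition("=")
                if sep:
                    attributes[key] = value
        parsed["attributes"] = attributes
        return "leef", parsed
    if "," in text:
        row = next(csv.reader(io.StringIO(text)))
        # CSV has no field names, so column positions become the keys.
        return "csv", {str(i): value for i, value in enumerate(row)}
    return "text", {"message": text}
```

Once every payload is a map, the same downstream steps (reduction, labeling, routing) can work on any format.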

The automatic classification and curation also adds labels and metadata that can be used to make decisions and route your data. Messages with errors are also tagged with error-specific tags. For example, you can easily route all firewall and security logs to your Splunk deployment, and exclude logs (like debug logs) that have no security relevance and shouldn’t be sent to the SIEM.

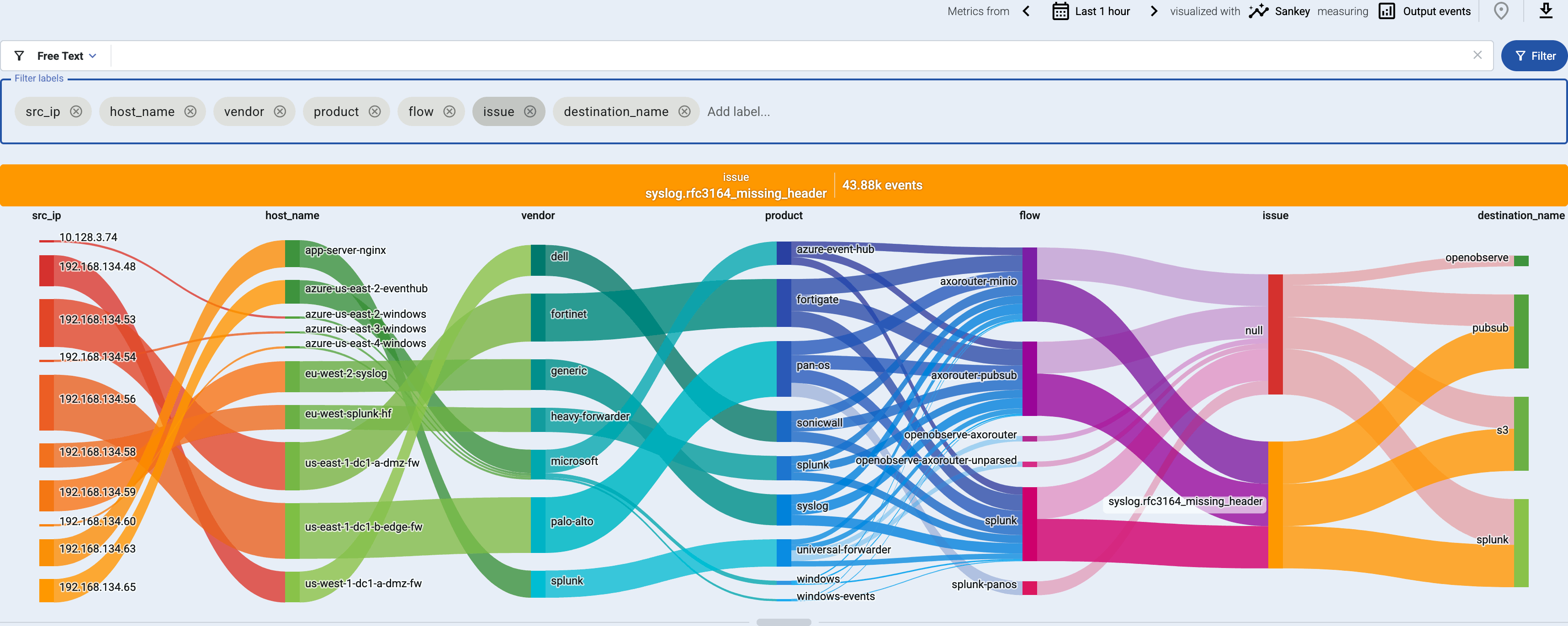

Message fixup

Axoflow Console allows you to quickly drill down to find log flows with issues, and to tap into the log flow and see samples of the specific messages that are processed, along with the related parsing information, like tags that describe the errors of invalid messages.

- message.utf8_sanitized: The message is not valid UTF-8.

- syslog.missing_timestamp: The message has no timestamp.

- syslog.invalid_hostname: The hostname field doesn’t seem to be valid, for example, it contains invalid characters.

- syslog.missing_pri: The priority (PRI) field is missing from the message.

- syslog.unexpected_framing: An octet count was found in front of the message, suggesting invalid framing.

- syslog.rfc3164_missing_header: The date and the host are missing from the message; practically, that’s the entire header of RFC3164-formatted messages.

- syslog.rfc5424_unquoted_sdata_value: The message contains an incorrectly quoted RFC5424 SDATA field.

- message.parse_error: Some other parsing error occurred.
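As a rough illustration of how such tags can be assigned, the following Python sketch checks a raw message for a few of the problems listed above. The tag names come from the list; the detection logic is a simplified assumption that only understands RFC3164-style timestamps and treats a leading “digits, space, <” as an octet count.

```python
import re

OCTET_COUNT = re.compile(rb"^\d+ (?=<)")  # length prefix in front of <PRI>
PRI = re.compile(rb"^<\d{1,3}>")
BSD_TIMESTAMP = re.compile(rb"^[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2} ")


def issue_tags(raw: bytes) -> list:
    """Return issue tags for a few of the problems listed above (RFC3164 only)."""
    tags = []
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError:
        tags.append("message.utf8_sanitized")
    framing = OCTET_COUNT.match(raw)
    if framing:
        tags.append("syslog.unexpected_framing")
        raw = raw[framing.end():]
    pri = PRI.match(raw)
    if pri:
        raw = raw[pri.end():]
    else:
        tags.append("syslog.missing_pri")
    if not BSD_TIMESTAMP.match(raw):
        tags.append("syslog.missing_timestamp")
    return tags
```

For example, the SonicWall sample shown earlier gets only the `syslog.missing_timestamp` tag, because its `<133>` is followed directly by key=value pairs.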

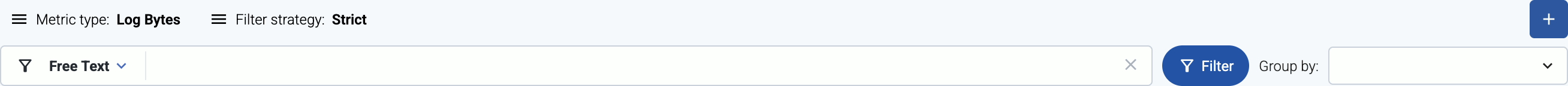

You can get an overview of such problems in your pipeline on the Analytics page by adding issue to the Filter labels field.

6.2 - Host attribution and inventory

Axoflow’s built-in inventory solution enriches your security data with critical metadata (like the origin host) so you can pinpoint the exact source of every data entry, enabling precise, label-based routing and more informed security decisions.

Enterprises and organizations collect security data (like syslog and other event data) from various data sources, including network devices, security devices, servers, and so on. When looking at a particular log entry during a security incident, it’s not always trivial to determine what generated it. Was it an appliance or an application? Which team is the owner of the application or device? If it was a network device like a switch or a Wi-Fi access point (of which even medium-sized organizations have dozens), can you tell which one it was, and where it is located?

Cloud-native environments, like Kubernetes, have addressed this issue using resource metadata. You can attach labels to every element of the infrastructure (containers, pods, nodes, and so on) to include region, role, owner, or other custom metadata. When collecting log data from the applications running in containers, the log collector agent can retrieve these labels and attach them to the log data as metadata. This helps immensely to associate routing decisions and security conclusions with the source systems.

In non-cloud environments, like traditionally operated physical or virtual machine clusters, the logs of applications, appliances, and other data sources lack such labels. To identify the log source, you’re stuck with the sender’s IP address, the sender’s hostname, and in some cases the path and filename of the log file, plus whatever information is available in the log message.

Why isn’t the IP address or hostname enough?

- Source IP addresses are poor identifiers, as they aren’t strongly tied to the host, especially when they’re dynamically allocated using DHCP or when a group of senders are behind a NAT and have the same address.

- Some organizations encode metadata into the hostname or DNS record. However, compared to the volume of log messages, DNS resolution is slow. Also, the DNS record is available at the source, but might not be available at the log aggregation device or the log server. As a result, this data is often lost.

- Many data sources and devices omit important information from their messages. For example, the log messages of Cisco routers by default omit the hostname. (They can be configured to send their hostname, but unfortunately, often they aren’t.) You can resolve their IP address to obtain the hostname of the device, but that leads back to the problem in the previous point.

- In addition, all the above issues are rooted in human configuration practices, which tend to be the main cause of anomalies in the system.

Correlating data to data source

Some SIEMs (like IBM QRadar) rely on the sender IP address to identify the data source. Others, like Splunk, delegate the task of providing metadata to the collector agent, but log sources often don’t supply metadata, and even if they do, the information is based on IP address or DNS.

Certain devices, like SonicWall firewalls, include a unique device ID in the content of their log messages. Having access to this ID makes attribution straightforward, but logging solutions rarely extract such information. AxoRouter does.

Axoflow builds an inventory to match the messages in your data flow to the available data sources, based on data including:

- IP address, host name, and DNS records of the source (if available),

- serial number, device ID, and other unique identifiers extracted from the messages, and

- metadata based on automatic classification of the log messages, like product and vendor name.
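A minimal Python sketch of this kind of matching, assuming a simple in-memory inventory: it tries the unique identifiers extracted from the message first, then the reported hostname, and falls back to the sender IP only last, because several hosts can share one IP behind a NAT. `InventoryHost` and `attribute` are hypothetical names for illustration, not Axoflow’s data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InventoryHost:
    """One entry of a hypothetical inventory; field names are illustrative."""
    name: str
    ip_addresses: set = field(default_factory=set)
    hostnames: set = field(default_factory=set)
    device_ids: set = field(default_factory=set)
    labels: dict = field(default_factory=dict)


def attribute(inventory, sender_ip: str, hostname: Optional[str] = None,
              device_id: Optional[str] = None) -> Optional[InventoryHost]:
    """Match a message to a host, trying the most reliable identifier first."""
    # 1. Unique IDs extracted from the payload (serial number, device ID).
    if device_id:
        for host in inventory:
            if device_id in host.device_ids:
                return host
    # 2. Hostname reported in the message, if any.
    if hostname:
        for host in inventory:
            if hostname in host.hostnames:
                return host
    # 3. Sender IP: the weakest signal (DHCP, NAT), so it is only a fallback.
    for host in inventory:
        if sender_ip in host.ip_addresses:
            return host
    return None
```

With two firewalls behind the same NAT address, only the device ID tells them apart; the IP fallback simply returns the first candidate.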

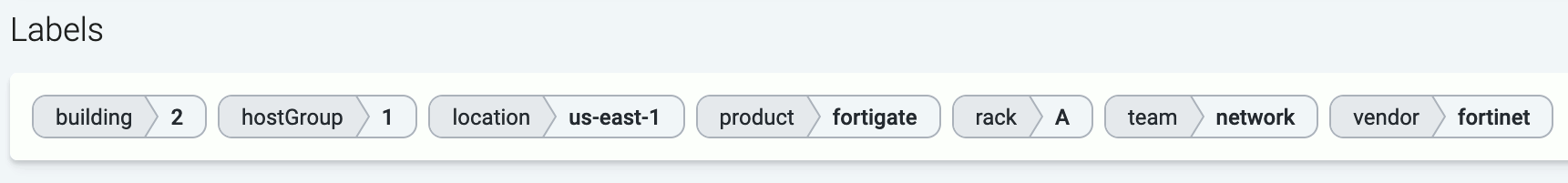

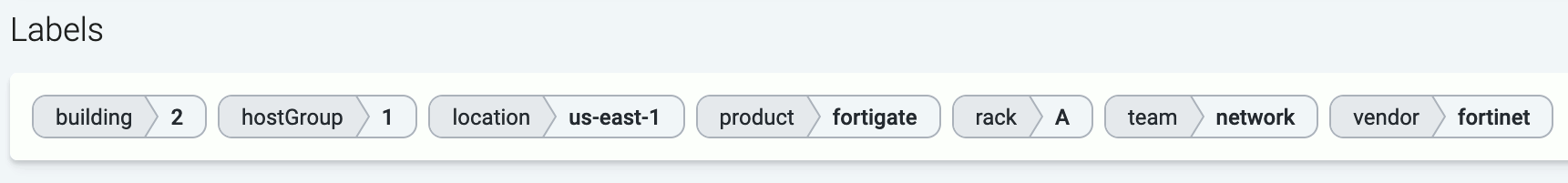

Axoflow (or more precisely, AxoRouter) classifies the processed log data to identify common vendors and products, and automatically applies labels to attach this information.

You can also integrate the telemetry pipeline with external asset management systems to further enrich your security data with custom labels and additional contextual information about your data sources.

6.3 - Policy based routing

Axoflow’s host attribution gives you unprecedented possibilities to route your data. You can tell exactly what kind of data you want to send where, based not only on technical parameters like sender IP or application name, but also on a much more fine-grained inventory that includes information about the devices, their roles, and their location. For example:

- The vendor and the product (for example, a SonicWall firewall)

- The physical location of the device (geographical location, rack number)

- Owner of the device (organization/department/team)

Axoflow automatically adds metadata labels based on information extracted from the data payload, but you can also add static custom labels to hosts manually, or dynamically using flows.

Paired with the information from the content of the messages, all this metadata allows you to formulate high-level, declarative policies and alerts using the metadata labels, for example:

- Route the logs of devices to the owner’s Splunk index.

- Route every firewall and security log to a specific index.

- Let the different teams manage and observe their own devices.

- Granular access control based on relevant log content (for example, identify a proxy log-stream based on the upstream address), instead of just device access.

- Ensure that specific logs won’t cross geographic borders, violating GDPR or other compliance requirements.
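Declarative, label-based policies like these can be modeled as “if the labels match, send to this destination”. The Python sketch below is an illustration under assumptions: `RoutingPolicy` and `destinations_for` are hypothetical, `meta.vendor` and `meta.product` mirror the label names used in the flow queries of this documentation, and `owner` stands for a custom label you might add to hosts.

```python
from dataclasses import dataclass


@dataclass
class RoutingPolicy:
    """Hypothetical policy: route messages whose labels match to a destination."""
    match: dict       # label name -> required value; empty matches everything
    destination: str  # may reference labels, for example "splunk/{owner}"


def destinations_for(labels: dict, policies: list) -> list:
    """Evaluate every policy and collect the destinations it selects."""
    selected = []
    for policy in policies:
        if all(labels.get(key) == value for key, value in policy.match.items()):
            try:
                selected.append(policy.destination.format_map(labels))
            except KeyError:
                continue  # the template needs a label this message lacks
    return selected
```

A FortiGate message whose host is labeled `owner=netops` matches both a “route every FortiGate log to the firewall index” policy and a “route to the owner’s index” policy, so it goes to both destinations.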

6.4 - Classify and reduce security data

Axoflow provides a robust classification system that actually verifies the data it receives, instead of relying on dedicated ports. This approach results in automatic data labeling, high-quality data, and volume reduction: out of the box, automated, without coding.

Classify the incoming data

Verifying which device or service a certain message belongs to is difficult: even a single data source (like an appliance) can have different kinds of messages, and you have to be able to recognize each one, uniquely. This requires:

- deep, device- and vendor-specific understanding of the data, and also

- understanding of the syslog data formats and protocols, because oftentimes the data sources send invalid messages that you have to recognize and fix as part of the classification process.

Also, classification needs to be both reliable and performant. A naive implementation using regexps is neither; nevertheless, that’s the solution you find at the core of today’s ingestion pipelines. We at Axoflow understand that creating and maintaining such a classification database is difficult. This is why we decided to make classification a core functionality of the Axoflow Platform, so you will never need to write another parsing regexp. At the moment, Axoflow supports over 90 data sources of well-known vendors.

Classification and the ability to process your security data in the pipeline also allows you to:

- Fix the incoming data (like the malformed firewall messages shown above) to add missing information, like hostname or timestamp,

- Identify the source host,

- Parse the log to access the information contained within,

- Redact sensitive information, like PII, before it gets sent to a SIEM or storage,

- Reduce the data volume and as a result, storage and SIEM costs,

- Enrich the data with contextual information, like adding labels based on the source or content of the data,

- Use all the above to route the data to the appropriate destinations, and finally

- Transform the data into an optimized format that the destination can reliably and effortlessly consume. This includes mapping your data to multiple different schemas if you use multiple analytic tools.

Reduce data volume

Classifying and parsing the incoming data allows you to remove the parts that aren’t needed, for example, to:

- drop entire messages if they are redundant or not relevant from a security perspective, or

- remove parts of individual messages, like fields that are non-empty even if they do not convey information (for example, that contain values such as “N/A” or “0”).

As this data reduction happens in the pipeline, before the data arrives in the SIEM or storage, it can save you significant costs and also improve the quality of the data your detection engineers get to work with.
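Both kinds of reduction can be sketched in a few lines of Python for messages that are already parsed into key-value maps. The field names, the noise values, and the “drop debug messages” rule are illustrative assumptions; whether a value like “0” is meaningless depends on the field, so the sketch takes a per-field list of values to drop.

```python
def reduce_messages(messages, is_irrelevant, noise_values):
    """Drop irrelevant messages, then drop fields that carry no information.

    noise_values maps a field name to the values that are meaningless for
    that field; fields not listed there are dropped only when empty.
    """
    for message in messages:
        if is_irrelevant(message):
            continue  # drop the entire message
        yield {
            key: value
            for key, value in message.items()
            if value not in noise_values.get(key, {""})
        }
```

For example, with `is_irrelevant=lambda m: m.get("level") == "debug"` and `noise_values={"user": {"", "N/A"}, "bytes": {"", "0"}}`, debug messages disappear and a `user=N/A` or `bytes=0` field is removed, while `0` in any other field is kept.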

Palo Alto log reduction example

Let’s see an example on how data reduction works in Axoflow. Here is a log message from a Palo Alto firewall:

<165>Mar 26 18:41:06 us-east-1-dc1-b-edge-fw 1,2025/03/26 18:41:06,007200001056,TRAFFIC,end,1,2025/03/26 18:41:06,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,netbios-ns,vsys1,Trust,Untrust,ethernet1/1,ethernet1/2,To-Panorama,2025/03/26 18:41:06,8720,1,137,137,11637,137,0x400000,udp,allow,276,276,0,3,2025/03/26 18:41:06,2,any,0,2345136,0x0,192.168.0.0-192.168.255.255,192.168.0.0-192.168.255.255,0,3,0

Here’s what you can drop from this particular message:

- Redundant timestamps: Palo Alto log messages contain up to five practically identical timestamps (see the Receive time, Generated time, and High resolution timestamp fields in the Traffic Log Fields documentation):

  - the syslog timestamp in the header (Mar 26 18:41:06),

  - the time Panorama (the management plane of Palo Alto firewalls) collected the message (2025/03/26 18:41:06), and

  - the time when the event was generated (2025/03/26 18:41:06).

  The sample log message has five timestamps. Leaving only one timestamp can reduce the message size by up to 15%.

- The priority field (<165>) is identical in most messages and has no information value. While it takes up only about 1% of the message size, on high-traffic firewalls even this small change adds up to significant data savings.

- Several fields contain default or empty values that provide no information, for example, default internal IP ranges like 192.168.0.0-192.168.255.255. Removing such fields yields over 10% size reduction.

Note that when removing fields, we can delete only the value of the field, because the message format (CSV) relies on having a fixed order of columns for each message type. This also means that we have to individually check what can be removed from each of the 17 Palo Alto log types.
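The “delete only the value” rule can be illustrated with a short Python sketch that empties redundant CSV values while keeping every column, so the positions stay valid. Which columns are safe to blank is an assumption here: the sketch empties every PAN-OS style `YYYY/MM/DD HH:MM:SS` timestamp in the CSV body (the syslog header timestamp is outside the body and remains) and any value listed by the caller. A production rule set would be defined per log type.

```python
import csv
import io
import re

# Matches PAN-OS style "YYYY/MM/DD HH:MM:SS" timestamps in the CSV payload.
PAN_TIMESTAMP = re.compile(r"^\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}$")

# CSV part of the sample TRAFFIC message above (after the syslog header).
PAN_TRAFFIC_BODY = (
    "1,2025/03/26 18:41:06,007200001056,TRAFFIC,end,1,2025/03/26 18:41:06,"
    "192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,"
    "netbios-ns,vsys1,Trust,Untrust,ethernet1/1,ethernet1/2,To-Panorama,"
    "2025/03/26 18:41:06,8720,1,137,137,11637,137,0x400000,udp,allow,276,276,"
    "0,3,2025/03/26 18:41:06,2,any,0,2345136,0x0,192.168.0.0-192.168.255.255,"
    "192.168.0.0-192.168.255.255,0,3,0"
)


def blank_columns(csv_body: str, noise_values: frozenset) -> str:
    """Empty redundant values but keep every column, so positions stay valid."""
    row = next(csv.reader(io.StringIO(csv_body)))
    reduced = [
        "" if PAN_TIMESTAMP.match(value) or value in noise_values else value
        for value in row
    ]
    out = io.StringIO()
    csv.writer(out, lineterminator="").writerow(reduced)
    return out.getvalue()
```

Calling `blank_columns(PAN_TRAFFIC_BODY, frozenset({"192.168.0.0-192.168.255.255"}))` produces a shorter line with the same number of commas, so a parser that relies on column positions still reads every remaining field correctly.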

Palo Alto firewalls send this specific message when a connection is closed. They also send a message when a new connection is started, but that doesn’t contain any information that’s not available in the ending message, so it’s completely redundant and can be dropped. As every connection has a beginning and an end, this alone almost halves the size of the data stored per connection. For example:

Connection start message:

<113>Apr 11 10:58:18 us-east-1-dc1-b-edge-fw 1,10:58:18.421048,007200001056,TRAFFIC,end,1210,10:58:18.421048,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,ssl,vsys1,trust-users,untrust,ethernet1/2.30,ethernet1/1,To-Panorama,2020/10/09 17:43:54,36459,1,39681,443,32326,443,0x400053,tcp,allow,43135,24629,18506,189,2020/10/09 16:53:27,3012,laptops,0,1353226782,0x8000000000000000,10.0.0.0-10.255.255.255,United States,0,90,99,tcp-fin,16,0,0,0,,testhost,from-policy,,,0,,0,,N/A,0,0,0,0,ace432fe-a9f2-5a1e-327a-91fdce0077da,0

Connection end message:

<113>Apr 11 10:58:18 us-east-1-dc1-b-edge-fw 1,10:58:18.421048,007200001056,TRAFFIC,end,1210,10:58:18.421048,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,ssl,vsys1,trust-users,untrust,ethernet1/2.30,ethernet1/1,To-Panorama,2020/10/09 17:43:54,36459,1,39681,443,32326,443,0x400053,tcp,allow,43135,24629,18506,189,2020/10/09 16:53:27,3012,laptops,0,1353226782,0x8000000000000000,10.0.0.0-10.255.255.255,United States,0,90,99,tcp-fin,16,0,0,0,,testhost,from-policy,,,0,,0,,N/A,0,0,0,0,ace432fe-a9f2-5a1e-327a-91fdce0077da,0
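Dropping the redundant session-start records is a simple filter once the CSV body is parsed. This Python sketch assumes the column layout of the samples above (index 3 is the log type, index 4 the subtype) and the PAN-OS traffic subtype value `start`; it takes the CSV part of each message, after the syslog header. The helper names are hypothetical.

```python
import csv
import io


def is_redundant_traffic_start(csv_body: str) -> bool:
    """True for TRAFFIC/start records, assuming the column layout shown above."""
    row = next(csv.reader(io.StringIO(csv_body)), [])
    # Index 3 is the log type (TRAFFIC), index 4 the subtype (start, end, ...).
    return len(row) > 4 and row[3] == "TRAFFIC" and row[4] == "start"


def drop_start_records(csv_bodies):
    """Keep every record except the redundant session-start ones."""
    return [body for body in csv_bodies if not is_redundant_traffic_start(body)]
```

Other log types and other traffic subtypes pass through unchanged; only the start record, whose information is repeated in the end record, is removed.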

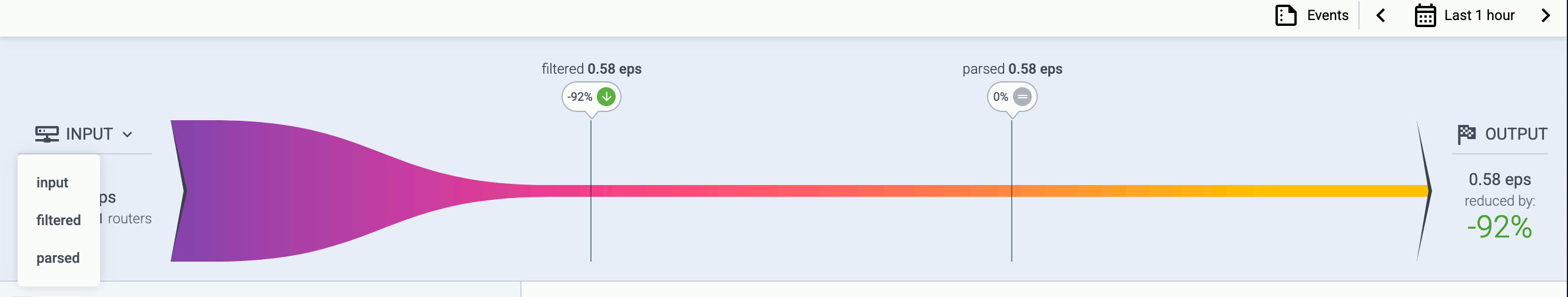

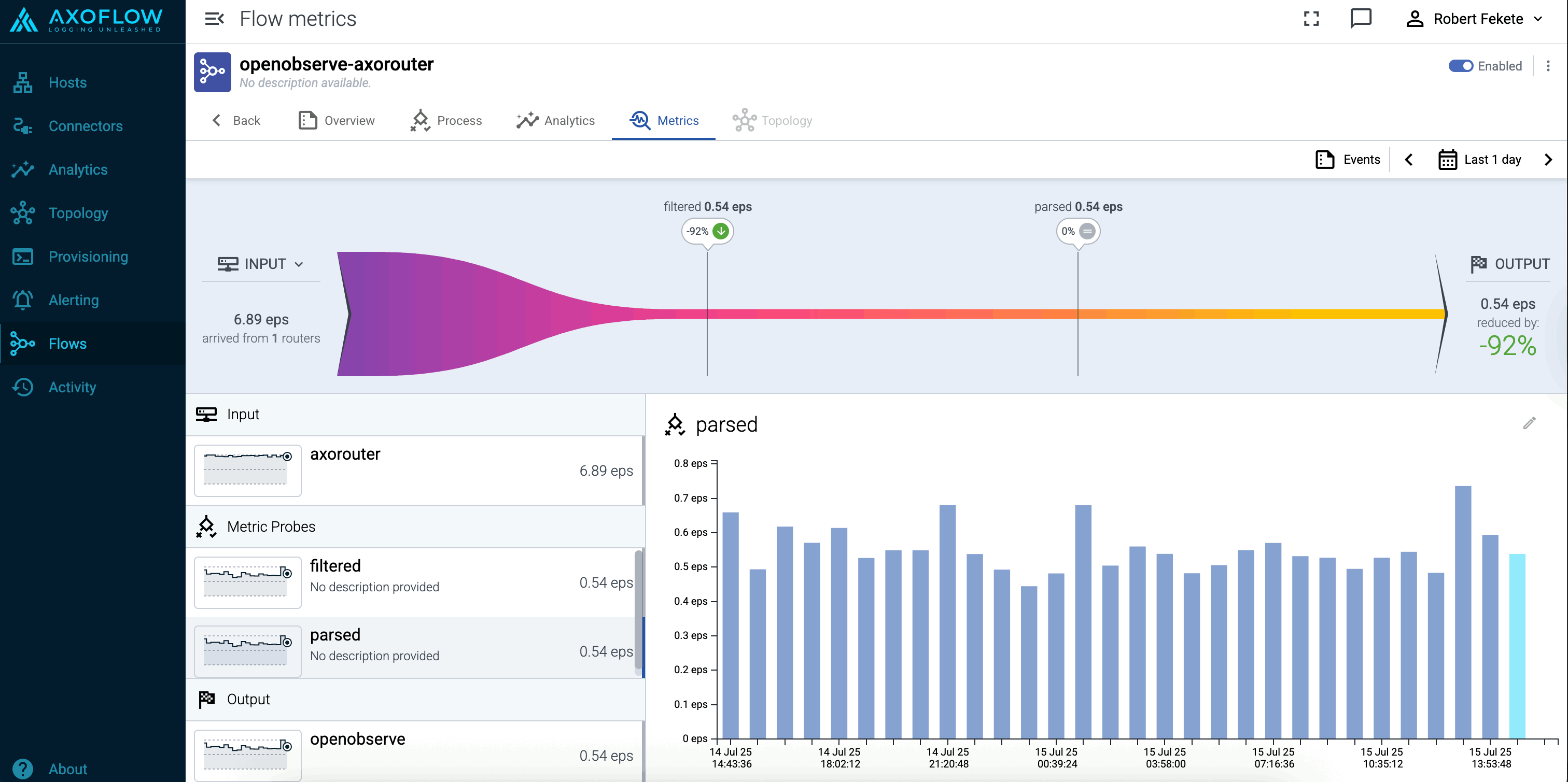

You can enable data reduction in your data flows using the Reduce processing step, and see the amount of data received and transferred in the flow on the Metrics page of the flow.

6.5 - Reliable transport

Between its components, Axoflow transports data using the reliable OpenTelemetry protocol (OTLP) for high performance, and to avoid losing messages. Under the hood, OpenTelemetry uses gRPC, and has superior performance compared to other log transport protocols, consumes less bandwidth (because it uses protocol buffers), and also provides features like:

- on-the-wire compression

- authentication

- application layer acknowledgement

- batched data sending

- multi-worker scalability on the client and the server.

7 - Manage and monitor the pipeline

7.1 - Provision pipeline elements

The following sections describe how to register a logging host into the Axoflow Console.

Hosts with supported collectors

If the host is running one of the following log collector agents, you can install Axolet on the host to receive detailed metrics about the host, the agent, and the data flow the agent processes.

- Install Axolet on the host. For details, see Axolet.

- Configure the log collector agent of the host to integrate with Axolet. For details, see the following pages:

7.1.1 - AxoRouter

7.1.1.1 - Install AxoRouter on Kubernetes

To install AxoRouter on a Kubernetes cluster, complete the following steps. For other platforms, see AxoRouter.

Note

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

Prerequisites

Kubernetes version 1.29 and newer

Minimal resource requirements

- CPU: at least 100m

- Memory: 256MB

- Storage: 8Gi

Network access

The hosts must be able to access the following domains related to the Axoflow Console:

Install AxoRouter

- Open the Axoflow Console.

- Select Provisioning.

- Select the Host type > AxoRouter > Kubernetes. The one-liner installation command is displayed.

- Open a terminal and set your Kubernetes context to the cluster where you want to install AxoRouter.

- Run the one-liner, and follow the on-screen instructions.

Current kubernetes context: minikube

Server Version: v1.28.3

Installing to new namespace: axorouter

Do you want to install AxoRouter now? [Y]

- Register the host.

  - Reload the Provisioning page. There should be a registration request for the new AxoRouter deployment. Select ✓.

  - Select Register to register the host. You can add a description and labels (in label:value format) to the host.

  - Select the Topology page. The new AxoRouter instance is displayed.

Create a flow

- If you haven’t already done so, create a new destination.

- Create a flow to connect the new AxoRouter to the destination.

  - Select Flows.

  - Select Create New Flow.

  - Enter a name for the flow, for example, my-test-flow.

  - In the Router Selector field, enter an expression that matches the router(s) you want to apply the flow to. To select a specific router, use a name selector, for example, name = my-axorouter-hostname.

  - Select the Destination where you want to send your data. If you don’t have any destination configured, see Destinations.

    By default, you can select only external destinations. If you want to send data to another AxoRouter, enable the Show all destinations option, and select the connector of the AxoRouter where you want to send the data.

  - (Optional) To process the data transferred in the flow, select Add New Processing Step. For details, see Processing steps. For example:

    - Add a Reduce step to automatically remove redundant and empty fields from your data.

    - To select which messages are processed by the flow, add a Select Messages step, and enter a filter into the Query field. For example, to select only the messages received from Fortinet FortiGate firewalls, use the meta.vendor = fortinet + meta.product = fortigate query.

    - Save the processing steps.

  - Select Create.

  - The new flow appears in the Flows list.

Send logs to AxoRouter

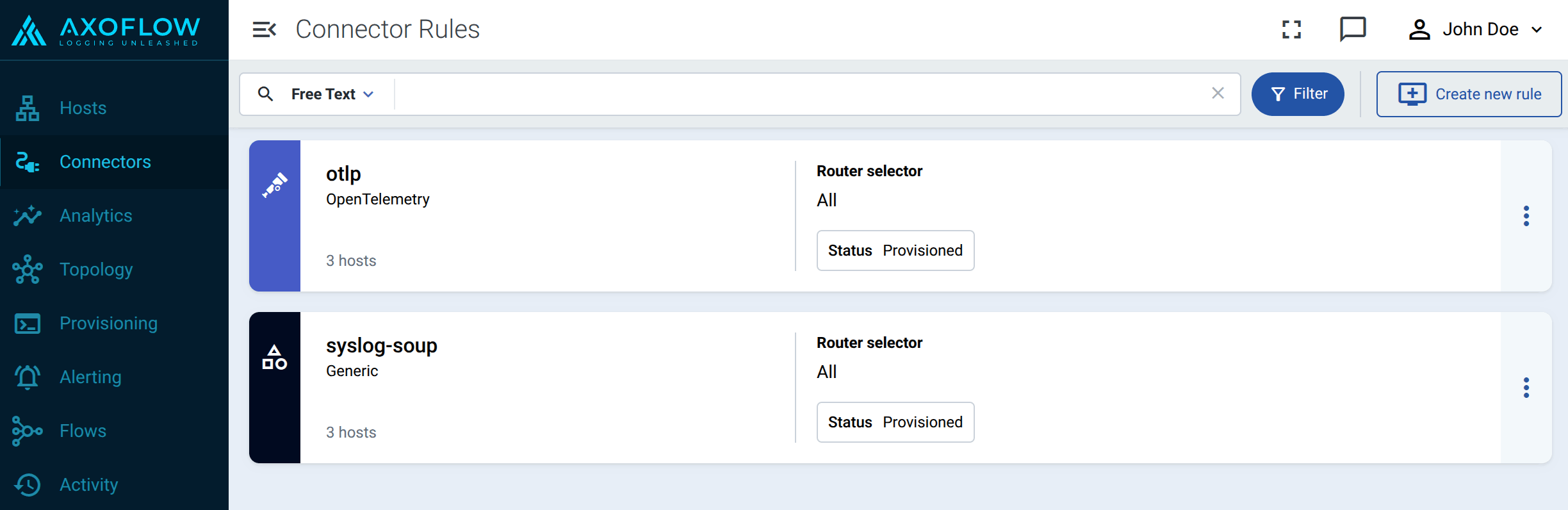

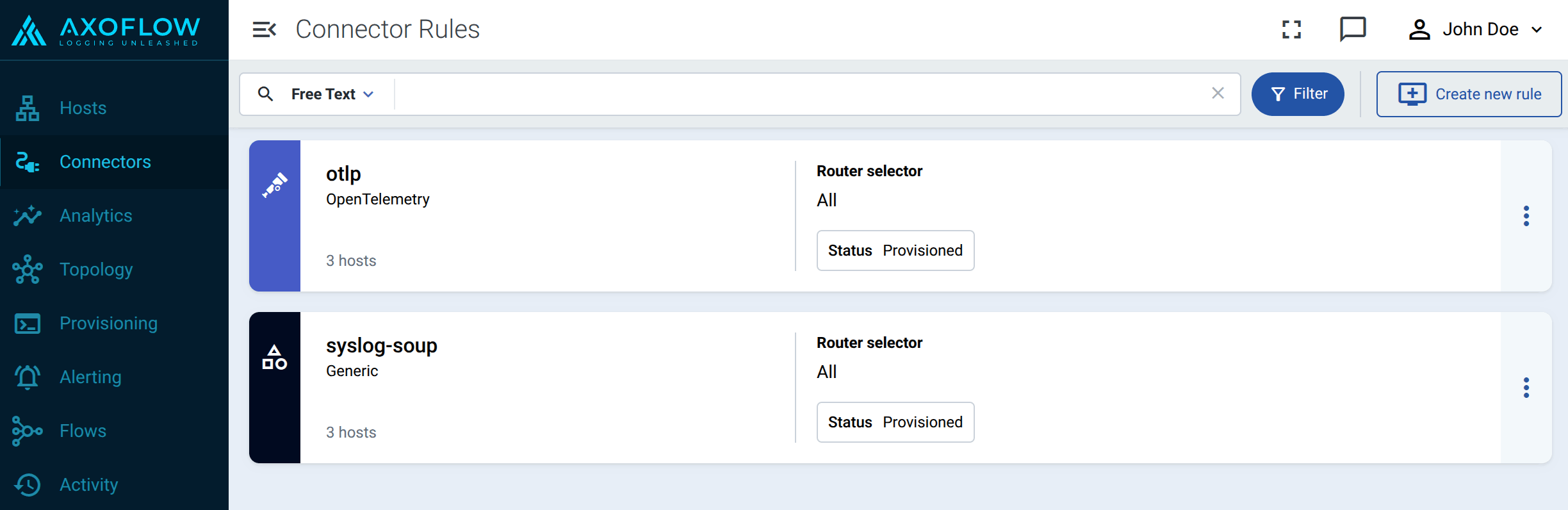

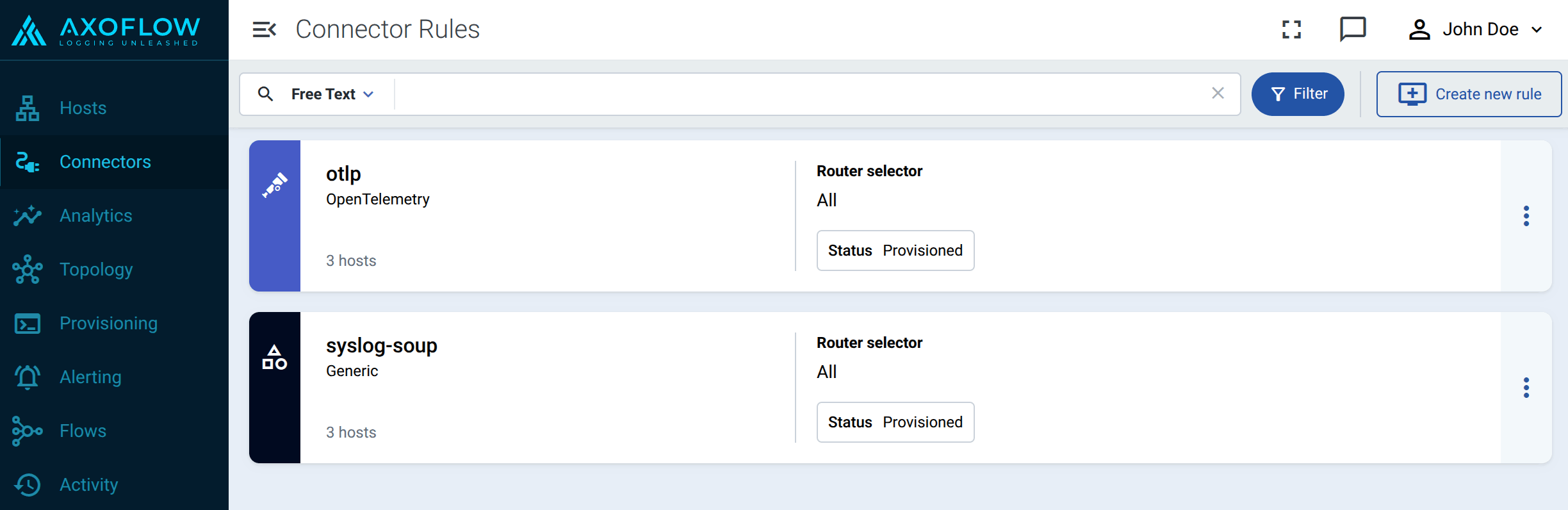

By default, AxoRouter accepts data on the following ports (unless you’ve modified the default connector rules):

- 514 TCP and UDP for RFC3164 (BSD-syslog) formatted traffic.

- 601 TCP for RFC5424 (IETF-syslog) formatted traffic.

- 6514 TCP for TLS-encrypted syslog traffic.

- 4317 TCP for OpenTelemetry log data.

To receive data on other ports or other protocols, configure other connector rules for the AxoRouter host.

Make sure to enable the ports you’re using on the firewall of your host.
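To check the RFC5424 port without a real source, you can send a test message from the AxoRouter host with Python’s standard library. This is a minimal sketch under assumptions: it uses RFC 6587 octet-counting framing (a length prefix before each message), which is the common convention for RFC5424 over TCP but may differ from your connector’s settings; the hostname, application name, and priority are placeholders, and 127.0.0.1 assumes you run it on the AxoRouter host itself.

```python
import socket
from datetime import datetime, timezone
from typing import Optional


def rfc5424_frame(text: str, hostname: str = "test-host", app: str = "axotest",
                  pri: int = 165, now: Optional[datetime] = None) -> bytes:
    """Build one RFC5424 message with an octet-counting length prefix."""
    now = now or datetime.now(timezone.utc)
    timestamp = now.isoformat(timespec="milliseconds").replace("+00:00", "Z")
    # PROCID, MSGID, and STRUCTURED-DATA are left as the nil value "-".
    message = f"<{pri}>1 {timestamp} {hostname} {app} - - - {text}".encode("utf-8")
    return str(len(message)).encode("ascii") + b" " + message


def send_frames(frames, host: str = "127.0.0.1", port: int = 601) -> None:
    """Open one TCP connection and write the frames back to back."""
    with socket.create_connection((host, port), timeout=5) as sock:
        for frame in frames:
            sock.sendall(frame)
```

For example, run `send_frames([rfc5424_frame("test message")])` while tapping the input log flow of the AxoRouter instance, and check that the message shows up.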

7.1.1.1.1 - Advanced installation options

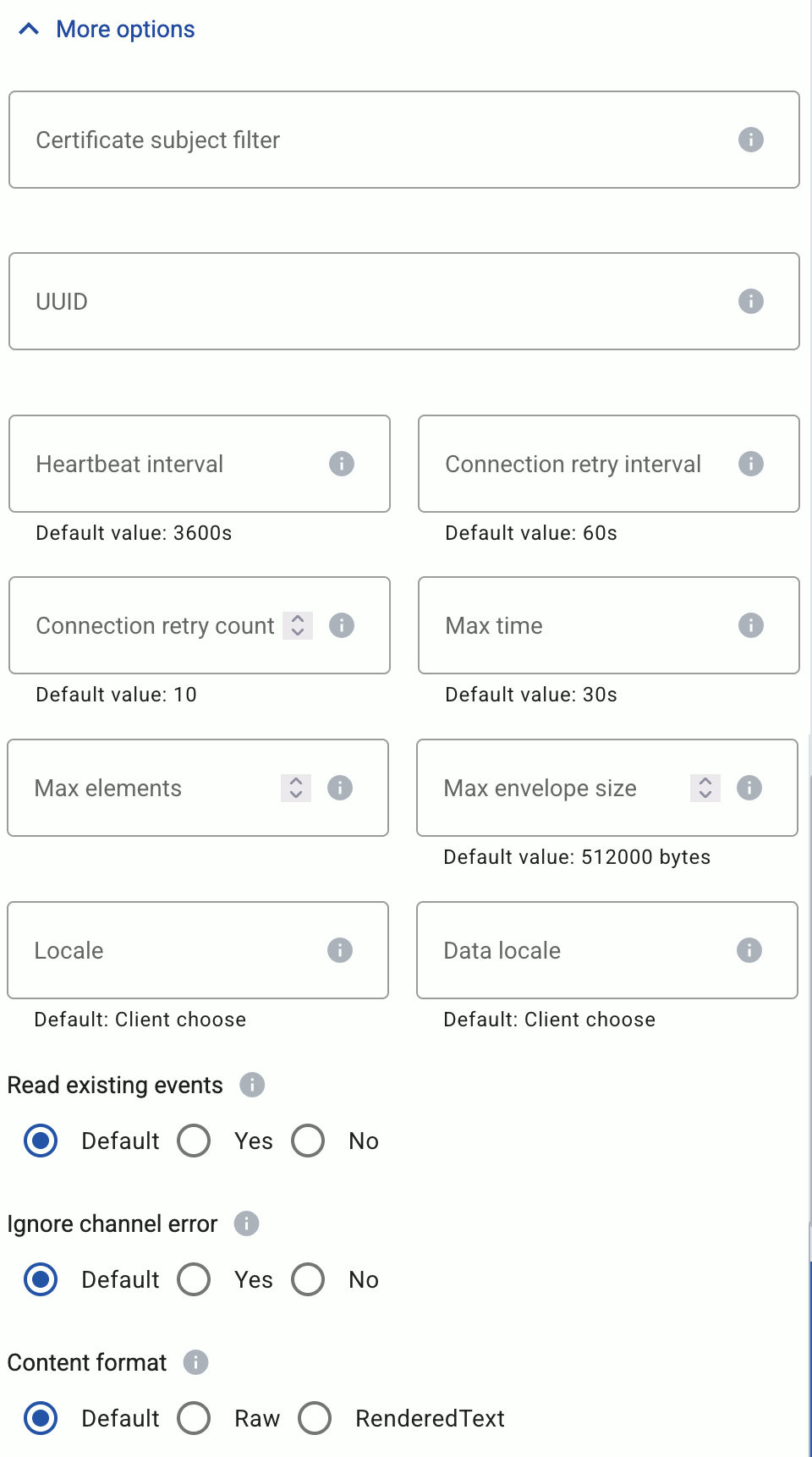

When installing AxoRouter, you can set a number of advanced options if needed for your environment. Setting the advanced options in the Axoflow Console automatically updates the one-liner command that you can copy and run.

Alternatively, before running the one-liner you can use one of the following methods:

Proxy settings

Use the http_proxy=, https_proxy=, no_proxy= parameters to configure HTTP proxy settings for the installer. To avoid using the proxy for the Axolet service, enable the AXOLET_AVOID_PROXY parameter as well. Lowercase variable names are preferred because they work universally.

Installation options

You can pass the following parameters to the installation script as environment variables, or as URL parameters.

Note

Running the provisioning command with sudo would mask environment variables of the calling shell. Either start the whole procedure from a root shell, or let the install script call sudo when it needs to. In other words: don’t add the sudo command to the provisioning command.
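If you generate the provisioning command from a script, the options in the tables below can be attached either as URL parameters or as environment variables. The Python sketch below is a hedged illustration: the real provisioning URL and its token come from Axoflow Console (`https://example.invalid/setup.sh?token=abc` is only a placeholder), and the mapping rule, environment variable = upper-case URL parameter, is inferred from the tables. API server host and Initial GUID have no environment variable listed, so the sketch accepts them only as URL parameters.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Per the tables, these options have no environment-variable form.
URL_ONLY = {"api_server_host", "initial_guid"}


def with_install_params(provisioning_url: str, **params) -> str:
    """Return the provisioning URL with extra installer URL parameters merged in."""
    parts = urlsplit(provisioning_url)
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update({name: str(value) for name, value in params.items()})
    return urlunsplit(parts._replace(query=urlencode(query)))


def as_environment(**params) -> dict:
    """The same options spelled as environment variables."""
    unsupported = URL_ONLY.intersection(params)
    if unsupported:
        raise ValueError(f"URL-only parameters: {sorted(unsupported)}")
    return {name.upper(): str(value) for name, value in params.items()}
```

If you use the environment-variable form, export the variables in the shell that runs the one-liner, and don’t prefix the command with sudo.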

AxoRouter image override

| | |
|---|---|
| Default value | empty string |
| Environment variable | IMAGE |
| URL parameter | image |

Description: Deploy the specified AxoRouter image.

Helm chart

| | |
|---|---|
| Default value | oci://us-docker.pkg.dev/axoflow-registry-prod/axoflow/charts/axorouter-syslog |
| Environment variable | HELM_CHART |
| URL parameter | helm_chart |

Description: The path or URL of the AxoRouter Helm chart.

Helm chart version

| | |
|---|---|
| Default value | Current Axoflow version |
| Environment variable | HELM_CHART_VERSION |
| URL parameter | helm_chart_version |

Description: Deploy the specified version of the Helm chart.

Helm extra arguments

| | |
|---|---|
| Default value | empty string |
| Environment variable | HELM_EXTRA_ARGS |
| URL parameter | helm_extra_args |

Description: Additional arguments passed to Helm during the installation.

Helm release name

| | |
|---|---|
| Default value | axorouter |
| Environment variable | HELM_RELEASE_NAME |
| URL parameter | helm_release_name |

Description: Name of the Helm release.

Image repository

| | |
|---|---|
| Default value | us-docker.pkg.dev/axoflow-registry-prod/axoflow/axorouter |
| Environment variable | IMAGE_REPO |
| URL parameter | image_repo |

Description: Deploy AxoRouter from a custom image repository.

Image version

| | |
|---|---|
| Default value | Current Axoflow version |
| Environment variable | IMAGE_VERSION |
| URL parameter | image_version |

Description: Deploy the specified AxoRouter version.

Namespace

| | |
|---|---|
| Default value | axorouter |
| Environment variable | NAMESPACE |
| URL parameter | namespace |

Description: The namespace where AxoRouter is installed.

Axolet parameters

API server host

| | |
|---|---|
| Default value | |
| Environment variable | |
| URL parameter | api_server_host |

Description: Override the host part of the API endpoint for the host.

Axolet executable path

| | |
|---|---|
| Default value | |
| Environment variable | AXOLET_EXECUTABLE |
| URL parameter | axolet_executable |

Description: Path to the Axolet executable.

Axolet image override

| | |
|---|---|
| Default value | empty string |
| Environment variable | AXOLET_IMAGE |
| URL parameter | axolet_image |

Description: Deploy the specified Axolet image.

Axolet image repository

| | |
|---|---|
| Default value | us-docker.pkg.dev/axoflow-registry-prod/axoflow/axolet |
| Environment variable | AXOLET_IMAGE_REPO |
| URL parameter | axolet_image_repo |

Description: Deploy Axolet from a custom image repository.

Axolet image version

| | |
|---|---|
| Default value | Current Axoflow version |
| Environment variable | AXOLET_IMAGE_VERSION |
| URL parameter | axolet_image_version |

Description: Deploy the specified Axolet version.

Initial GUID

| | |
|---|---|
| Default value | |
| Environment variable | |
| URL parameter | initial_guid |

Description: Set a static GUID.

7.1.1.2 - Install AxoRouter on Linux

AxoRouter is a key building block of Axoflow that collects, aggregates, transforms and routes all kinds of telemetry and security data automatically. AxoRouter for Linux includes a Podman container running AxoSyslog, Axolet, and other components.

To install AxoRouter on a Linux host, complete the following steps. For other platforms, see AxoRouter.

Note

Note that AxoRouter collects detailed, real-time metrics about the data flows, giving you observability over the health of the security data pipeline and its components. Your security data remains in your self-managed cloud or in your on-prem instance where your sources, destinations, and AxoRouters are running; only metrics are forwarded to Axoflow Console.

What the install script does

When you deploy AxoRouter, you run a command that installs the required software packages, configures them and sets up the connection with Axoflow.

The installer script installs the axolet packages, then executes the configure-axolet command with the right parameters. (If the packages are already installed, the installer will update them unless the none package format is selected when generating the provisioning command.)

The install script is designed to be run as root (sudo), but you can configure AxoRouter to run as a non-root user.

The installer script performs the following main steps:

- Executes prerequisite checks:

  - Tests the network connection with the console endpoints.

  - Checks if the operating system is supported.

  - Checks if podman is installed.

- Downloads and installs the axolet RPM or DEB package.

  - The package contains the axolet and configure-axolet commands, and the axolet.service systemd unit file.

- The configure-axolet command is executed with a configuration snippet on its standard input which contains a token required for registering into the management platform. The command:

  - Writes the initial /etc/axolet/config.json configuration file.

    Note: if the file already exists, it will only be overwritten if the Overwrite config option is enabled when generating the provisioning command.

  - Enables and starts the axolet service.

axolet performs the following main steps on its first execution:

- Generates and persists a unique identifier (GUID).

- Initiates a cryptographic handshake process with Axoflow Console.

- Axoflow Console issues a client certificate to AxoRouter, which is stored in the config.json file mentioned above.

- The service waits for approval in Axoflow Console. Once you approve the host registration request, axolet starts to manage the local services and send telemetry data to Axoflow Console. It keeps doing so as long as the agent is registered.

Prerequisites

Minimal resource requirements

- CPU: at least 100m

- Memory: 256MB

- Storage: 8Gi

Network access

The hosts must be able to access the following domains related to the Axoflow Console:

Install AxoRouter

- Select Provisioning > Select type and platform.

- Select the type (AxoRouter) and platform (Linux). The one-liner installation command is displayed.

  If needed, set the Advanced options (for example, proxy settings) to modify the installation parameters. Usually, you don’t have to use advanced options unless the Axoflow support team instructs you to do so.

- Open a terminal on the host where you want to install AxoRouter.

- Run the one-liner, then follow the on-screen instructions.

Note

Running the provisioning command with sudo would mask environment variables of the calling shell. Either start the whole procedure from a root shell, or let the install script call sudo when it needs to. In other words: don’t add the sudo command to the provisioning command.

Example output:

Do you want to install AxoRouter now? [Y]

y

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 4142 100 4142 0 0 19723 0 --:--:-- --:--:-- --:--:-- 19818

Verifying packages...

Preparing packages...

axorouter-0.40.0-1.aarch64

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 31.6M 100 31.6M 0 0 2092k 0 0:00:15 0:00:15 --:--:-- 2009k

Verifying packages...

Preparing packages...

axolet-0.40.0-1.aarch64

Created symlink /etc/systemd/system/multi-user.target.wants/axolet.service → /usr/lib/systemd/system/axolet.service.

Now continue with onboarding the host on the Axoflow web UI.

- Register the host.

  - Reload the Provisioning page. There should be a registration request for the new AxoRouter deployment. Select ✓.

  - Select Register to register the host. You can add a description and labels (in label:value format) to the host.

  - Select the Topology page. The new AxoRouter instance is displayed.

Create a flow

- If you haven’t already done so, create a new destination.

-

Create a flow to connect the new AxoRouter to the destination.

-

Select Flows.

-

Select Create New Flow.

-

Enter a name for the flow, for example, my-test-flow.

-

In the Router Selector field, enter an expression that matches the router(s) you want to apply the flow to. To select a specific router, use a name selector, for example, name = my-axorouter-hostname.

-

Select the Destination where you want to send your data. If you don’t have any destination configured, see Destinations.

By default, you can select only external destinations. If you want to send data to another AxoRouter, enable the Show all destinations option, and select the connector of the AxoRouter where you want to send the data.

-

(Optional) To process the data transferred in the flow, select Add New Processing Step. For details, see Processing steps. For example:

- Add a Reduce step to automatically remove redundant and empty fields from your data.

- To select which messages are processed by the flow, add a Select Messages step, and enter a filter into the Query field. For example, to select only the messages received from Fortinet FortiGate firewalls, use the meta.vendor = fortinet + meta.product = fortigate query.

- Save the processing steps.

-

Select Create.

-

The new flow appears in the Flows list.

Send logs to AxoRouter

Configure your hosts to send data to AxoRouter.

-

For appliances that are specifically supported by Axoflow, see Sources.

-

For other appliances and generic Linux devices, see Generic tips.

-

For a quick test without an actual source, you can also do the following (requires nc to be installed on the AxoRouter host):

-

Open the Axoflow Console, select Topology, then select the AxoRouter instance you’ve deployed.

-

Select ⋮ > Tap log flow > Input log flow. Select Start.

-

Open a terminal on your AxoRouter host.

-

Run the following command to send 120 test messages (2 per second) in a loop to AxoRouter:

for i in $(seq 1 120); do echo "<165> fortigate date=$(date -u +%Y-%m-%d) time=$(date -u +"%H:%M:%S%Z") devname=us-east-1-dc1-a-dmz-fw devid=FGT60D4614044725 logid=0100040704 type=event subtype=system level=notice vd=root logdesc=\"System performance statistics\" action=\"perf-stats\" cpu=2 mem=35 totalsession=61 disk=2 bandwidth=158/138 setuprate=2 disklograte=0 fazlograte=0 msg=\"Performance statistics: average CPU: 2, memory: 35, concurrent sessions: 61, setup-rate: 2\""; sleep 0.5; done | nc -v 127.0.0.1 514

Alternatively, you can send logs in an endless loop:

while true; do echo "<165> fortigate date=$(date -u +%Y-%m-%d) time=$(date -u +"%H:%M:%S%Z") devname=us-east-1-dc1-a-dmz-fw devid=FGT60D4614044725 logid=0100040704 type=event subtype=system level=notice vd=root logdesc=\"System performance statistics\" action=\"perf-stats\" cpu=2 mem=35 totalsession=61 disk=2 bandwidth=158/138 setuprate=2 disklograte=0 fazlograte=0 msg=\"Performance statistics: average CPU: 2, memory: 35, concurrent sessions: 61, setup-rate: 2\""; sleep 1; done | nc -v 127.0.0.1 514
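Each test message starts with the syslog priority value <165>. In syslog, PRI = facility * 8 + severity, so 165 corresponds to facility 20 (local4) and severity 5 (notice), which matches level=notice in the message body. If you want to vary the priority or reuse the message in other tests, the following minimal sketch builds the same message. The helper functions syslog_pri and fortigate_test_msg are hypothetical and are not part of Axoflow.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute a syslog PRI value from numeric facility
# and severity codes (PRI = facility * 8 + severity).
syslog_pri() {
  local facility=$1 severity=$2
  echo $(( facility * 8 + severity ))
}

# Hypothetical helper: print one FortiGate-style test message with the
# same fields as the loops above (facility local4 = 20, severity notice = 5).
fortigate_test_msg() {
  local pri
  pri=$(syslog_pri 20 5)
  printf '<%s> fortigate date=%s time=%s devname=us-east-1-dc1-a-dmz-fw devid=FGT60D4614044725 logid=0100040704 type=event subtype=system level=notice vd=root logdesc="System performance statistics" action="perf-stats" cpu=2 mem=35 totalsession=61 disk=2 bandwidth=158/138 setuprate=2 disklograte=0 fazlograte=0 msg="Performance statistics: average CPU: 2, memory: 35, concurrent sessions: 61, setup-rate: 2"\n' \
    "$pri" "$(date -u +%Y-%m-%d)" "$(date -u +%H:%M:%S%Z)"
}

# syslog_pri 20 5        # prints 165
# Send 120 messages, 2 per second (requires nc on the AxoRouter host):
# for i in $(seq 1 120); do fortigate_test_msg; sleep 0.5; done | nc -v 127.0.0.1 514
```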

Manage AxoRouter

This section describes how to start, stop, restart, and reload the AxoRouter service on Linux, and how to check its status.

Start AxoRouter

To start AxoRouter, execute the following command:

systemctl start axorouter

If the service starts successfully, no output is displayed.

The following message indicates that AxoRouter cannot start (see Check AxoRouter status):

Job for axorouter.service failed because the control process exited with error code. See `systemctl status axorouter.service` and `journalctl -xe` for details.

Stop AxoRouter

To stop AxoRouter:

-

Execute the following command.

systemctl stop axorouter

-

Check the status of the AxoRouter service (see Check AxoRouter status).

Restart AxoRouter

To restart AxoRouter, execute the following command.

systemctl restart axorouter

Reload the configuration without restarting AxoRouter

To reload the configuration file without restarting AxoRouter, execute the following command.

systemctl reload axorouter

Check the status of AxoRouter service

To check the status of the AxoRouter service:

-

Execute the following command.

systemctl --no-pager status axorouter

-

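Check the Active: field, which shows the status of the AxoRouter service. The most common statuses are:

- active (running): the AxoRouter service is up and running.

- inactive (dead): the AxoRouter service is stopped.

- failed: the AxoRouter service could not start, or it terminated with an error.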
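If you want to check the service from a script, for example in a health check, instead of reading the Active: field by eye, the systemctl is-active command prints only the state of the unit. The following is a minimal sketch that turns that state into a short hint. The function name describe_axorouter_state and its messages are hypothetical. Only the systemctl and journalctl invocations are real commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map the state printed by
# `systemctl is-active axorouter` to a short, readable hint.
describe_axorouter_state() {
  case "$1" in
    active)               echo "AxoRouter is running" ;;
    activating|reloading) echo "AxoRouter is starting or reloading" ;;
    deactivating)         echo "AxoRouter is stopping" ;;
    failed)               echo "AxoRouter failed, see: journalctl -u axorouter" ;;
    *)                    echo "AxoRouter is not running (state: $1)" ;;
  esac
}

# Usage on the AxoRouter host (requires systemd):
# describe_axorouter_state "$(systemctl is-active axorouter)"
```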

7.1.1.2.1 - Advanced installation options

When installing AxoRouter, you can set a number of advanced options if needed for your environment. Setting the advanced options in the Axoflow Console automatically updates the one-liner command that you can copy and run.

Alternatively, before running the one-liner you can use one of the following methods:

Proxy settings

Use the HTTP proxy, HTTPS proxy, and No proxy parameters to configure the proxy settings of the installer. To avoid using the proxy for the Axolet service, enable the Avoid proxy parameter as well. Lowercase proxy variable names (for example, http_proxy rather than HTTP_PROXY) are preferred because more tools recognize them.
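The following minimal sketch shows how to set the proxy parameters as environment variables instead of through the Advanced options of the Console. Set them in a root shell before you run the one-liner. The variable names come from the Axolet parameters table. The proxy address proxy.example.com:3128 and the no-proxy list are placeholders.

```shell
#!/usr/bin/env bash
# Run these in a root shell (not through sudo), so that the variables
# reach the installation script. All values below are placeholders.
export AXO_HTTP_PROXY="http://proxy.example.com:3128"
export AXO_HTTPS_PROXY="http://proxy.example.com:3128"
# Comma-separated list of hosts to reach without the proxy.
export AXO_NO_PROXY="localhost,127.0.0.1"
# Optional: don't use the proxy for the Axolet process itself.
export AXO_AVOID_PROXY=true
# Then paste and run the one-liner copied from the Axoflow Console.
```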

Installation options

You can pass the following parameters to the installation script as environment variables, or as URL parameters.

Note

Running the provisioning command with sudo would mask environment variables of the calling shell. Either start the whole procedure from a root shell, or let the install script call sudo when it needs to. In other words: don’t add the sudo command to the provisioning command.

| Option | Environment variable | URL parameter | Default value | Description |
|---|---|---|---|---|
| AxoRouter capabilities | AXO_AXOROUTER_CAPS | axorouter_caps | CAP_NET_BIND_SERVICE CAP_NET_BROADCAST CAP_NET_RAW CAP_SYSLOG CAP_BPF | Capabilities added to the AxoRouter container. |
| AxoRouter config mount path | AXO_AXOROUTER_CONFIG_MOUNT_INSIDE | axorouter_config_mount_inside | /etc/axorouter/user-config | Mount path for custom user configuration. |
| AxoRouter image override | AXO_IMAGE | image | us-docker.pkg.dev/axoflow-registry-prod/axoflow/axorouter | Deploy the specified AxoRouter image. |
| | AXO_PODMAN_ARGS | extra_args | empty string | Additional arguments passed to the AxoRouter container. |
| Image repository | AXO_IMAGE_REPO | image_repo | us-docker.pkg.dev/axoflow-registry-prod/axoflow/axorouter | Deploy AxoRouter from a custom image repository. |
| Image version | AXO_IMAGE_VERSION | image_version | Current Axoflow version | Deploy the specified AxoRouter version. |
| | AXO_INSTALL_PACKAGE | install_package | auto | File format of the installer package. Available values: auto, deb, rpm, tar, none. |
| Start router | AXO_START_ROUTER | start_router | true | Start AxoRouter after installation. Available values: true, false. |

Axolet parameters

| Option | Environment variable | URL parameter | Default value | Description |
|---|---|---|---|---|
| API server host | | api_server_host | | Override the host part of the API endpoint for the host. |
| Avoid proxy | AXO_AVOID_PROXY | avoid_proxy | false | Do not use the proxy for the Axolet process. Available values: true, false. |
| Axolet capabilities | AXO_CAPS | caps | CAP_SYS_PTRACE CAP_SYS_CHROOT | Capabilities added to the Axolet service. |
| Configuration directory | AXO_CONFIG_DIR | config_dir | /etc/axolet | The directory where the configuration files are stored. |
| HTTP proxy | AXO_HTTP_PROXY | http_proxy | empty string | Use a proxy to access the Axoflow Console from the host. |
| HTTPS proxy | AXO_HTTPS_PROXY | https_proxy | empty string | Use a proxy to access the Axoflow Console from the host. |
| No proxy | AXO_NO_PROXY | no_proxy | empty string | Comma-separated list of hosts that shouldn’t use the proxy to access the Axoflow Console from the host. |
| Overwrite config | AXO_CONFIG_OVERWRITE | config_overwrite | false | Overwrite the configuration when reinstalling the service. Available values: true, false. |
| Service group | AXO_GROUP | group | root | The group running the Axolet service. |
| Service user | AXO_USER | user | root | The user running the Axolet service. |
| Start service | AXO_START | start | true | Start the Axolet service after installation. Available values: true, false. |

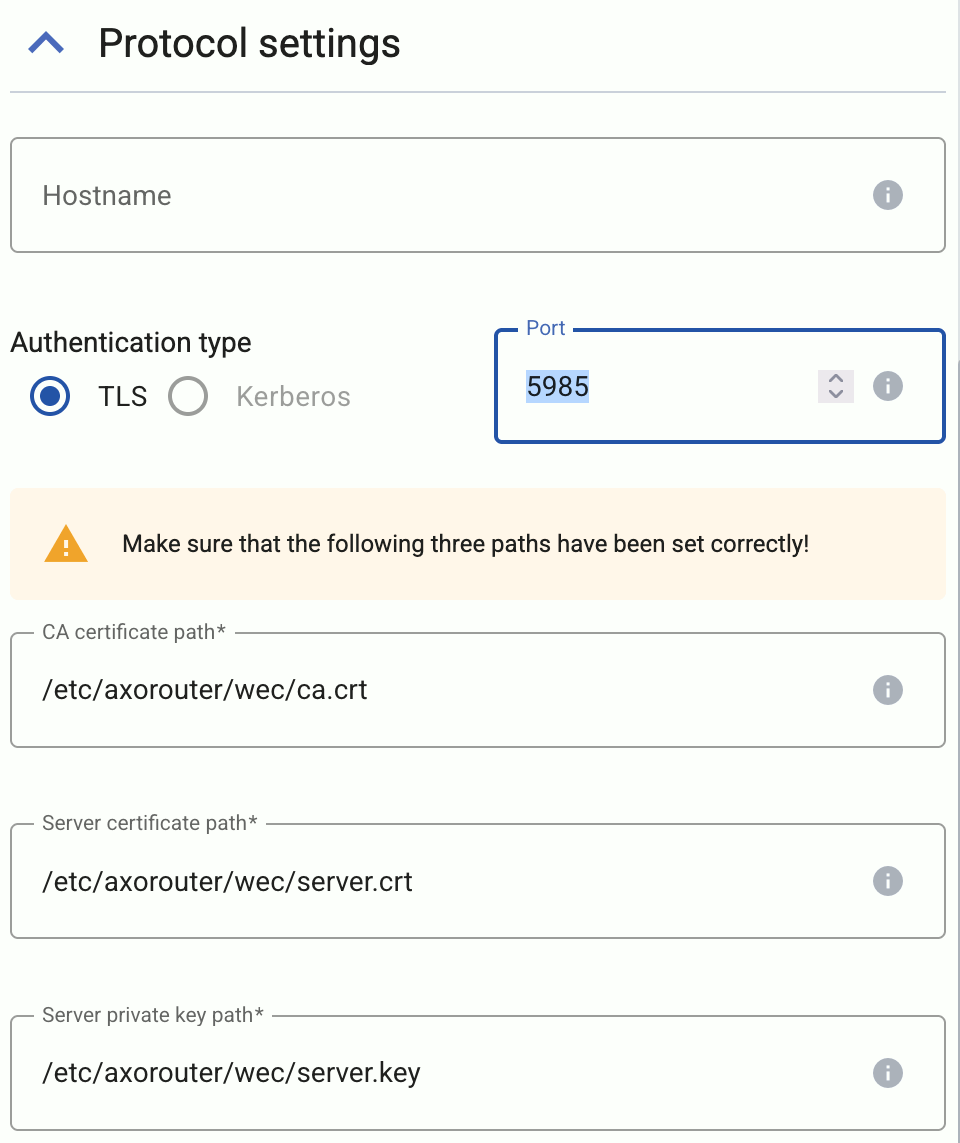

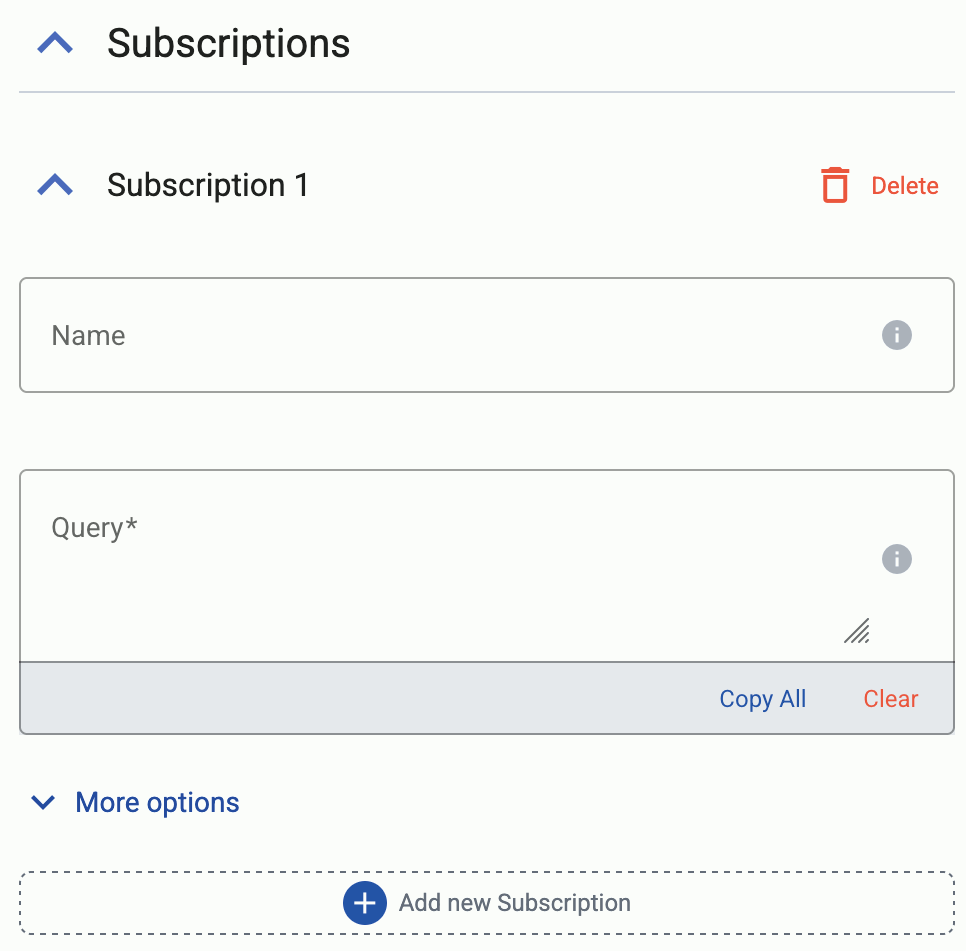

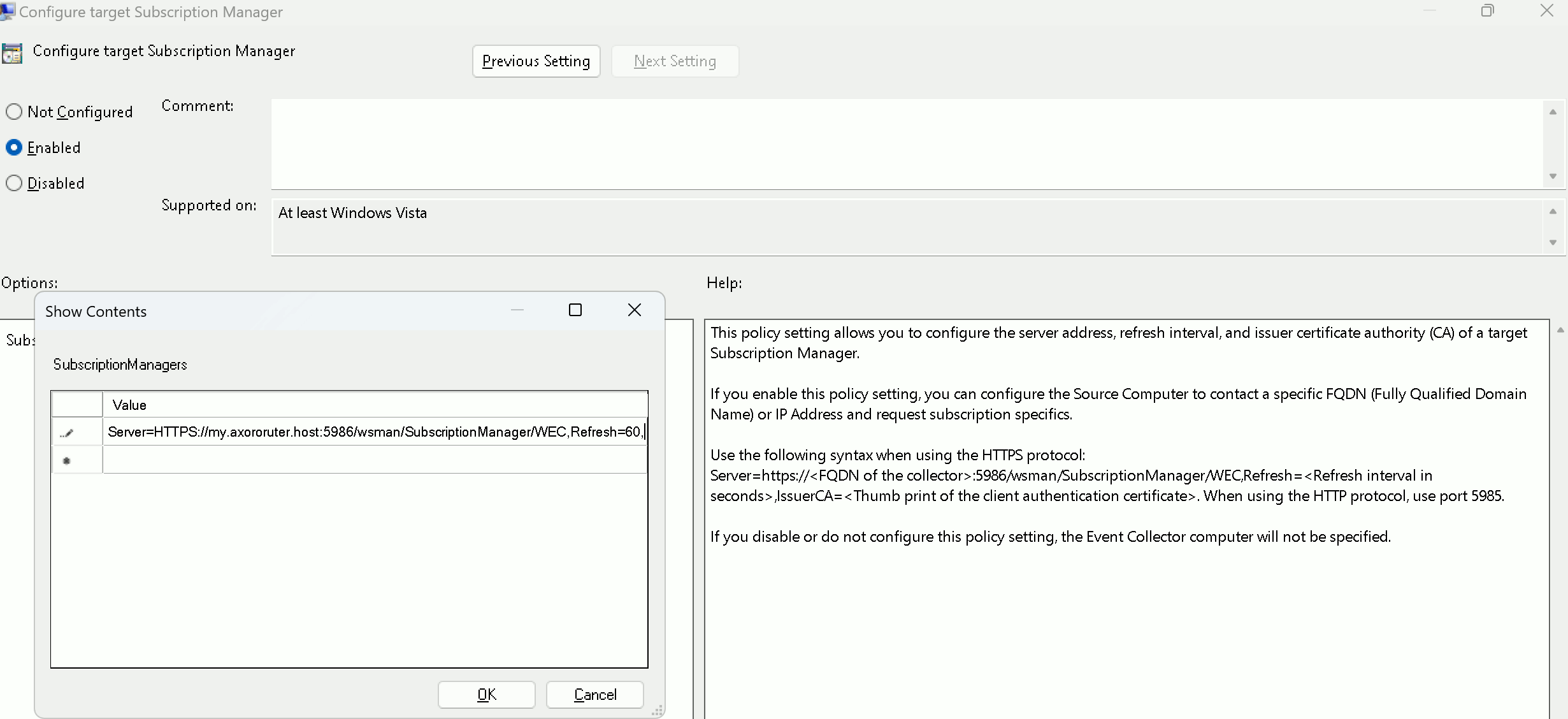

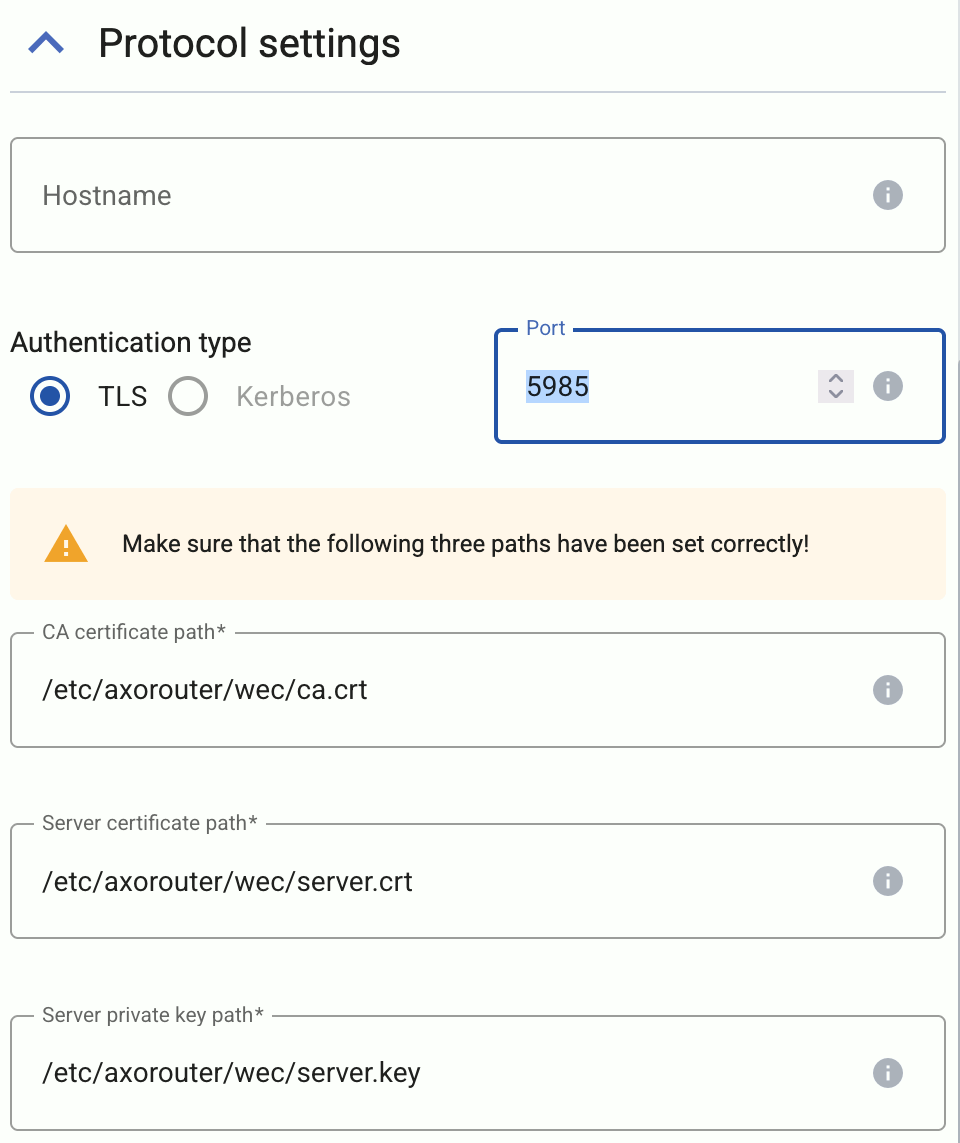

WEC parameters

These parameters are related to the Windows Event Collector server that can be run on AxoRouter. For details, see Windows Event Collector (WEC).

| Option | Environment variable | URL parameter | Default value | Description |
|---|---|---|---|---|
| WEC Image repository | AXO_WEC_IMAGE_REPO | wec_image_repo | us-docker.pkg.dev/axoflow-registry-prod/axoflow/axorouter-wec | Deploy the Windows Event Collector server from a custom image repository. |
| WEC Image version | AXO_WEC_IMAGE_VERSION | wec_image_version | Current Axoflow version | Deploy the specified Windows Event Collector server version. |
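Most options above are available both as an environment variable and as a URL parameter. The following minimal sketch illustrates that mapping for a few options. It converts the AXO_* variables that are set in the current shell into a percent-encoded query string. The helper names urlencode and axo_query_string are hypothetical. The sketch only prints the query string. How that string is attached to the installer URL in the one-liner is an assumption you would need to verify. The Axoflow Console updates the one-liner for you when you set the Advanced options.

```shell
#!/usr/bin/env bash
# Hypothetical helper: percent-encode a string, keeping the RFC 3986
# unreserved characters as-is. Assumes ASCII input.
urlencode() {
  local s=$1 out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case "$c" in
      [A-Za-z0-9._~-]) out+=$c ;;
      *) out+=$(printf '%%%02X' "'$c") ;;   # character code as %XX
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: print "param=value&..." for every mapped AXO_*
# variable that is set. Only a few options from the tables are mapped.
axo_query_string() {
  local pair var param val parts=()
  for pair in AXO_HTTP_PROXY:http_proxy AXO_HTTPS_PROXY:https_proxy \
              AXO_NO_PROXY:no_proxy AXO_AVOID_PROXY:avoid_proxy \
              AXO_IMAGE_VERSION:image_version AXO_START_ROUTER:start_router; do
    var=${pair%%:*}      # environment variable name
    param=${pair#*:}     # matching URL parameter name
    val=${!var:-}        # value of the variable, empty if unset
    if [[ -n $val ]]; then
      parts+=("$param=$(urlencode "$val")")
    fi
  done
  local IFS='&'          # join the parts with "&"
  printf '%s\n' "${parts[*]}"
}
```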

7.1.1.2.2 - Run AxoRouter as non-root

To run AxoRouter as a non-root user, set the AXO_USER and AXO_GROUP environment variables to the username and group name of that user on the host where you want to deploy AxoRouter. For details, see Advanced installation options.
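The following is a minimal sketch of that step. It checks that the account and group exist before it exports the two variables in a root shell. You then run the one-liner from the same shell. The helper name set_axo_service_account is hypothetical. syslogng is only an example account name, which matches the sudoers examples below.

```shell
#!/usr/bin/env bash
# Hypothetical helper: export AXO_USER and AXO_GROUP for a non-root
# installation, after checking that both exist on this host.
set_axo_service_account() {
  local user=$1 group=$2
  if ! id -u "$user" >/dev/null 2>&1; then
    echo "user $user does not exist" >&2
    return 1
  fi
  if ! getent group "$group" >/dev/null; then
    echo "group $group does not exist" >&2
    return 1
  fi
  export AXO_USER=$user AXO_GROUP=$group
}

# In a root shell (not through sudo), then run the one-liner:
# set_axo_service_account syslogng syslogng
```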

Operators must have access to the following commands:

-

/usr/bin/systemctl * axolet.service: Controls the axolet.service systemd unit. Usually * is start, stop, restart, enable, and status. Used by the operators for troubleshooting.

-

/usr/local/bin/configure-axolet: Creates the initial axolet configuration and enables/starts the axolet service. Executed by the bootstrap script.

-

Command to install and upgrade the axolet package. Executed by the bootstrap script if the packages aren’t already installed.

- On RPM-based Linux distributions:

/usr/bin/rpm -Uv axo*.rpm

- On DEB-based Linux distributions:

/usr/bin/dpkg -i axo*.deb

-

/usr/bin/systemctl * axorouter.service: Controls the axorouter.service systemd unit. Usually * is start, stop, restart, enable, and status. Used by the operators for troubleshooting.

-

/usr/local/bin/configure-axorouter: Creates the initial axorouter configuration and enables/starts the axorouter service. Executed by the bootstrap script.

-

Command to install and upgrade the axorouter and the axolet packages. Executed by the bootstrap script if the packages aren’t already installed.

- On RPM-based Linux distributions:

/usr/bin/rpm -Uv axo*.rpm

- On DEB-based Linux distributions:

/usr/bin/dpkg -i axo*.deb

You can permit the syslogng user to run these commands by running the following commands. Use the RPM or the DEB variants, depending on your Linux distribution:

sudo tee /etc/sudoers.d/configure-axoflow <<A

syslogng ALL=(ALL) NOPASSWD: /usr/local/bin/configure-axolet

syslogng ALL=(ALL) NOPASSWD: /bin/systemctl * axolet.service

# for rpm installation:

syslogng ALL=(ALL) NOPASSWD: /usr/bin/rpm -Uv axo*.rpm

A

sudo tee /etc/sudoers.d/configure-axorouter <<A

syslogng ALL=(ALL) NOPASSWD: /usr/local/bin/configure-axorouter

syslogng ALL=(ALL) NOPASSWD: /bin/systemctl * axorouter.service

# for rpm installation:

syslogng ALL=(ALL) NOPASSWD: /usr/bin/rpm -Uv axo*.rpm

A

sudo tee /etc/sudoers.d/configure-axoflow <<A

syslogng ALL=(ALL) NOPASSWD: /usr/local/bin/configure-axolet

syslogng ALL=(ALL) NOPASSWD: /bin/systemctl * axolet.service

# for deb installation:

syslogng ALL=(ALL) NOPASSWD: /usr/bin/dpkg -i axo*.deb

A

sudo tee /etc/sudoers.d/configure-axorouter <<A

syslogng ALL=(ALL) NOPASSWD: /usr/local/bin/configure-axorouter

syslogng ALL=(ALL) NOPASSWD: /bin/systemctl * axorouter.service

# for deb installation:

syslogng ALL=(ALL) NOPASSWD: /usr/bin/dpkg -i axo*.deb

A
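One way to keep the RPM and DEB variants consistent is to generate the rules with a small helper. You can then check them with visudo before sudo reads them. The following minimal sketch follows the pattern of the rules shown above. The helper name axo_sudoers_rules is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the sudoers rules for one component
# (axolet or axorouter) and one package format (rpm or deb).
axo_sudoers_rules() {
  local user=$1 component=$2 format=$3 install_cmd
  case "$format" in
    rpm) install_cmd='/usr/bin/rpm -Uv axo*.rpm' ;;
    deb) install_cmd='/usr/bin/dpkg -i axo*.deb' ;;
    *) echo "unknown package format: $format" >&2; return 1 ;;
  esac
  printf '%s ALL=(ALL) NOPASSWD: /usr/local/bin/configure-%s\n' "$user" "$component"
  printf '%s ALL=(ALL) NOPASSWD: /bin/systemctl * %s.service\n' "$user" "$component"
  printf '# for %s installation:\n' "$format"
  printf '%s ALL=(ALL) NOPASSWD: %s\n' "$user" "$install_cmd"
}

# As root: write the rules to a temporary file, check the syntax, and
# install the file only if visudo accepts it.
# tmp=$(mktemp)
# axo_sudoers_rules syslogng axorouter deb > "$tmp"
# visudo -cf "$tmp" && install -m 0440 "$tmp" /etc/sudoers.d/configure-axorouter
```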

7.1.2 - AxoSyslog

Onboarding allows you to collect metrics about the host, display the host on the Topology page, and tap into the log flow.

Onboarding requires you to modify the host and the configuration of the logging agent running on the host.

- Level 1: Install Axolet on the host. Axolet collects metrics from the host and sends them to the Axoflow Console, so you can check host-level metrics on the Metrics & Health page of the host and see the host on the Topology page.

- Level 2: Instrument the configuration of the logging agent to provide detailed metrics about the traffic flow. This allows you to display data about the host on the Analytics page.

- Level 3: Instrument the configuration of the logging agent to allow you to access the logs of the logging agent and to tap into the log flow from the Axoflow Console. The exact steps of this integration depend on the configuration of your logging agent. Contact us so that our professional services team can help you with the integration.

To onboard an existing AxoSyslog instance into Axoflow, complete the following steps.

-

Install Axolet on the host, then approve its registration on the Provisioning page of the Axoflow Console.

-

The AxoSyslog host is now visible on the Topology page of the Axoflow Console as a source.

-

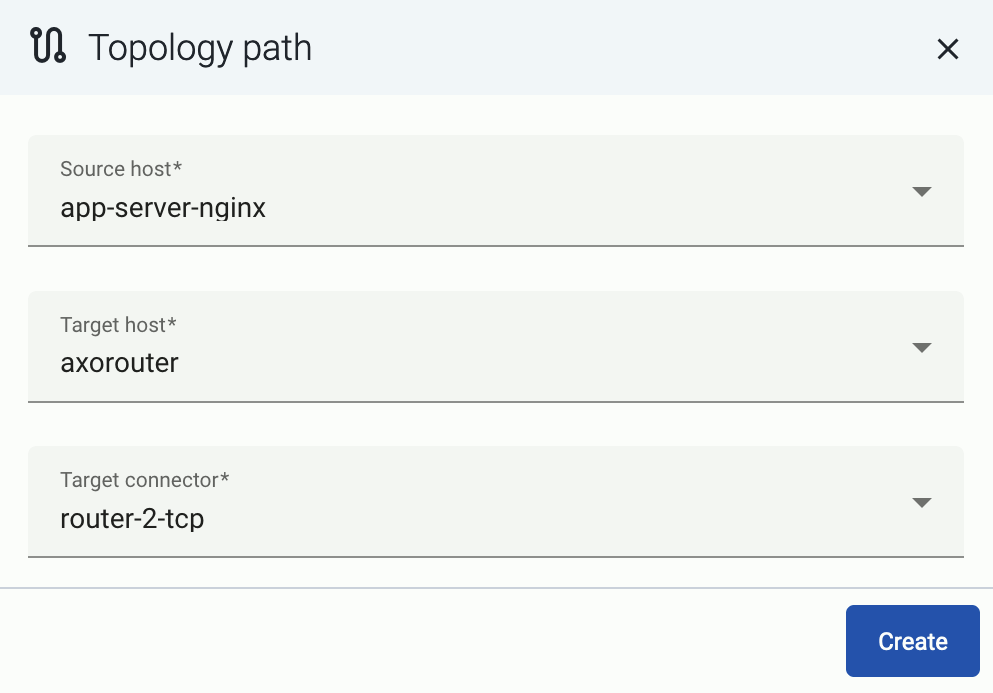

If the AxoRouter instance or the destination that this host sends data to is already added to the Axoflow Console, add a path to connect the host to the AxoRouter or the destination.

-

Select Topology > + > Path.

-

Select your data source in the Source host field.

-

In the Target host field, select the router or aggregator that this source sends its data to, for example, axorouter.

-

Select the Target connector. The connector determines how the destination receives the data (for example, using which protocol or port).

-

Select Create. The new path appears on the Topology page.

-

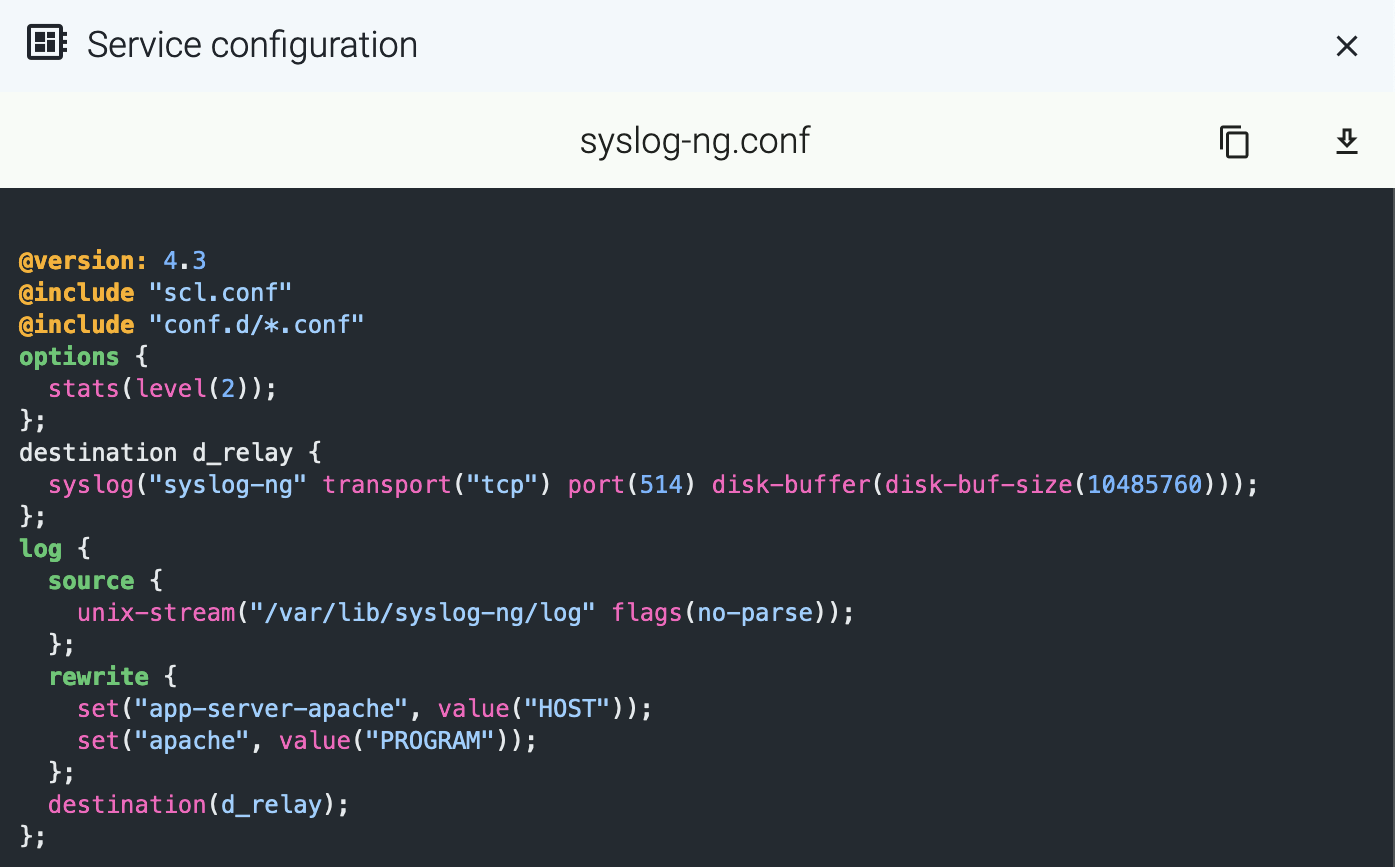

Access the AxoSyslog host and edit the configuration of AxoSyslog. Set the statistics-related global options like this (if the options block already exists, add these lines to the bottom of the block):

options {

stats(

level(2)

freq(0) # Disable periodic statistics (STATS) log messages

);

};

-

(Optional) To get detailed metrics and analytics about the traffic that flows through the host, instrument your AxoSyslog configuration as follows:

Note

You can use Axolet with an un-instrumented AxoSyslog configuration file, but that limits the available metrics to host statistics (for example, disk, memory, and queue information), and you won’t have access to data about the actual traffic flowing through the host. To collect traffic-related metrics, instrument the configuration with metrics-probe() stanzas. The example below shows how to instrument the configuration to highlight common macros such as $HOST and $PROTOCOL. If you want to customize the collected metrics or need help with the instrumentation, contact us.

-

Download the following configuration snippet to the AxoSyslog host, for example, as /etc/syslog-ng/conf.d/axoflow-instrumentation.conf.

-

Include it at the top of your configuration file:

@version: current

@include "axoflow-instrumentation.conf"

-

Edit every destination statement to include a parser { metrics-output(destination(<custom-ID-for-the-destination>)); }; line, for example:

destination d_file {

channel {

parser { metrics-output(destination(my-file-destination)); };

destination { file("/dev/null" persist-name("d_s3")); };

};

};

-

Reload the configuration of AxoSyslog.

systemctl reload syslog-ng
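A rough text-based check can confirm that you didn't miss a destination when you added the metrics-output() parser. The following minimal sketch lists the top-level destination NAME { ... } definitions that don't contain metrics-output( in their body. The function name find_uninstrumented_destinations is hypothetical. The check only strips # comments and counts braces, so it assumes that braces and # characters don't appear inside strings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of top-level destination blocks
# in the given AxoSyslog configuration file that lack metrics-output().
find_uninstrumented_destinations() {
  awk '
    {
      line = $0
      p = index(line, "#")                   # naive comment removal
      if (p > 0) line = substr(line, 1, p - 1)
      $0 = line                              # re-split the cleaned line
      if (depth == 0 && $1 == "destination") {
        name = $2
        sub(/[{].*/, "", name)               # handles "d_file{"
        current = name
        found = 0
      }
      if (current != "" && line ~ /metrics-output[(]/) found = 1
      tmp = line
      depth += gsub(/[{]/, "", tmp)          # opening braces on this line
      depth -= gsub(/[}]/, "", tmp)          # closing braces on this line
      if (current != "" && depth == 0 && line ~ /[}]/) {
        if (!found) print current            # block closed, no parser found
        current = ""
      }
    }
  ' "$1"
}

# Example: check the file, validate the syntax, then reload.
# find_uninstrumented_destinations /etc/syslog-ng/syslog-ng.conf
# syslog-ng --syntax-only && systemctl reload syslog-ng
```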

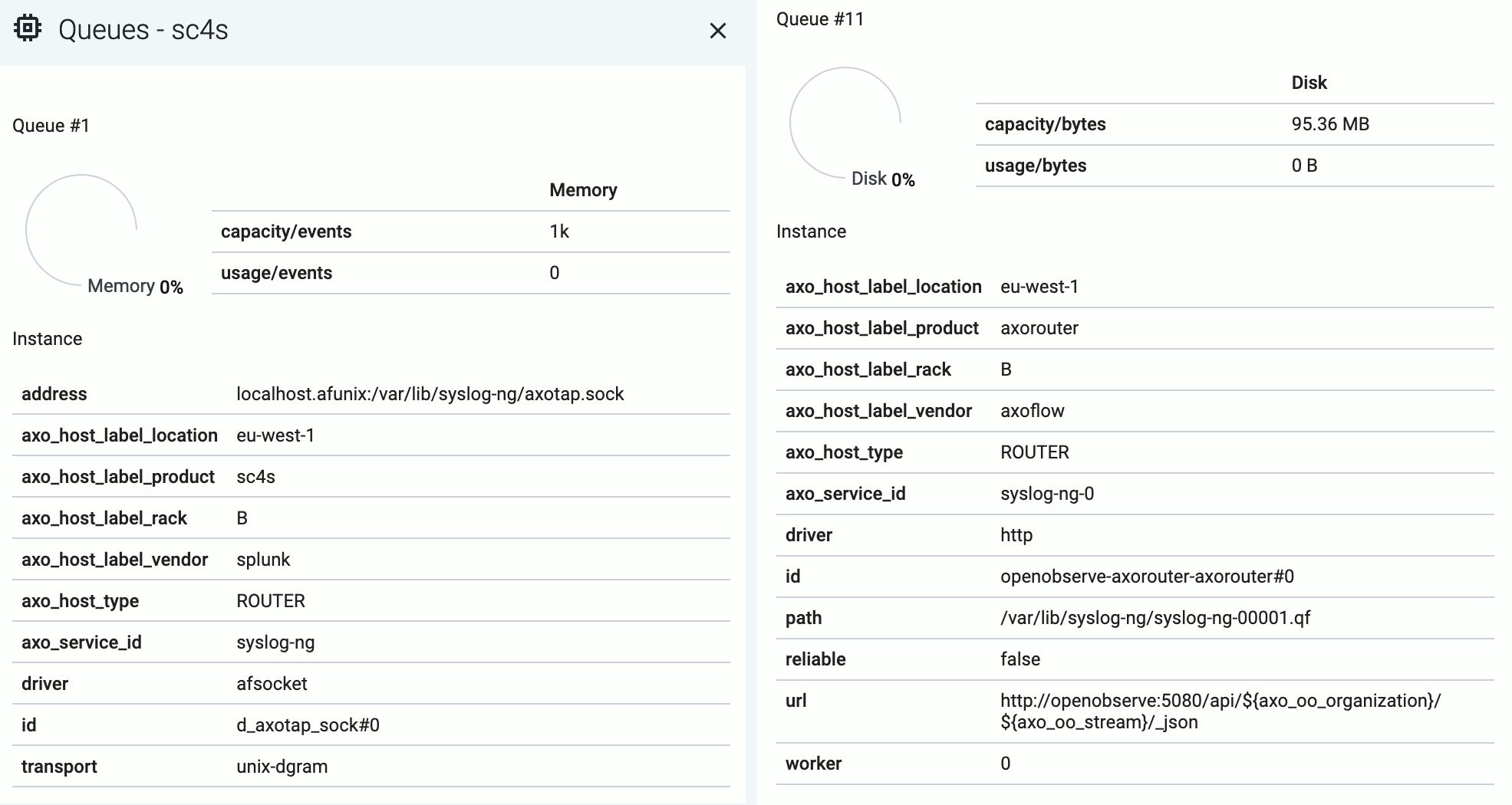

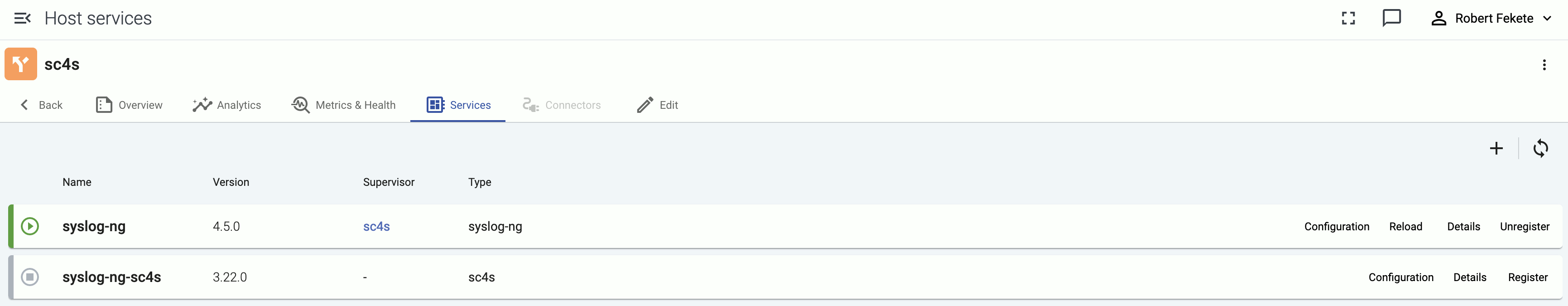

7.1.3 - Splunk Connect for Syslog (SC4S)

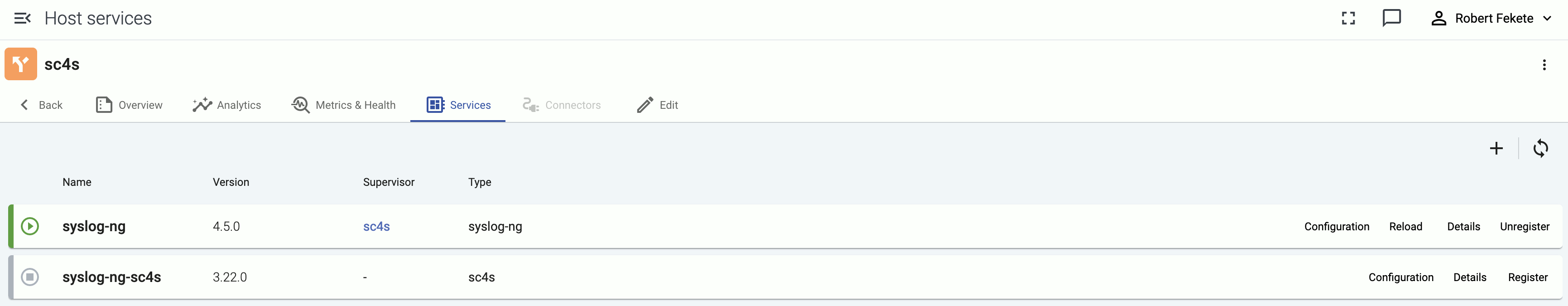

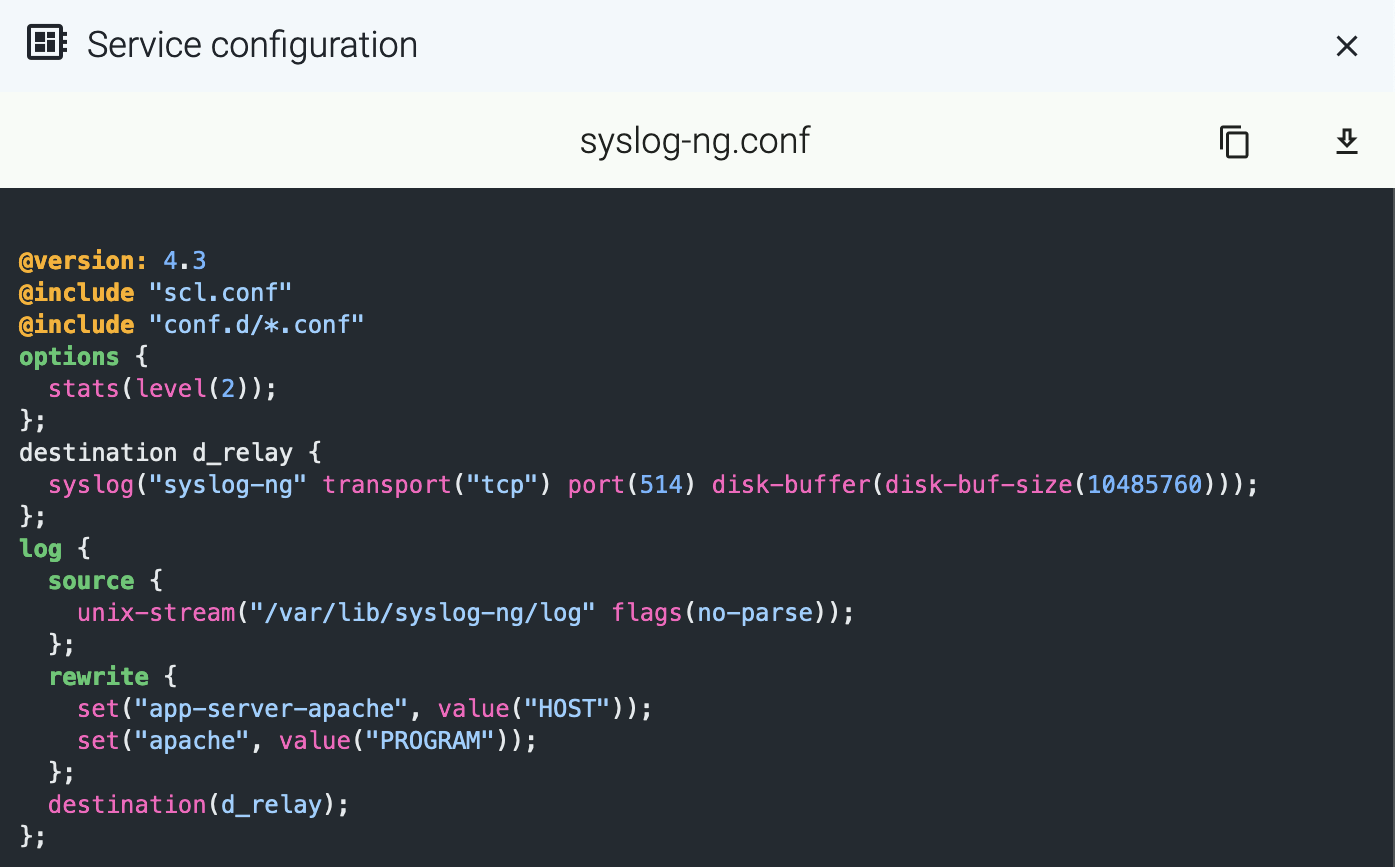

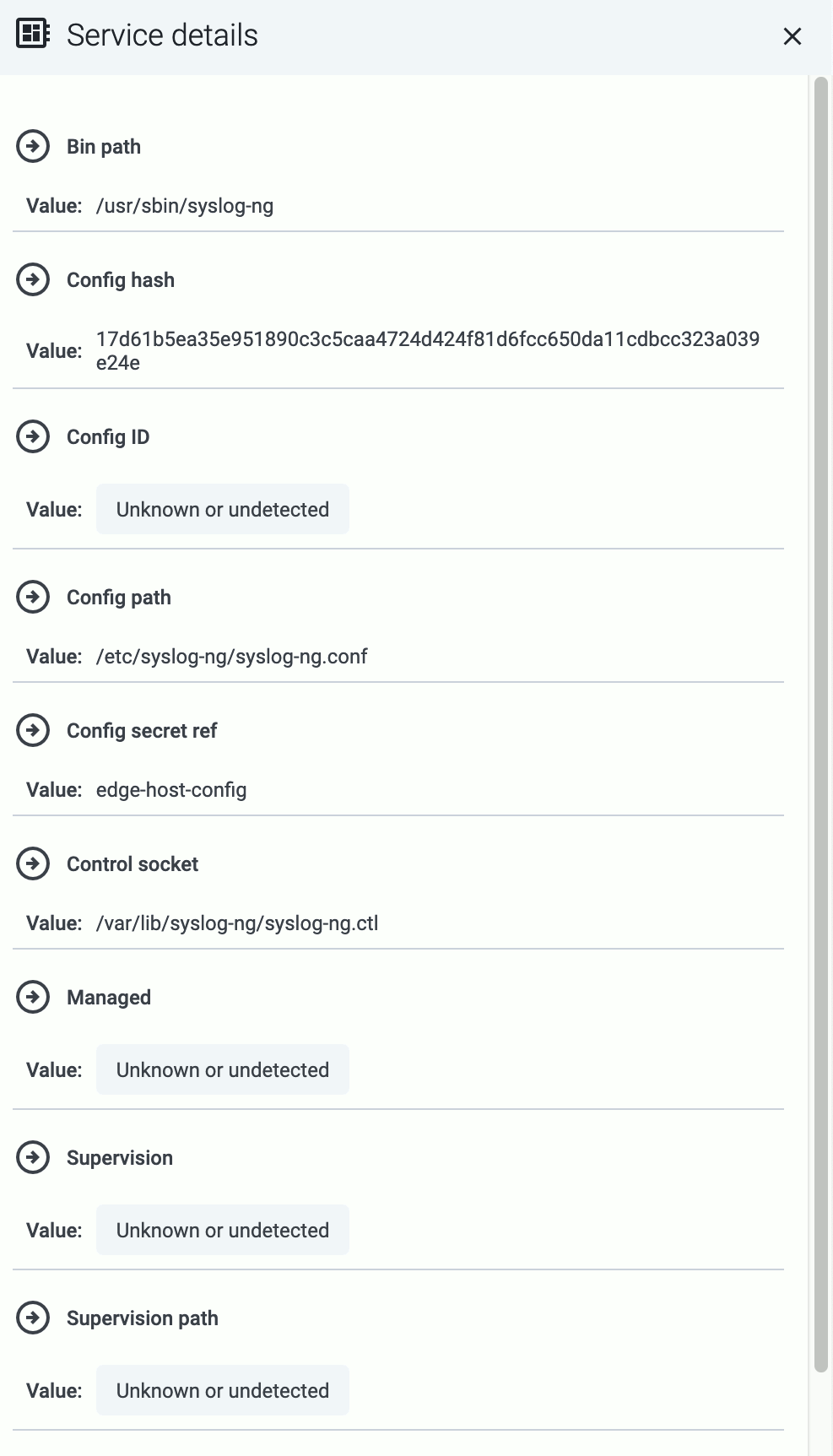

Onboarding allows you to collect metrics about the host, display the host on the Topology page, and tap into the log flow.

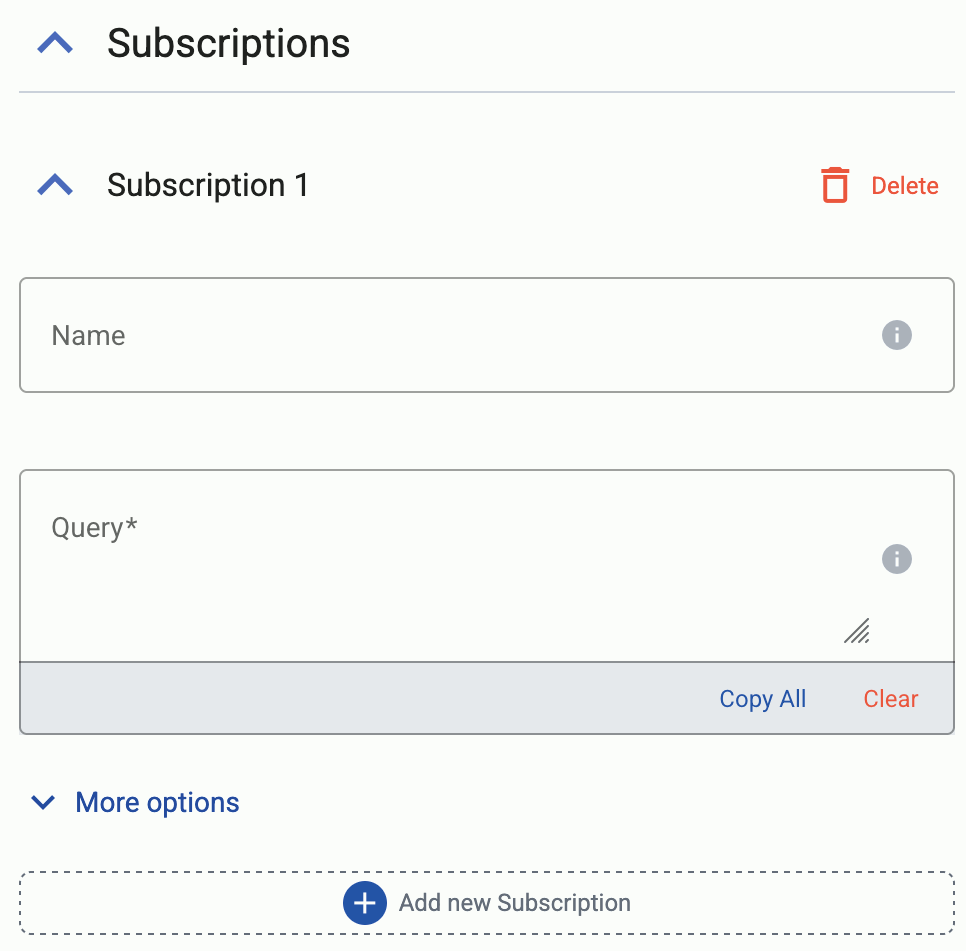

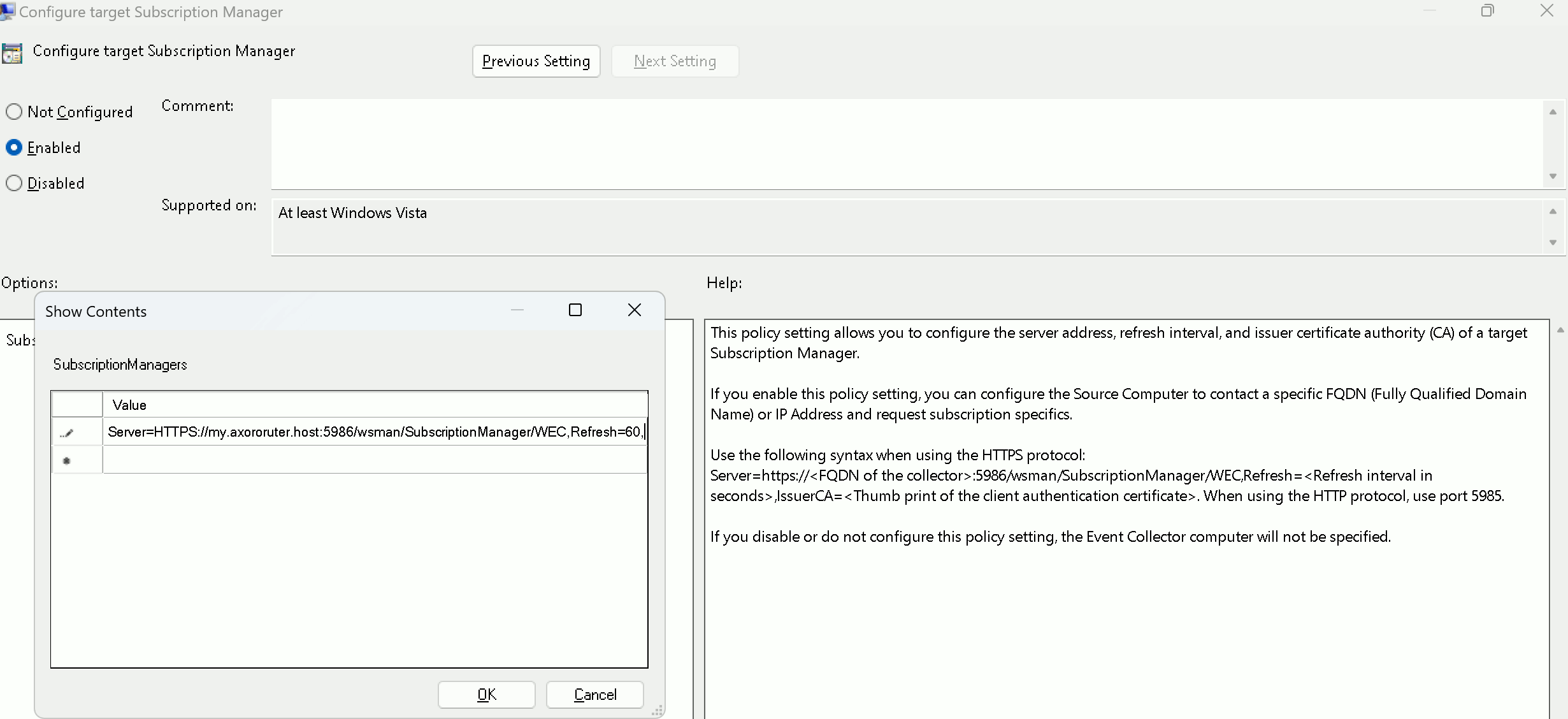

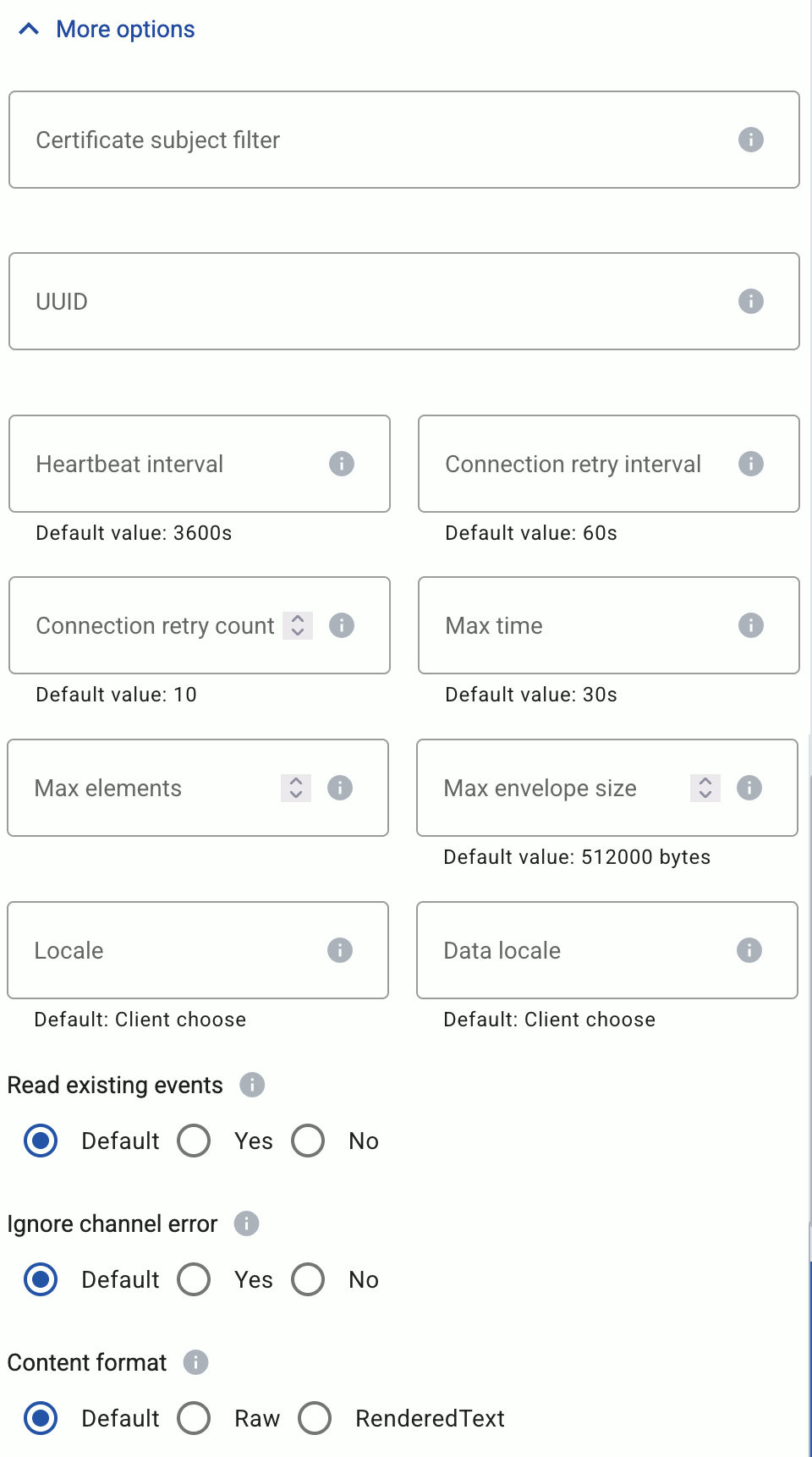

Onboarding requires you to modify the host and the configuration of the logging agent running on the host.