Reinvent Kubernetes Logging with Telemetry Controller

UPDATE: The installation procedure and the examples have been updated for the Telemetry Controller 0.0.10 release, which contained some API changes.

Telemetry Controller overview

What is the Telemetry Controller?

The Telemetry Controller is a Kubernetes controller that turns telemetry event streams (logs, metrics, and traces) into Kubernetes resources. It provides an opinionated, convenient, and robust multi-tenant API on top of OpenTelemetry. That way you don't have to bother with the details: you just describe what telemetry data you need and where it should be forwarded. The Telemetry Controller provides isolation and access control for telemetry data, similar to what Kubernetes provides for pods, secrets, and other resources.

Who can benefit from Telemetry Controller?

- Everyone who wants to use a friendly API to get logs, metrics and traces shipped with as little configuration as possible!

- Infrastructure and Platform engineers to provide a convenient self-serve, multi-tenant API for their teams based on namespace label selectors. Rancher and Capsule are two examples that work well with this model out of the box.

- Managed service providers to deliver a robust, efficient, and secure API for their clients to consume their telemetry events on demand.

What problems does Telemetry Controller solve?

Telemetry signals are collectively processed without isolation. There is no built-in solution to let users decide what happens to the data that belongs to them. The Telemetry Controller solves this problem by introducing new resources that give granular control over the shared data and hide the complexity of setting up and maintaining a well configured OpenTelemetry Collector manually.

- Administrators can define tenants to provide isolation and access control for telemetry data.

- Users can create subscriptions to select telemetry data streams accessible by their tenant only.

- Users can create or refer to available outputs for subscriptions to route and transport data.

Telemetry data may come from various sources. For example, but not limited to:

- Container logs that come from stdout/stderr and are written to the host filesystem by the container runtime.

- Logs, metrics, and traces that are sent to an OTLP endpoint.

- Metrics collected from exporter endpoints.

In general, the same distribution model can be applied to all of these telemetry signals.

What is beyond the scope of Telemetry Controller?

Telemetry Controller is not a generic OpenTelemetry Collector abstraction, so it doesn't aim to provide an API for all the knobs and levers of the Collector.

It also doesn't aim to provide aggregation capabilities, as it focuses primarily on the log collection problem space.

Which technologies does it use?

Telemetry Controller leverages the Axoflow distribution for OpenTelemetry Collector as the agent. You can use any upstream-compatible distribution as the agent, but we suggest using the Axoflow distribution for OpenTelemetry Collector as it is tailored for this use case:

- Since the configuration is opinionated, we can reduce the footprint of the final image by removing unnecessary components. This reduces not just the size, but also the vulnerability surface of the collector.

- The Axoflow distribution will contain additional components that extend the capabilities of the collector beyond what is available upstream, for example, to provide a richer set of metrics.

The Axoflow distribution is, and will remain, compatible with upstream regarding core features. Additionally, Telemetry Controller builds on the OpenTelemetry Operator as the primary controller to implicitly manage the collector.

Finally, born as a side project of the Logging operator, Telemetry Controller extends the Logging operator with collector-level selection and routing capabilities.

How does it relate to the Logging operator exactly?

Telemetry Controller implements filtering and routing right at the edge, rather than at the aggregation level. Basically it extends the Logging operator by bringing flexibility to the edge. The aggregators managed by the Logging operator are natural destinations for subscriptions and should work together flawlessly. In a situation where there is no need for aggregation, the Telemetry Controller can directly send all or a portion of your data to a remote destination. In scenarios like these it completely replaces other agents like Fluent Bit, Vector, or Promtail. Telemetry Controller also provides flexible routing capabilities out of the box with minimal complexity.

As an integral part of the Logging operator, it belongs to the same CNCF Sandbox project and leverages the support of the same community.

Telemetry Controller details

How does Telemetry Controller work?

Collecting logs with node agents best suits a multi-tenant scenario, because collection is ensured as-is, without intervention from development teams. With collection implemented, you can either:

- set up central log forwarding policies that apply to all workloads, or

- provide an interface for development teams to configure what they want to collect, and where they want to send it within their tenant’s scope.

In a Kubernetes native environment, it is straightforward to use the Kubernetes API as the interface, enabling operations teams to configure the base logging infrastructure, and specify tenants, while letting developers set up their desired log filtering and forwarding rules themselves.

However, naive node-level log collection doesn't provide the level of isolation and self-service that tenants of a shared cluster would expect, so we needed a solution that is aware of those boundaries.

Collecting telemetry data

Telemetry Controller uses the Axoflow distribution for OpenTelemetry Collector to collect logs from the nodes. The collector runs as a DaemonSet that mounts and reads the container log files present on the node. During the initial parsing of the log entries, we extract the pod name, pod namespace, and some other metadata. This allows us to associate the log entry with its source pod through the Kubernetes API, and enables us to fetch metadata that cannot be extracted from the message alone. For the default use case (discussed in the example), pod name and namespace are enough to route the message to the configured endpoint.
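To make the initial parsing step more concrete, here is a minimal shell sketch of how pod metadata can be recovered from the path of a container log file. It assumes the usual kubelet layout `/var/log/pods/<namespace>_<pod-name>_<pod-uid>/<container>/<n>.log`. The function name `parse_pod_log_path` and the sample path are hypothetical, and the collector does this parsing in its own configuration rather than in shell.

```shell
# Hypothetical helper: split a kubelet pod log path into namespace, pod name, and pod UID.
# Assumes the layout /var/log/pods/<namespace>_<pod-name>_<pod-uid>/<container>/<n>.log
parse_pod_log_path() (
  dir="${1#/var/log/pods/}"   # drop the fixed prefix
  dir="${dir%%/*}"            # keep only "<namespace>_<pod-name>_<pod-uid>"
  namespace="${dir%%_*}"      # text before the first underscore
  rest="${dir#*_}"            # "<pod-name>_<pod-uid>"
  pod="${rest%_*}"            # text before the last underscore
  uid="${rest##*_}"           # text after the last underscore
  printf '%s %s %s\n' "$namespace" "$pod" "$uid"
)

# Sample path (made up for illustration)
parse_pod_log_path /var/log/pods/dev_log-generator-abc_1234-uid/log-generator/0.log
```

For the sample path this prints `dev log-generator-abc 1234-uid`. The split on underscores works because namespace names, pod names, and UIDs cannot contain underscores themselves.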

Routing telemetry data

Routing can be configured in two distinct layers. First, the cluster administrator can define a Collector and Tenants, which are cluster-scoped resources. Second, Subscriptions are the concern of development teams and are namespaced resources, which allows fine-grained access control with Kubernetes RBAC.

The Collector

Collectors specify global settings for the OTEL Collector DaemonSet, and a tenantSelector that is used for listing Tenants that should be picked up by the Collector.

The collector attaches metadata to the telemetry data sources. In case of Kubernetes logs, it fetches additional metadata like pod labels and adds those as attributes to log records.

Currently only Kubernetes logs are supported, but stay tuned for more (generic) sources in the future! If you have specific ideas or needs, let us know.

The Tenants

Typically, a tenant is a set of Kubernetes namespaces, as it is in Rancher and Capsule. This is a best practice for managing multi-tenant workloads inside a single cluster.

A Tenant resource specifies two sets of namespace label selectors: subscriptionNamespaceSelectors select the namespaces that contain the Subscriptions created by the tenant's users, and logSourceNamespaceSelectors select the namespaces where the logs that concern the tenant's users are produced. In a trivial use case, these two label selectors are the same.

The Tenant is actually a routing rule that helps to make sure that telemetry data is only accessible to a given Subscription if it matches the policies set by the administrator.
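As a rough illustration of a tenant that spans several namespaces, the sketch below prints a Tenant manifest that selects namespaces by a shared label instead of by name. The helper `render_tenant`, the label key `tenant`, and the tenant name `team-a` are hypothetical; the field names are the ones used in the Tenant examples of this post.

```shell
# Hypothetical helper: print a Tenant whose Subscriptions and log sources
# are both selected by one namespace label (the trivial use case).
render_tenant() (
  name="$1"; label_key="$2"; label_value="$3"
  cat <<EOF
apiVersion: telemetry.kube-logging.dev/v1alpha1
kind: Tenant
metadata:
  labels:
    # Must match the tenantSelector of the Collector
    collectorLabel: example
  name: ${name}
spec:
  subscriptionNamespaceSelectors:
    - matchLabels:
        ${label_key}: ${label_value}
  logSourceNamespaceSelectors:
    - matchLabels:
        ${label_key}: ${label_value}
EOF
)

# Print the manifest for review; pipe it to "kubectl apply -f -" to create the Tenant.
render_tenant team-a tenant team-a
```

With this approach, any namespace that carries the (assumed) label `tenant=team-a` would become part of the tenant, so adding a namespace to a tenant is a matter of labeling it.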

In the long run, tenants should not be limited to the Kubernetes telemetry data of workloads running in specific namespaces, but should also be composable from shared proxy logs, Kubernetes node logs, or even external sources.

The Subscriptions

Tenant users can define their Subscriptions in the namespace(s) of their Tenants. Subscriptions can make a selection on the telemetry data that is already filtered as part of the Tenant definition and set Output endpoints where the data is forwarded. Such an endpoint can be:

- an aggregator, for example, Logging operator

- a remote telemetry backend, for example, Loki, Jaeger, or Prometheus, or

- any managed service provider, for example, Splunk or Sumo Logic.

Subscription selectors are currently implemented with raw OTTL statements that allow filtering based on all the available attributes the OpenTelemetry collector supports by default. For example, you can filter on Kubernetes pod labels.

In a future version this will become a more restricted API to make sure that a subscription cannot add any arbitrary statement which could be problematic from a security point of view.
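The following sketch shows what a filtering Subscription might look like. It only prints the manifest, so it can be reviewed before applying. The helper `render_subscription`, the Subscription name, and especially the OTTL statement are assumptions: the attribute key `k8s.pod.labels.app` is a guess at how pod labels are exposed on the records, so check the attributes of your own records before relying on it.

```shell
# Hypothetical helper: print a Subscription that forwards only the records
# selected by the given OTTL statement to an Output in the same namespace.
render_subscription() (
  name="$1"; namespace="$2"; output="$3"; statement="$4"
  cat <<EOF
apiVersion: telemetry.kube-logging.dev/v1alpha1
kind: Subscription
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  ottl: '${statement}'
  outputs:
    - name: ${output}
      namespace: ${namespace}
EOF
)

# The attribute key below is an assumption, not a documented name.
render_subscription subscription-dev-nginx dev otlp-dev-openobserve \
  'route() where attributes["k8s.pod.labels.app"] == "nginx"'
```

The statement is wrapped in single quotes in the YAML so that the double quotes OTTL needs for string literals pass through unchanged.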

Telemetry Controller demo

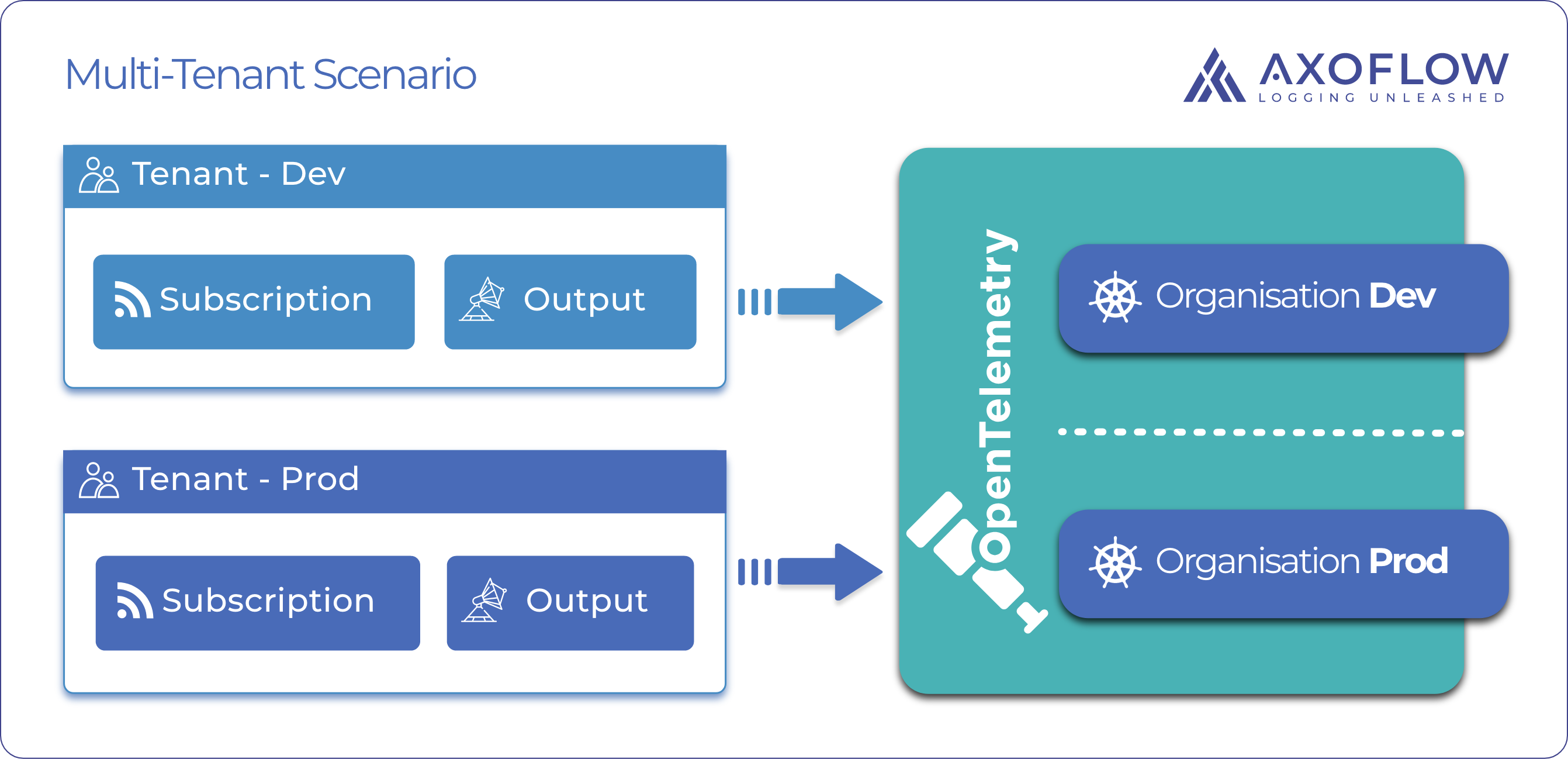

In the demo, we will set up telemetry forwarding for a cluster that has two environments (tenants), dev and prod. The prod tenant has a namespace designated for its workloads, and a separate namespace for deploying subscriptions. The dev tenant has both its workload and subscriptions in the same namespace. Data will be routed to the same external OpenObserve instance, separated as two organizations. (If you're interested in sending data from Telemetry Controller to Logging operator, see the Using Telemetry Controller with Logging Operator blog post.)

Prerequisites

- Create a KinD cluster.

- Deploy an OpenObserve instance, where the logs will be collected and visualized.

      kubectl apply -f https://raw.githubusercontent.com/kube-logging/telemetry-controller/0.0.3/docs/examples/simple-demo/openobserve.yaml

- To acquire the auth token to enable log ingestion, check the Web UI of the OpenObserve instance.

      # get the username and password required to log in to OpenObserve
      kubectl get sts -n openobserve -o yaml | grep ZO_ROOT_USER -A1
      kubectl -n openobserve port-forward svc/openobserve 5080:5080 &
      # save the token for ingestion in a variable
      TOKEN=yourTokenFromTheUI

Install the Telemetry Controller

Deploy the Telemetry Controller

    helm upgrade --install --wait --create-namespace --namespace telemetry-controller-system telemetry-controller oci://ghcr.io/kube-logging/helm-charts/telemetry-controller

Create namespaces for the example scenario

    kubectl create ns collector # Namespace where the collector DaemonSet will be deployed
    kubectl create ns prod-ctrl # Namespace where the Subscriptions for the prod environment are deployed
    kubectl create ns prod-workload # Namespace for prod workload resources
    kubectl create ns dev # Namespace for the dev environment

Deploy the log pipeline

First, set up a Collector:

    kubectl apply -f - <<EOF
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Collector
    metadata:
      name: example-collector
    spec:
      # Since the Collector is cluster-scoped, controlNamespace specifies the namespace where the DaemonSet will be deployed
      controlNamespace: collector
      # The tenantSelector LabelSelector is used to select the Tenants that are assigned to this Collector
      tenantSelector:
        matchLabels:
          collectorLabel: example
    EOF

IMPORTANT: The collector does not specify any routing by default. You need to configure at least one Tenant and one Subscription to forward any logs.

In the example scenario, two tenants are present on the cluster, each having one namespace for Subscriptions, and one set as logging source, where an application will be deployed.

    kubectl apply -f - <<EOF
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Tenant
    metadata:
      labels:
        # This label ensures that the tenant is selected by the Collector
        collectorLabel: example
      name: dev
    spec:
      # The subscriptionNamespaceSelectors field is used to specify the namespaces, which will be considered when listing Subscriptions
      subscriptionNamespaceSelectors:
        - matchLabels:
            kubernetes.io/metadata.name: dev
      # The logSourceNamespaceSelectors field is used to specify the namespaces, which will be considered when selecting telemetry data for the tenant
      logSourceNamespaceSelectors:
        - matchLabels:
            kubernetes.io/metadata.name: dev
    ---
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Tenant
    metadata:
      labels:
        collectorLabel: example
      name: prod
    spec:
      subscriptionNamespaceSelectors:
        - matchLabels:
            kubernetes.io/metadata.name: prod-ctrl
      logSourceNamespaceSelectors:
        - matchLabels:
            kubernetes.io/metadata.name: prod-workload
    EOF

Let's say that you are a member of the development team (one of the tenants), and you want to forward messages. You have to define a Subscription and an Output to select a subset or all of the logs that are produced in the tenant's workload namespace, and to define where they should be forwarded. Outputs can be configured and selected in any namespace, meaning that the operations team can pre-configure log sink endpoints, and the developers can use them in their Subscriptions.
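The Output manifests in this demo are applied through an unquoted heredoc (`<<EOF`), so the shell expands `${TOKEN}` before kubectl sees the YAML. If the variable is empty, the header silently becomes `Basic ` and ingestion is likely to be rejected. A small guard like the following can catch that early; the function name `require_token` is hypothetical.

```shell
# Hypothetical guard: fail early when TOKEN is unset or empty, because an
# unquoted heredoc would otherwise expand ${TOKEN} to an empty string.
require_token() {
  if [ -z "${TOKEN:-}" ]; then
    echo "TOKEN is empty: copy it from the OpenObserve Web UI first" >&2
    return 1
  fi
}

# Usage: run the guard before applying manifests that reference ${TOKEN}, e.g.
#   require_token && kubectl apply -f - <<EOF ... EOF
```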

Set up the outputs first, one for each tenant. The Subscriptions will refer to them.

    kubectl apply -f - <<EOF
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Output
    metadata:
      name: otlp-prod-openobserve
      namespace: prod-ctrl
    spec:
      otlp:
        # Selecting an OpenObserve GRPC endpoint in the openobserve namespace
        endpoint: openobserve-otlp-grpc.openobserve.svc.cluster.local:5081
        headers:
          # This token can be acquired through the OpenObserve Web UI
          Authorization: "Basic ${TOKEN}"
          organization: prod
          stream-name: default
        tls:
          insecure: true
    ---
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Output
    metadata:
      name: otlp-dev-openobserve
      namespace: dev
    spec:
      otlp:
        # Selecting an OpenObserve GRPC endpoint in the openobserve namespace
        endpoint: openobserve-otlp-grpc.openobserve.svc.cluster.local:5081
        headers:
          # This token can be acquired through the OpenObserve Web UI
          Authorization: "Basic ${TOKEN}"
          organization: dev
          stream-name: default
        tls:
          insecure: true
    EOF

Then create a Subscription for each tenant:

    kubectl apply -f - <<EOF
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Subscription
    metadata:
      name: subscription-prod
      namespace: prod-ctrl
    spec:
      # This statement will select all messages from the namespaces specified as log source for the tenant
      ottl: 'route()'
      # Multiple outputs can be set for a Subscription. In this case, logs will be sent to an OpenObserve instance through OTLP protocol
      outputs:
        - name: otlp-prod-openobserve
          namespace: prod-ctrl
    ---
    apiVersion: telemetry.kube-logging.dev/v1alpha1
    kind: Subscription
    metadata:
      name: subscription-dev
      namespace: dev
    spec:
      ottl: 'route()'
      outputs:
        - name: otlp-dev-openobserve
          namespace: dev
    EOF

With the telemetry pipeline in place, we need applications to provide the log messages. Our log-generator is a fitting tool for that, providing random web server logs. Install the log-generator:

    helm install --wait --generate-name oci://ghcr.io/kube-logging/helm-charts/log-generator --version 0.7.0 --namespace dev
    helm install --wait --generate-name oci://ghcr.io/kube-logging/helm-charts/log-generator --version 0.7.0 --namespace prod-workload

To open the UI of the OpenObserve instance, we have to set up port-forwarding (skip this step if the port-forward from the prerequisites is still running).

    kubectl -n openobserve port-forward svc/openobserve 5080:5080 &

On the UI, we can see the messages coming through their respective paths and arriving in their respective organizations.
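If no messages show up, it can help to confirm that every piece of the pipeline exists. The sketch below lists the demo resources; the helper `check_pipeline` is hypothetical, and the plural resource names (`collectors`, `tenants`, `subscriptions`, `outputs`) are assumed to follow the usual CRD naming for the `telemetry.kube-logging.dev` API group, so adjust them if `kubectl api-resources` shows otherwise.

```shell
# KUBECTL can be overridden (for example with "echo") to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: list the resources created in the demo.
check_pipeline() {
  # Cluster-scoped resources defined by the administrator
  "$KUBECTL" get collectors.telemetry.kube-logging.dev,tenants.telemetry.kube-logging.dev
  # Namespaced resources owned by the tenants
  "$KUBECTL" get subscriptions.telemetry.kube-logging.dev,outputs.telemetry.kube-logging.dev --all-namespaces
  # Collector pods started in the controlNamespace of the demo
  "$KUBECTL" get pods --namespace collector
}

# Usage: run "check_pipeline" against the demo cluster.
```

The fully qualified resource names avoid ambiguity on clusters where another operator defines resources with the same short name.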

What’s next

Axoflow provides telemetry pipeline management for hybrid environments. The Telemetry Controller is the primary building block of our Kubernetes offering. We believe in building on a strong open-source foundation and offering advanced capabilities like UI, RBAC, and AI-assisted management on top of it.

The Telemetry Controller project is already useful for specific use cases, but there are some promising possibilities for future development:

- Today the project supports only Kubernetes log sources, but thanks to OpenTelemetry we can start supporting all telemetry signals easily, by adding new Collector types.

- Host logs, sidecar logs, Kubernetes event logs, and external telemetry sources are planned to be supported as well.

- Rich metrics are yet to be added throughout the whole pipeline to be able to observe how the agent performs and behaves.

- Automatic merging of overlapping subscriptions will be a powerful optimization to avoid unnecessary bandwidth consumption in certain scenarios.

- Easy rehydration of telemetry events from archives will also be an exciting feature.
