This section describes the main concepts of Axoflow.


Concepts

- 1: Processing elements

- 2: Automatic data processing

- 3: Host attribution and inventory

- 4: Policy based routing

- 5: Classify and reduce security data

- 6: Normalization

- 7: Reliable transport

1 - Processing elements

Axoflow processes the data transported in your security data pipeline in the following stages:

- Sources: Data enters the pipeline from a data source. A data source can be an external appliance or application, or a log collector agent managed by Axoflow.

- Sources are hosts that are sending data to a data aggregator, like AxoRouter.

- Edges are source hosts that are running a collector agent managed by AxoConsole, or have an Axolet agent reporting metrics from the host.

For edge hosts, you can create:

- collection rules that collect local data (for example, from log files, or Windows Event Log channels), and

- data forwarding rules to transport the collected data to a router.

- Custom metadata on the source: You can configure Axoflow to automatically add custom metadata to the data received from a source.

- Router: The AxoRouter data aggregator processes the data it receives from the sources:

- Connector: AxoRouter hosts receive data using source connectors. The different connectors are responsible for different protocols (like Syslog or OpenTelemetry). Some metadata labels are added to the data based on the connector that received it.

- Metadata: AxoRouter classifies and identifies the incoming messages and adds metadata, for example, the vendor and product of the identified source.

- Data extraction: AxoRouter extracts the relevant information from the content of the messages, and makes it available as structured data.

The router can perform other processing steps, as configured in the flows that apply to the specific router (see next step).

- Flow: You configure flows in AxoConsole, and Axoflow uses them to configure the AxoRouter instances to filter, route, and process the security data. Flows also allow you to automatically remove unneeded or redundant information from the messages, reducing data volume and SIEM and storage costs.

- Destination: The router sends data to the specified destination in a format optimized for the specific destination.
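The stages above can be pictured as a chain of small functions. The following is a minimal Python sketch under stated assumptions, not how AxoRouter is implemented: the `Event` type, the label names (`connector.protocol`, `vendor`, `product`), the single classification rule, and the `siem`/`archive` destinations are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Event:
    raw: str
    labels: dict = field(default_factory=dict)   # metadata labels
    fields: dict = field(default_factory=dict)   # extracted structured data

def receive(raw, protocol):
    # Connector: labels that depend only on how the data arrived
    return Event(raw=raw, labels={"connector.protocol": protocol})

def classify(event):
    # Metadata: identify vendor and product (single toy rule)
    if "id=firewall" in event.raw and " sn=" in event.raw:
        event.labels.update(vendor="sonicwall", product="firewall")
    return event

def extract(event):
    # Data extraction: unquoted key=value tokens become structured fields
    for token in event.raw.split():
        key, sep, value = token.partition("=")
        if sep:
            event.fields[key] = value
    return event

def apply_flow(event):
    # Flow: choose a destination from the labels (hypothetical rule)
    return "siem" if event.labels.get("vendor") == "sonicwall" else "archive"

def process(raw, protocol="syslog"):
    event = extract(classify(receive(raw, protocol)))
    return apply_flow(event), event
```

Keeping each stage a separate function mirrors the idea that connectors, classification, extraction, and flows are independent steps that each add to the same event.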

2 - Automatic data processing

When forwarding data to your SIEM, poor-quality data and malformed logs that lack critical fields like timestamps or hostnames need to be fixed. The usual solutions fix the problem in the SIEM and involve complex regular expressions, which are difficult to create and maintain. SIEM users often rely on vendors to manage these rules, but support is limited, especially for less popular devices. Axoflow offers a unique solution by automatically processing, curating, and classifying data before it’s sent to the SIEM, ensuring accurate, structured, and optimized data, reducing ingestion costs and improving SIEM performance.

The problem

The main issue related to classification is that many devices send malformed messages: missing timestamp, missing hostname, invalid message format, and so on. Such errors can cause different kinds of problems:

- Log messages are often routed to different destinations based on the sender hostname. Missing or invalid hostnames mean that the message is not attributed to the right host, and often doesn’t arrive at its intended destination.

- An incorrect timestamp or timezone hampers investigations during an incident, resulting in potentially critical data failing to show up (or extraneous data appearing) in queries for a particular period.

- Invalid data can lead to memory leaks or resource overload in the processing software (and to unusable monitoring dashboards) when a sequence number or other rapidly varying field is mistakenly parsed as the hostname, program name, or other low cardinality field.

Overall, they decrease the quality of security data you’re sending to your SIEM tools, which increases false positives, requires secondary data processing to clean, and increases query time – all of which ends up costing firms a lot more.

For instance, this is a log message from a SonicWall firewall appliance:

<133> id=firewall sn=C0EFE33057B0 time="2024-10-07 14:56:47 UTC" fw=172.18.88.37 pri=6 c=1024 m=537 msg="Connection Closed" f=2 n=316039228 src=192.0.0.159:61254:X1: dst=10.0.0.7:53:X3:SIMILDC01 proto=udp/dns sent=59 rcvd=134 vpnpolicy="ELG Main"

A well-formed syslog message should look like this:

<priority>timestamp hostname application: message body

As you can see, the SonicWall format is completely invalid after the initial <priority> field. Instead of the timestamp, hostname, and application name comes the free-form part of the message (in this case a whitespace-separated key=value list). Unless you extract the hostname and timestamp from the content of this malformed message, you won’t be able to reconstruct the course of events during a security incident.
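As an illustration, here is a minimal Python sketch (not Axoflow's implementation) that recovers the missing header information from the SonicWall sample above. It assumes the `time=` value always uses the UTC format shown in the sample, and it treats the `fw=` address as the host and `sn=` as the device ID; the function name `fix_sonicwall` is hypothetical.

```python
import shlex
from datetime import datetime, timezone

def fix_sonicwall(message):
    """Recover timestamp, host, and device ID from a SonicWall key=value message."""
    pri_end = message.index(">")
    pri = int(message[1:pri_end])
    body = message[pri_end + 1:]
    # shlex.split honours double quotes, so time="2024-10-07 14:56:47 UTC" stays one token
    pairs = dict(token.split("=", 1) for token in shlex.split(body) if "=" in token)
    ts = datetime.strptime(pairs["time"], "%Y-%m-%d %H:%M:%S UTC").replace(tzinfo=timezone.utc)
    return {
        "pri": pri,
        "timestamp": ts,
        "host": pairs.get("fw"),        # assumption: the firewall address identifies the host
        "device_id": pairs.get("sn"),   # serial number, useful for host attribution
        "fields": pairs,
    }
```

With the timestamp and host recovered, a pipeline can rebuild a well-formed `<priority>timestamp hostname application: message body` header before forwarding the message.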

Axoflow provides data processing, curation, and classification intelligence that’s built into the data pipeline, so it processes and fixes the data before it’s sent to the SIEM.

Our solution

Our data engine and database solution automatically processes the incoming data: AxoRouter recognizes and classifies the incoming data, applies device-specific fixes for the errors, then enriches and optimizes the formatting for the specific destination (SIEM). This approach has several benefits:

- Cost reduction: All data is processed before it’s sent to the SIEM. That way, we can automatically reduce the amount of data sent to the SIEM (for example, by removing empty and redundant fields), cutting your data ingestion costs.

- Structured data: Axoflow recognizes the format of the incoming data payload (for example, JSON, CSV, LEEF, free text), and automatically parses the payload into a structured map. This allows us to have detailed, content-based metrics and alerts, and also makes it easy for you to add custom transformations if needed.

- SIEM-independent: The structured data representation allows us to support multiple SIEMs (and other destinations) and optimize the data for every destination.

- Performance: Compared to the commonly used regular expressions, this approach is more robust and performs better, allowing you to process more data with fewer resources.

- Maintained by Axoflow: We maintain the database; you don’t have to work with it. This includes updates for new product versions and adding new devices. We proactively monitor and check the new releases of main security devices for logging-related changes and update our database. (If something’s not working as expected, you can easily submit log samples and we’ll fix it ASAP.) Currently, we have over 80 application adapters in our database.
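To show what recognizing the payload format and parsing it into a structured map can look like, here is a simplified Python sketch. The detection order and the "three or more commas means CSV" heuristic are assumptions for illustration; real classification needs far more context than this. The LEEF branch assumes LEEF 1.0, where attributes are tab-separated after the fifth `|`.

```python
import csv
import io
import json

def parse_payload(payload):
    """Guess the payload format and return (format, structured map)."""
    try:
        value = json.loads(payload)
        if isinstance(value, dict):
            return "json", value
    except json.JSONDecodeError:
        pass
    parts = payload.split("|", 5)
    if payload.startswith("LEEF:") and len(parts) == 6:
        # LEEF:Version|Vendor|Product|Version|EventID|key=value<TAB>key=value...
        attrs = dict(kv.split("=", 1) for kv in parts[5].split("\t") if "=" in kv)
        return "leef", {"vendor": parts[1], "product": parts[2], **attrs}
    if payload.count(",") >= 3:
        # Heuristic: treat comma-heavy payloads as CSV, keyed by column index
        row = next(csv.reader(io.StringIO(payload)))
        return "csv", {str(i): v for i, v in enumerate(row)}
    return "text", {"message": payload}
```

Once every payload is a map, metrics, alerts, and custom transformations can work on named fields instead of raw strings.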

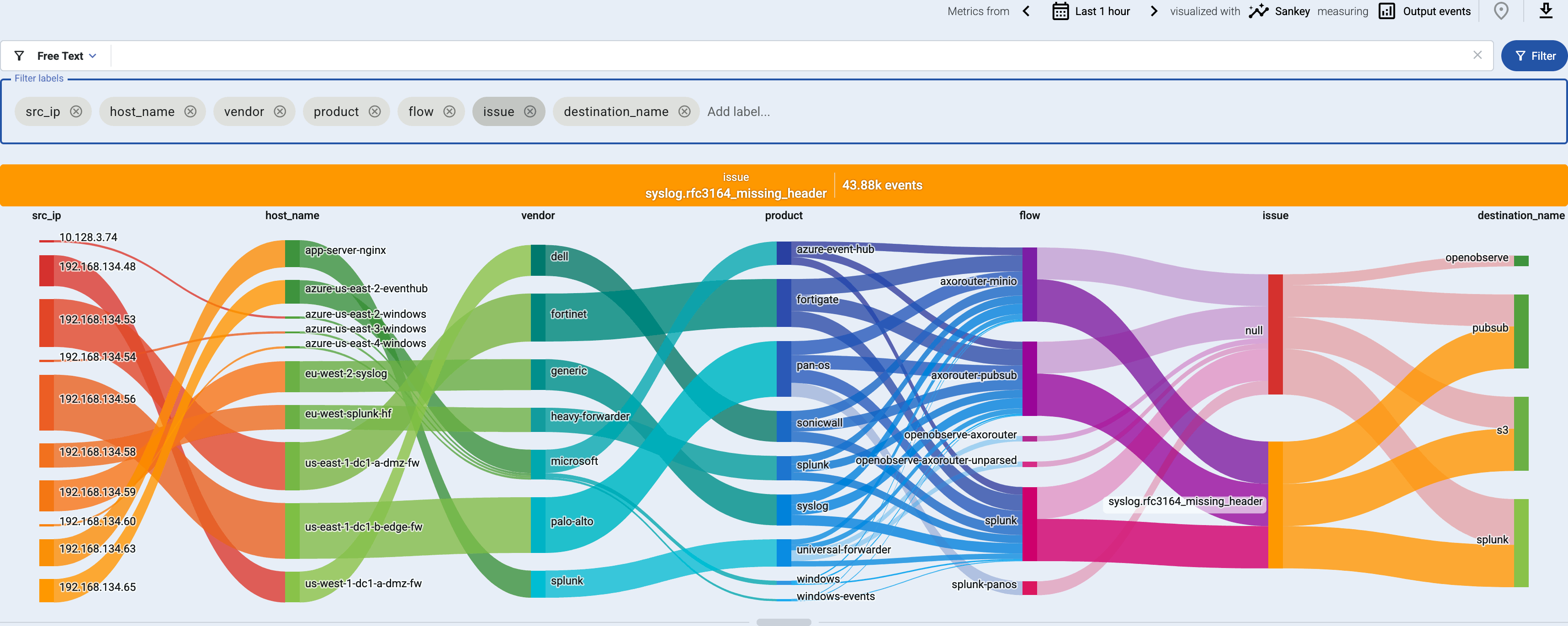

The automatic classification and curation also adds labels and metadata that can be used to make decisions and route your data. Messages with errors are also tagged with error-specific tags. For example, you can easily route all firewall and security logs to your Splunk deployment, and exclude logs (like debug logs) that have no security relevance and shouldn’t be sent to the SIEM.
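The routing decision described above can be sketched as a function over the labels attached during classification. This is a hypothetical Python sketch: the label keys (`product_category`, `severity`, `issues`) and the destination names (`splunk`, `quarantine`) are assumptions, not Axoflow's actual label schema.

```python
def route(labels):
    """Return destinations for a message based on its (hypothetical) labels."""
    if labels.get("severity") == "debug":
        return []  # no security relevance: keep it out of the SIEM
    destinations = []
    if labels.get("product_category") in {"firewall", "security"}:
        destinations.append("splunk")
    # Messages tagged with syslog errors go to a separate review destination
    if any(tag.startswith("syslog.") for tag in labels.get("issues", [])):
        destinations.append("quarantine")
    return destinations
```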

Message fixup

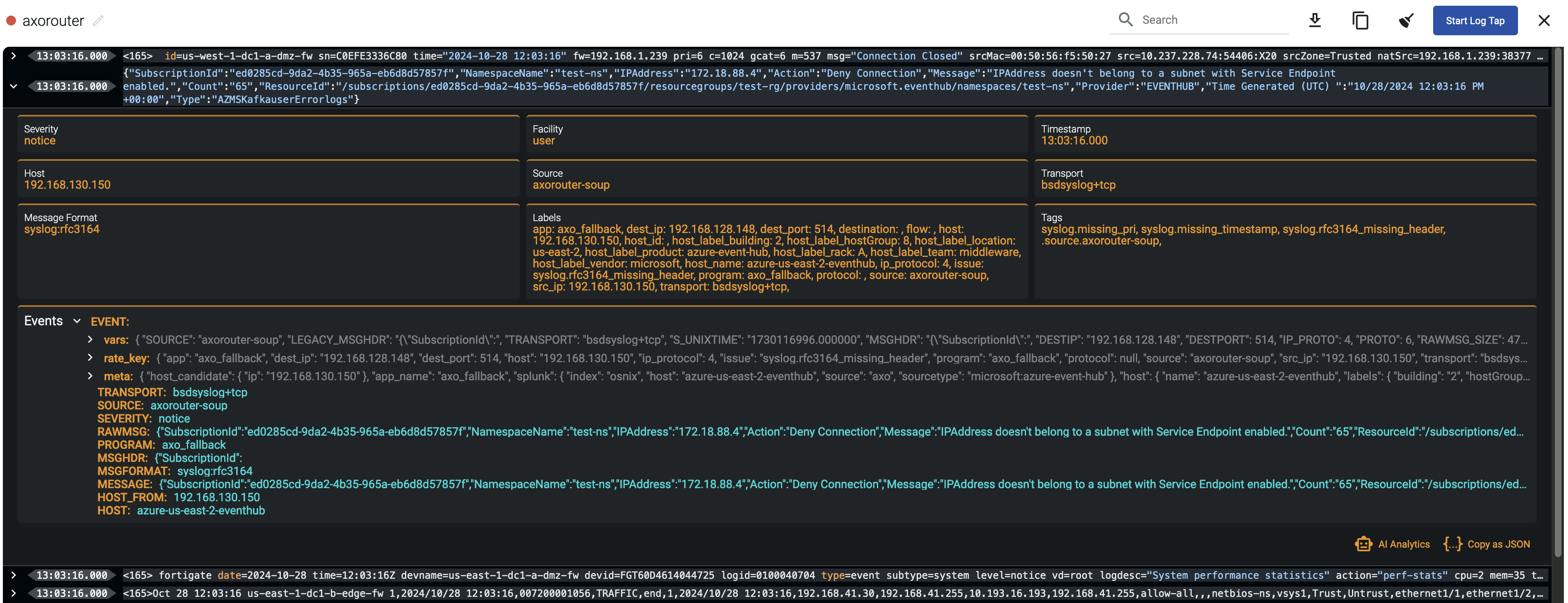

AxoConsole allows you to quickly drill down to find log flows with issues, and to tap into the log flow and see samples of the specific messages that are processed, along with the related parsing information, like tags that describe the errors of invalid messages.

- message.utf8_sanitized: The message is not valid UTF-8.

- syslog.missing_timestamp: The message has no timestamp.

- syslog.invalid_hostname: The hostname field doesn’t seem to be valid, for example, it contains invalid characters.

- syslog.missing_pri: The priority (PRI) field is missing from the message.

- syslog.unexpected_framing: An octet count was found in front of the message, suggesting invalid framing.

- syslog.rfc3164_missing_header: The date and the host are missing from the message – practically that’s the entire header of RFC3164-formatted messages.

- syslog.rfc5424_unquoted_sdata_value: The message contains an incorrectly quoted RFC5424 SDATA field.

- message.parse_error: Some other parsing error occurred.

You can get an overview of such problems in your pipeline on the Analytics page by adding issue to the Filter labels field.
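A few of these tags can be detected with simple checks. The following Python sketch is a simplified illustration, not Axoflow's detection logic: it only recognizes RFC 3164-style timestamps (so a valid RFC 5424 message would be tagged as missing its timestamp), and covers four of the tags listed above.

```python
from datetime import datetime

def detect_issues(raw):
    """Tag a few syslog issues using simplified, RFC 3164-only heuristics."""
    tags = []
    try:
        text = raw.decode("utf-8")
    except UnicodeDecodeError:
        # Replace invalid bytes with U+FFFD and record that we did
        text = raw.decode("utf-8", errors="replace")
        tags.append("message.utf8_sanitized")
    head, _, rest = text.partition(" ")
    if head.isdigit() and rest.startswith("<"):
        # An octet count (RFC 6587 framing) precedes the message itself
        tags.append("syslog.unexpected_framing")
        text = rest
    end = text.find(">", 0, 5)
    if not text.startswith("<") or end < 2 or not text[1:end].isdigit():
        tags.append("syslog.missing_pri")
        return tags
    header = text[end + 1:end + 16]
    try:
        # Prefix a leap year so that "Feb 29" also parses
        datetime.strptime("2000 " + header, "%Y %b %d %H:%M:%S")
    except ValueError:
        tags.append("syslog.missing_timestamp")
    return tags
```

For example, the SonicWall message shown earlier would get the `syslog.missing_timestamp` tag, because its PRI field is followed directly by `id=firewall`.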

3 - Host attribution and inventory

Axoflow’s built-in inventory solution enriches your security data with critical metadata (like the origin host) so you can pinpoint the exact source of every data entry, enabling precise, label-based routing and more informed security decisions.

Enterprises and organizations collect security data (like syslog and other event data) from various data sources, including network devices, security devices, servers, and so on. When looking at a particular log entry during a security incident, it’s not always trivial to determine what generated it. Was it an appliance or an application? Which team is the owner of the application or device? If it was a network device like a switch or a Wi-Fi access point (of which even medium-sized organizations have dozens), can you tell which one it was, and where it is located?

Cloud-native environments, like Kubernetes, have addressed this issue using resource metadata. You can attach labels to every element of the infrastructure (containers, pods, nodes, and so on) to include region, role, owner, or other custom metadata. When collecting log data from the applications running in containers, the log collector agent can retrieve these labels and attach them to the log data as metadata. This helps immensely to associate routing decisions and security conclusions with the source systems.

In non-cloud environments, like traditionally operated physical or virtual machine clusters, the logs of applications, appliances, and other data sources lack such labels. To identify the log source, you’re stuck with the sender’s IP address, the sender’s hostname, and in some cases the path and filename of the log file, plus whatever information is available in the log message.

Why isn’t the IP address or hostname enough?

- Source IP addresses are poor identifiers, as they aren’t strongly tied to the host, especially when they’re dynamically allocated using DHCP or when a group of senders is behind a NAT and shares the same address.

- Some organizations encode metadata into the hostname or DNS record. However, compared to the volume of log messages, DNS resolving is slow. Also, the DNS record is available at the source, but might not be available at the log aggregation device or the log server. As a result, this data is often lost.

- Many data sources and devices omit important information from their messages. For example, the log messages of Cisco routers by default omit the hostname. (They can be configured to send their hostname, but unfortunately, often they aren’t.) You can resolve their IP address to obtain the hostname of the device, but that leads back to the problem in the previous point.

- In addition, all the above issues are rooted in human configuration practices, which tend to be the main cause of anomalies in the system.

Correlating data to data source

Some SIEMs (like IBM QRadar) rely on the sender IP address to identify the data source. Others, like Splunk, delegate the task of providing metadata to the collector agent, but log sources often don’t supply metadata, and even if they do, the information is based on IP address or DNS.

Certain devices, like SonicWall firewalls, include a unique device ID in the content of their log messages. Having access to this ID makes attribution straightforward, but logging solutions rarely extract such information. AxoRouter does.

Axoflow builds an inventory to match the messages in your data flow to the available data sources, based on data including:

- IP address, host name, and DNS records of the source (if available),

- serial number, device ID, and other unique identifiers extracted from the messages, and

- metadata based on automatic classification of the log messages, like product and vendor name.
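One way to combine these signals is to try the strongest identifier first. The following Python sketch assumes a priority order (device ID, then hostname, then sender IP) that reflects the weaknesses described above; the `Host` structure and the `attribute` function are hypothetical, not Axoflow's inventory model.

```python
from dataclasses import dataclass, field

@dataclass
class Host:
    name: str
    ips: set = field(default_factory=set)
    hostnames: set = field(default_factory=set)
    device_ids: set = field(default_factory=set)
    labels: dict = field(default_factory=dict)

def attribute(hosts, sender_ip, hostname=None, device_id=None):
    """Match a message to an inventory host, preferring the strongest identifier."""
    # 1. Unique identifiers extracted from the message content (serial number, device ID)
    if device_id:
        for host in hosts:
            if device_id in host.device_ids:
                return host
    # 2. Hostname from the message header or from DNS
    if hostname:
        for host in hosts:
            if hostname in host.hostnames:
                return host
    # 3. Sender IP address: the weakest signal (DHCP, NAT); first match wins
    for host in hosts:
        if sender_ip in host.ips:
            return host
    return None
```

Usage: two firewalls behind the same NAT address are indistinguishable by IP, but the serial number extracted from the message still identifies the right one:

```python
inventory = [
    Host("fw-a", ips={"10.0.0.1"}, device_ids={"C0EFE33057B0"}),
    Host("fw-b", ips={"10.0.0.1"}, device_ids={"C0EFE33057B1"}),
]
attribute(inventory, "10.0.0.1", device_id="C0EFE33057B1").name  # "fw-b"
```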

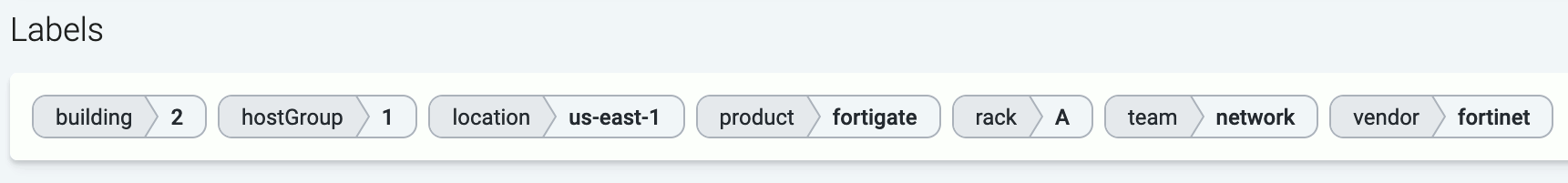

Axoflow (or more precisely, AxoRouter) classifies the processed log data to identify common vendors and products, and automatically applies labels to attach this information.

You can also integrate the telemetry pipeline with external asset management systems to further enrich your security data with custom labels and additional contextual information about your data sources.

4 - Policy based routing

Axoflow’s host attribution gives you unprecedented possibilities to route your data. You can tell exactly what kind of data you want to send where, based not only on technical parameters like sender IP or application name, but also on a much more fine-grained inventory that includes information about the devices, their roles, and their location. For example:

- The vendor and the product (for example, a SonicWall firewall)

- The physical location of the device (geographical location, rack number)

- Owner of the device (organization/department/team)

Axoflow automatically adds metadata labels based on information extracted from the data payload, but you can also add static custom labels to hosts manually, or dynamically using flows.

Paired with the information from the content of the messages, all this metadata allows you to formulate high-level, declarative policies and alerts using the metadata labels, for example:

- Route the logs of devices to the owner’s Splunk index.

- Route every firewall and security log to a specific index.

- Let the different teams manage and observe their own devices.

- Grant granular access based on relevant log content (for example, identify a proxy log stream based on the upstream address), instead of only on device access.

- Ensure that specific logs won’t cross geographic borders, violating GDPR or other compliance requirements.
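Such policies can be expressed as functions over the merged host and message labels. This Python sketch is hypothetical throughout: the label keys (`owner`, `location.region`, `product_category`), the index naming scheme, and the `splunk-eu`/`splunk-global` destinations are assumptions chosen to mirror the examples above.

```python
def apply_policies(message, host_labels):
    """Pick a destination and index from host labels plus message labels."""
    # Message labels override host labels when both define the same key
    labels = {**host_labels, **message["labels"]}
    # Compliance: data from EU hosts only goes to the EU deployment
    region = "eu" if labels.get("location.region") == "eu" else "global"
    if labels.get("product_category") in {"firewall", "security"}:
        index = "security"
    else:
        # Everything else goes to the owning team's own index
        index = f"team_{labels.get('owner', 'unassigned')}"
    return {"destination": f"splunk-{region}", "index": index}
```

Because the policy refers only to labels, adding a new device means labeling it in the inventory, not editing the routing rules.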

5 - Classify and reduce security data

Axoflow provides a robust classification system that actually verifies the data it receives, instead of relying on dedicated ports. This approach results in automatic data labeling, high-quality data, and volume reduction - out of the box, automated, without coding.

Classify the incoming data

Verifying which device or service a certain message belongs to is difficult: even a single data source (like an appliance) can have different kinds of messages, and you have to be able to recognize each one, uniquely. This requires:

- deep, device and vendor-specific understanding of the data, and also

- understanding of the syslog data formats and protocols, because oftentimes the data sources send invalid messages that you have to recognize and fix as part of the classification process.

Also, classification needs to be both reliable and performant. A naive implementation using regexps is neither; nevertheless, that’s the solution you find at the core of today’s ingestion pipelines. We at Axoflow understand that creating and maintaining such a classification database is difficult. This is why we decided to make classification a core functionality of the Axoflow Platform, so you will never need to write another parsing regexp. At the moment, Axoflow supports over 90 data sources from well-known vendors.
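To illustrate classification that verifies the content instead of trusting the receiving port, here is a toy Python sketch with two rules and no regular expressions. The rules are assumptions for illustration only, not entries from Axoflow's database. The Palo Alto rule relies on the log type being the fourth CSV column, as in the Palo Alto sample messages in this section; the payload is assumed to start after the syslog header.

```python
PALO_ALTO_TYPES = {"TRAFFIC", "THREAT", "SYSTEM", "CONFIG"}

def classify(payload):
    """Return (vendor, product, log type) for a message payload, or None."""
    tokens = payload.split()
    # SonicWall: key=value list starting with id=... that carries a serial number (sn=)
    if tokens and tokens[0].startswith("id=") and any(t.startswith("sn=") for t in tokens):
        return ("sonicwall", "firewall", "kv")
    # Palo Alto: CSV whose fourth column holds the log type, like TRAFFIC
    columns = payload.split(",")
    if len(columns) > 4 and columns[3] in PALO_ALTO_TYPES:
        return ("paloalto", "ngfw", columns[3].lower())
    return None
```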

Classification and the ability to process your security data in the pipeline also allows you to:

- Fix the incoming data (like the malformed firewall messages shown above) to add missing information, like hostname or timestamp,

- Identify the source host,

- Parse the log to access the information contained within,

- Redact sensitive information, like personally identifiable information (PII), before it gets sent to a SIEM or storage,

- Reduce the data volume and as a result, storage and SIEM costs,

- Enrich the data with contextual information, like adding labels based on the source or content of the data,

- Use all the above to route the data to the appropriate destinations, and finally

- Transform the data into an optimized format that the destination can reliably and effortlessly consume. This includes mapping your data to multiple different schemas if you use multiple analytic tools.
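As an example of redaction in the pipeline, the following Python sketch replaces sensitive values with a salted hash (pseudonymization), so analysts can still correlate events of the same user without seeing the raw value. This is one possible technique, chosen for illustration; the field names in `SENSITIVE_KEYS` and the placeholder salt are assumptions, and in practice the salt would be kept secret.

```python
import hashlib

SENSITIVE_KEYS = {"user", "src_user", "email"}  # hypothetical field names

def redact(fields, salt=b"change-me"):
    """Replace sensitive values with a salted hash so events stay correlatable."""
    redacted = {}
    for key, value in fields.items():
        if key in SENSITIVE_KEYS and value:
            digest = hashlib.sha256(salt + value.encode("utf-8")).hexdigest()
            redacted[key] = "sha256:" + digest[:16]
        else:
            redacted[key] = value
    return redacted
```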

Reduce data volume

Classifying and parsing the incoming data allows you to remove the parts that aren’t needed, for example, to:

- drop entire messages if they are redundant or not relevant from a security perspective, or

- remove parts of individual messages, like fields that are non-empty but convey no information (for example, that contain values such as “N/A” or “0”).
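Both kinds of reduction can be sketched in a few lines of Python. This is a simplified illustration: the placeholder values come from the text above, but whether a value like "0" is really uninformative depends on the specific field and device, and the `severity` label used to drop whole messages is hypothetical.

```python
EMPTY_VALUES = {"", "N/A", "0"}  # placeholders named above; decide per field in practice

def reduce_fields(fields):
    """Remove fields whose values convey no information."""
    return {key: value for key, value in fields.items() if value not in EMPTY_VALUES}

def keep_message(labels):
    """Drop entire messages that are not security-relevant (hypothetical rule)."""
    return labels.get("severity") != "debug"
```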

As this data reduction happens in the pipeline, before the data arrives in the SIEM or storage, it can save you significant costs, and also improves the quality of the data your detection engineers get to work with.

Palo Alto log reduction example

Let’s see an example of how data reduction works in Axoflow. Here is a log message from a Palo Alto firewall:

<165>Mar 26 18:41:06 us-east-1-dc1-b-edge-fw 1,2025/03/26 18:41:06,007200001056,TRAFFIC,end,1,2025/03/26 18:41:06,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,netbios-ns,vsys1,Trust,Untrust,ethernet1/1,ethernet1/2,To-Panorama,2025/03/26 18:41:06,8720,1,137,137,11637,137,0x400000,udp,allow,276,276,0,3,2025/03/26 18:41:06,2,any,0,2345136,0x0,192.168.0.0-192.168.255.255,192.168.0.0-192.168.255.255,0,3,0

Here’s what you can drop from this particular message:

- Redundant timestamps: Palo Alto log messages contain up to five practically identical timestamps (see the Receive time, Generated time, and High resolution timestamp fields in the Traffic Log Fields documentation):

- the syslog timestamp in the header (Mar 26 18:41:06),

- the time Panorama (the management plane of Palo Alto firewalls) collected the message (2025/03/26 18:41:06), and

- the time when the event was generated (2025/03/26 18:41:06).

The sample log message has five timestamps. Leaving only one timestamp can reduce the message size by up to 15%.

- The priority field (<165>) is identical in most messages and has no information value. While it takes up only about 1% of the size of the message, on high-traffic firewalls even this small change adds up to significant data savings.

- Several fields contain default or empty values that provide no information, for example, default internal IP ranges like 192.168.0.0-192.168.255.255. Removing such fields yields over 10% size reduction.

Note that when removing fields, we can delete only the value of the field, because the message format (CSV) relies on having a fixed order of columns for each message type. This also means that we have to individually check what can be removed from each of the 17 Palo Alto log types.
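The following Python sketch shows this value-only removal: it blanks selected columns but keeps every comma, so the column positions stay intact. The function is a hypothetical illustration; which columns to blank must come from the per-log-type analysis described above. The indices used in the usage example (6, 21, and 35, the extra timestamp columns of the sample message) are assumptions derived from the sample, so check them against the Traffic Log Fields documentation.

```python
import csv
import io

def reduce_csv(payload, drop_columns, default_values):
    """Blank out redundant or default-valued columns without shifting the rest."""
    row = next(csv.reader(io.StringIO(payload)))
    for i, value in enumerate(row):
        if i in drop_columns or value in default_values:
            row[i] = ""  # keep the column, drop only its value
    out = io.StringIO()
    csv.writer(out, lineterminator="").writerow(row)
    return out.getvalue()
```

Usage on the payload of the sample message (the part after the syslog header):

```python
reduced = reduce_csv(
    sample_payload,
    drop_columns={6, 21, 35},
    default_values={"192.168.0.0-192.168.255.255"},
)
```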

Palo Alto firewalls send this specific message when a connection is closed. They also send a message when a new connection is started, but that doesn’t contain any information that’s not available in the ending message, so it’s completely redundant and can be dropped. As every connection has a beginning and an end, this alone almost halves the size of the data stored per connection. For example:

Connection start message:

<113>Apr 11 10:58:18 us-east-1-dc1-b-edge-fw 1,10:58:18.421048,007200001056,TRAFFIC,end,1210,10:58:18.421048,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,ssl,vsys1,trust-users,untrust,ethernet1/2.30,ethernet1/1,To-Panorama,2020/10/09 17:43:54,36459,1,39681,443,32326,443,0x400053,tcp,allow,43135,24629,18506,189,2020/10/09 16:53:27,3012,laptops,0,1353226782,0x8000000000000000,10.0.0.0-10.255.255.255,United States,0,90,99,tcp-fin,16,0,0,0,,testhost,from-policy,,,0,,0,,N/A,0,0,0,0,ace432fe-a9f2-5a1e-327a-91fdce0077da,0

Connection end message:

<113>Apr 11 10:58:18 us-east-1-dc1-b-edge-fw 1,10:58:18.421048,007200001056,TRAFFIC,end,1210,10:58:18.421048,192.168.41.30,192.168.41.255,10.193.16.193,192.168.41.255,allow-all,,,ssl,vsys1,trust-users,untrust,ethernet1/2.30,ethernet1/1,To-Panorama,2020/10/09 17:43:54,36459,1,39681,443,32326,443,0x400053,tcp,allow,43135,24629,18506,189,2020/10/09 16:53:27,3012,laptops,0,1353226782,0x8000000000000000,10.0.0.0-10.255.255.255,United States,0,90,99,tcp-fin,16,0,0,0,,testhost,from-policy,,,0,,0,,N/A,0,0,0,0,ace432fe-a9f2-5a1e-327a-91fdce0077da,0
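Dropping the redundant connection start messages can be sketched as a simple filter. This Python sketch assumes the payload starts after the syslog header and that, as in the samples above, the fourth column holds the log type and the fifth holds the log subtype (`start` or `end` for these traffic logs); the function names are hypothetical.

```python
def is_redundant_start(payload):
    """True for Palo Alto TRAFFIC start logs, which the matching end log supersedes."""
    columns = payload.split(",", 5)
    return len(columns) > 4 and columns[3] == "TRAFFIC" and columns[4] == "start"

def drop_start_messages(payloads):
    """Keep every message except traffic start logs."""
    return [p for p in payloads if not is_redundant_start(p)]
```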

You can enable data reduction in your data flows using the Reduce processing step, and see the amount of data received and transferred in the flow on the Metrics page of the flow.

6 - Normalization

Axoflow normalizes incoming data and delivers it in a destination-friendly format and schema - covering keys, data types, streams, or indexes - rather than ingesting raw blobs. This includes consistent timestamps and attributes that downstream search queries, alerts, and detection engineers can immediately use. When a destination supports a specific normalization framework, Axoflow applies it by default. For vendor-specific schemas, such as Dynatrace Grail’s semantic dictionary, Axoflow adds native support to ensure seamless integration.

Why normalization matters

Logs and security data come in different formats from different devices, even when they refer to the same event, making it difficult to find, understand, and correlate the data. Security events and detection rules should be agnostic to how the data was collected or where it came from. Your detection engineers shouldn’t have to care about the exact name of the field in which each of your firewalls stores the IP address of the client: all such events should use a standard name. However, doing that properly requires expertise and a lot of legwork. There is no one-to-one mapping between sources and schemas: you need to check the schema descriptions, understand the original content, and know how the data will be used for analysis. The normalized version of an event helps your security teams most in detection and investigation, as it makes it easy to correlate events received from different vendors (or different devices).

Axoflow builds this intelligence in. By combining AI with our deep data and security expertise, Axoflow automatically normalizes your data in real time within the pipeline. It supports multiple normalization frameworks, including Elastic Common Schema (ECS) and Open Cybersecurity Schema Framework (OCSF), so your security teams can work with normalized data from the start, enabling them to write stronger detection rules and uncover insights faster. You can even send the same data to multiple destinations in the normalized format that the specific destination supports.
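The core idea of normalization (different vendor field names mapped to one standard name, with consistent data types) can be sketched as follows. The ECS field names `source.ip`, `destination.ip`, and `destination.port` are real ECS fields, but the vendor names and their field names in `FIELD_MAPS` are hypothetical, and this tiny mapping table is only an illustration, not Axoflow's normalization database.

```python
# Vendor-specific key -> ECS field (hypothetical vendors, illustrative subset)
FIELD_MAPS = {
    "vendor_a": {"client_ip": "source.ip", "server_ip": "destination.ip", "server_port": "destination.port"},
    "vendor_b": {"src_addr": "source.ip", "dst_addr": "destination.ip", "dst_port": "destination.port"},
}

def to_ecs(vendor, fields):
    """Rename vendor keys to ECS names, nest them on the dots, and fix data types."""
    doc = {}
    mapping = FIELD_MAPS.get(vendor, {})
    for key, value in fields.items():
        target = mapping.get(key)
        if target is None:
            continue  # this sketch simply drops unmapped fields
        if target.endswith(".port"):
            value = int(value)  # ECS defines ports as numbers, not strings
        node = doc
        *parents, leaf = target.split(".")
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return doc
```

After normalization, a detection rule can query `source.ip` regardless of which firewall produced the event.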

We create and maintain our normalization database using an AI-backed process supervised by our engineers, so development is rapid while quality and consistency remain high.

To sum up, normalizing data:

- simplifies data ingestion for your SIEM

- improves correlation and detection

- speeds up investigations

- decreases vendor lock-in and improves flexibility.

7 - Reliable transport

Between its components, Axoflow transports data using the reliable OpenTelemetry protocol (OTLP) for high performance, and to avoid losing messages. Under the hood, OTLP uses gRPC with protocol buffers. Compared to other log transport protocols, it has superior performance, consumes less bandwidth (thanks to the compact protocol buffer encoding), and also provides features like:

- on-the-wire compression

- authentication

- application layer acknowledgement

- batched data sending

- multi-worker scalability on the client and the server.
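To illustrate how batching, compression, and application layer acknowledgement work together to avoid data loss, here is a conceptual Python sketch. It is not OTLP or gRPC: the `BatchingSender` class, the JSON encoding, and the `transport` callable (which returns `True` when the receiver acknowledges a batch) are assumptions made for illustration.

```python
import gzip
import json

class BatchingSender:
    """Toy sender: batch records, gzip each batch, keep it until acknowledged."""

    def __init__(self, transport, batch_size=100):
        self.transport = transport    # callable(bytes) -> bool, True means acknowledged
        self.batch_size = batch_size
        self.pending = []             # records not yet batched
        self.unacked = []             # compressed batches awaiting acknowledgement

    def send(self, record):
        self.pending.append(record)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        if self.pending:
            payload = json.dumps(self.pending).encode("utf-8")
            self.unacked.append(gzip.compress(payload))
            self.pending = []
        # Retry every unacknowledged batch; keep those that are still not confirmed
        self.unacked = [batch for batch in self.unacked if not self.transport(batch)]
```

The key design point is that a batch is discarded only after the receiver confirms it, so a temporarily unavailable receiver delays data instead of losing it.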