This chapter describes the installation and configuration of AxoConsole in different scenarios.

AxoConsole is available as:

- an SaaS service,

- on-premises deployment,

- Kubernetes deployment.


This guide shows you how to install AxoConsole on a local, on-premises virtual machine using k3s and Helm. It also covers installing other components, like the NGINX ingress controller and cert-manager. Since this is a single-instance deployment, we don’t recommend using it in production environments. For additional steps and configurations needed in production environments, contact our support team.

At a high level, the deployment consists of the following steps:

To install AxoConsole, you’ll need the following:

The URL for the AxoConsole Helm chart. You’ll receive this URL from our team. You can request it using the contact form.

CAUTION:

Don’t start the Install AxoConsole process until you’ve received the chart URL.

A license key for AxoConsole. You’ll receive this from our team. You can request it using the contact form.

A host that meets the system requirements.

Network access set up for the host.

Supported operating systems: Ubuntu 24.04, Red Hat 9 and compatible (tested with AlmaLinux 9).

The virtual machine (VM) must have at least:

This setup can handle about 100 AxoRouter instances and 1000 data source hosts.

For reference, a real-life scenario that handles 100 AxoRouter and 3000 data source hosts with 30-day metric retention would need:

For details on sizing, contact our support team.

You’ll need to have access to a user with sudo privileges.

The hosts must be able to access the following domains related to AxoConsole:

When using AxoConsole SaaS:

- <your-tenant-id>.cloud.axoflow.io: HTTPS traffic on TCP port 443, needed to download the binaries for Axoflow software (like Axolet and AxoRouter).

- kcp.<your-tenant-id>.cloud.axoflow.io: HTTPS (mutual TLS) traffic on TCP port 443 for management traffic.

- telemetry.<your-tenant-id>.cloud.axoflow.io: HTTPS (mutual TLS) traffic on TCP port 443, where Axolet sends the metrics of the host.

- us-docker.pkg.dev: HTTPS traffic on TCP port 443, for pulling container images (AxoRouter only).

When using an on-premise AxoConsole:

The following domains should point to AxoConsole IP address to access Axoflow from your desktop and AxoRouter hosts:

- your-host.your-domain: The main domain of your AxoConsole deployment.

- authenticate.your-host.your-domain: A subdomain used for authentication.

- idp.your-host.your-domain: A subdomain for the identity provider.

The AxoConsole host must have the following open ports:

When installing Axoflow agent for Windows or Linux:

- github.com: HTTPS traffic on TCP port 443, for downloading installer packages.

Complete the following steps to install k3s and some dependencies on the virtual machine.

Run getenforce to check the status of SELinux.

Check the /etc/selinux/config file and make sure that the settings match the results of the getenforce output (for example, SELINUX=disabled). k3s supports SELinux, but the installation can fail if there is a mismatch between the configured and actual settings.
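The comparison in the two steps above can be scripted. The following is a minimal sketch, not part of AxoConsole or k3s: `selinux_mode_matches` is a hypothetical helper that lowercases the getenforce result (Enforcing, Permissive, or Disabled) and compares it with the last uncommented `SELINUX=` line of the config file.

```shell
# Hypothetical helper (not part of k3s or AxoConsole): compare the runtime
# SELinux mode with the mode configured in an SELinux config file.
# Usage: selinux_mode_matches <runtime-mode> [config-file]
selinux_mode_matches() {
    runtime=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    config_file=${2:-/etc/selinux/config}
    # Take the last uncommented SELINUX= line; SELINUXTYPE= does not match.
    configured=$(sed -n 's/^[[:space:]]*SELINUX=[[:space:]]*\([A-Za-z]*\).*/\1/p' "$config_file" \
        | tail -n 1 | tr '[:upper:]' '[:lower:]')
    if [ "$runtime" = "$configured" ]; then
        echo "OK: SELinux is $runtime, matching $config_file"
        return 0
    fi
    echo "MISMATCH: getenforce reports '$runtime' but $config_file sets '$configured'" >&2
    return 1
}
```

Run it as `selinux_mode_matches "$(getenforce)"`, and fix any reported mismatch before installing k3s.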

Install k3s.

Run the following command:

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--disable traefik --disable metrics-server" K3S_KUBECONFIG_MODE="644" sh -s -

Check that the k3s service is running:

systemctl status k3s

Install the NGINX ingress controller.

NGINX_VERSION="v4.11.3"
sudo tee /var/lib/rancher/k3s/server/manifests/ingress-nginx.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: ingress-nginx
  namespace: kube-system
spec:
  createNamespace: true
  chart: ingress-nginx
  repo: https://kubernetes.github.io/ingress-nginx
  version: $NGINX_VERSION
  targetNamespace: ingress-nginx
  valuesContent: |-
    controller:
      extraArgs:
        enable-ssl-passthrough: true
      hostNetwork: true
      hostPort:
        enabled: true
      service:
        enabled: false
      admissionWebhooks:
        enabled: false
      updateStrategy:
        strategy:
          type: Recreate
EOF

Install the cert-manager controller.

Run the command:

CM_VERSION="1.16.2"
sudo tee /var/lib/rancher/k3s/server/manifests/cert-manager.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: cert-manager
  namespace: kube-system
spec:
  createNamespace: true
  chart: cert-manager
  version: $CM_VERSION
  repo: https://charts.jetstack.io
  targetNamespace: cert-manager
  valuesContent: |-
    crds:
      enabled: true
    enableCertificateOwnerRef: true
EOF

Wait for the helm chart to be installed:

while ! kubectl get job helm-install-cert-manager -n kube-system; do
  sleep 1
done
kubectl wait --for=condition=complete job/helm-install-cert-manager -n kube-system --timeout=300s
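The `while` loop above waits indefinitely if the job never appears (for example, if the manifest has a typo). A bounded alternative is sketched below; `retry_until` is a hypothetical helper, not a kubectl feature, and the 120-second limit in the usage line is an arbitrary assumption.

```shell
# Hypothetical helper: retry a command once per second until it succeeds or
# <limit> seconds have passed. Returns 0 on success, 1 on timeout.
# Usage: retry_until <limit-seconds> <command> [args...]
retry_until() {
    limit=$1
    shift
    waited=0
    until "$@" >/dev/null 2>&1; do
        if [ "$waited" -ge "$limit" ]; then
            echo "timed out after ${limit}s: $*" >&2
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}
```

For example: `retry_until 120 kubectl get job helm-install-cert-manager -n kube-system && kubectl wait --for=condition=complete job/helm-install-cert-manager -n kube-system --timeout=300s`.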

Complete the following steps to deploy the AxoConsole Helm chart.

Set the following environment variables as needed for your deployment.

The domain name for your AxoConsole deployment. This must point to the IP address of the VM you’re installing AxoConsole on.

BASE_HOSTNAME=your-host.your-domain

$BASE_HOSTNAME must end with a valid top-level domain, for example, .com or .io.

The license key of AxoConsole. You’ll receive this from our team. You can request it using the contact form.

LICENSE_KEY="your-license-key"

The version number of AxoConsole you want to deploy.

VERSION="0.73.0"

The internal IP address of the virtual machine. This is needed so the authentication service can communicate with the identity provider without relying on DNS.

VM_IP_ADDRESS=$(hostname -I | cut -d' ' -f1)

Check that the above command returned the correct IP address. It might not be accurate if the host has multiple IP addresses.

The URL of the Axoflow Helm chart. You’ll receive this URL from our team. You can request it using the contact form.

AXOFLOW_HELM_CHART_URL="<the-chart-URL-received-from-Axoflow>"
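Before writing the manifest, a short pre-flight check can catch common mistakes: picking the wrong address on a host with several IPv4 addresses, leaving a variable unset (inside the unquoted `<<EOF` heredocs used in this guide, an unset variable silently becomes an empty string), or missing tools. The helpers below are a hypothetical sketch; `first_ipv4`, `require_vars`, and `require_commands` are not part of AxoConsole, and `first_ipv4` deliberately skips IPv6 addresses, unlike the `cut` command above.

```shell
# Hypothetical pre-flight helpers; none of these ship with AxoConsole.

# Print the first IPv4 address from `hostname -I` output (IPv6 is skipped)
# and warn on stderr when the host has more than one IPv4 address.
first_ipv4() {
    count=0
    first=""
    for addr in $1; do               # the list is space-separated
        case "$addr" in
            *:*) continue ;;         # skip IPv6 addresses
        esac
        count=$((count + 1))
        if [ -z "$first" ]; then first=$addr; fi
    done
    if [ "$count" -gt 1 ]; then
        echo "warning: $count IPv4 addresses found; check that $first is correct" >&2
    fi
    [ -n "$first" ] && printf '%s\n' "$first"
}

# Fail if a named variable is unset, empty, or still holds a <placeholder>.
require_vars() {
    rc=0
    for name in "$@"; do
        eval "value=\${$name:-}"
        case "$value" in
            "") echo "error: $name is not set" >&2; rc=1 ;;
            *"<"*">"*) echo "error: $name still holds a placeholder: $value" >&2; rc=1 ;;
        esac
    done
    return "$rc"
}

# Fail if any of the named commands is missing from PATH.
require_commands() {
    rc=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing command: $cmd" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

For example: `VM_IP_ADDRESS=$(first_ipv4 "$(hostname -I)")`, then `require_vars BASE_HOSTNAME LICENSE_KEY VERSION VM_IP_ADDRESS AXOFLOW_HELM_CHART_URL`. If you plan to set the password manually, `require_commands uuidgen htpasswd` confirms that the tools it needs are installed.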

Install AxoConsole. You can either let the chart create a password, or you can set an email and password manually.

Add password automatically: The following configuration file enables automatic password creation. If this basic setup is working, you can configure another authentication provider.

sudo tee /var/lib/rancher/k3s/server/manifests/axoflow.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: axoflow
  namespace: kube-system
spec:
  createNamespace: true
  targetNamespace: axoflow
  version: $VERSION
  chart: $AXOFLOW_HELM_CHART_URL
  failurePolicy: abort
  valuesContent: |-
    baseHostName: $BASE_HOSTNAME
    licenseKey: $LICENSE_KEY
    dex:
      localIP: $VM_IP_ADDRESS
EOF

To retrieve the autogenerated email and password, use the following command.

kubectl -n axoflow get secret axoflow-admin -o go-template='Email: {{ .data.email | base64decode }}{{ printf "\n" }}Password: {{ .data.password | base64decode }}{{ printf "\n" }}'

Record the email and password; you’ll need them to log in to AxoConsole.

Set password manually: If you’d like to set the email and password manually, generate a unique identifier for the administrator user using uuidgen, hash the password, then create the secret storing the required information. Note that:

- On Ubuntu, uuidgen is part of the uuid-runtime package. If needed, install it by running sudo apt install uuid-runtime.

- Hashing the password requires the htpasswd tool, which is part of the httpd-tools package on Red Hat (install it using sudo dnf install httpd-tools), and the apache2-utils package on Ubuntu (install it using sudo apt install apache2-utils).

ADMIN_USERNAME="<your admin username>"
ADMIN_EMAIL="<your admin email>"
ADMIN_PASSWORD="<your admin password>"
ADMIN_UUID=$(uuidgen)
# Quote the password so that spaces or shell special characters survive intact.
ADMIN_PASSWORD_HASH=$(printf '%s\n' "$ADMIN_PASSWORD" | htpasswd -BinC 10 "$ADMIN_USERNAME" | cut -d: -f2)

sudo tee /var/lib/rancher/k3s/server/manifests/axoflow.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: axoflow
  namespace: kube-system
spec:
  createNamespace: true
  targetNamespace: axoflow
  version: $VERSION
  chart: $AXOFLOW_HELM_CHART_URL
  failurePolicy: abort
  valuesContent: |-
    baseHostName: $BASE_HOSTNAME
    licenseKey: $LICENSE_KEY
    pomerium:
      policy:
        emails:
          - $ADMIN_EMAIL
    dex:
      localIP: $VM_IP_ADDRESS
    defaultCredential:
      create: false
EOF

kubectl -n axoflow create secret generic axoflow-admin --from-literal=username="$ADMIN_USERNAME" --from-literal=password_hash="$ADMIN_PASSWORD_HASH" --from-literal=email="$ADMIN_EMAIL" --from-literal=uuid="$ADMIN_UUID"

Wait a few minutes until everything is installed. Check that every component has been installed and is running:

kubectl get pods -A

The output should be similar to:

NAMESPACE NAME READY STATUS RESTARTS AGE
axoflow axolet-dist-55ccbc4759-q7l7n 1/1 Running 0 2d3h
axoflow chalco-6cfc74b4fb-mwbbz 1/1 Running 0 2d3h
axoflow configure-kcp-1-x6js6 0/1 Completed 2 2d3h
axoflow configure-kcp-cert-manager-1-wvhhp 0/1 Completed 1 2d3h
axoflow configure-kcp-token-1-xggg7 0/1 Completed 1 2d3h
axoflow controller-manager-5ff8d5ffd-f6xtq 1/1 Running 0 2d3h
axoflow dex-5b9d7b88b-bv82d 1/1 Running 0 2d3h
axoflow kcp-0 1/1 Running 1 (2d3h ago) 2d3h
axoflow kcp-cert-manager-0 1/1 Running 0 2d3h
axoflow pomerium-56b8b9b6b9-vt4t8 1/1 Running 0 2d3h
axoflow prometheus-server-0 2/2 Running 0 2d3h
axoflow telemetryproxy-65f77dcf66-4xr6b 1/1 Running 0 2d3h
axoflow ui-6dc7bdb4c5-frn2p 2/2 Running 0 2d3h
cert-manager cert-manager-74c755695c-5fs6v 1/1 Running 5 (144m ago) 2d3h
cert-manager cert-manager-cainjector-dcc5966bc-9kn4s 1/1 Running 0 2d3h
cert-manager cert-manager-webhook-dfb76c7bd-z9kqr 1/1 Running 0 2d3h
ingress-nginx ingress-nginx-controller-bcc7fb5bd-n5htp 1/1 Running 0 2d3h
kube-system coredns-ccb96694c-fhk8f 1/1 Running 0 2d3h
kube-system helm-install-axoflow-b9crk 0/1 Completed 0 2d3h
kube-system helm-install-cert-manager-88lt7 0/1 Completed 0 2d3h
kube-system helm-install-ingress-nginx-qvkhf 0/1 Completed 0 2d3h
kube-system local-path-provisioner-5cf85fd84d-xdlnr 1/1 Running 0 2d3h
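With more than twenty pods, scanning this list by eye is error-prone. The sketch below filters `kubectl get pods -A` output down to the pods that still need attention. `unhealthy_pods` is a hypothetical helper; it assumes the default column layout shown above (READY in column 3, STATUS in column 4) and treats Completed jobs as healthy.

```shell
# Hypothetical helper: read `kubectl get pods -A` output on stdin and print
# "namespace/name READY STATUS" for every pod that is neither Completed nor
# Running with all of its containers ready.
unhealthy_pods() {
    awk 'NR > 1 {
        split($3, ready, "/")                # READY column, for example "1/2"
        if ($4 == "Completed") next          # finished jobs are expected
        if ($4 != "Running" || ready[1] != ready[2])
            print $1 "/" $2 " " $3 " " $4
    }'
}
```

Run `kubectl get pods -A | unhealthy_pods`; empty output means that every pod is either running and ready or has completed.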

(For details on what the different services do, see the service reference.)

If the domain name of AxoConsole cannot be resolved from your desktop, add it to the /etc/hosts file in the following format. Use an IP address of AxoConsole that can be accessed from your desktop.

<AXOFLOW-CONSOLE-IP-ADDRESS> <your-host.your-domain> idp.<your-host.your-domain> authenticate.<your-host.your-domain>
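If you repeat this step (for example, after reinstalling), it is easy to end up with duplicate lines. The following minimal sketch appends the entry in the format above only when the base hostname is not already listed. `add_console_hosts_entry` is a hypothetical helper; writing to /etc/hosts requires root, so run it from a root shell or point it at a copy of the file.

```shell
# Hypothetical helper: append "<ip> <base> idp.<base> authenticate.<base>"
# to a hosts file unless <base> already appears as a hostname in it.
# Usage: add_console_hosts_entry <ip> <base-hostname> [hosts-file]
add_console_hosts_entry() {
    ip=$1
    base=$2
    hosts_file=${3:-/etc/hosts}
    # Is <base> listed as a hostname (field 2 or later) on a non-comment line?
    if awk -v h="$base" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                         END { exit !found }' "$hosts_file"; then
        echo "$base is already in $hosts_file; leaving it unchanged" >&2
        return 0
    fi
    printf '%s %s idp.%s authenticate.%s\n' "$ip" "$base" "$base" "$base" >> "$hosts_file"
}
```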

Open the https://<your-host.your-domain> URL in your browser.

The on-premises deployment of AxoConsole uses a self-signed certificate. If your browser warns you about the certificate, accept the risk and continue.

Use the email address and password you got or set in the installation step to log in to AxoConsole.

| Service Name | Namespace | Purpose | Function |
|---|---|---|---|
| KCP | axoflow | Backend API | Kubernetes-like service with a built-in database that manages all the settings of the system |
| Chalco | axoflow | Frontend API | Serves the API calls of the UI and implements the business logic for the UI |
| Controller-Manager | axoflow | Backend service | Reacts to state changes in the business entities and manages the business logic of the backend |
| Telemetry Proxy | axoflow | Backend API | Receives the telemetry of the agents |
| UI | axoflow | Dashboard | The frontend for AxoConsole |
| Prometheus | axoflow | Backend API/service | Monitoring component that stores time-series data, provides a query API, and manages alerting rules |
| Alertmanager | axoflow | Backend API/service | Monitoring component that sends alerts based on alerting rules |
| Dex | axoflow | Identity connector/proxy | Allows customers to use their own identity provider (Google, LDAP, and so on) |
| Pomerium | axoflow | HTTP proxy | Provides policy-based access to the Frontend API (Chalco) |
| Axolet Dist | axoflow | Backend API | Static artifact store that contains the agent binaries |
| Cert Manager (kcp) | axoflow | Automated certificate management tool | Manages certificates for agents |
| Cert Manager | cert-manager | Automated certificate management tool | Manages certificates for Axoflow components (Backend API, HTTP Proxy) |
| NGINX Ingress Controller | ingress-nginx | HTTP proxy | Routes HTTP traffic between multiple frontend and backend APIs |

These sections show you how to configure on-premises and Kubernetes deployments of AxoConsole to use different authentication backends.

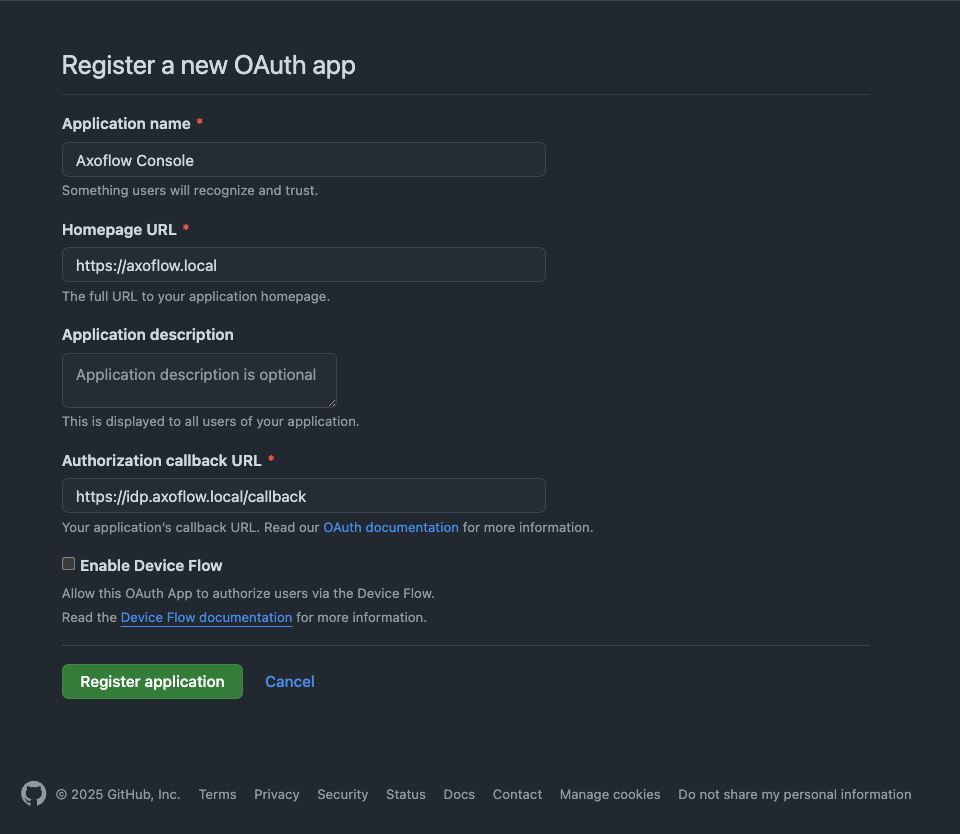

This section shows you how to use GitHub OAuth2 as an authentication backend for AxoConsole. It is assumed that you already have a GitHub organization. Complete the following steps.

Register a new OAuth GitHub application for your organization. (For testing, you can create it under your personal account.) Make sure to:

Set the Homepage URL to the URL of your AxoConsole deployment: https://<your-console-host.your-domain>, for example, https://axoflow-console.example.com.

Set the Authorization callback URL to: https://auth.<your-console-host.your-domain>/callback, for example, https://auth.axoflow-console.example.com/callback.

Save the Client ID of the app; you’ll need it to configure AxoConsole.

Generate a Client secret and save it; you’ll need it to configure AxoConsole.

Configure authentication by editing the spec.dex.config section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

(Optional) If you’ve used our earlier example, delete the spec.dex.config.staticPasswords section.

Add the spec.dex.config.connectors section to the file, like this:

dex:
  enabled: true
  localIP: $VM_IP_ADDRESS
  config:
    create: true
    connectors:
    - type: github
      # Required field for connector id.
      id: github
      # Required field for connector name.
      name: GitHub
      config:
        # Credentials can be string literals or pulled from the environment.
        clientID: <ID-of-GitHub-application>
        clientSecret: <Secret-of-GitHub-application>
        redirectURI: <idp.your-host.your-domain/callback>
        orgs:
        - name: <your-GitHub-organization>

Note that if you have a valid domain name that points to the VM, you can omit the localIP: $VM_IP_ADDRESS line.

Edit the following fields. For details on the configuration parameters, see the Dex GitHub connector documentation.

- connectors.config.clientID: The ID of the GitHub OAuth application.

- connectors.config.clientSecret: The client secret of the GitHub OAuth application.

- connectors.config.redirectURI: The callback URL of the GitHub OAuth application: https://auth.<your-console-host.your-domain>/callback, for example, https://auth.axoflow-console.example.com/callback.

- connectors.config.orgs: List of the GitHub organizations whose members can access AxoConsole. Restrict access to your organization.

Configure authorization in the spec.pomerium.policy section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

List the users who need read and write access in the emails section, and the users who need read-only access in the readOnly.emails section. Use the primary GitHub email addresses of your users, otherwise the authorization will fail.

policy:
  emails:
    - username@yourdomain.com
  domains: []
  groups: []
  claim/groups: []
  readOnly:
    emails:
      - readonly-username@yourdomain.com
    domains: []
    groups: []
    claim/groups: []

For details on authorization settings, see Authorization.

Save the file.
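Before restarting Dex, it can be worth confirming that no `<...>` placeholder from the example (such as `<ID-of-GitHub-application>`) was left in the manifest. This is a minimal sketch; `check_placeholders` is a hypothetical helper, and it does not catch example values without angle brackets, such as `username@yourdomain.com`.

```shell
# Hypothetical helper: print (with line numbers) every line of a file that
# still contains an <angle-bracket> placeholder. Returns 0 if none remain.
check_placeholders() {
    if grep -n '<[^<>]*>' "$1"; then
        echo "error: $1 still contains placeholders (see the lines above)" >&2
        return 1
    fi
    return 0
}
```

For example: `check_placeholders /var/lib/rancher/k3s/server/manifests/axoflow.yaml`.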

Restart the dex deployment after changing the connector:

kubectl rollout restart deployment/dex -n axoflow

Expected output:

deployment.apps/dex restarted

Open the main page of your AxoConsole deployment in your browser. You’ll be redirected to the GitHub authentication page.

After completing the GitHub authentication you can access AxoConsole.

You can troubleshoot common errors by checking the Dex logs: kubectl logs -n axoflow deployment/dex

If you run into problems setting up the authentication or authorization, contact our support team.

This section shows you how to use Google OpenID Connect as an authentication backend for AxoConsole. It is assumed that you already have a Google organization and Google Cloud Console access. Complete the following steps.

To use Google authentication, AxoConsole must be deployed on a publicly accessible domain name (the $BASE_HOSTNAME must end with a valid top-level domain, for example, .com or .io.)
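A rough local check of this requirement is sketched below. `check_base_hostname` is a hypothetical helper and only a heuristic: it rejects hostnames without a dot, well-known private or special-use suffixes (the list is an assumption, not exhaustive), and last labels that are not alphabetic or punycode. It does not verify that the domain is registered or publicly resolvable.

```shell
# Hypothetical heuristic (not a registry lookup): reject hostnames whose last
# label clearly is not a public top-level domain.
# Usage: check_base_hostname "$BASE_HOSTNAME"
check_base_hostname() {
    host=$1
    case "$host" in
        *.*) ;;
        *) echo "invalid: '$host' has no top-level domain" >&2; return 1 ;;
    esac
    tld=$(printf '%s' "${host##*.}" | tr '[:upper:]' '[:lower:]')
    case "$tld" in
        local|localhost|localdomain|lan|home|internal|test|invalid|example)
            echo "invalid: '.$tld' is a private or special-use name" >&2
            return 1 ;;
    esac
    # Public TLDs are letters only (or punycode such as xn--p1ai), 2+ characters.
    if printf '%s' "$tld" | grep -Eq '^([a-z]{2,}|xn--[a-z0-9-]+)$'; then
        echo "ok: $host"
        return 0
    fi
    echo "invalid: '.$tld' does not look like a top-level domain" >&2
    return 1
}
```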

Configure OpenID Connect and create an OpenID credential for a Web application.

When creating the credential, set its authorized redirect URI to https://auth.<your-console-host.your-domain>/callback, for example, https://auth.axoflow-console.example.com/callback.

For details on setting up OpenID Connect, see the official documentation.

Configure authentication by editing the spec.dex.config section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

(Optional) If you’ve used our earlier example, delete the spec.dex.config.staticPasswords section.

Add the spec.dex.config.connectors section to the file, like this:

connectors:
- type: google
  id: google
  name: Google
  config:
    # Connector config values starting with a "$" will read from the environment.
    clientID: <ID-of-Google-application>
    clientSecret: <Secret-of-Google-application>
    # Dex's issuer URL + "/callback"
    redirectURI: <idp.your-host.your-domain/callback>
    # Set the value of `prompt` query parameter in the authorization request
    # The default value is "consent" when not set.
    promptType: consent
    # Google supports whitelisting allowed domains when using G Suite
    # (Google Apps). The following field can be set to a list of domains
    # that can log in:
    #
    hostedDomains:
     - <your-domain>

Edit the following fields. For details on the configuration parameters, see the Dex Google connector documentation.

- connectors.config.clientID: The ID of the Google application.

- connectors.config.clientSecret: The client secret of the Google application.

- connectors.config.redirectURI: The callback URL of the Google application: https://auth.<your-console-host.your-domain>/callback, for example, https://auth.axoflow-console.example.com/callback.

- connectors.config.hostedDomains: The domain where AxoConsole is deployed, for example, example.com. Your users must have email addresses for this domain at Google.

Configure authorization in the spec.pomerium.policy section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

List the users who need read and write access in the emails section, and the users who need read-only access in the readOnly.emails section. The email addresses of your users must have the same domain as the one set under `connectors.config.hostedDomains`, otherwise the authorization will fail.

policy:
  emails:
    - username@yourdomain.com
  domains: []
  groups: []
  claim/groups: []
  readOnly:
    emails:
      - readonly-username@yourdomain.com
    domains: []
    groups: []
    claim/groups: []
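Because every listed address must belong to the hosted domain, a quick local check can flag typos before you restart Dex. `emails_outside_domain` is a hypothetical helper; it compares only the part after the last `@`, case-insensitively, and does not contact Google.

```shell
# Hypothetical helper: print every email address whose domain differs from
# the hosted domain. Returns 1 if any address would fail the domain check.
# Usage: emails_outside_domain <domain> <email>...
emails_outside_domain() {
    domain=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    shift
    bad=0
    for email in "$@"; do
        # Strip everything up to and including the last "@".
        email_domain=$(printf '%s' "${email##*@}" | tr '[:upper:]' '[:lower:]')
        if [ "$email_domain" != "$domain" ]; then
            printf '%s\n' "$email"
            bad=1
        fi
    done
    return "$bad"
}
```

For example, `emails_outside_domain example.com username@example.com readonly-username@example.com` prints nothing and succeeds when both addresses match.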

For details on authorization settings, see Authorization.

Save the file.

Restart the dex deployment after changing the connector:

kubectl rollout restart deployment/dex -n axoflow

Expected output:

deployment.apps/dex restarted

Open the main page of your AxoConsole deployment in your browser. You’ll be redirected to the Google authentication page.

After completing the Google authentication you can access AxoConsole.

You can troubleshoot common errors by checking the Dex logs: kubectl logs -n axoflow deployment/dex

If you run into problems setting up the authentication or authorization, contact our support team.

This section shows you how to use LDAP as an authentication backend for AxoConsole. The examples use the public demo service of FreeIPA as an LDAP server. It is assumed that you already have an LDAP server in place. Complete the following steps.

Configure authentication by editing the spec.dex.config section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

(Optional) If you’ve used our earlier example, delete the spec.dex.config.staticPasswords section.

Add the spec.dex.config.connectors section to the file, like this:

CAUTION:

This example shows a simple configuration suitable for testing. In production environments, make sure to:

- configure TLS encryption to access your LDAP server

- retrieve the bind password from a vault or environment variable. Note that if the bind password contains the `$` character, you must set it in an environment variable and pass it like `bindPW: $LDAP_BINDPW`.

dex:
  enabled: true
  localIP: $VM_IP_ADDRESS
  config:
    create: true
    connectors:
    - type: ldap
      name: OpenLDAP
      id: ldap
      config:
        host: ipa.demo1.freeipa.org
        insecureNoSSL: true
        # This would normally be a read-only user.
        bindDN: uid=admin,cn=users,cn=accounts,dc=demo1,dc=freeipa,dc=org
        bindPW: Secret123
        usernamePrompt: Email Address
        userSearch:
          baseDN: dc=demo1,dc=freeipa,dc=org
          filter: "(objectClass=person)"
          username: mail
          # "DN" (case sensitive) is a special attribute name. It indicates that
          # this value should be taken from the entity's DN not an attribute on
          # the entity.
          idAttr: uid
          emailAttr: mail
          nameAttr: cn
        groupSearch:
          baseDN: dc=demo1,dc=freeipa,dc=org
          filter: "(objectClass=groupOfNames)"
          userMatchers:
            # A user is a member of a group when their DN matches
            # the value of a "member" attribute on the group entity.
          - userAttr: DN
            groupAttr: member
          # The group name should be the "cn" value.
          nameAttr: cn

Edit the following fields. For details on the configuration parameters, see the Dex LDAP connector documentation.

- connectors.config.host: The hostname and optionally the port of the LDAP server in “host:port” format.

- connectors.config.bindDN and connectors.config.bindPW: The DN and password for an application service account that the connector uses to search for users and groups.

- connectors.config.userSearch.baseDN and connectors.config.groupSearch.baseDN: The base DN for the user and group search.

Configure authorization in the spec.pomerium.policy section of the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

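List the LDAP groups whose members need read and write access under claim/groups (the managers group in the example), and the groups whose members need read-only access under readOnly.claim/groups (the employee group in the example).

policy:
  emails: []
  domains: []
  groups: []
  claim/groups:
    - managers
  readOnly:
    emails: []
    domains: []
    groups: []
    claim/groups:
      - employee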

For details on authorization settings, see Authorization.

Save the file.

Restart the dex deployment after changing the connector:

kubectl rollout restart deployment/dex -n axoflow

Expected output:

deployment.apps/dex restarted

You can troubleshoot common errors by checking the Dex logs: kubectl logs -n axoflow deployment/dex

If you run into problems setting up the authentication or authorization, contact our support team.

These sections show you how to configure the authorization of AxoConsole with different authentication backends.

You can configure authorization in the spec.pomerium.policy section of the AxoConsole manifest. In on-premise deployments, the manifest is in the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file.

You can list individual email addresses and user groups to have read and write (using the keys under spec.pomerium.policy) and read-only (using the keys under spec.pomerium.policy.readOnly) access to AxoConsole. Which key to use depends on the authentication backend configured for AxoConsole:

emails: Email addresses used with static passwords, GitHub, and Google authentication.

With GitHub authentication, use the primary GitHub email addresses of your users, otherwise the authorization will fail.

claim/groups: LDAP groups used with LDAP authentication. For example:

policy:
  emails: []
  domains: []
  groups: []
  claim/groups:
    - managers
  readOnly:
    emails: []
    domains: []
    groups: []
    claim/groups:
      - employee

When using AxoRouter with an on-premises AxoConsole deployment, you have to complete the following steps on the hosts you want to deploy AxoRouter on. These steps are specific to on-premises AxoConsole deployments, and are not needed when using the SaaS AxoConsole.

If the domain name of AxoConsole cannot be resolved from the AxoRouter host, add it to the /etc/hosts file of the AxoRouter host in the following format. Use an IP address of AxoConsole that can be accessed from the AxoRouter host.

<AXOFLOW-CONSOLE-IP-ADDRESS> <AXOFLOW-CONSOLE-BASE-URL> kcp.<AXOFLOW-CONSOLE-BASE-URL> telemetry.<AXOFLOW-CONSOLE-BASE-URL> idp.<AXOFLOW-CONSOLE-BASE-URL> authenticate.<AXOFLOW-CONSOLE-BASE-URL>

Import AxoConsole certificates to AxoRouter hosts.

On the AxoConsole host: Run the following command to extract the AxoConsole CA certificate. The AxoRouter host will need this certificate to download the installation binaries.

kubectl get secret -n axoflow pomerium-certificates -o=jsonpath='{.data.ca\.crt}'|base64 -d > axoflow-ca.crt

Copy this file to the AxoRouter hosts.

On the AxoRouter hosts: Add the certificate file extracted from the AxoConsole host to the system trust store.

- On Red Hat-based hosts: Copy the file to the /etc/pki/ca-trust/source/anchors/ folder, then run sudo update-ca-trust extract. (If needed, install the ca-certificates package.)

- On Ubuntu-based hosts: Copy the file to the /usr/local/share/ca-certificates/ folder, then run sudo update-ca-certificates.

- Verify the result: curl -I https://<your-host.your-domain> should give you a valid HTTP/2 302 response.
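If you prepare several AxoRouter hosts with a single script, the distribution-specific choice can be automated. The sketch below is an assumption-laden helper, not an Axoflow tool: `ca_family` reads the standard `ID` and `ID_LIKE` keys of /etc/os-release, and the hypothetical `install_console_ca` then runs the copy and refresh commands for Red Hat-based or Ubuntu/Debian-based hosts. It expects the certificate file to be named axoflow-ca.crt, as in the extraction command.

```shell
# Hypothetical helper: map an os-release file to a CA trust store family.
# Prints "rhel" or "debian"; fails for anything else.
ca_family() {
    os_release=${1:-/etc/os-release}
    # ID and ID_LIKE are standard os-release keys, for example
    # ID="almalinux" and ID_LIKE="rhel centos fedora".
    ids=$(sed -n 's/^ID\(_LIKE\)\{0,1\}=//p' "$os_release" | tr -d '"' | tr '\n' ' ')
    case " $ids " in
        *" rhel "*|*" fedora "*|*" centos "*) echo rhel ;;
        *" debian "*|*" ubuntu "*) echo debian ;;
        *) echo "unsupported distribution: $ids" >&2; return 1 ;;
    esac
}

# Hypothetical helper: copy the AxoConsole CA certificate into the trust
# store of this host and refresh it, using the family-specific commands.
install_console_ca() {
    cert=${1:-axoflow-ca.crt}
    case "$(ca_family)" in
        rhel) sudo cp "$cert" /etc/pki/ca-trust/source/anchors/ && sudo update-ca-trust extract ;;
        debian) sudo cp "$cert" /usr/local/share/ca-certificates/ && sudo update-ca-certificates ;;
        *) return 1 ;;
    esac
}
```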

Now you can deploy AxoRouter on the host.

To upgrade an on-premises AxoConsole deployment, complete the following steps.

Log in to the host running AxoConsole.

Run the following command to update the version number in the /var/lib/rancher/k3s/server/manifests/axoflow.yaml file to 0.73.0.

sudo sed -i.old -E 's/^( *version: ).*/\10.73.0/' /var/lib/rancher/k3s/server/manifests/axoflow.yaml
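The sed command above rewrites every line that starts with optional spaces and `version:`, and keeps the previous file as axoflow.yaml.old. The following sketch wraps the same substitution so that you can reuse it for later versions and see the result. `set_chart_version` is a hypothetical helper; it only accepts plain versions like 0.73.0, and because sudo cannot call shell functions, run it from a root shell (for example, after sudo -i).

```shell
# Hypothetical wrapper around the sed command above: set every "version:" key
# in a HelmChart manifest to <new-version>, keep a .old backup, and print the
# resulting version lines so you can check the change.
# Usage: set_chart_version <manifest> <new-version>
set_chart_version() {
    manifest=$1
    new_version=$2
    # Accept only plain versions like 0.73.0, so sed sees no special characters.
    if ! printf '%s' "$new_version" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
        echo "error: '$new_version' is not a version like 0.73.0" >&2
        return 1
    fi
    # \1 keeps the captured indentation and "version: " prefix.
    sed -i.old -E "s/^( *version: ).*/\\1$new_version/" "$manifest" || return 1
    grep -E '^ *version: ' "$manifest"
}
```

For example: `set_chart_version /var/lib/rancher/k3s/server/manifests/axoflow.yaml 0.73.0`.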

Wait a few minutes until the pods are restarted. You can check their status by running:

kubectl get pods -A

If everything is working as expected, re-deploy your AxoRouter and Axoflow agent instances to upgrade them.

In case you experience problems during the upgrade, contact our support team.