This guide shows you how to install AxoConsole on a local, on-premises virtual machine using k3s and Helm. It also covers installing other components, like the NGINX ingress controller and cert-manager. Since this is a single-instance deployment, we don’t recommend using it in production environments. For additional steps and configurations needed in production environments, contact our support team.

At a high level, the deployment consists of the following steps:

- Preparing a virtual machine.

- Installing Kubernetes on the virtual machine.

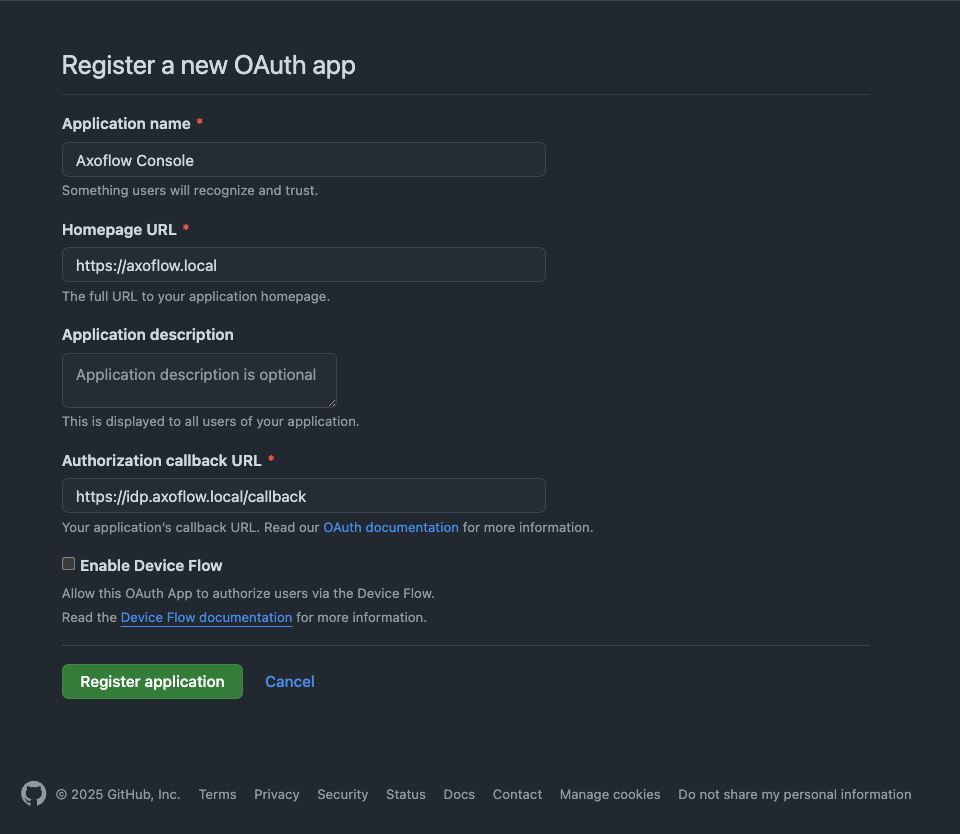

- Basic authentication with an admin user and password is configured by default. This guide also shows you how to configure other common authentication methods like LDAP, GitHub, and Google.

- "Before deploying AxoRouter" describes the steps you must complete on a host before deploying AxoRouter on it. These steps are specific to on-premises AxoConsole deployments, and aren’t needed when using the SaaS AxoConsole.

Prerequisites

To install AxoConsole, you’ll need the following:

- The URL for the AxoConsole Helm chart. You’ll receive this URL from our team. You can request it using the contact form.

  CAUTION: Don’t start the installation process until you’ve received the chart URL.

- A license key for AxoConsole. You’ll receive this from our team. You can request it using the contact form.

- A host that meets the system requirements.

- Network access set up for the host.

System requirements

Supported operating systems: Ubuntu 24.04, Red Hat Enterprise Linux 9, and compatible distributions (tested with AlmaLinux 9).

The virtual machine (VM) must have at least:

- 4 vCPUs (x86_64-based)

- 8 GB RAM

- 100 GB disk space

This setup can handle about 100 AxoRouter instances and 1000 data source hosts.

For reference, a real-life scenario that handles 100 AxoRouter instances and 3000 data source hosts with 30-day metric retention would need:

- 16 vCPUs (x86_64-based)

- 16 GB RAM

- 250 GB disk space

For details on sizing, contact our support team.

You’ll need access to a user account with sudo privileges.
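The following Bash sketch is one way to check a VM against these minimums before you start. The function names are hypothetical, the list of RHEL 9-compatible distribution IDs (`rhel`, `almalinux`, `rocky`) is an assumption, and `/proc/meminfo` and `df` report usable capacity, which is usually somewhat below the nominal size, so treat a near miss as a warning rather than a hard failure.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helpers for the AxoConsole VM (a sketch, not an official tool).

# meets_minimum CPUS MEM_GB DISK_GB
# Prints every value below the documented minimum and returns 1 if any check fails.
meets_minimum() {
  local cpus=$1 mem_gb=$2 disk_gb=$3 rc=0
  if (( cpus < 4 )); then echo "CPU: $cpus vCPUs (minimum 4)"; rc=1; fi
  if (( mem_gb < 8 )); then echo "RAM: $mem_gb GB (minimum 8)"; rc=1; fi
  if (( disk_gb < 100 )); then echo "Disk: $disk_gb GB (minimum 100)"; rc=1; fi
  return "$rc"
}

# supported_os ID VERSION_ID
# Succeeds for Ubuntu 24.04 and for major version 9 of the assumed RHEL-family IDs.
supported_os() {
  case "$1" in
    ubuntu) [[ $2 == 24.04 ]] ;;
    rhel|almalinux|rocky) [[ ${2%%.*} == 9 ]] ;;
    *) return 1 ;;
  esac
}

# check_this_host: collect the values from the running system and run all checks.
check_this_host() {
  local cpus mem_gb disk_gb rc=0
  cpus=$(nproc)
  # MemTotal is in KiB; round to the nearest GiB because the kernel reserves some RAM.
  mem_gb=$(awk '/^MemTotal:/ { printf "%d", ($2 + 524288) / 1048576 }' /proc/meminfo)
  # Size of the filesystem holding /var (k3s keeps its data under /var/lib/rancher).
  disk_gb=$(df -BG --output=size /var | tail -n 1 | tr -dc '0-9')
  [[ $(uname -m) == x86_64 ]] || { echo "Architecture: $(uname -m) (need x86_64)"; rc=1; }
  ( . /etc/os-release && supported_os "$ID" "$VERSION_ID" ) || { echo "Unsupported OS"; rc=1; }
  meets_minimum "$cpus" "$mem_gb" "$disk_gb" || rc=1
  return "$rc"
}

# Example (on the VM): check_this_host && echo "host meets the minimum requirements"
```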

Network access

The hosts must be able to access the following domains related to AxoConsole:

- When using AxoConsole SaaS:

  - `<your-tenant-id>.cloud.axoflow.io`: HTTPS traffic on TCP port 443, needed to download the binaries for Axoflow software (like Axolet and AxoRouter).
  - `kcp.<your-tenant-id>.cloud.axoflow.io`: HTTPS (mutual TLS) traffic on TCP port 443 for management traffic.
  - `telemetry.<your-tenant-id>.cloud.axoflow.io`: HTTPS (mutual TLS) traffic on TCP port 443, where Axolet sends the metrics of the host.
  - `us-docker.pkg.dev`: HTTPS traffic on TCP port 443, for pulling container images (AxoRouter only).

- When using an on-premises AxoConsole:

  - The following domains must point to the IP address of AxoConsole, so you can access Axoflow from your desktop and from the AxoRouter hosts:

    - `your-host.your-domain`: The main domain of your AxoConsole deployment.
    - `authenticate.your-host.your-domain`: A subdomain used for authentication.
    - `idp.your-host.your-domain`: A subdomain for the identity provider.

  - The AxoConsole host must have the following ports open:

    - Port 80 (HTTP)
    - Port 443 (HTTPS)

- When installing the Axoflow agent for Windows or Linux:

  - `github.com`: HTTPS traffic on TCP port 443, for downloading installer packages.
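To verify these requirements from a host, you can test whether a TCP connection can be opened to each endpoint. The sketch below assumes Bash with `/dev/tcp` support and the coreutils `timeout` command (both are normally available on Ubuntu and RHEL-family systems). It checks TCP reachability only, not TLS or mutual TLS, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# required_endpoints MODE VALUE
#   MODE=saas   VALUE=<your-tenant-id>   (endpoints from the SaaS list)
#   MODE=onprem VALUE=<base hostname>    (endpoints from the on-premises list)
# Prints one HOST:PORT per line.
required_endpoints() {
  case "$1" in
    saas)
      printf '%s:443\n' "$2.cloud.axoflow.io" "kcp.$2.cloud.axoflow.io" \
        "telemetry.$2.cloud.axoflow.io" "us-docker.pkg.dev"
      ;;
    onprem)
      printf '%s:443\n' "$2" "authenticate.$2" "idp.$2"
      printf '%s:80\n' "$2"
      ;;
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# tcp_reachable HOST PORT [SECONDS]: succeed if a TCP connection opens in time.
# HOST and PORT are passed as arguments to avoid quoting problems in bash -c.
tcp_reachable() {
  timeout "${3:-5}" bash -c ': > "/dev/tcp/$1/$2"' _ "$1" "$2" 2> /dev/null
}

# check_endpoints: read HOST:PORT lines on stdin and report each one.
check_endpoints() {
  local endpoint rc=0
  while IFS= read -r endpoint; do
    if tcp_reachable "${endpoint%:*}" "${endpoint##*:}"; then
      echo "OK    $endpoint"
    else
      echo "FAIL  $endpoint"
      rc=1
    fi
  done
  return "$rc"
}

# Examples:
# required_endpoints saas <your-tenant-id> | check_endpoints
# required_endpoints onprem your-host.your-domain | check_endpoints
# echo github.com:443 | check_endpoints
```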

Set up Kubernetes

Complete the following steps to install k3s and some dependencies on the virtual machine.

- Run `getenforce` to check the status of SELinux.

- Check the `/etc/selinux/config` file and make sure that its settings match the output of `getenforce` (for example, `SELINUX=disabled`). k3s supports SELinux, but the installation can fail if there is a mismatch between the configured and the actual settings.
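The two checks above can be combined into a small Bash function. This is a sketch that assumes the standard `SELINUX=` syntax of `/etc/selinux/config`, and the function name is hypothetical. It compares the lowercased output of `getenforce` with the last uncommented `SELINUX=` value in the file.

```shell
#!/usr/bin/env bash
# selinux_consistent RUNTIME_MODE CONFIG_FILE
# RUNTIME_MODE is the output of getenforce: Enforcing, Permissive, or Disabled.
# Returns 0 on a match, 1 on a mismatch, 2 if the file has no SELINUX= line.
selinux_consistent() {
  local runtime configured
  runtime=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  # Only lines that start with SELINUX= match (this skips comments and SELINUXTYPE=).
  configured=$(sed -n 's/^[[:space:]]*SELINUX=\([A-Za-z]*\).*/\1/p' "$2" | tail -n 1 |
    tr '[:upper:]' '[:lower:]')
  if [[ -z $configured ]]; then
    echo "no SELINUX= setting found in $2"
    return 2
  fi
  if [[ $runtime == "$configured" ]]; then
    echo "OK: getenforce and $2 both report '$runtime'"
  else
    echo "MISMATCH: getenforce reports '$runtime', but $2 sets SELINUX=$configured"
    return 1
  fi
}

# Example (on the VM): selinux_consistent "$(getenforce)" /etc/selinux/config
```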

- Install k3s. Run the following command:

```shell
curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC="--disable traefik --disable metrics-server" K3S_KUBECONFIG_MODE="644" sh -s -
```

- Check that the k3s service is running:

```shell
systemctl status k3s
```
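`systemctl status` only shows that the service is up. To confirm that the Kubernetes node itself has become Ready, you can filter the output of `kubectl get nodes --no-headers` (k3s installs a `kubectl` symlink, and `K3S_KUBECONFIG_MODE="644"` makes its kubeconfig readable without sudo). The helper below is a hypothetical sketch that assumes the default column order (NAME, STATUS, ROLES, AGE, VERSION).

```shell
#!/usr/bin/env bash
# all_nodes_ready: read `kubectl get nodes --no-headers` output on stdin.
# Succeeds only if at least one node is listed and every STATUS (column 2) is Ready.
all_nodes_ready() {
  awk '
    { seen = 1 }
    $2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
    END { exit (seen && !bad) ? 0 : 1 }
  '
}

# Example: kubectl get nodes --no-headers | all_nodes_ready && echo "k3s node is Ready"
```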


- Install the NGINX ingress controller.

```shell
NGINX_VERSION="v4.11.3"
sudo tee /var/lib/rancher/k3s/server/manifests/ingress-nginx.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: ingress-nginx
  namespace: kube-system
spec:
  createNamespace: true
  chart: ingress-nginx
  repo: https://kubernetes.github.io/ingress-nginx
  version: $NGINX_VERSION
  targetNamespace: ingress-nginx
  valuesContent: |-
    controller:
      extraArgs:
        enable-ssl-passthrough: true
      hostNetwork: true
      hostPort:
        enabled: true
      service:
        enabled: false
      admissionWebhooks:
        enabled: false
      updateStrategy:
        strategy:
          type: Recreate
EOF
```
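Because this configuration runs the controller with `hostNetwork` and `hostPort` enabled, it listens on ports 80 and 443 of the VM directly, and another service already bound to these ports would keep it from starting. The hypothetical helper below reads the output of `ss -ltnH` (listening TCP sockets, no header) and reports whether something listens on a given port. It assumes that the local address is in the fourth column, as in current iproute2 versions.

```shell
#!/usr/bin/env bash
# port_listening PORT: read `ss -ltnH` output on stdin and succeed if any
# listening socket's local address (column 4) ends in :PORT (IPv4 or IPv6).
port_listening() {
  awk -v p="$1" '$4 ~ (":" p "$") { found = 1 } END { exit !found }'
}

# report_ingress_ports: show the state of ports 80 and 443 on this host.
report_ingress_ports() {
  local port listeners
  listeners=$(ss -ltnH)
  for port in 80 443; do
    if printf '%s\n' "$listeners" | port_listening "$port"; then
      echo "port $port: in use"
    else
      echo "port $port: free"
    fi
  done
}

# Example: before the install both ports should be free; once the
# ingress-nginx controller pod is running, both should be in use.
# report_ingress_ports
```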

- Install the cert-manager controller. Run the following command:

```shell
CM_VERSION="1.16.2"
sudo tee /var/lib/rancher/k3s/server/manifests/cert-manager.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: cert-manager
  namespace: kube-system
spec:
  createNamespace: true
  chart: cert-manager
  version: $CM_VERSION
  repo: https://charts.jetstack.io
  targetNamespace: cert-manager
  valuesContent: |-
    crds:
      enabled: true
    enableCertificateOwnerRef: true
EOF
```

- Wait for the Helm chart to be installed:

```shell
while ! kubectl get job helm-install-cert-manager -n kube-system; do
  sleep 1
done
kubectl wait --for=condition=complete job/helm-install-cert-manager -n kube-system --timeout=300s
```
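The `while` loop above waits indefinitely if the job never appears (for example, if the manifest has a typo). A bounded variant is sketched below. `retry_until` is a hypothetical helper that relies on Bash’s built-in `SECONDS` counter, and the 300-second limit in the example simply mirrors the `--timeout` of the `kubectl wait` command.

```shell
#!/usr/bin/env bash
# retry_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Runs COMMAND about once per second until it succeeds or TIMEOUT_SECONDS pass.
retry_until() {
  local timeout=$1
  shift
  local deadline=$(( SECONDS + timeout ))
  until "$@" > /dev/null 2>&1; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep 1
  done
}

# Example:
# retry_until 300 kubectl get job helm-install-cert-manager -n kube-system &&
#   kubectl wait --for=condition=complete job/helm-install-cert-manager \
#     -n kube-system --timeout=300s
```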


Install the AxoConsole

Complete the following steps to deploy the AxoConsole Helm chart.

- Set the following environment variables as needed for your deployment.

- The domain name for your AxoConsole deployment. This must point to the IP address of the VM you’re installing AxoConsole on.

```shell
BASE_HOSTNAME=your-host.your-domain
```

Note: To use Google authentication, AxoConsole must be deployed on a publicly accessible domain name (`$BASE_HOSTNAME` must end with a valid top-level domain, for example, `.com` or `.io`).

- The license key of AxoConsole. You’ll receive this from our team. You can request it using the contact form.

```shell
LICENSE_KEY="your-license-key"
```

- The version number of AxoConsole you want to deploy.

```shell
VERSION="0.73.0"
```

- The internal IP address of the virtual machine. The authentication service needs it to communicate with the identity provider without relying on DNS.

```shell
VM_IP_ADDRESS=$(hostname -I | cut -d' ' -f1)
```

Check that this command returned the correct IP address. It might not be accurate if the host has multiple IP addresses.

- The URL of the Axoflow Helm chart. You’ll receive this URL from our team. You can request it using the contact form.

```shell
AXOFLOW_HELM_CHART_URL="<the-chart-URL-received-from-Axoflow>"
```
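Because the heredocs in the next step substitute these variables without any checks, an empty variable or a leftover example value ends up in the manifest unnoticed. The following hypothetical Bash helpers are a sketch of a pre-check. The placeholder strings they look for are the example values used in this guide, and the IPv4 check validates only the format, not whether the address belongs to the VM.

```shell
#!/usr/bin/env bash
# require_vars NAME...: fail (and name the culprits) if any variable is unset or empty.
require_vars() {
  local name rc=0
  for name in "$@"; do
    if [[ -z ${!name:-} ]]; then
      echo "missing: $name" >&2
      rc=1
    fi
  done
  return "$rc"
}

# is_ipv4 ADDRESS: succeed for a dotted-quad address with octets 0-255.
is_ipv4() {
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$' octet
  local IFS=.
  local -a parts
  [[ $1 =~ $re ]] || return 1
  read -r -a parts <<< "$1"
  for octet in "${parts[@]}"; do
    (( 10#$octet <= 255 )) || return 1
  done
}

# check_axoconsole_vars: run the checks on the variables set in this step.
check_axoconsole_vars() {
  local rc=0
  require_vars BASE_HOSTNAME LICENSE_KEY VERSION VM_IP_ADDRESS AXOFLOW_HELM_CHART_URL || return 1
  if [[ $BASE_HOSTNAME == your-host.your-domain ]]; then
    echo "BASE_HOSTNAME still has the example value" >&2; rc=1
  fi
  if [[ $LICENSE_KEY == your-license-key ]]; then
    echo "LICENSE_KEY still has the example value" >&2; rc=1
  fi
  if [[ $AXOFLOW_HELM_CHART_URL == *'<'* ]]; then
    echo "AXOFLOW_HELM_CHART_URL still has the placeholder" >&2; rc=1
  fi
  if ! is_ipv4 "$VM_IP_ADDRESS"; then
    echo "VM_IP_ADDRESS is not an IPv4 address: $VM_IP_ADDRESS" >&2; rc=1
  fi
  return "$rc"
}

# Example: check_axoconsole_vars && echo "variables look OK"
```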


- Install AxoConsole. You can either let the chart create a password, or you can set an email and password manually.

- Add password automatically: The following configuration file enables automatic password creation. If this basic setup is working, you can configure another authentication provider.

```shell
sudo tee /var/lib/rancher/k3s/server/manifests/axoflow.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: axoflow
  namespace: kube-system
spec:
  createNamespace: true
  targetNamespace: axoflow
  version: $VERSION
  chart: $AXOFLOW_HELM_CHART_URL
  failurePolicy: abort
  valuesContent: |-
    baseHostName: $BASE_HOSTNAME
    licenseKey: $LICENSE_KEY
    dex:
      localIP: $VM_IP_ADDRESS
EOF
```

To retrieve the autogenerated email and password, use the following command:

```shell
kubectl -n axoflow get secret axoflow-admin -o go-template='Email: {{ .data.email | base64decode }}{{ printf "\n" }}Password: {{ .data.password | base64decode }}{{ printf "\n" }}'
```

Record the email and password; you’ll need them to log in to AxoConsole.

- Set password manually: If you’d like to set the email and password manually, create the secret that stores the required information, and set a unique identifier for the administrator user using `uuidgen`. Note that:

  - On Ubuntu, you must first install the `uuid-runtime` package by running `sudo apt install uuid-runtime`.
  - To generate the bcrypt hash of the administrator password, you can use the `htpasswd` tool, which is part of the `httpd-tools` package on Red Hat (install it using `sudo dnf install httpd-tools`) and of the `apache2-utils` package on Ubuntu (install it using `sudo apt install apache2-utils`).

```shell
ADMIN_USERNAME="<your admin username>"
ADMIN_EMAIL="<your admin email>"
ADMIN_PASSWORD="<your admin password>"
ADMIN_UUID=$(uuidgen)
ADMIN_PASSWORD_HASH=$(echo "$ADMIN_PASSWORD" | htpasswd -BinC 10 "$ADMIN_USERNAME" | cut -d: -f2)
sudo tee /var/lib/rancher/k3s/server/manifests/axoflow.yaml > /dev/null <<EOF
---
apiVersion: helm.cattle.io/v1
kind: HelmChart
metadata:
  name: axoflow
  namespace: kube-system
spec:
  createNamespace: true
  targetNamespace: axoflow
  version: $VERSION
  chart: $AXOFLOW_HELM_CHART_URL
  failurePolicy: abort
  valuesContent: |-
    baseHostName: $BASE_HOSTNAME
    licenseKey: $LICENSE_KEY
    pomerium:
      policy:
        emails:
          - $ADMIN_EMAIL
    dex:
      localIP: $VM_IP_ADDRESS
    defaultCredential:
      create: false
EOF
kubectl -n axoflow create secret generic axoflow-admin \
  --from-literal=username="$ADMIN_USERNAME" \
  --from-literal=password_hash="$ADMIN_PASSWORD_HASH" \
  --from-literal=email="$ADMIN_EMAIL" \
  --from-literal=uuid="$ADMIN_UUID"
```
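If `htpasswd` or `uuidgen` is missing, the command substitutions above silently produce empty strings, and the secret is created with an empty hash or identifier. The following hypothetical helpers sketch a sanity check you can run on the variables before creating the secret. They check only the format: a bcrypt hash with cost 10 (as requested by `-C 10`) and the standard textual UUID layout that `uuidgen` prints.

```shell
#!/usr/bin/env bash
# is_bcrypt_cost10 HASH: succeed if HASH has the shape of a cost-10 bcrypt hash
# ($2a$, $2b$, or $2y$ prefix, then 53 characters of salt and digest; 60 in total).
is_bcrypt_cost10() {
  local re='^\$2[aby]\$10\$[./A-Za-z0-9]{53}$'
  [[ $1 =~ $re ]]
}

# is_uuid VALUE: succeed for the 8-4-4-4-12 hexadecimal layout printed by uuidgen.
is_uuid() {
  local re='^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
  [[ $1 =~ $re ]]
}

# Example, after computing the variables and before kubectl create secret:
# is_bcrypt_cost10 "$ADMIN_PASSWORD_HASH" || echo "ADMIN_PASSWORD_HASH looks wrong" >&2
# is_uuid "$ADMIN_UUID" || echo "ADMIN_UUID looks wrong" >&2
```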


- Wait a few minutes until everything is installed. Check that every component has been installed and is running:

```shell
kubectl get pods -A
```

The output should be similar to:

```text
NAMESPACE       NAME                                       READY   STATUS      RESTARTS       AGE
axoflow         axolet-dist-55ccbc4759-q7l7n               1/1     Running     0              2d3h
axoflow         chalco-6cfc74b4fb-mwbbz                    1/1     Running     0              2d3h
axoflow         configure-kcp-1-x6js6                      0/1     Completed   2              2d3h
axoflow         configure-kcp-cert-manager-1-wvhhp         0/1     Completed   1              2d3h
axoflow         configure-kcp-token-1-xggg7                0/1     Completed   1              2d3h
axoflow         controller-manager-5ff8d5ffd-f6xtq         1/1     Running     0              2d3h
axoflow         dex-5b9d7b88b-bv82d                        1/1     Running     0              2d3h
axoflow         kcp-0                                      1/1     Running     1 (2d3h ago)   2d3h
axoflow         kcp-cert-manager-0                         1/1     Running     0              2d3h
axoflow         pomerium-56b8b9b6b9-vt4t8                  1/1     Running     0              2d3h
axoflow         prometheus-server-0                        2/2     Running     0              2d3h
axoflow         telemetryproxy-65f77dcf66-4xr6b            1/1     Running     0              2d3h
axoflow         ui-6dc7bdb4c5-frn2p                        2/2     Running     0              2d3h
cert-manager    cert-manager-74c755695c-5fs6v              1/1     Running     5 (144m ago)   2d3h
cert-manager    cert-manager-cainjector-dcc5966bc-9kn4s    1/1     Running     0              2d3h
cert-manager    cert-manager-webhook-dfb76c7bd-z9kqr       1/1     Running     0              2d3h
ingress-nginx   ingress-nginx-controller-bcc7fb5bd-n5htp   1/1     Running     0              2d3h
kube-system     coredns-ccb96694c-fhk8f                    1/1     Running     0              2d3h
kube-system     helm-install-axoflow-b9crk                 0/1     Completed   0              2d3h
kube-system     helm-install-cert-manager-88lt7            0/1     Completed   0              2d3h
kube-system     helm-install-ingress-nginx-qvkhf           0/1     Completed   0              2d3h
kube-system     local-path-provisioner-5cf85fd84d-xdlnr    1/1     Running     0              2d3h
```

For details on what the different services do, see the service reference.
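Instead of comparing the list by eye, you can filter it. The hypothetical helper below is a sketch that reads `kubectl get pods -A --no-headers` output, assumes the column order shown above (NAMESPACE, NAME, READY, STATUS, and so on), ignores `Completed` job pods, and prints every other pod that isn’t `Running` with all of its containers ready.

```shell
#!/usr/bin/env bash
# pods_healthy: read `kubectl get pods -A --no-headers` output on stdin.
# Prints "NAMESPACE/NAME STATUS READY" for each problem pod and returns 1 if any
# were found (or if the input was empty).
pods_healthy() {
  awk '
    { seen = 1 }
    $4 == "Completed" { next }
    {
      split($3, ready, "/")
      if ($4 != "Running" || ready[1] != ready[2]) {
        print $1 "/" $2 " " $4 " " $3
        bad = 1
      }
    }
    END { exit (seen && !bad) ? 0 : 1 }
  '
}

# Example: kubectl get pods -A --no-headers | pods_healthy && echo "all pods are up"
```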

Log in to AxoConsole

- If the domain name of AxoConsole can’t be resolved from your desktop, add it to the `/etc/hosts` file in the following format. Use an IP address of AxoConsole that can be accessed from your desktop.

```text
<AXOFLOW-CONSOLE-IP-ADDRESS> <your-host.your-domain> idp.<your-host.your-domain> authenticate.<your-host.your-domain>
```
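On a Linux or macOS desktop, the entry can be generated and added with a couple of shell commands. The sketch below is hypothetical: `hosts_entry` prints the line in the format above, and `hosts_has_entry` checks (ignoring commented lines) whether the hostname is already mapped, so you don’t add a duplicate. Writing to `/etc/hosts` needs root privileges.

```shell
#!/usr/bin/env bash
# hosts_entry IP BASE_HOSTNAME: print the /etc/hosts line for AxoConsole.
hosts_entry() {
  printf '%s %s idp.%s authenticate.%s\n' "$1" "$2" "$2" "$2"
}

# hosts_has_entry HOSTNAME [FILE]: succeed if HOSTNAME already appears as a
# name on an uncommented line of FILE (default: /etc/hosts).
hosts_has_entry() {
  awk -v h="$1" '
    $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
    END { exit !found }
  ' "${2:-/etc/hosts}"
}

# Example (replace the IP address and hostname with your own):
# hosts_has_entry your-host.your-domain ||
#   hosts_entry 192.0.2.10 your-host.your-domain | sudo tee -a /etc/hosts > /dev/null
```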

- Open the `https://<your-host.your-domain>` URL in your browser.

- The on-premises deployment of AxoConsole uses a self-signed certificate. If your browser warns you about the related risks, accept the certificate.

- Use the email address and password you got or set in the installation step to log in to AxoConsole.

Prepare AxoRouter hosts

AxoConsole service reference

| Service name | Namespace | Purpose | Function |
|---|---|---|---|
| KCP | axoflow | Backend API | Kubernetes-like service with a built-in database that manages all the settings of the system |
| Chalco | axoflow | Frontend API | Serves the API calls of the UI and implements the business logic for the UI |
| Controller-Manager | axoflow | Backend service | Reacts to state changes in the business entities and manages the business logic of the backend |
| Telemetry Proxy | axoflow | Backend API | Receives telemetry from the agents |
| UI | axoflow | Dashboard | The frontend of AxoConsole |
| Prometheus | axoflow | Backend API/service | Monitoring component that stores time-series data, provides a query API, and manages alerting rules |
| Alertmanager | axoflow | Backend API/service | Monitoring component that sends alerts based on the alerting rules |
| Dex | axoflow | Identity connector/proxy | Lets customers use their own identity provider (Google, LDAP, and so on) |
| Pomerium | axoflow | HTTP proxy | Provides policy-based access to the frontend API (Chalco) |
| Axolet Dist | axoflow | Backend API | Static artifact store that contains the agent binaries |
| Cert Manager (kcp) | axoflow | Automated certificate management tool | Manages certificates for the agents |
| Cert Manager | cert-manager | Automated certificate management tool | Manages certificates for Axoflow components (backend API, HTTP proxy) |
| NGINX Ingress Controller | ingress-nginx | HTTP proxy | Routes HTTP traffic between the frontend and backend APIs |